Yesterday, in Gazing Back to the Future, I looked at how good it would be for PCs to add gaze recognition to supplement the 40-plus-year-old paradigm of GUI, keyboard, mouse and monitor, making PC use more intuitive and efficient. Today, I want to dig into this further. (Note that both articles count as only one towards your free allowance.)

One of the factors that has been a challenge and that I spoke of yesterday is the limit on the size of the display that can be supported. That’s not so good for the large desktop monitors or multiple monitors that have been adopted over recent years. When I first talked to Tobii about that, some years ago, the firm suggested that this could be sorted out by simply adding more sensors, but in its FAQ, the firm says that this is not possible. It also highlights that a single tracker can only support multiple or larger screens with lower accuracy.

Gaze is not that accurate. It cannot replace a mouse or pen for accuracy and precision, but it can enhance a mouse by taking over the coarse movement of a cursor or pointer. Early mice were very frustrating: either they were too precise, so that it took too much movement to get the cursor across the whole display, or they were too coarse, which made fine work too difficult. The answer was ‘ballistic mice’, which were coarse when the mouse moved fast and became more sensitive when it moved slowly. (I’m sure things have got even more sophisticated, but I haven’t been watching mouse development recently!)
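The ballistic behaviour described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular mouse driver works: the function names, the linear ramp and the gain and threshold values are all assumptions made for the example.

```python
def ballistic_gain(speed, low_gain=0.5, high_gain=3.0, threshold=200.0):
    """Return a cursor gain for a given mouse speed (counts per second).

    Assumed model: the gain ramps linearly from low_gain when the mouse is
    nearly still to high_gain at the threshold speed, and stays there.
    """
    t = min(max(speed / threshold, 0.0), 1.0)  # clamp to the range 0..1
    return low_gain + t * (high_gain - low_gain)


def cursor_delta(mouse_delta, dt, **gain_settings):
    """Convert a raw mouse movement (counts) over dt seconds to cursor pixels."""
    speed = abs(mouse_delta) / dt
    return mouse_delta * ballistic_gain(speed, **gain_settings)


# A fast flick covers a lot of screen; a slow nudge gives fine control.
fast = cursor_delta(10, 0.01)  # 1000 counts/s, so the high gain applies
slow = cursor_delta(1, 0.01)   # 100 counts/s, so the gain is mid-ramp
```

With these assumed settings, the same hand movement travels further on screen when it is made quickly, which is the compromise between coarse and fine control that the early mice lacked.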

Gaze can work well to move the cursor to the approximate area of interest, after which a very precise mouse can be used for detailed interaction. That would meet what Jon wanted: just move the cursor to where I’m looking. (Windows, of course, can put a circle around the mouse pointer using the control key, a feature I use. I even used it with young family members to play ‘where’s the cursor’ on my displays!)

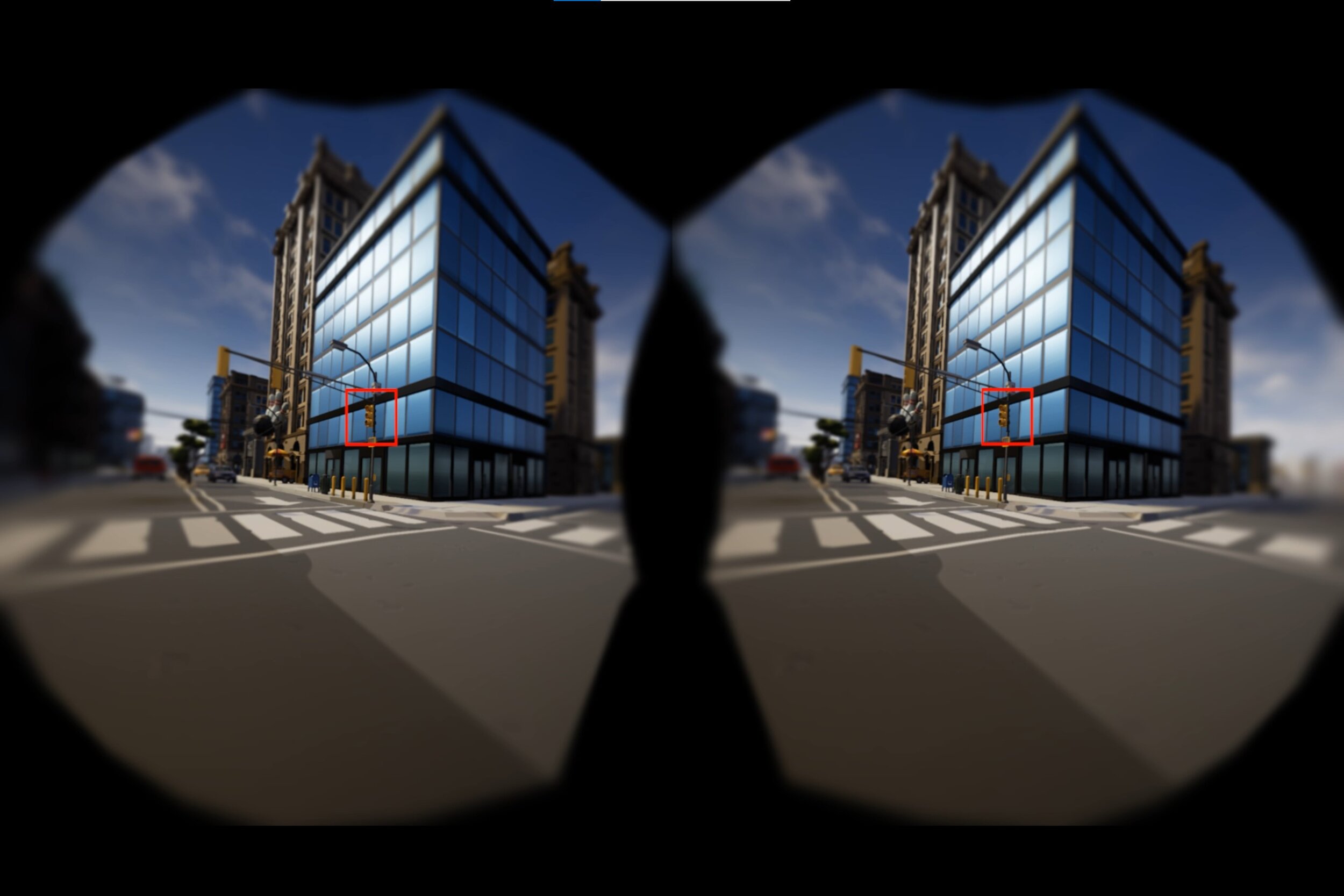
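The split between coarse gaze positioning and fine mouse control might look something like the following Python sketch. It is an assumption about how such a scheme could be built, not a description of Tobii's or Windows' behaviour; the function name and the `warp_radius` value are hypothetical.

```python
import math


def next_cursor(cursor, gaze, mouse_delta, warp_radius=150.0):
    """Return the new cursor position in pixels.

    cursor, gaze: (x, y) positions in pixels.
    mouse_delta:  (dx, dy) movement reported by the mouse this frame.
    warp_radius:  assumed distance beyond which the cursor jumps to the gaze
                  point; inside it, gaze is ignored so that tracker noise
                  does not disturb fine work.
    """
    mouse_is_moving = mouse_delta != (0, 0)
    gaze_is_far = math.dist(cursor, gaze) > warp_radius
    if mouse_is_moving and gaze_is_far:
        # Coarse step: start from where the user is looking.
        cursor = gaze
    # Fine step: apply the mouse movement as usual.
    return (cursor[0] + mouse_delta[0], cursor[1] + mouse_delta[1])
```

Only warping when the mouse actually moves is a deliberate choice in this sketch: the cursor stays put while the user simply reads or looks around the screen.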

Tobii has had some success in getting support for gaze in gaming where, typically, the gaze data is simply used to move a viewport, effectively emulating head movement in games such as first-person shooters (FPS). Of course, this kind of motion can be coarser than in typical IT applications. As Tobii acknowledges, because the movements only need to be approximate, bigger displays can be used. I’m not a gamer, but in demos, I did like the idea of being able to look around in an FPS, leaving the mouse free to be used for aiming weapons or for movement.

Foveation

The other big topic with gaze is the support of foveation technology. I wrote last week about foveated displays (Synchronicity in Foveation – It’s a Kind of Magic) and I’ve been writing about foveated rendering (creating images with high resolution in one area, with lower resolution everywhere else) for a long time. Tobii developed its technology to integrate with Nvidia’s rendering technology, allowing optimisation. Nvidia gave a talk on the latest version of this integration and wrote about it in a blog earlier this year.
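To make the idea of foveated rendering concrete, here is a minimal Python sketch that picks a resolution scale for a pixel according to how far it is from the gaze point. It is not Tobii's or Nvidia's method: the three-zone scheme, the zone sizes and the fixed `pixels_per_degree` figure (a flat-screen approximation) are all assumptions for illustration.

```python
import math


def resolution_scale(pixel, gaze, pixels_per_degree=40.0,
                     inner_deg=5.0, outer_deg=15.0):
    """Return the fraction of full resolution to render at for a pixel.

    pixel, gaze: (x, y) positions in pixels.
    The angular distance from the gaze point is approximated by dividing the
    pixel distance by an assumed, constant pixels-per-degree value.
    """
    eccentricity = math.dist(pixel, gaze) / pixels_per_degree
    if eccentricity <= inner_deg:
        return 1.0   # foveal zone: full resolution
    if eccentricity <= outer_deg:
        return 0.5   # middle zone: half resolution
    return 0.25      # periphery: quarter resolution
```

A real renderer would blend between the zones and would need the gaze data with very low latency, so that the high-resolution area keeps up with the eye.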

Just a few weeks ago, Tobii introduced a lightweight foveation codec for AR/VR/MR that is said to allow a 5:1 reduction in content by pre-processing video streams, using gaze data to preserve quality where the eye is looking. A 5:1 compression ratio is a useful reduction in bandwidth, although the processing has to be done very quickly, as low latency is absolutely critical for this application. A reduction in video bandwidth helps wireless technologies to work well.
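As a rough feel for what 5:1 means for a wireless link, the arithmetic can be worked through in Python. The headset figures below (2000 x 2000 pixels per eye, 90 Hz, 24 bits per pixel) are hypothetical numbers chosen for the example, not the specification of any product, and a real system would typically apply further video compression as well.

```python
def stream_bitrate_gbps(width, height, eyes, fps, bits_per_pixel):
    """Uncompressed video bitrate in gigabits per second."""
    return width * height * eyes * fps * bits_per_pixel / 1e9


def after_reduction(bitrate, ratio=5.0):
    """Bitrate left after a ratio:1 reduction (5:1 is the claimed figure)."""
    return bitrate / ratio


# Hypothetical headset: 2000 x 2000 per eye, two eyes, 90 Hz, 24-bit colour.
raw = stream_bitrate_gbps(2000, 2000, 2, 90, 24)
reduced = after_reduction(raw)
print(f"raw: {raw:.2f} Gbit/s, after 5:1 reduction: {reduced:.2f} Gbit/s")
```

Under these assumptions, the stream drops from roughly 17 Gbit/s to around 3.5 Gbit/s before any conventional compression is applied.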

Of course, gaze technology can do more than simply see where the user is looking. Among other things, it can look at pupil size or, more usefully, changes in pupil size. This kind of physiological reaction to an experience or to content can help to signal the emotional response or the cognitive load of the user. Nearly a year ago, I wrote a Display Daily about the work that HP is doing with its Reverb G2 (HP Extends XR Beyond the Visuals) to track facial expressions and pupil size. That may, in time, allow tracking of user emotions, but it can also be used to improve training and procedures by managing cognitive load.

Gaze is also an important part of developing different levels of autonomy in vehicles. Watching the driver’s eyes is a key way of understanding the level of attention that the driver is applying to the road, but that’s another whole topic!

Using a PC

Finally, I have often wondered why we use our feet as well as our hands when driving a car (or playing an organ, piano, harp or electric guitar), so why do we not routinely add foot control to our PCs as well*? I want to drive my PC as efficiently as I do my car so give me gaze, foot control (and, probably, voice**) in addition to the mouse and keyboard to optimise my PC experience. That might drive the demand for PCs up a notch. (BR)

* Last time I wrote about this, I bought a USB pedal for my PC to allow something to be controlled by my feet, but it’s still sitting around, somewhere. I must dig it out! That highlights that this kind of interaction needs standardisation and a ‘grammar’ to really develop well.

** I often use Alexa to convert currencies or units as it’s more convenient to ask Alexa for an answer, rather than taking my hands away from the keyboard. I’m sure there’s more that could be done with Cortana. Perhaps I should dig into it – at the moment I have it disabled, it seems.