Synchronicity has been defined as “describing circumstances that appear meaningfully related yet lack a causal connection”. Yesterday, I had a moment of synchronicity on one of my favourite topics – foveation – the optimisation of displays to match human vision. And the link was Avegant.

Yesterday, I got a press release from Ed Tang, CEO of Avegant, about its new LED light sources for AR applications. That was interesting, but later, while digging around for a topic for my Display today, I spotted a reference on Karl Guttag’s blog to a talk that Tang gave at SPIE last year. He recommended it and that’s good enough for me. It turned out to be a really interesting and well-presented talk. If you have enough time, it’s worth a look. If not, I’ll summarise it for you.

His talk was called ‘Foveation is Coming’, and that caught my interest straight away, as foveation has been a hot topic for me for years. We’ve published many articles on it since 2014, but let’s take up Tang’s talk.

Everybody wants better image quality and a wider field of view with lower power and smaller form factors, but it’s a kind of ‘zero-sum’ game. There doesn’t seem to be a ‘sweet spot’ for a solution, he said.

Looking at the device displays on the market today, your watch, your smartphone and your PC all are, or can be, ‘retina resolution’, say 60 pixels per degree (ppd). However, AR devices with a wide FOV do not have that level of resolution, and those with retina resolution do not have a wide FOV (well, I might argue the case for 49″ curved monitors if they have enough vertical resolution!).

Physics Is in Our Way

Tang said that ‘Physics is in our way’. If you want to put a 60° x 60° display in glasses, it has to be small, say 10mm per side. At 60 ppd, that means 3600 x 3600 resolution. In turn, that mandates tiny pixels: the pitch is under 3 µm (10 mm / 3600 ≈ 2.8 µm), even if you are generous with the fill factor. Making the chip is difficult, and there are other limitations in optics, power and so on. Just driving that level of resolution probably needs multiple big GPUs, which is not good for power consumption.
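The arithmetic behind these figures is easy to check. This minimal sketch assumes only the numbers given above (a 60° x 60° field, 60 ppd and a 10 mm square panel) and computes the pixels per side, the megapixels per eye and the centre-to-centre pixel pitch. It ignores fill factor, so the emitting area of each pixel would be smaller than the pitch it reports.

```python
# Back-of-envelope check of the "physics" numbers in the talk.
# Assumptions (from the text): 60 x 60 degree field, 60 pixels per degree,
# 10 mm square display. The pitch is centre-to-centre and ignores fill factor.

def pixels_needed(fov_deg: float, ppd: float) -> int:
    """Pixels along one axis to cover fov_deg at ppd pixels per degree."""
    return round(fov_deg * ppd)

def pixel_pitch_um(display_mm: float, pixels: int) -> float:
    """Centre-to-centre pixel spacing in micrometres."""
    return display_mm * 1000.0 / pixels

side = pixels_needed(60, 60)          # pixels per side
total_mp = side * side / 1e6          # megapixels per eye
pitch = pixel_pitch_um(10, side)      # micrometres

print(f"{side} x {side} = {total_mp:.2f} MP per eye, pitch {pitch:.2f} um")
```

Running it prints 3600 x 3600 = 12.96 MP per eye with a pitch of about 2.78 µm, before any allowance for the fill factor.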

So What’s the Resolution?

The key is to optimise the display for human vision, and that’s where foveation comes in. Tang was careful to distinguish between foveated rendering, which produces the image, and foveated displays, which show it. Foveated rendering only reduces the processing power needed to drive the display. Foveated displays, by contrast, actually have more and better pixels where the fovea is looking.

What we think we see is not what our eyes are seeing. For example, the eye sees your nose and eyelashes. There is also your ‘blind spot’, and blood vessels and floaters move around in your eye. Yet your brain sees clear images.

What the eye sees is not what the eye perceives


What if you could use a projector to move a high resolution image around the display according to where you are looking, optimising the resolution for the fovea? If you can do that, the number of pixels needed to give a great image at the fovea is just 1/10 or so of the number needed for high resolution everywhere. A 10X reduction means bringing the pixel count down from around 30 megapixels to 3 megapixels.
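To see where a roughly 10X saving can come from, here is a back-of-envelope pixel budget. The layout is an assumption for illustration, not Avegant’s design: a 90° x 90° field, a hypothetical 20° x 20° foveal inset at 60 ppd and a hypothetical 15 ppd periphery covering the whole field.

```python
# Illustrative pixel budget: uniform "retina" resolution vs a foveated layout.
# All layout numbers are hypothetical choices, not Avegant's design.

def pixel_count(width_deg: float, height_deg: float, ppd: float) -> int:
    """Total pixels for a rectangular field at a uniform pixels-per-degree."""
    return round(width_deg * ppd) * round(height_deg * ppd)

FIELD_DEG = 90.0        # hypothetical full field of view (square)
RETINA_PPD = 60.0       # "retina resolution" from the text
INSET_DEG = 20.0        # hypothetical foveal inset size
PERIPHERY_PPD = 15.0    # hypothetical peripheral resolution

uniform = pixel_count(FIELD_DEG, FIELD_DEG, RETINA_PPD)
# The periphery is counted over the whole field, including the area
# behind the inset, so this estimate is slightly pessimistic.
foveated = (pixel_count(INSET_DEG, INSET_DEG, RETINA_PPD)
            + pixel_count(FIELD_DEG, FIELD_DEG, PERIPHERY_PPD))

print(f"uniform:   {uniform / 1e6:.2f} MP")
print(f"foveated:  {foveated / 1e6:.2f} MP")
print(f"reduction: {uniform / foveated:.1f}x")
```

Uniform 60 ppd over this field comes to about 29 MP, in line with the 30 MP figure, while the foveated layout needs about 3.3 MP, a reduction of roughly 9X. A smaller inset or a lower peripheral ppd can push the saving past 10X.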

How do you do this? First, you need a deeper understanding of human vision and better eye tracking. Second, you need an active optical system that can steer the image and is designed for foveation. You also need sophisticated, yet very fast, software to drive the system.

Human Vision & Saccades

The eyes move in two ways. Although they can move in ‘smooth pursuit’, the usual way of looking is with ‘saccades’, sharp movements (3-5 times per second) that can shift the gaze at up to 300°/second. That’s very fast and hard to keep up with. However, you can take advantage of a phenomenon called saccadic masking. With such sharp movements, you might expect to see some blurring, as you would with a camera moving so fast, but your vision starts to shut down before the eye moves and ramps back up after the move. A lot of work is needed to be sure when that is happening, but ‘if you do it right’, you win time to do something.
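Exploiting masking starts with knowing that a saccade is under way. The simplest textbook approach is velocity thresholding (often called I-VT): flag any sample interval where the angular speed of the gaze exceeds a threshold. The sketch below is that generic technique, not Avegant’s detector; the 1 kHz sample rate, the 30°/s threshold and the synthetic 1.2° saccade at about 300°/s are all assumptions.

```python
# Minimal velocity-threshold (I-VT) saccade detector: a generic technique,
# not Avegant's method. Assumes 1-D gaze angles in degrees at a fixed rate.

def detect_saccades(gaze_deg: list[float], rate_hz: float,
                    threshold_deg_s: float = 30.0) -> list[bool]:
    """Flag each inter-sample interval whose angular speed exceeds the threshold."""
    flags = []
    for prev, cur in zip(gaze_deg, gaze_deg[1:]):
        speed = abs(cur - prev) * rate_hz   # degrees per second
        flags.append(speed > threshold_deg_s)
    return flags

# Synthetic 1 kHz trace: fixation, a ~300 deg/s jump of 1.2 degrees, fixation.
samples = [0.0, 0.0, 0.01, 0.3, 0.6, 0.9, 1.2, 1.2, 1.21]
flags = detect_saccades(samples, rate_hz=1000.0)
print(flags)
```

The small 0.01° drifts stay well below the threshold, while the four intervals of the jump are flagged. Real trackers work in two dimensions, filter noise and tune the threshold per device.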

Tang gave a great way to see this effect. Look with both eyes into a mirror. Look at one eye, then the other. You won’t see your eyes move. (That’s a great demonstration and the key perception effect for most ‘sleight of hand’ magicians, I suspect – Editor). On the other hand, if you do it in front of the selfie camera on your smartphone, you can see your eyes move, because of the latency in the system.

Eye tracking based on cameras has become a standard technology in devices, but it’s relatively slow and very power hungry (a factor, as I understand it, in why it hasn’t become a standard feature in, for example, notebook PCs, as it would reduce battery life). What’s the solution?

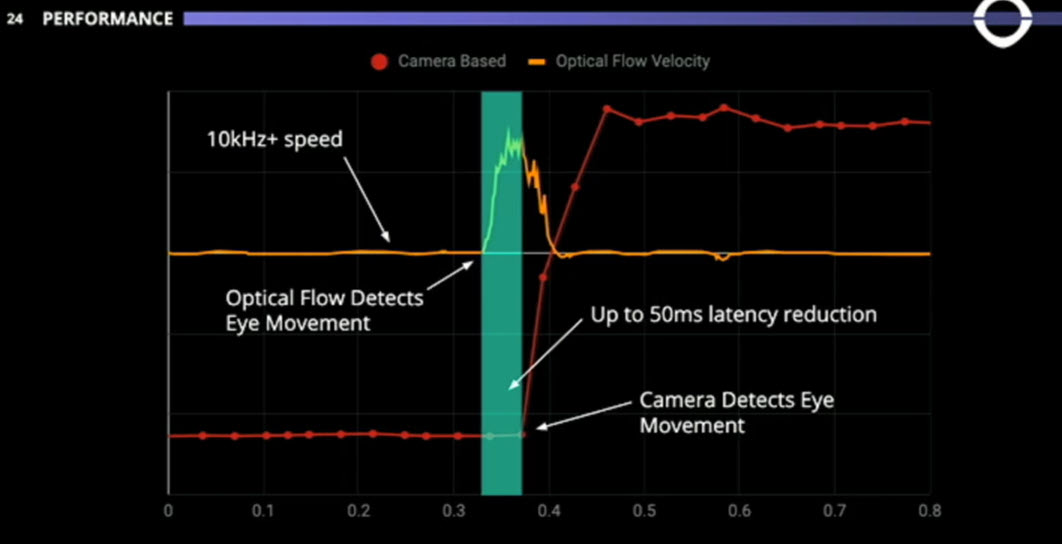

Tang said that Avegant has a technology called ‘sensor fusion of optical flow data’. It uses an extra sensor that draws just 10 mW and can run at tens of kHz to detect saccadic movement. It also captures more data, and much earlier, than a camera-based sensor. The latency advantage can be up to 50 ms, but more importantly, you can power down the camera for up to 90% of the time.
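The power and latency claims can be framed with simple duty-cycle arithmetic. The 10 mW sensor, the tens-of-kHz rate and the 90% camera-off figure come from the talk; the 100 mW camera power and 120 Hz frame rate are hypothetical placeholders, so the absolute numbers are only illustrative.

```python
# Rough duty-cycle arithmetic for a fused eye tracker.
# From the talk: 10 mW sensor, tens of kHz, camera off up to 90% of the time.
# Hypothetical placeholders: camera power and camera frame rate.

SENSOR_MW = 10.0           # always-on optical-flow sensor (from the talk)
SENSOR_RATE_HZ = 10_000    # low end of "tens of kHz"
CAMERA_MW = 100.0          # hypothetical camera + processing power
CAMERA_RATE_HZ = 120       # hypothetical camera frame rate
CAMERA_OFF_FRACTION = 0.9  # camera powered down up to 90% of the time

camera_only_mw = CAMERA_MW
# Average power: sensor always on, camera on only for the remaining fraction.
fused_mw = SENSOR_MW + CAMERA_MW * (1 - CAMERA_OFF_FRACTION)

print(f"camera only: {camera_only_mw:.0f} mW, fused: {fused_mw:.0f} mW")
print(f"sample interval: sensor {1000 / SENSOR_RATE_HZ:.2f} ms, "
      f"camera {1000 / CAMERA_RATE_HZ:.2f} ms")
```

With these placeholders the fused tracker averages about 20 mW against 100 mW for an always-on camera, and the sensor samples every 0.1 ms rather than every 8.3 ms.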

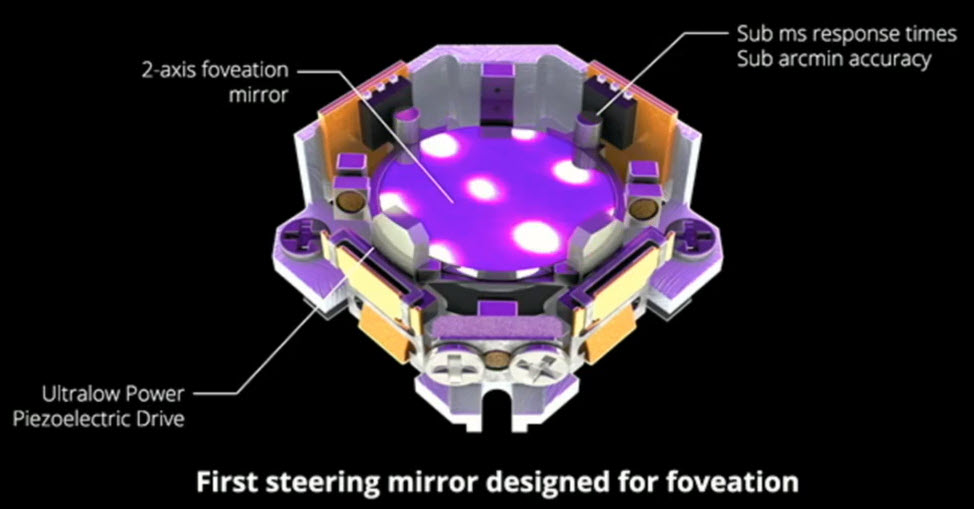

Having tracked the movement, how do you move the good pixels around fast enough? At the moment there isn’t a mirror that’s fast enough to hit all the specs needed, so Avegant has designed one. It’s a two-axis mirror designed to steer light from any source, using a piezoelectric drive to keep power very low, especially when it’s not moving. It has sub-millisecond response times and sub-arcminute accuracy. If you want to perform good foveated display, you need to be in that region of accuracy, and to keep up with the eye, you need to be that fast. Tang said that the device is the first steering mirror designed for foveation.
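The sub-arcminute figure follows from the retina-resolution target: at 60 ppd each pixel subtends exactly one arcminute, so a steering error of that size shifts the image by a whole pixel. The sketch also applies the law of reflection, under which the reflected beam turns by twice the mirror’s tilt. It assumes the beam goes straight to the eye; a real optical train with magnification between mirror and eye would change that scale factor.

```python
# Why sub-arcminute steering matters at retina resolution.
# Assumption: the reflected beam goes directly to the eye (no magnifying
# optics), so the beam deflects by twice the mirror tilt.

ARCMIN_PER_DEG = 60.0

def pixel_size_arcmin(ppd: float) -> float:
    """Angular size of one pixel in arcminutes."""
    return ARCMIN_PER_DEG / ppd

def mirror_tilt_arcmin(beam_shift_arcmin: float) -> float:
    """Mirror tilt needed to move a reflected beam by beam_shift_arcmin."""
    return beam_shift_arcmin / 2.0

px = pixel_size_arcmin(60)
print(f"one pixel at 60 ppd = {px:.1f} arcmin; "
      f"mirror tilt for a one-pixel shift = {mirror_tilt_arcmin(px):.1f} arcmin")
```

Under this simple model, holding the image to within one pixel means controlling the mirror itself to better than half an arcminute.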

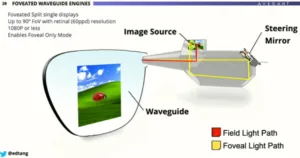

Avegant has developed a number of different engines over a decade or so of work, including a single-chip display engine that creates both images, at their different resolutions, from one chip. The two images follow different light paths through the system. With an existing 1080p chip, the firm can achieve a 90° field of view with retinal resolution.
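Because field of view in degrees is simply pixels divided by pixels per degree, a quick calculation shows why one 1080p chip cannot cover 90° at 60 ppd uniformly, and how two images from the same chip might divide the job. How Avegant actually allocates the chip is not described in the talk, so the 1080-pixel allocations below are hypothetical.

```python
# Field of view = pixels / pixels-per-degree.
# The allocations of 1080 pixels below are hypothetical, not Avegant's design.

def fov_deg(pixels: int, ppd: float) -> float:
    """Degrees covered by a row of pixels at a given pixels per degree."""
    return pixels / ppd

def ppd_over(pixels: int, fov: float) -> float:
    """Pixels per degree when a row of pixels is spread over fov degrees."""
    return pixels / fov

# Uniform 60 ppd across 90 degrees would need this many pixels per axis:
print("pixels for 90 deg at 60 ppd:", 90 * 60)                # 5400
# Hypothetical: 1080 pixels used for the foveal image at 60 ppd...
print(f"foveal inset: {fov_deg(1080, 60):.0f} deg")           # 18 deg
# ...and 1080 pixels stretched across the full 90-degree field.
print(f"peripheral:   {ppd_over(1080, 90):.0f} ppd")          # 12 ppd
```

A 1080-pixel axis falls five times short of the 5400 pixels a uniform 90° retina display would need, which is the gap the steerable foveal image has to close.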

Avegant uses different optical paths for the small high resolution foveal image and the lower resolution peripheral image, but the same imager for both.

Turning to software, low latency is essential for realism and comfort, but none of the existing graphics pipelines has tight enough tolerances for this kind of foveated display, getting the right pixels to the right place at the right time. The firm has made its own compositor software that takes all the inputs and creates the right output.

The firm can take a scene from an engine such as Unity and render a foveal and a peripheral image, and the compositor decides when to display them. Avegant also has light field technologies from earlier work and products, and uses that information only in the fovea, as peripheral vision doesn’t perceive focus, so why worry about it?
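To make ‘the compositor decides’ a little more concrete, here is a toy decision step for a gaze-contingent inset. Everything in it is hypothetical (the function name, the ±30° steering limit, the 0.5° small-move threshold and the labels); it is not Avegant’s compositor, only an illustration of using saccade detection to judge whether moving the foveal image will be hidden by saccadic masking.

```python
import math

# Toy compositor decision step. All names, limits and labels are
# hypothetical and only illustrate the idea described in the talk.

STEER_LIMIT_DEG = 30.0   # hypothetical +/- range of the steering mirror
SMALL_MOVE_DEG = 0.5     # hypothetical: below this, follow gaze every frame

def clamp(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))

def plan_inset_move(current: tuple[float, float],
                    gaze: tuple[float, float],
                    in_saccade: bool) -> tuple[tuple[float, float], str]:
    """Pick the next foveal-inset position and label how visible the move is."""
    target = (clamp(gaze[0], -STEER_LIMIT_DEG, STEER_LIMIT_DEG),
              clamp(gaze[1], -STEER_LIMIT_DEG, STEER_LIMIT_DEG))
    dist = math.hypot(target[0] - current[0], target[1] - current[1])
    if dist <= SMALL_MOVE_DEG:
        return target, "track"    # small drift or smooth pursuit: follow directly
    if in_saccade:
        return target, "masked"   # large jump during a saccade: hidden by masking
    return target, "visible"      # large jump outside a saccade: may be noticed

print(plan_inset_move((0.0, 0.0), (0.2, 0.1), in_saccade=False))
print(plan_inset_move((0.0, 0.0), (12.0, -4.0), in_saccade=True))
print(plan_inset_move((0.0, 0.0), (45.0, 0.0), in_saccade=False))
```

A real compositor would also have to budget for render and mirror settling latency, predicting where a saccade will land rather than reacting to where the gaze is now.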

The firm has prototypes of AR and VR designs using this foveated approach, which achieve significant power savings, not just in the system but in illumination as well.

Analyst Comment

I have banged on for more years than I can remember about the benefits of actually taking the time to understand human vision and perception when designing displays. That’s why I was so pleased to spot and publish the release of information about Candice Brown Elliott’s PenTile Matrix technology back in November 1999, and why I have followed Varjo’s development of headsets. I think the sensor work that Avegant has done to enable faster, lower-power gaze tracking is also potentially very important. (BR)

(and please excuse the headline – I couldn’t resist getting a Queen song title alongside one from the Police in a single headline!)