After the Easter break, there seems to have been something of a slowdown in “big news”, so the Front Page stories were less obvious than last week. (The worldwide PC market story arrived just as we were going to press.)

My week changed when a flight to France was cancelled because of an Air Traffic Control labour dispute. I had been intending to visit the Laval Virtual event, which focuses on AR and VR, but that trip had to be called off. That is a shame, as there were some interesting talks as well as local and international exhibitors, including Barco, Christie, Nvidia, Optinvent, Orange, Panasonic and Walt Disney. Laval is not far from Rennes, where there is a lot of R&D – Technicolor continues to have a centre there. The experience, sadly, reminded me of the run-up to our first DisplayForum event in Nice, when there were ATC disputes in several of the preceding weeks. The stress took years off my life!

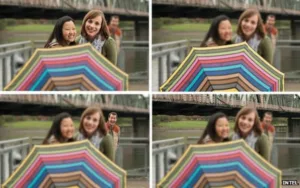

A smaller story this week was the news that Intel is developing its RealSense technology for smartphones. We’ve been reporting on the technology, which adds a 3D depth map to images captured with the system’s camera, since CES in 2014, and as the Creative Senz3D since IFA 2013. Although it’s not a display technology, it does affect the operation of the device and has a number of applications. It could be thought of as a Kinect-style device, and Kinect had a big impact on the Xbox market. I have written before of the need for more sensors in displays and I include this one in that category.

Initially, the depth camera was developed by Creative Labs as a stand-alone webcam, the Senz3D. We tried it as a “gesture input” device at IFA 2013 and were unimpressed. Creative has now dropped the price and the product looks distinctly “end of line”. Intel dropped SDK support, it seems, so even for developers it has not been as useful as it might have been. Online reviews suggest that buyers have found few apps and limited use cases. (Intel also sells a stand-alone webcam, the F200, but only to developers.)

At CES 2015, someone on the Intel booth asked me to try the technology again on the Dell Venue tablet, which was just being launched. Initially I resisted, as I had not been impressed before, but I thought I’d better look again. I was glad I did, although I only spent a short time on the demo.

One of the obvious applications, and one of the reasons that Creative was interested in putting it into a webcam, is to detect the face and head of someone on a web conference call and allow the replacement of the background – effectively an automated bluescreen effect. The depth data allows a very accurate cut-off of backgrounds. Intel has said that the technology is supported by Skype. However, that is something of a gimmick to me.
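To illustrate why depth makes the cut-out so simple, here is a minimal sketch in Python. It is not Intel's or Skype's actual method; the function name, the nested-list image layout and the 1.2 m cut-off are all assumptions made for the example. Any pixel nearer than the cut-off is kept, and everything else is taken from the replacement background.

```python
def replace_background(image, depth, background, max_depth_m=1.2):
    """Keep pixels nearer than max_depth_m; take the rest from background.

    image, background: rows of pixel values of the same size.
    depth: rows of distances in metres, one per pixel (hypothetical layout).
    A depth of 0 or None is treated as "no reading" and counted as background.
    """
    out = []
    for img_row, depth_row, bg_row in zip(image, depth, background):
        row = []
        for pixel, d, bg_pixel in zip(img_row, depth_row, bg_row):
            is_foreground = bool(d) and d < max_depth_m
            row.append(pixel if is_foreground else bg_pixel)
        out.append(row)
    return out


# Tiny 2 x 2 example: letters stand in for pixel colours.
image = [["a", "b"], ["c", "d"]]
depth = [[0.8, 3.0], [0.0, 1.0]]
background = [["w", "x"], ["y", "z"]]
result = replace_background(image, depth, background)
```

A real system would presumably soften the edge of the mask and fill in missing depth readings, but the core decision is a single comparison per pixel, which is why it can run live on a video call.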

This technique, though, can also be used to easily crop objects from photographed scenes. As a keen photographer, I often would like to remove objects from images, either just to clean up the image, or to allow them to be added to another scene. The RealSense demo I saw at CES showed how quickly this could be done. Using depth is much quicker and more accurate than other techniques that I know for isolating a shape.

Building on this concept, because the camera knows the depth of an object and how big it is on the sensor, it can calculate the size very quickly and accurately. That alone can make it a very useful tool in a number of applications, although it only really works at depths of up to “several metres”.
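The size calculation can be sketched with the simple pinhole camera model, which is my assumption here rather than anything Intel has described. The object's extent in pixels is multiplied by its depth and divided by the lens focal length expressed in pixels. The function and parameter names are hypothetical.

```python
def object_size_m(extent_px, depth_m, focal_length_px):
    """Estimate the real-world extent of an object from its size in the image.

    extent_px: width or height of the object in the image, in pixels.
    depth_m: distance from the camera to the object, in metres.
    focal_length_px: lens focal length in pixel units (assumed to be known
    from the camera's calibration).
    """
    if focal_length_px <= 0 or depth_m <= 0:
        raise ValueError("focal length and depth must be positive")
    return extent_px * depth_m / focal_length_px


# An object 300 pixels wide, 2 m away, seen through a 600-pixel focal length.
width_m = object_size_m(300, 2.0, 600)
```

With these numbers the object comes out at 1 m wide. The accuracy depends directly on the depth reading, which is one reason the range limit matters.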

Further, with the right software, the object can then be moved within the picture and scaled appropriately. That’s very cool for creative photography (but of course, it means that there needs to be a standardised way to transmit the depth data with the image to the editing software).
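The rescaling step follows from the same pinhole assumption: apparent size is inversely proportional to distance, so an object moved from one depth to another is scaled by the ratio of the two depths. This is a sketch with hypothetical names, not a description of the demo software.

```python
def rescale_for_new_depth(width_px, height_px, old_depth_m, new_depth_m):
    """Return the pixel size an object should have after moving it in depth.

    Under a pinhole model, apparent size is proportional to 1 / depth,
    so the scale factor is old_depth_m / new_depth_m.
    """
    if old_depth_m <= 0 or new_depth_m <= 0:
        raise ValueError("depths must be positive")
    scale = old_depth_m / new_depth_m
    return round(width_px * scale), round(height_px * scale)


# Push a 200 x 100 pixel cut-out from 1 m back to 2 m: it halves in size.
new_size = rescale_for_new_depth(200, 100, 1.0, 2.0)
```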

One of the most annoying mistakes to make in photography is to get the focus point wrong. It’s not made any easier by the relatively low-resolution displays on the backs of many digital cameras. Having to zoom in on every image to check the focus really can slow down a session. Cameras such as the Lytro light field devices promise to solve this problem, but with the amount of data needed, the resolution of images captured (480 x 800 on the Illum model) makes them impractical at the moment. On the other hand, knowing the depth of each pixel in the image would allow post-processing software to do a much better job of correcting for wrong focus (especially when combined with the kind of camera and lens-specific data in software such as DxO Optics Pro).
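One way to see why depth helps is the standard thin-lens blur circle formula, which gives the size of the blur for a point at a known distance. The sketch below is my own illustration, with hypothetical names, and says nothing about how any particular product works. If software knows the depth at each pixel, it can estimate how blurred that pixel is and correct it accordingly.

```python
def blur_circle_m(aperture_m, focal_length_m, focus_dist_m, subject_dist_m):
    """Diameter on the sensor of the blur circle for an out-of-focus point.

    Thin-lens approximation. All lengths are in metres.
    aperture_m: diameter of the lens aperture.
    focus_dist_m: distance at which the lens is focused.
    subject_dist_m: actual distance of the point (from the depth map).
    """
    if focus_dist_m <= focal_length_m or subject_dist_m <= 0:
        raise ValueError("focus must be beyond the focal length; depth > 0")
    magnification = focal_length_m / (focus_dist_m - focal_length_m)
    defocus = abs(subject_dist_m - focus_dist_m) / subject_dist_m
    return aperture_m * defocus * magnification


# A 50 mm lens at f/2 (25 mm aperture) focused at 2 m, subject actually at 4 m.
blur = blur_circle_m(0.025, 0.05, 2.0, 4.0)
```

For this example the blur circle is roughly 0.32 mm across on the sensor, which is many pixels wide on a typical camera, so the focus error would be obvious.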

The depth data allows sophisticated re-focusing

Anyway, it seemed to me at the time of the demo, and since, that I would really like to see the technology in a dedicated camera, rather than just in smartphones. Of course, it probably needs a fair amount of processor power and there are implications for form factor (although the smartphone version should be acceptable) and for file formats.

Bob