ACES (the Academy Color Encoding System) version 1.0 was released about two years ago. It was developed to make it easier to manage color consistency across multiple cameras and through the production pipeline.

In a panel discussion at the HPA Tech Retreat, NBC Universal’s VP of creative technologies reported that 49 titles have been completed using the workflow so far, including feature films, episodic content, gaming and even corporate productions.

The group also reported that a second set of tests, with a wide range of cameras and test shots, has been sent to different post-production houses for color correction. The results now look very good, with great uniformity in color across the houses when they follow the ACES 1.0 protocol, he said.

The organization is also working on a remastering workflow and will report later on the work done on the digital remaster of “The Troop.” Other areas of activity include HDR and SDR/HDR transforms.

However, the big focus is on the next major upgrade, called ACESnext, and key members are on a “listening tour” to hear what needs fixing in ACES 1.0. One idea being developed is to combine ACES with IMF (Interoperable Master Format), which serves as an important delivery format in Hollywood.

In a separate technology session, Gary Demos gave his typically dense technical presentation at HPA. Demos and Joe Kane have been pushing for the industry to adopt a “single master” format for distribution in much the same way that cinema masters are distributed. In both cases, the content is adjusted at the display based on the capabilities of the display but without having to transmit metadata.

Demos actually demonstrated this at HPA, where he showed HDR content displayed using his format, with the only two inputs being the peak luminance of the display and the ambient light level in the room.

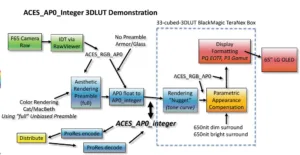

In his companion talk, he went into more detail on this concept, focusing on what he calls “parametric appearance compensation”. This has to do with an improved model for how to adjust video colors so they look more natural to the viewer. He wants to have this considered a part of the ACESnext upgrade.

Background to the Issue

By way of a little background, when a camera views a yellow flower in a dimly lit or a bright scene, the chromaticity should be preserved and just the luminance of the flower should vary up and down. But when the yellow flower is viewed in real life, it may not always be perceived as yellow. For example, it may appear brown in dimly lit scenes but yellow in bright scenes. This is an effect of the human visual system. How these colors appear to us is affected by a number of parameters, including the absolute luminance level, the ambient light level, our adaptation state, refresh rates and more.
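The camera side of this can be illustrated with a minimal sketch. It assumes linear-light RGB values with sRGB/Rec. 709 primaries and uses the standard RGB-to-XYZ matrix for those primaries; the flower color and the 5% dimming factor are made-up values chosen only for illustration, and none of this comes from Demos' presentation. Scaling linear RGB by a constant changes the luminance Y but leaves the CIE xy chromaticity unchanged.

```python
# Linear-light sRGB / Rec. 709 to CIE XYZ (D65), standard published matrix.
RGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def rgb_to_xyz(rgb):
    """Convert a linear RGB triple to CIE XYZ."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in RGB_TO_XYZ)


def chromaticity(xyz):
    """Return the CIE 1931 (x, y) chromaticity of an XYZ triple."""
    X, Y, Z = xyz
    total = X + Y + Z
    return (X / total, Y / total)


def scale(rgb, k):
    """Scale a linear RGB triple by k, as a change in scene light level would."""
    return tuple(k * c for c in rgb)


# Hypothetical linear RGB value for a yellow flower in a bright scene.
yellow = (0.9, 0.8, 0.1)

bright_xyz = rgb_to_xyz(yellow)
dim_xyz = rgb_to_xyz(scale(yellow, 0.05))  # same flower at 5% of the light

print("bright: Y =", bright_xyz[1], "xy =", chromaticity(bright_xyz))
print("dim:    Y =", dim_xyz[1], "xy =", chromaticity(dim_xyz))
```

The two printed chromaticities match to within floating-point rounding, while Y drops by the scale factor. The captured signal therefore says "same yellow, less light" even when a viewer in the real scene would perceive the flower differently.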

These phenomena have been described and studied quite a lot (Abney effect, Stevens effect, Hunt effect, Bezold-Brücke effect and Helmholtz-Kohlrausch effect). The CIE has developed color appearance models that help transform captured color and luminance information so that the displayed scene appears to look like the original even with a reduced luminance and color range.

Demos used his paper to introduce this concept and explore the details of the above effects, going pretty deeply into color science. His main point is that much of the vision and display science was done in the days of SDR displays and that, with HDR, these effects are less well understood at the darker and brighter ranges that HDR displays can produce. He suspects that all these effects are actually magnified at darker and brighter levels, and that most of them are “neither small nor subtle.”

Demos has proposed a new structure for the next-generation ACES system. This approach takes account of the fact that we don’t yet know a lot about how we perceive colors at these lower and higher luminance levels, so he suggests separating the appearance compensation part of the transforms so that it can be more easily updated as we learn more.

This parametric appearance model would have to know the display characteristics and settings as well as the viewer’s surround condition and, ideally, their particular color matching function (CMF) based upon their eyes and the angle subtended by the screen. These variables would then drive the color transforms to better display the perception of the scene for that viewer. Demos even envisions the display adjusting the ambient lights for best response. It’s not clear how well that would go over with consumers, nor how they would take to having their eyes tested to determine their CMF!

The schematic below summarizes the envisioned ACES 2.0 workflow he used for the demo results that he showed separately in the Innovation Zone.

Demos summarized the advantages as:

- Built on “radiometric” (chromaticity-preserving) spine. This enables:

  - colorfulness compensation

  - ambient surround compensation

  - unambiguous masters

  - end-to-end calibration

  - optional CMF accuracy improvement

- Display brightness parameter compensates for presentation display brightness differences and for ambient surround differences

He also listed a number of steps he now wants to take to explore the impact of these various visual effects on the extended luminance ranges offered by HDR displays. –CC