Christopher Pickett is CEO of Pelican Imaging, which has a depth camera for mobile devices. We have written about the company before: it uses camera arrays and calculates depth from the multiple images they capture. Simple patterned light sources help the process: you take the edges and the patterns and calculate the depth of objects by comparing the bitmaps from the different cameras.
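The article doesn't describe Pelican's own algorithm. As a generic illustration of how comparing images from two cameras yields depth, here is a minimal Python sketch of classic two-view block matching followed by triangulation. It assumes rectified images (a feature appears on the same row in both cameras) and a pinhole camera model; the function names and the focal length and baseline in the example are hypothetical.

```python
def best_disparity(left_row, right_row, x, window=2, max_disp=8):
    """Return the pixel shift that best matches a small patch centred on x.

    A feature at column x in the left image appears at column x - d in the
    right image. We pick the d that minimises the sum of absolute
    differences (SAD) between the two patches.
    """
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - window < 0:
            break  # the shifted patch would fall off the edge of the right row
        cost = sum(
            abs(left_row[x + k] - right_row[x - d + k])
            for k in range(-window, window + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Pinhole triangulation: Z = f * B / d, in metres."""
    if disparity_px <= 0:
        return float("inf")  # no measurable shift: point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# Hypothetical 1D image rows: the same bright feature, shifted 3 px between cameras.
left = [0] * 10 + [5, 9, 5] + [0] * 7
right = [0] * 7 + [5, 9, 5] + [0] * 10
d = best_disparity(left, right, x=11)
z = depth_from_disparity(focal_px=700, baseline_m=0.01, disparity_px=d)
```

Real systems match in 2D, refine disparities to sub-pixel precision and reject ambiguous matches, but the core idea is the same: the nearer the object, the larger the shift between views.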

Pickett said that the challenge is integrating real and virtual objects: you have to know where the objects are and where the user is. Without depth information, it is hard to get the scale right.

Near-field accuracy is about 99%; in the far field, you probably need more than 95%, which is quite tricky with every technology. With other sensors, measuring and scanning at longer distances uses more power, but that is not the case with cameras. Cameras also work well both indoors and outdoors.

Pickett said that current infrared-based patterned-light systems don’t work outdoors, or even near windows. With “time of flight” systems (which measure the time light takes to bounce off objects), power consumption varies with distance, and it is hard to be accurate when objects are close.
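To make the time-of-flight principle concrete, here is a minimal Python sketch of the basic relationship: the light travels to the object and back, so depth is half the round-trip distance. The helper names are hypothetical, and the sketch ignores real-world effects such as multipath reflections and sensor saturation.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_depth(round_trip_s):
    """Distance to the target from the measured round-trip time of a light pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def required_timing_resolution(depth_resolution_m):
    """Round-trip timing precision needed to resolve a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_S


# Resolving a 1 cm depth step needs timing precision of roughly 67 picoseconds.
dt = required_timing_resolution(0.01)
```

The picosecond-scale timing shows why time-of-flight sensors need dedicated, tightly controlled illumination and electronics, in contrast to the passive image comparison used by camera arrays.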

Stereo cameras give noisy depth data, which is why Pelican uses more cameras to smooth out the noise. Stereo also has problems with depth mapping at close range. Further, if the sensors drift out of calibration, they no longer work properly.
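Why should more cameras reduce noise? Under a simplified model, assuming each camera pair yields an independent, zero-mean noisy depth estimate, averaging N estimates shrinks the noise by a factor of about the square root of N. The Python sketch below simulates this; the function name and the numbers are hypothetical, and Pelican's actual fusion method is not described in the article. Real camera errors are partly correlated, so the gain in practice would be smaller.

```python
import random
import statistics


def simulate_depth_noise(true_depth_m, sigma_m, n_estimates, trials, seed=0):
    """Standard deviation of the mean of n independent noisy depth estimates.

    Each estimate is modelled (hypothetically) as the true depth plus
    Gaussian noise with standard deviation sigma_m.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(trials):
        samples = [rng.gauss(true_depth_m, sigma_m) for _ in range(n_estimates)]
        means.append(statistics.fmean(samples))
    return statistics.pstdev(means)


# One estimate vs. the average of four: noise should roughly halve.
single = simulate_depth_noise(2.0, 0.05, n_estimates=1, trials=20_000)
four = simulate_depth_noise(2.0, 0.05, n_estimates=4, trials=20_000)
```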

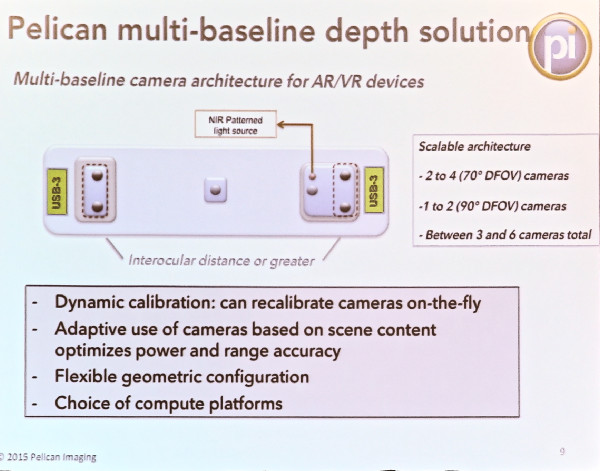

Pelican uses multiple arrays to help with both short and long distances. A big advantage is that the system can recalibrate dynamically, on the fly. Multiple devices also don’t interfere with each other.

Accuracy falls off with distance, but how quickly depends on the distance between the cameras (the baseline): the farther apart they are, the better.
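The trade-off can be quantified with the standard stereo error model. Starting from the triangulation relation Z = f·B/d (focal length f in pixels, baseline B, disparity d), differentiating gives |ΔZ| ≈ Z²·Δd/(f·B). Depth error therefore grows with the square of distance and shrinks in proportion to the baseline. This is a minimal Python sketch under that idealised model; the function name and the 0.25-pixel matching error are hypothetical.

```python
def depth_error(depth_m, focal_px, baseline_m, disparity_error_px=0.25):
    """Approximate depth uncertainty for one camera pair.

    From Z = f * B / d, dZ/dd = -f * B / d**2 = -Z**2 / (f * B), so a
    disparity error delta_d gives |delta_Z| ~= Z**2 * delta_d / (f * B).
    disparity_error_px is a hypothetical sub-pixel matching precision.
    """
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


near = depth_error(1.0, focal_px=700, baseline_m=0.01)
far = depth_error(2.0, focal_px=700, baseline_m=0.01)       # twice the distance
wide = depth_error(1.0, focal_px=700, baseline_m=0.02)      # twice the baseline
```

Doubling the distance quadruples the error, while doubling the spacing between cameras halves it. This is why a mobile device's small physical size limits far-field accuracy.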

Different cameras in the array can capture different parts of the spectrum (e.g., infrared), which may provide more data. The standard cameras that Pelican supplies support both infrared and visible light.

Pelican’s camera array uses four or more low cost cameras.

The optics are all fixed-focus, which keeps the modules very slim (2.5 mm to 3 mm); the optics are also very standard and low cost.

Pickett said that products could be on the market in Q2 2016.

Analyst Comment

I first tried a depth camera when I got a demo of the RealSense system on a Dell tablet at CES this year. (Intel Gives a Keynote – and Might Have Convinced us About Realsense). I was impressed. Clearly others are impressed too: in April, Apple bought Linx Imaging, which, like Pelican, makes array cameras. It looks as though Pelican may have a hot product here. (Looks Like 3D Depth Capture is a Hot Topic) (BR)