Ken Lee of VanGogh Imaging talked about mixed reality for smartphones and wearables. The company has expertise in software and 3D computer vision.

VanGogh is seven years old and has worked in the medical and 3D printing sectors, and has even worked on weighing cows! There are lots of AR applications and Lee talked about some of them, but he said that they always need a way to locate the glasses relative to the real world. Markers such as simple QR codes can be used to set locations. 2D tracking without markers is also possible, but it is less reliable and is sensitive to lighting.

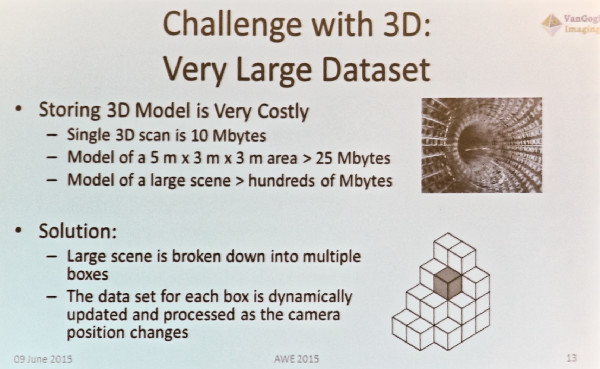

With 3D you can get scale, dimensions, motion and occlusion. There has been a big push by Intel to promote depth sensors. VanGogh's pipeline consists of scene capture (which is dynamic), object recognition and tracking, 3D model reconstruction, and then an analysis of the scene.

One challenge is that the process is computationally intensive (which is, no doubt, why Intel is so keen), but it can be done on a phone, even using only the CPU rather than a GPU. Around 10% to 15% of the CPU power is typically used by the depth camera. The process also generates very large datasets, which can be hard to handle. Lee said that many of the 3D sensors available at the moment are very noisy, so you have to do a lot of work to clean up the data.
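
To give a feel for what cleaning up noisy depth data can involve, here is a minimal sketch of a median filter over a small depth map. This is a generic technique chosen for illustration, not VanGogh's method, which Lee did not describe. The function name `denoise_depth`, the use of millimetres and the convention that a value of 0 means "no reading" are all assumptions.

```python
from statistics import median

def denoise_depth(depth, radius=1, invalid=0):
    """Median-filter a depth map given as a list of rows.

    Assumption: `invalid` (0 here) marks pixels where the sensor
    returned no reading; those are ignored when taking the median.
    """
    rows, cols = len(depth), len(depth[0])
    out = [[invalid] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Collect valid readings in the window around (r, c),
            # clipped at the image borders.
            window = [
                depth[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
                if depth[rr][cc] != invalid
            ]
            # Leave the pixel invalid if no neighbour has a valid reading.
            out[r][c] = median(window) if window else invalid
    return out

# Hypothetical 3x3 depth map in mm: one spike (5000) and one hole (0).
noisy = [
    [1000, 1000, 1000],
    [1000, 5000, 1000],
    [1000, 1000, 0],
]
cleaned = denoise_depth(noisy)
```

In this toy input the spike in the centre and the hole in the corner are both replaced by the surrounding depth. Real sensor data would need considerably more than this, which is consistent with Lee's point that the clean-up is a lot of work.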

In response to a question, Lee said it should be possible to construct photo-realistic texture maps, but “nobody is quite there, yet”.