The final keynote speaker was Mike Mayberry of Intel, who looks after Intel Labs and controls Intel’s spending on university research.

Chip development is “Easy to say, but hard to do”, and Intel’s priority is extending Moore’s Law. But there is a second function, which is working out what Intel should do, and that means imagining new applications and ideas rather than simply developing.

The numbers in the semiconductor industry are very impressive and Mayberry quoted the famous Arthur C Clarke saying “Any technology that is sufficiently advanced is indistinguishable from magic”. You can now make around 2 billion transistors per cm² and that means around 100 million bits of storage per cm².

The industry is making around 500 × 10^18 transistors every year. The technology keeps developing, with each generation doubling the previous generation.

There are always forecasts and 22, 14, 10, 7, 5 nm production facilities are on the roadmap. What could you do if that is possible? The core for Intel is compute power, but sensing and security are becoming more important now. The real world is messy and analogue and is not good for computers, so sensing is tricky. Mayberry said that security has to be “in from the start”.

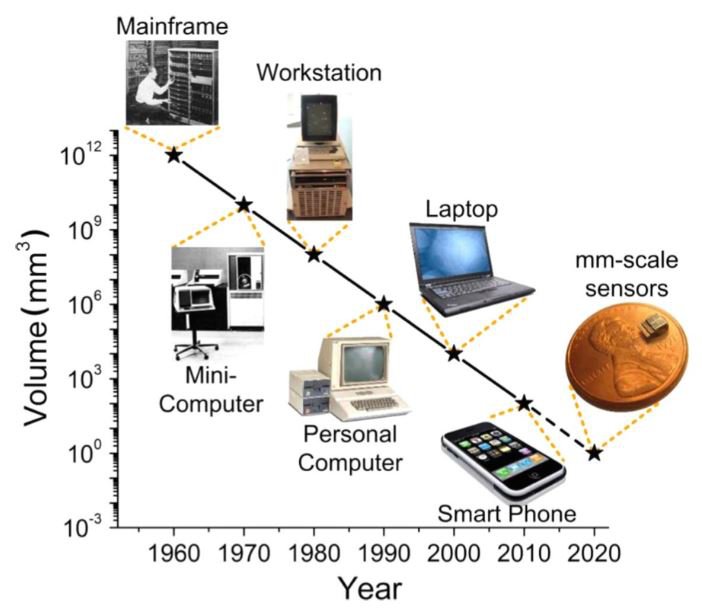

Mayberry quoted “Bell’s law of Computer Classes”, which holds that a new class of smaller devices emerges every ten years. The industry has gone from mainframes to minis to workstations to PCs and then to handhelds… but that may have stopped because of human factors. But, he asked, what could you do if you weren’t limited by human factors? What could you do with smart dust? What if you had computers at millimetre scale?

Bell’s law of computer classes: every 10 years, a new class. Image: Finesol

Intel has built a series of these: the Quark, the Curie and the Jasmine, which has the equivalent of a mid-90s PC and can be powered indefinitely from a tiny 1 cm² solar panel! (Our notes are very clear on this but, later, in trying to check for more information on the ‘Jasmine’, we could find nothing! – Man. Ed.)

“What’s next if all devices are connected?”, Mayberry asked. You can’t connect everything using current mobile wavebands as there is not enough bandwidth, so meshes and backbones will need to be developed and, in the future, the bulk of communications will be machine to machine. The internet was invented when devices basically “trusted each other”, Mayberry said. Nowadays, trust has to be earned, not assumed. For security to be assured, you need three things: protection, detection and recovery.

What about autonomous driving? That will mean that people will want to do as much as possible in the car. Entertainment and maps in the cloud will be OK, but in rural areas there may be no communication infrastructure, although driving may be easier there. On the other hand, in the city there is more communication infrastructure but driving is harder.

You can use cameras to predict pedestrians or to help manage traffic. All communication channels need to be re-thought to get the right latency, or speed, or both. That may need multiple protocols rather than a “one size fits all” single system. Security becomes a big issue and there will be challenges in the repair of devices that need to be trusted in the car.

In education, adaptive learning can be enabled and optimised by using a camera that looks at the user to check their engagement and combining this with analytics such as mouse movement. There is a reasonable degree of accuracy with a single mode, but multi-mode improves things, and personalisation is even better. “Duh!”, Mayberry said, “humans are different”. The more you know, the better, but that can mean you have too much data.

Today, we can do machine learning with some human intervention, Mayberry said. There has been some success in recent times, especially with deep learning. However, AI systems are still not good at handling ambiguity and can be fooled repeatedly. At the moment, they have little concept of context and it would be good to have autonomous learning. AI has been defined differently every decade, Mayberry said.

Designing Computers for Deep Learning

Getting back to compute, how do you design it for these new problems? Reconfigurable compute is useful, he said, but deep learning is not well suited to software; you need a lot of speed, although algorithms are improving rapidly. The systems must also become easier to program.

Could you make more optimal hardware for AI? Yes, at least in theory, although Intel is still proving it. A “neuromorphic processor” might be 100X more energy efficient than current processors in AI applications. Such processors might also learn much faster than current systems.

There are some “truly radical things” being developed, Mayberry said, and he identified the university in Delft, which is working on quantum computing. Hardware is being developed for the qubits that you need in quantum computing. However, you have to be able to interface to the qubits and that means using supercomputing for error correction, he said.

Intel is partnering with Princeton to understand the neuroscience of the brain. In the project, you show pictures to subjects, scan their brains in real time and look for patterns, which needs a lot of processing. You can show people movies, then ask them to imagine the movie. The question is, can you detect from their brain activity what they are imagining? At the moment, Mayberry said, there is around 10% accuracy, which is much better than random and is improving. However, we may be 15 years out from a real understanding of how the brain processes images. It’s not just computing, Mayberry said, it’s materials and ideas as well.