In the “Capturing Immersive Images” session, Pete Lude from Mission Rock Digital gave an overview of some of the capture techniques, including lidar scanning, time-of-flight sensors, structured light capture and plenoptic cameras. He highlighted that Lytro debuted its cinema plenoptic camera at this same event two years ago, but that the company has now been purchased by Google (well, some of the staff have joined Google; see Lytro Is No More – Man. Ed.) and the camera’s fate is unknown. Plenoptic cameras remain on the market, however, including models from Raytrix. Lude cited a number of patents around light field capture and integral photography (Lippmann 1908), and traced the ideas all the way back to Leonardo da Vinci.

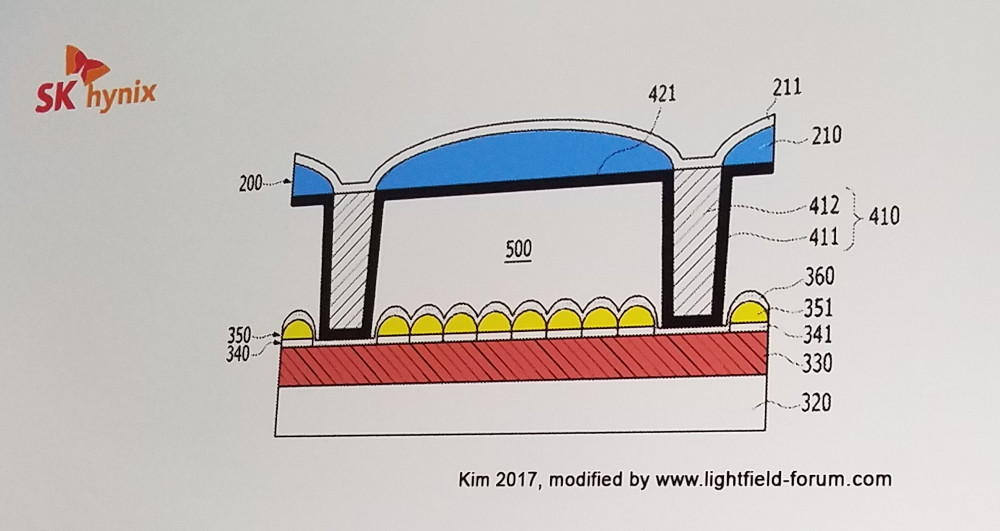

He then highlighted some miniature plenoptic capture devices.

Light field capture offers cinematographers a lot of post-production control over framing and composition; depth of field, focal point and camera movement; and lighting and reflections. That means much more flexibility to fix compromised shots and potentially big savings in CG and compositing. But there is no standard light field workflow today, so it is much more expensive than conventional filmmaking.

Lude also gave a brief overview of the state of standards development for AR/VR and light fields, including activities in MPEG-I, JPEG PLENO, IEEE, Khronos Group, CTA, the Streaming Media for Field of Light Displays (SMFoLD) group and SMPTE.

Ryan Damm from Visby focused his talk on “holographic capture”, which he very loosely defined as useful for today’s tethered VR/AR headsets and future light field displays. 360° capture is here today and the creation of 2D versions is fairly mature.

But if you really want to look and move around in the immersive media, you need to go to holographic capture. However, it is very early days for that. There are no established workflows or standards; tools are broken, repurposed or missing altogether; and there is no clear grammar. And where is the audience?

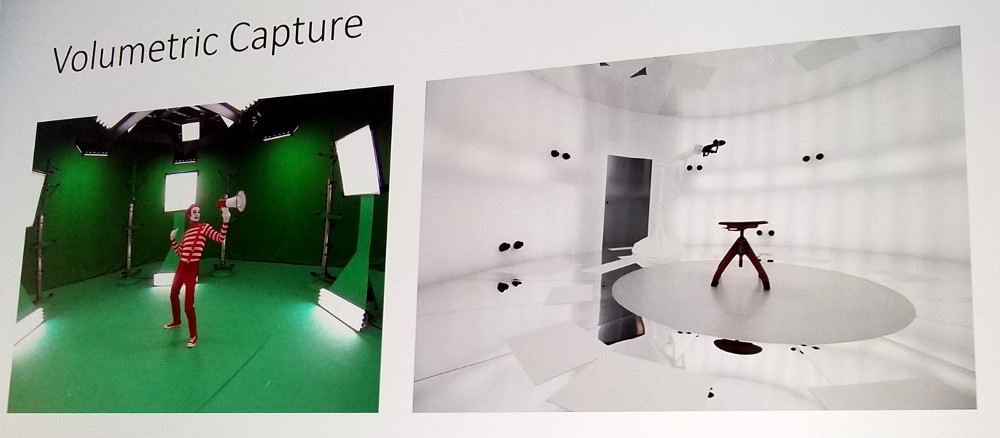

Damm said there are two basic approaches today. The first is volumetric capture, which uses a number of cameras to capture the shape of the object and then synthesizes the lighting. Examples include light stages from 8i, Microsoft, Google and others. These assets are placed in a game engine for production.

The second is light field capture, in which the cameras (typically an array of cameras) capture the light from objects and the system then recreates that light in rendering. Visby sees volumetric capture as needing 20-30 cameras, whereas light field capture needs at least 50 and often 100+ cameras.

Damm noted that 360° video standards use existing 2D codecs and production tools, but future steps are a bit uncertain. Distribution is hard, too, as there is no quality metric. De facto volumetric standards exist too, coming from the game engine and visual effects industries. He cited the efforts of OTOY and its ORBX format as helpful in this area. For light field standards, he thinks there is nothing (contrary to what Lude and Karafin discussed).

The industry needs standards as, without them, each device will render differently and there will be fragmentation. But it is very early days. Damm says Visby plans to show its light field capture approach at next year’s NAB.

Light Field Lab CEO Jon Karafin showed some of the simulations the company has been showing for nearly a year now, which compare various methods of delivering data and rendering for a 3D display application. His ground truth is a light field data set with a data transfer rate of over 500 Gbps. He then showed simulations of various rendering techniques (VR 3DoF, interactive game engine) and how some of the transparencies, reflections and material properties are lost with these approaches.

To solve the problem, LFL has been working with OTOY, which has developed a container format for the distribution of light field data sets. It is based on the needs of the visual effects industry and interoperates with over 30 different tool sets in that industry. Whether the source material is CG or multi-camera generated, the idea is to reduce it to a series of models with geometries and material parameters in a 3D world. LFL calls this its vectorized codec, and it can allow the delivery of the ground truth data set at a mere 300 Mbps.
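To put the two quoted figures side by side, here is a rough back-of-the-envelope sketch in Python. It assumes decimal units (1 Gbps = 10^9 bits per second) and treats "over 500 Gbps" as exactly 500 Gbps, so the result is a lower bound; the function and constant names are illustrative only and have nothing to do with LFL's or OTOY's actual software.

```python
def reduction_ratio(source_bps: float, delivered_bps: float) -> float:
    """Return how many times smaller the delivered bit rate is than the source."""
    if source_bps <= 0 or delivered_bps <= 0:
        raise ValueError("bit rates must be positive")
    return source_bps / delivered_bps


# Figures quoted in the talk, in bits per second (decimal units assumed).
GROUND_TRUTH_BPS = 500e9  # "over 500 Gbps", taken here as a lower bound
VECTORIZED_BPS = 300e6    # "a mere 300 Mbps"

ratio = reduction_ratio(GROUND_TRUTH_BPS, VECTORIZED_BPS)
print(f"Reduction of at least {ratio:,.0f}:1")
```

In other words, the figures imply a reduction of more than three orders of magnitude between the ground truth data rate and the delivered stream.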

This approach also decouples the display application from the source content, allowing a lot of heavy processing to be done at the display rather than at the source (as in a typical game render). – CC