While waiting to meet someone, we dropped into a second session at the IBC conference, after the Immersive Media session (Immersive Media at IBC). It turned out to be an interesting session that followed on from our report on volumetric capture at the Fraunhofer HHI event held just before IFA (3IT Holds an Open Day).

The session, titled “Advanced Volumetric Capture and Processing”, was given by Oliver Schreer, Head of the Immersive Media & Communication Group at Fraunhofer HHI. Today, most 3D content is created as 3D models that are presented as a sequence of meshes using render engines such as Unity or Unreal Engine. Characters are typically animated by tracking parts of an actor's body or face and mapping the captured motion onto the model's geometry. Some content, such as facial expressions and the movement of clothes, is very difficult to replicate this way.

This system creates 1.5 terabytes of data per minute of capture. Image: Meko

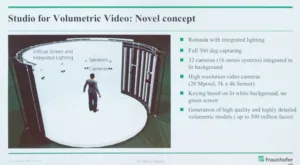

The alternative is to capture scenes as 3D point clouds (Immersive Media at IBC), and HHI has developed a prototype volumetric capture facility in Berlin that uses 32 cameras with 5K x 4K resolution, arranged as 16 stereo pairs. These cameras surround a capture area in which the lighting can be accurately controlled: 120 individually adjustable LED panels provide the background. The system produces 1.6 terabytes of data per minute, although handling that volume of data is no longer as difficult or as expensive as it once was.
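To give a feel for what the quoted data rate means for each camera, the figures can simply be divided out. This is a back-of-the-envelope sketch, not HHI's tooling: it assumes decimal terabytes (10^12 bytes) and an even split of the data across the 32 cameras, and the helper names are hypothetical.

```python
# Back-of-the-envelope data budget for the capture rig described above.
# Figures from the article: 32 cameras, 1.6 TB per minute of capture.
# Assumptions (hypothetical): decimal units, data split evenly across cameras.

TB = 10**12  # bytes in a decimal terabyte
GB = 10**9   # bytes in a decimal gigabyte


def per_camera_rate(total_tb_per_min: float, cameras: int) -> tuple[float, float]:
    """Return (GB per minute, GB per second) attributable to each camera."""
    per_cam_bytes_per_min = total_tb_per_min * TB / cameras
    return per_cam_bytes_per_min / GB, per_cam_bytes_per_min / 60 / GB


def storage_for_session(total_tb_per_min: float, minutes: float) -> float:
    """Total terabytes written during a capture session of the given length."""
    return total_tb_per_min * minutes


gb_min, gb_s = per_camera_rate(1.6, 32)
print(f"{gb_min:.1f} GB/min per camera, {gb_s:.2f} GB/s per camera")
print(f"10-minute session: {storage_for_session(1.6, 10):.1f} TB")
```

Under those assumptions, each camera accounts for about 50 GB per minute, or roughly 0.83 GB per second, and a ten-minute session fills about 16 TB.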

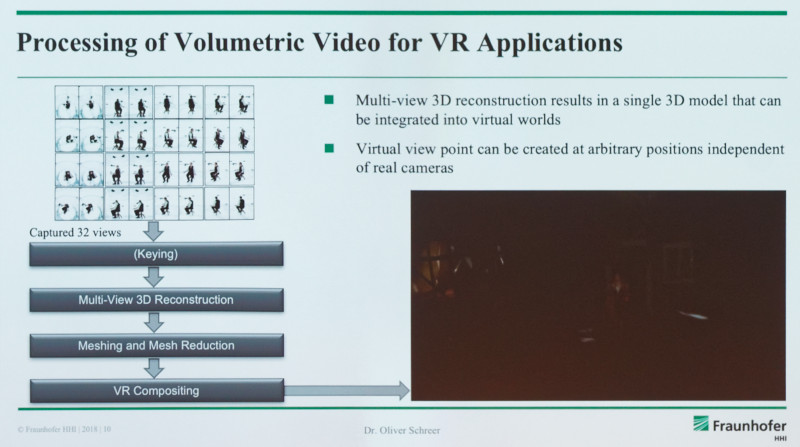

HHI processes this video data into a detailed model that can have up to 300 million facets. The system can also create dynamic scene lighting, and the team is investigating whether this lighting could be matched to a VR scene so that captured models look realistic when placed in it. A standard workflow has been developed, but processing currently takes around 12 hours per minute of captured video. There is plenty of scope to accelerate this step, and the group is working on a fully automated process.
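To put the quoted processing time in perspective, it can be turned into a ratio and a few simple estimates. This sketch assumes that processing time scales linearly with capture length and that any future acceleration divides it uniformly; both assumptions, and the function names, are hypothetical rather than part of HHI's workflow.

```python
# Rough processing-time estimates from the figure quoted in the talk:
# about 12 hours of processing per minute of captured video.
# Assumptions (hypothetical): linear scaling with capture length, and a
# uniform speed-up factor applied to the whole pipeline.

HOURS_PER_CAPTURED_MINUTE = 12.0


def processing_ratio(hours_per_minute: float = HOURS_PER_CAPTURED_MINUTE) -> float:
    """Minutes of processing needed per minute of captured video."""
    return hours_per_minute * 60


def processing_hours(capture_minutes: float, speedup: float = 1.0,
                     hours_per_minute: float = HOURS_PER_CAPTURED_MINUTE) -> float:
    """Estimated processing hours for a capture, optionally accelerated."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return capture_minutes * hours_per_minute / speedup


print(processing_ratio())                # 720.0
print(processing_hours(5))               # 60.0
print(processing_hours(5, speedup=10))   # 6.0
```

At 12 hours per captured minute the ratio is 720:1, so a five-minute capture would need about 60 hours of processing; a hypothetical tenfold speed-up would bring that down to about 6 hours.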

The capture can be used to create a 3D model. Image: Meko

The first prototype was completed in October 2017, and a commercial facility has since been developed at Studio Babelsberg (for more, see our report 3IT Holds an Open Day). A capture of the famous German boxing coach Ulli Wegner was used in a VR experience. (Check the trailer: https://www.youtube.com/watch?v=ZhhOJa8Lu-o)