Rob Koenen, VP of the VR Industry Forum (VRIF), gave a talk about immersive media. We had gone along to hear about lightfields, but unfortunately the event over-ran and we had another commitment, so we missed that talk. We did, though, get an interesting perspective on VR.

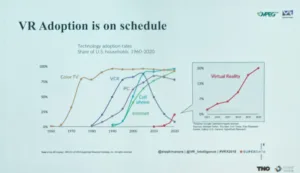

Koenen started by saying that the hype about VR is over (according to Gartner's most recent hype curve, it is past the trough of disillusionment) and that plenty is happening. Live virtual reality is a key feature. He sees 2018 as a turning point, with 5G as an enabler and driver, and forecasts from a range of analysts show big growth. He thinks that, so far, VR is being adopted in US households at a speed in line with other technologies.

The speed of adoption of VR matches other technologies (although we think that this includes Google's Cardboard, which is not much of an investment by consumers!). Image: Meko

There are now many services supporting VR technology and a lot of content, especially 360-degree VR. Koenen reported that Sky had told him that it is investing more in 360-degree video as it is 'starting to pay off'. VR is no longer seen as an experiment, so it is being used less for promotions.

VR is also being used in many non-entertainment applications and services, especially training and education, as well as healthcare. While applications have been developing, wider adoption has been enabled by the significant drop in the cost of headsets, with many cheaper options such as the Oculus Go, which is available from €230. Headsets are starting to go wireless and built-in tracking is starting to appear, which makes set-up more flexible and convenient.

Another development is that headsets are not essential for VR and AR, which are increasingly being viewed on 'flat devices' such as TVs, smartphones and tablets. Koenen described the experience as 'surprisingly compelling'.

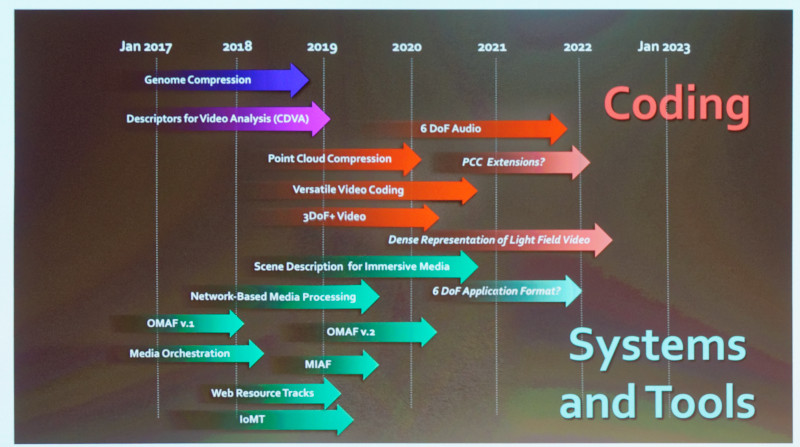

The ecosystems needed to create content are developing, with cameras being developed and improved and tools becoming integrated to make 360-degree video easier to produce. Qualcomm is building support for VR and AR into its chips as standard. Koenen believes that a couple more years of development are still needed, but standards are developing, which helps. MPEG has finalised the Omnidirectional Media Format (OMAF), although it has not yet been published. The 3GPP approved the first set of media profiles for VR360 streaming just this month, 3D VR is part of the initial release of 5G, and that technology will help to enable new applications. The VRIF published its 'Guidelines' in January.

The guidelines set out the VRIF's 'mission statement', which is:

- Consumers need high quality, cross-platform experiences with great content

- Content and service providers need a single format with wide reach

- Device makers need a ‘wealthy’ premium content pipeline

- Advertisers need to drive the creation of broad, unique and innovative sales channels

The VRIF plans to add recommendations for LiveVR in January 2019.

Koenen said that many factors still need to be optimised for VR. For example, some apps contain quite a lot of text and font legibility is a challenge, so work needs to be done in that area. Other features to be added in 2019 include watermarking, handling HDR and possibly some presentation APIs.

There is plenty of work needed on 3D tools and standards still. Image: Meko

In response to questions, Koenen said that for applications where the viewer is seated, three degrees of freedom (3DoF) is enough, while 6DoF is required if the viewer walks around. The applications are quite different. New studios are being developed to create content that looks good in 6DoF applications.
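
The difference between the two can be sketched in a few lines of Python. This is a minimal illustration of our own, not anything from the VRIF: the `Pose` type and `rendered_pose` function are hypothetical names. A 3DoF system uses only the three rotations (yaw, pitch, roll) reported by head tracking, while a 6DoF system also uses the three translations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    # Rotational degrees of freedom, in degrees.
    yaw: float = 0.0
    pitch: float = 0.0
    roll: float = 0.0
    # Translational degrees of freedom, in metres.
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def rendered_pose(tracked: Pose, six_dof: bool) -> Pose:
    """Return the pose the renderer actually uses (hypothetical helper).

    3DoF honours head rotation only, so the virtual camera stays pinned
    at the capture point; 6DoF also follows the viewer through space.
    """
    if six_dof:
        return tracked
    return Pose(yaw=tracked.yaw, pitch=tracked.pitch, roll=tracked.roll)


# A viewer turns 30 degrees and steps half a metre to the side.
head = Pose(yaw=30.0, x=0.5)
print(rendered_pose(head, six_dof=False))  # translation discarded
print(rendered_pose(head, six_dof=True))   # translation kept
```

For a seated viewer the discarded translation is small, which is why 3DoF is enough there; once the viewer walks around, ignoring it would make the scene appear to move with them.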

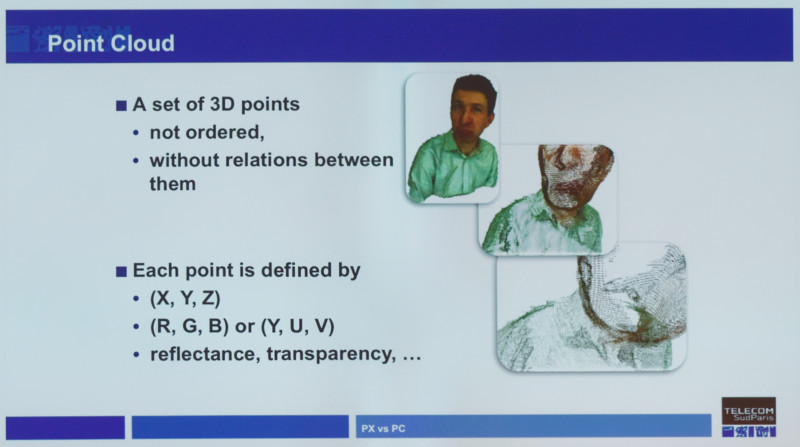

Point Clouds Explained and Compressed

In the second talk in the session, Marius Preda, an Associate Professor at the Institut MINES-Telecom, talked about point clouds. Preda is chair of the MPEG 3D committee. He started by explaining what a point cloud is. We're all used to the idea of pixels on flat display surfaces; point clouds are effectively the same thing in 3D.

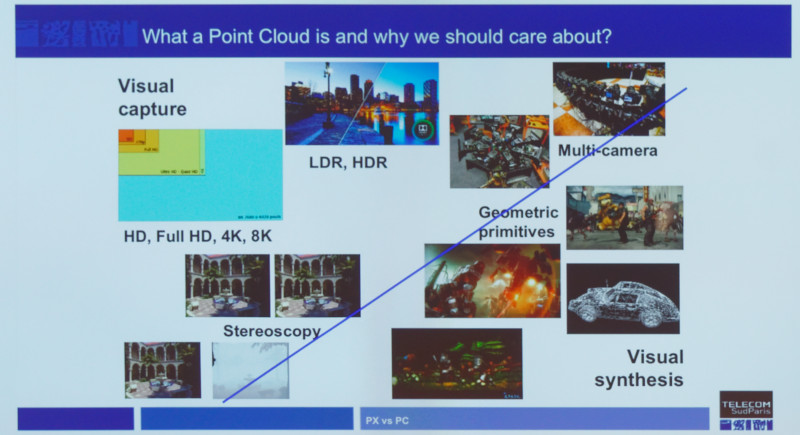

Why do you need them? Well, 3D solid objects are traditionally constructed and defined with geometry, so there is a divide between capture (in pixels) and creation (in geometry). Preda said that point clouds are, effectively, a convergence between the two worlds. Visually captured content is often easier to create, but geometry-based content is often better for interactivity and immersion.

At the moment, 2D is captured in pixels, 3D with geometry. Image: Meko

The points in a cloud are not ordered, and adjacent points are not related to each other. Each point is defined by its XYZ position and its colour (RGB or YUV), and can also carry surface and material properties such as reflectance and transparency.
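
Because a cloud is just an unordered collection of independent points, with none of the connectivity that a mesh stores between its vertices, a minimal model is a collection of point records. The sketch below is a hypothetical Python illustration: the `Point` type and `same_cloud` function are our own, colour is stored as RGB only, and optional attributes such as reflectance and transparency are left out. Comparing clouds as multisets shows that the order of the points carries no meaning.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen makes points hashable, so Counter can count them
class Point:
    x: float
    y: float
    z: float
    r: int
    g: int
    b: int


def same_cloud(a, b) -> bool:
    """Two clouds are equal if they contain the same points, in any order."""
    return Counter(a) == Counter(b)


cloud = [
    Point(0.0, 0.0, 0.0, 255, 0, 0),
    Point(1.0, 0.0, 0.0, 0, 255, 0),
    Point(0.0, 1.0, 0.0, 0, 0, 255),
]
shuffled = random.sample(cloud, k=len(cloud))  # same points, random order
print(same_cloud(cloud, shuffled))  # True
```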

Point clouds can produce a lot of data. For example, a point cloud of a person could contain 800,000 points, and the data involved could be more than a gigabyte if uncompressed. So, to make point clouds useful, they need to be compressed. When creating a virtual environment, you often don't need the whole thing to be in 3D: the background can be a flat plane, with just the objects moving in the environment made up of point clouds.
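
To see where the data volume comes from, here is a back-of-the-envelope calculation. The parameters are our assumptions, not figures from the talk: geometry quantised to 10 bits per coordinate, 8 bits per RGB channel, no other attributes and 30 frames per second. The function name `raw_bitrate` is hypothetical.

```python
def raw_bitrate(points: int, coord_bits: int, colour_bits: int, fps: float) -> float:
    """Uncompressed bit rate (bits per second) of a dynamic point cloud.

    Each point carries three coordinates and three colour channels;
    attributes such as reflectance or transparency are not counted.
    """
    bits_per_point = 3 * coord_bits + 3 * colour_bits
    return points * bits_per_point * fps


# Assumed parameters: 800,000 points, 10-bit geometry, 8-bit RGB, 30 fps.
rate = raw_bitrate(800_000, coord_bits=10, colour_bits=8, fps=30)
print(f"{rate / 1e9:.2f} Gbit/s")                 # 1.30 Gbit/s
print(f"{rate / 30 / 8 / 1e6:.1f} MB per frame")  # 5.4 MB per frame
```

Under these assumptions an 800,000-point sequence needs roughly 1.3 Gbit/s uncompressed; higher-precision coordinates or extra attributes push the figure up quickly.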

Point clouds can create a lot of data. Image: Meko

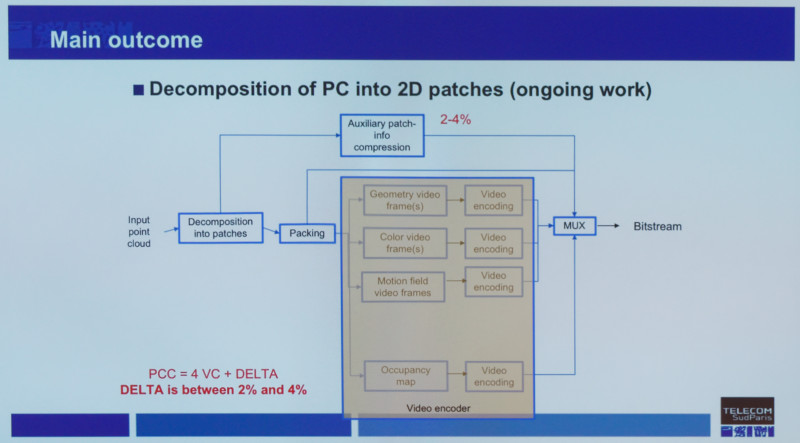

MPEG started working on point cloud compression, and in 2017 it made a call for proposals; the group started evaluating the proposals in October 2017. By October this year, MPEG wants a plan, after which the committee will create a draft. Clouds can be of different types, with static and dynamic clouds being the important ones. One of the approaches tried for compression was to divide the object into 2D patches that can be combined with depth maps. What was discovered was that, once you start to think of a point cloud in this way, you can very easily use a video codec.

Once you start to use a video codec, you can break the object down into:

- Geometry video frames

- Colour video frames

- Motion video frames

- An occupancy map
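
The idea of turning a cloud into geometry, colour and occupancy frames can be illustrated with a deliberately simplified projection. This is a hypothetical sketch, not the MPEG method: `project_to_frames` is our own name, it uses a single projection plane instead of many packed patches, it assumes the points already have integer (voxelised) x and y coordinates, and it does not produce motion frames. It does show why an occupancy map is needed: without it, a pixel with depth 0 would be indistinguishable from an empty one.

```python
def project_to_frames(points, width, height):
    """Project voxelised points onto the XY plane, looking along +z.

    points: iterable of (x, y, z, (r, g, b)) tuples with integer x and y.
    Returns (geometry, colour, occupancy) as row-major lists of lists.
    When several points land on one pixel, only the nearest (smallest z)
    is kept, so occluded points are lost in this single-plane sketch.
    """
    geometry = [[0] * width for _ in range(height)]
    colour = [[(0, 0, 0)] * width for _ in range(height)]
    occupancy = [[0] * width for _ in range(height)]
    for x, y, z, rgb in points:
        if not (0 <= x < width and 0 <= y < height):
            continue  # outside the projection window
        if occupancy[y][x] == 0 or z < geometry[y][x]:
            geometry[y][x] = z   # depth stored as a pixel value
            colour[y][x] = rgb   # colour of the visible point
            occupancy[y][x] = 1  # marks pixels that carry real data
    return geometry, colour, occupancy


pts = [(0, 0, 5, (255, 0, 0)), (0, 0, 2, (0, 255, 0)), (1, 1, 7, (0, 0, 255))]
geometry, colour, occupancy = project_to_frames(pts, width=2, height=2)
print(geometry)   # [[2, 0], [0, 7]]
print(occupancy)  # [[1, 0], [0, 1]]
```

Each output is an ordinary 2D image, which is why a standard video codec can compress it. The approach described in the talk divides the object into many 2D patches, which lets different parts of the surface be projected from different directions.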

Each of these sets of data is then encoded using video codecs. Some additional patch information is needed as metadata, but this adds an overhead of just 2% to 4%. Using this kind of technology, an 800K-point cloud can be delivered at a bit rate as low as 8Mbps with acceptable results (compared with 1Gbps if uncompressed).
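
The two bit rates quoted can be turned into a compression ratio, and the metadata overhead into a bit rate. This small Python helper (`compression_summary` is our own hypothetical name) assumes that the 2% to 4% is measured against the coded stream, which the talk did not specify.

```python
def compression_summary(raw_bps: float, coded_bps: float, overheads):
    """Return the compression ratio and the bit rate spent on patch metadata.

    Assumes each overhead fraction is a share of the coded stream.
    """
    ratio = raw_bps / coded_bps
    metadata_bps = [coded_bps * share for share in overheads]
    return ratio, metadata_bps


# Figures quoted in the talk: ~1 Gbit/s raw, 8 Mbit/s coded, 2-4% metadata.
ratio, meta = compression_summary(1e9, 8e6, overheads=(0.02, 0.04))
print(f"compression ratio ~{ratio:.0f}:1")      # ~125:1
print([f"{m / 1e3:.0f} kbit/s" for m in meta])  # ['160 kbit/s', '320 kbit/s']
```

On that reading, the patch metadata costs only a few hundred kbit/s, while the video codecs achieve roughly a 125:1 reduction.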

Point clouds can be compressed as four video streams with a small overhead. Image: Meko

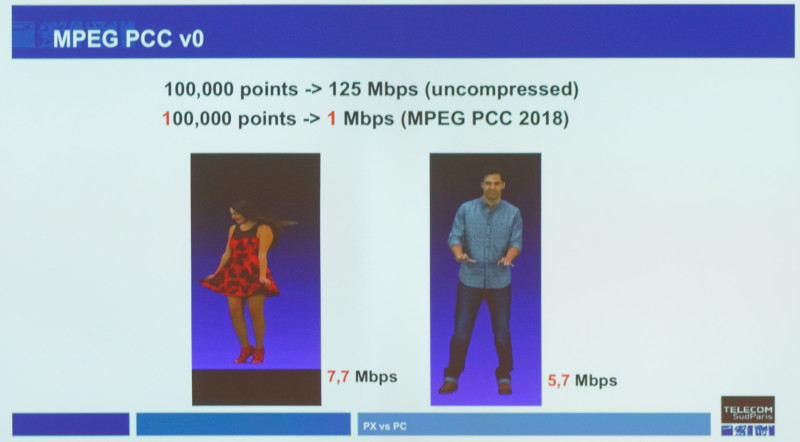

One of the great features of being able to use video codecs is that there is already plenty of infrastructure and a high volume of decoders. The additional cost of adding point cloud functions to existing decoders is small, and even some smartphones already have enough capability to act as decoders. The big push now is to get more content, and volumetric capture facilities are being developed.

MPEG is also looking at geometry-based approaches for the longer term, but it is not certain that they will be better or more efficient than this approach.

In answer to a question, Preda said that a standard is probably a year away. The first target is to be able to deliver 30fps with 8-bit colour.

Point cloud characters can be viewed from a range of angles. Image: Meko
