At SID's Display Week in 2022, I just missed (blame trade show email issues) a demonstration of some very intriguing technology from VividQ of Cambridge, UK. In early January, the firm held demos for potential customers and partners at its headquarters, and I travelled there to finally get a look.

The display technology, which is intended for use in AR and mixed reality headsets, breaks Alfred Poor’s 1st law of Holographic Displays: “no electronic display described as holographic ever is” 🙂. This one really does create a holographic image, which, among other things, eliminates vergence/accommodation conflict (VAC). VAC causes discomfort or fatigue when you look at an image that has apparent depth but is shown in a single focal plane, because your eyes converge at the object's apparent depth while having to focus on that fixed plane.

The firm has been developing the optics, the overall system design and the associated IP, as well as the software needed to create the holographic image.

One of the key challenges of holograms is that they need huge numbers of pixels and large amounts of data to drive them. To get around this and work within practical limits, the VividQ demo that I saw makes only a relatively small area at the centre of the image truly holographic and uses a conventional stereoscopic image for the rest. That’s fine, because VAC matters most at the centre of the image, where the user is usually looking. The company has technology to merge the two parts fairly seamlessly.

The Demo

In the demonstration, it was fairly obvious where the holographic part of the image was, but that was mainly because it was created using laser light sources, and there was some obvious ‘speckle’ in the texture of the image, which is very characteristic of laser-based displays. VividQ emphasised that the speckle was so apparent because the number of pixels its demo system could produce limited the techniques it could use to minimise the effect, and that the same limits would not apply to a more developed system. With more pixels, the speckle could be reduced, and a second, simpler demo system that I saw, using an LED light source, showed very good image quality (although I wouldn’t expect to see speckle from an LED source anyway, as it is an artefact of the coherence of laser light).

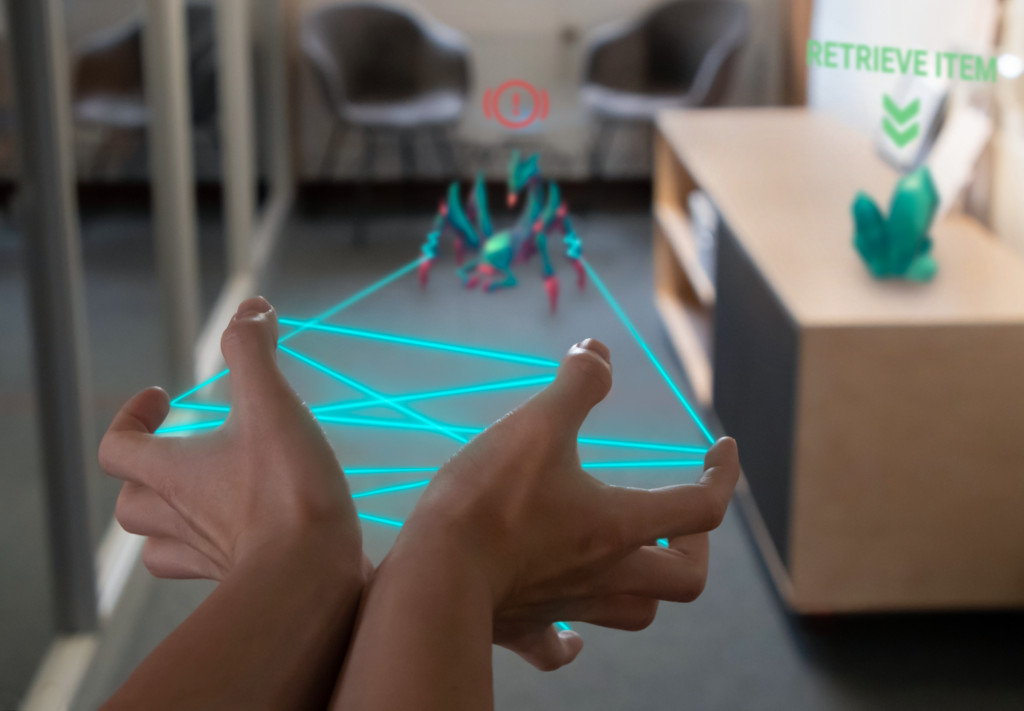

The main demonstration used a bulky fixed rig, standing in for a pair of AR glasses, to run some simple games and tasks. These involved, among other things, picking up virtual objects and moving them from one point to another, with hand tracking used to locate my finger. The impressive part of the demo was the strong sense that the virtual object was attached to my finger and could be brought very close to my face. Both the very close object and the more distant objects on the desktop looked very natural.

It’s in these very close viewing conditions that VAC is a major issue: the images seen by each eye differ greatly and the eyes have to converge strongly while still focusing on the display's single focal plane. With 3D images at a greater distance, there is less disparity between the eyes and less of a conflict. The demonstration made me think back to the many AR/MR systems I have tried, and I realised that very few of them showed anything closer than roughly arm’s length. The Varjo mixed reality experience has tended to be my reference, and even there, in simulated training, controls and instruments tend to sit where the hands would naturally be, 40 cm (16″) or more from the eyes.
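To put rough numbers on how much the eyes converge at close range, the sketch below uses standard binocular geometry: the vergence angle for a point straight ahead at distance d is 2·atan(IPD / 2d), and the focus demand is 1/d dioptres. It is a minimal illustration, not VividQ's model. It assumes a typical adult IPD of 63 mm and a hypothetical fixed focal plane at 2 m for a conventional stereoscopic headset (no focal distance is given for any real product here); the function names are illustrative.

```python
import math

# Assumption: a typical adult interpupillary distance (IPD) of 63 mm.
IPD_M = 0.063


def vergence_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m metres (symmetric convergence)."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def accommodation_mismatch_d(object_m: float, focal_plane_m: float) -> float:
    """Difference in dioptres between the focus demand of a virtual object
    (1/object_m) and that of a single fixed focal plane (1/focal_plane_m)."""
    return abs(1.0 / object_m - 1.0 / focal_plane_m)


if __name__ == "__main__":
    # Hypothetical fixed focal plane at 2 m for a single-plane stereo display.
    focal_plane_m = 2.0
    for d in (2.0, 0.4, 0.2, 0.1):
        print(f"{d:4.1f} m: vergence {vergence_deg(d):5.1f} deg, "
              f"mismatch {accommodation_mismatch_d(d, focal_plane_m):4.1f} D")
```

Under these assumptions, vergence is roughly 9° at 40 cm but about 35° at 10 cm, and the focus mismatch against a 2 m plane grows from 2 D to 9.5 D. That steep rise is why very close objects are where a genuinely holographic image pays off.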

VividQ gave us these outline specifications of the system:

- Display: 3D holographic display, DMD-based

- Resolution: > 30 ppd (pixels per degree)

- FoV: 50° overall (20° holographic)

- Eyebox: 12 mm × 8 mm

- Frame rate: 60 fps
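For a rough sense of scale, the arithmetic below turns the outline specs into pixel counts. It is a minimal sketch under assumptions VividQ did not state: the FoV figures are treated as linear angles along one axis, both regions are treated as square so that area scales with angle squared, and 30 ppd is used as the minimum resolution.

```python
# Minimal sketch: rough pixel budgets implied by the outline specs.
# Assumptions (not stated by VividQ): FoV values are linear angles along one
# axis, and both regions are square, so area scales with angle squared.
PPD = 30            # minimum pixels per degree (spec says "> 30 ppd")
FOV_TOTAL_DEG = 50  # overall field of view
FOV_HOLO_DEG = 20   # holographic central region


def pixels_across(fov_deg: float, ppd: float = PPD) -> int:
    """Resolved pixels needed along one axis at the given angular resolution."""
    return round(fov_deg * ppd)


def area_fraction(inner_deg: float, outer_deg: float) -> float:
    """Share of the (assumed square) field covered by the inner region."""
    return (inner_deg / outer_deg) ** 2


if __name__ == "__main__":
    print("pixels across, full FoV:", pixels_across(FOV_TOTAL_DEG))
    print("pixels across, holographic region:", pixels_across(FOV_HOLO_DEG))
    share = area_fraction(FOV_HOLO_DEG, FOV_TOTAL_DEG)
    print(f"holographic share of field area: {share:.0%}")
```

On these assumptions the full field needs at least 1,500 pixels across and the holographic region 600, with the holographic part covering only about 16% of the field area. These count resolved pixels in the final image, not the imager pixels needed to compute and display the hologram itself.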

The field of view is mainly limited by the waveguide in use. The waveguide in the demo that I saw was from Dispelix of Finland, and the two firms announced on 17th January 2023 that they were working together to develop and manufacture 3D waveguides using VividQ technology. Dispelix has a number of development agreements covering different head-mounted systems, as we have previously reported on Display Daily.

The demonstration system used a DMD from TI as the imager, but the firm told us that it can also get good results using LCOS devices, which, of course, are more widely available. However, for the firm’s technology to work, the imager has to be a light modulator rather than an emissive display, so, as it stands, it wouldn’t work with self-emissive displays such as OLEDs or microLEDs.

Lots of Pixels

As mentioned earlier, a truly holographic display needs a lot of pixels, and that means a lot of compute power to calculate the values that drive them. At the moment, the compute power needed is beyond the GPUs in typical HMDs, so the technology is currently suited to ‘tethered’ applications where a PC-level GPU is available. VividQ has done a lot of work to optimise its algorithms and reduce the power the display needs, and hopes that in the future the processing can migrate into head-mounted devices.

Business Model

VividQ wants to be a developer and licenser of IP rather than a manufacturer. It has raised seed funding from a range of companies around the world and expects to approach investors for a Series A round ‘soonish’.

Although the company is talking about other possible uses for its technology, for example in automotive applications, it sees the biggest advantages in near-eye displays for XR applications.