One of the big areas of focus at the SMPTE conference was the need to ensure that the wide range of luminance and color carried in HDR signals maps correctly onto SDR displays with lower luminance ranges and smaller color volumes. This process is called color volume transformation, and it includes both color mapping and luminance mapping.

The problem is particularly difficult because the transformation changes both the luminance range and the color volume. Human perception of color is heavily influenced by brightness, so the brightness and color transformations are interdependent and cannot be handled separately.

In HDR, the content is likely to be mastered in a wide color gamut (typically P3 today) and a wide luminance range, perhaps up to 4,000 nits. The TVs and other devices on which the HDR content is played back will have lower luminance ranges, but could have similar, larger or smaller color volume capabilities compared to the mastering display. Accurate color volume transformation therefore needs metadata, ideally dynamic metadata that allows optimization on a scene-by-scene or even clip-by-clip basis.

A working group has been formed within SMPTE to work on standards around dynamic metadata to help facilitate accurate color volume transformations and luminance mapping. The group is developing SMPTE standard ST 2094, which covers delivering an HDR signal with dynamic metadata that carries the transform information. The goal of the conversion is to present colors on the smaller-volume display without introducing objectionable distortions in lightness, chroma, saturation and hue.

That is not so easy to do. For example, high brightness can increase colorfulness and smaller viewing angles can increase perceived contrast, while shadow details are changed by the black level capabilities of the display, including flare and glare. Ambient lighting also raises the black level, reducing contrast and desaturating colors. Even the overall brightness level of the picture can change our perception, as the eye may move into a different adaptation range compared to the mastering environment.

But one of the key benefits of HDR is the extended range of colors and luminance it offers, so identifying where the important tonal information is in a scene will create a better transform. This is the idea behind dynamic metadata. Dynamic metadata needs to be created in the mastering process of the HDR content. This metadata can then be used to do accurate color transforms from the distributed HDR content to smaller color volume SDR TVs.

ST 2094 is a multi-part standard covering multiple metadata formats (called applications) and at least one representation for the carriage of this metadata. The metadata provides the information needed to transform HDR or WCG content prior to presentation on displays whose color volumes are smaller than that of the mastering display. Color transforms can be specified for any arbitrary time interval on the timeline, such as scene-by-scene or frame-by-frame.
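
To make the idea of per-interval transforms concrete, here is a minimal Python sketch of a timeline of transform parameters that a player could look up for each frame. It does not reproduce the ST 2094 syntax: the `TransformInterval` class, its field names, the `find_transform` helper and the `pivot` parameter are all hypothetical names chosen for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class TransformInterval:
    """Hypothetical container, not an ST 2094 structure."""
    start_frame: int  # first frame covered (inclusive)
    end_frame: int    # end of the interval (exclusive)
    params: dict      # illustrative transform parameters


def find_transform(intervals, frame):
    """Return the interval covering `frame`, or None if there is none.

    Assumes `intervals` is sorted by start_frame and non-overlapping.
    """
    starts = [iv.start_frame for iv in intervals]
    i = bisect_right(starts, frame) - 1
    if i >= 0 and frame < intervals[i].end_frame:
        return intervals[i]
    return None


# A scene-long interval, a single-frame interval, then a gap.
timeline = [
    TransformInterval(0, 240, {"pivot": 80.0}),
    TransformInterval(240, 241, {"pivot": 150.0}),
    TransformInterval(300, 500, {"pivot": 60.0}),
]
print(find_transform(timeline, 120))
print(find_transform(timeline, 250))
```

The same lookup covers both scene-by-scene and frame-by-frame use: a frame-accurate transform is simply an interval one frame long.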

The group is considering two ways to create the dynamic metadata:

- Parametric mapping

- Reference-based numerical mapping

The two models can be used in many different workflows.

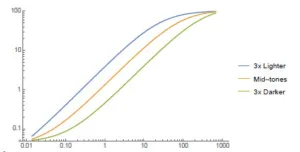

In the first approach, the color volume transform is divided into tone (luminance) mapping and gamut (chromaticity) shaping. Tone mapping typically follows an s-shaped curve that has a linear range in the mid-tones and a more logarithmic shape in the dark and bright regions. A colorist might apply one of the three curves noted in the figure below depending upon the average luminance level of the scene or clip. Or, they might apply one of these curves to a specific part of the scene. Unique tone curves are also not uncommon in the color grading process.
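
As a rough illustration of such a curve, the Python sketch below uses a simple sigmoid of the form L^n / (L^n + p^n), which is s-shaped when plotted against log luminance. This is an assumed stand-in, not one of the curves from the figure or from ST 2094; the function name `s_curve_tone_map`, the `pivot` and `contrast` parameters, and the 4,000-nit and 100-nit peaks are illustrative choices.

```python
def s_curve_tone_map(nits, src_peak=4000.0, dst_peak=100.0,
                     pivot=100.0, contrast=1.0):
    """Map an HDR luminance (nits) to an SDR luminance (nits).

    Illustrative sigmoid: roughly linear in log luminance around `pivot`,
    compressing toward black and toward the peak. All parameters are
    hypothetical, not taken from any standard.
    """
    if nits <= 0.0:
        return 0.0
    x = min(nits, src_peak)  # clamp anything above the mastering peak

    def response(v):
        return v ** contrast / (v ** contrast + pivot ** contrast)

    # Normalize so that the source peak lands exactly on the target peak.
    return dst_peak * response(x) / response(src_peak)


# A lower pivot lifts mid-tones (darker scenes); a higher pivot
# holds back highlights (brighter scenes).
for scene_pivot in (50.0, 100.0, 200.0):
    mapped = [round(s_curve_tone_map(v, pivot=scene_pivot), 2)
              for v in (1.0, 18.0, 100.0, 1000.0, 4000.0)]
    print(scene_pivot, mapped)
```

Choosing the pivot from the average luminance of the scene is one way to mimic the idea of selecting among a few curves per scene or clip.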

Chromaticity shaping or gamut mapping is designed to allow the wider colors of the HDR content to show as well as possible in the smaller color capabilities of the SDR display. Colors that are within the native color space of the SDR display should show properly, but what about colors that are out-of-gamut, or beyond the native color capabilities of the SDR display?

Usually, these out-of-gamut colors are clipped along a line from the out-of-gamut color back toward D65 white, seeking to keep the saturation as deep as possible. But a lot of color processing is needed to do this accurately without changing the hue of the color. The use of parametric formulas can help improve how these out-of-gamut colors are rendered. ST 2094 committee members such as Jim Houston and Lars Borg recommend doing this in a hue-preserving color space such as CIELUV, CIELAB, CIECAM02 or IPT, among others. Which color space to use for these transformations is an area of some debate right now.

In the parametric mapping approach, the metadata to create an SDR image from the HDR master is derived in the mastering process. This means doing the HDR and SDR grades simultaneously, viewing each grade on its own monitor.

Alternatively, in the reference-based numerical derivation method, the metadata is created after the HDR and SDR masters are done, when no description of the connection between the two grades exists. In this case an iterative process is used that starts with a set of metadata for transforming the HDR grade to an SDR grade. The transformed grade is compared to the original SDR grade and the metadata parameters are adjusted, with the process repeating until an acceptable match is obtained. At this point the original SDR master can be discarded and replaced with a single HDR master plus the derived metadata.
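
The loop described above can be sketched in Python as a simple search over one parameter. Everything here is an assumption made for illustration: the single `pivot` parameter, the `apply_curve` tone curve, the mean-squared-error comparison and the step-halving search are stand-ins, not the method used by the working group. A real derivation would adjust many parameters and compare full images.

```python
def apply_curve(nits, pivot, src_peak=4000.0, dst_peak=100.0):
    """Hypothetical one-parameter tone curve (HDR nits -> SDR nits)."""
    x = min(max(nits, 0.0), src_peak)
    return dst_peak * (x / (x + pivot)) / (src_peak / (src_peak + pivot))


def mismatch(hdr, sdr, pivot):
    """Mean squared error between the transformed HDR and the SDR grade."""
    return sum((apply_curve(h, pivot) - s) ** 2
               for h, s in zip(hdr, sdr)) / len(hdr)


def fit_pivot(hdr, sdr, pivot=50.0, step=25.0, tol=1e-6, max_iter=200):
    """Adjust `pivot` until the transformed HDR samples match the SDR ones."""
    err = mismatch(hdr, sdr, pivot)
    for _ in range(max_iter):
        if err <= tol or step < 1e-9:
            break  # acceptable match, or nothing left to refine
        improved = False
        for cand in (pivot + step, pivot - step):
            if cand <= 0.0:
                continue
            cand_err = mismatch(hdr, sdr, cand)
            if cand_err < err:
                pivot, err, improved = cand, cand_err, True
                break
        if not improved:
            step *= 0.5  # neither direction helped: take smaller steps
    return pivot, err


# Stand-in data: luminance samples from an HDR grade, and an "SDR grade"
# synthesized with a pivot of 120 that the search does not know about.
hdr_samples = [1.0, 10.0, 50.0, 100.0, 500.0, 1000.0, 4000.0]
sdr_samples = [apply_curve(h, 120.0) for h in hdr_samples]
print(fit_pivot(hdr_samples, sdr_samples))
```

Once the mismatch falls below the chosen tolerance, the fitted parameters are what would be kept alongside the HDR master in place of the separate SDR grade.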