They have invested in tools, processors, and vision

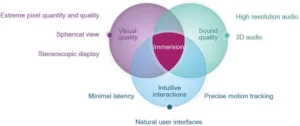

Immersion enhances everyday experiences, making them more realistic, engaging, and satisfying. Qualcomm thinks VR will provide the ultimate level of immersion, creating a sense of physical presence in real or imagined worlds. They see VR bringing a new paradigm for how we can interact with the world, offering unprecedented experiences and unlimited possibilities that will enhance our lives in many ways.

This promise of VR has excited us for decades, and many people think it’s happening now due to the alignment of ecosystem drivers and technology advancements. In fact, it is the mobile technologies that are accelerating VR adoption through products like Cardboard and Gear.

To stimulate our human senses with realistic feedback, truly immersive VR requires visual quality, sound quality, and content that doesn’t thwart our intuitive interactions. Adding to the complexity, mobile VR requires full immersion at low power and thermals so that the headset is lightweight and stylish.

It also requires a system approach on the part of the suppliers. As a matter of fact, that is one of the main reasons we’re so enthusiastic about Sony. Qualcomm, too, is looking at the whole gestalt of VR, including custom designing specialized engines across the SoC, and emphasizing the heterogeneous computing structure of its Snapdragon SoC as being optimized from end to end for VR.

Qualcomm emphasizes extreme pixel quantity and quality, and says they are required on VR headsets for several reasons, most of which can be explained by the human visual system. For immersive VR, the entire field of view (FOV) needs to be the virtual world, otherwise you will not believe that you are actually present there. The combination of the human eye having a wide FOV and the fovea (the part of the retina responsible for sharp vision) having high visual acuity means that very high resolution is required.
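
The resolution argument can be made concrete with a back-of-the-envelope sketch. The numbers below are assumptions for illustration, not Qualcomm figures: an acuity of about 60 pixels per degree (roughly one arcminute per pixel, a commonly quoted value for 20/20 vision) and a 100 × 100 degree field of view per eye. The sketch also assumes uniform angular pixel density, which a flat panel behind a lens does not strictly provide.

```python
def pixels_for_fov(fov_h_deg, fov_v_deg, pixels_per_degree):
    """Pixels per eye needed to cover a field of view at a uniform angular density."""
    width = round(fov_h_deg * pixels_per_degree)
    height = round(fov_v_deg * pixels_per_degree)
    return width, height, width * height

# Assumed values, chosen only to illustrate the scale of the problem.
ASSUMED_ACUITY_PPD = 60        # pixels per degree, about 1 arcminute per pixel
ASSUMED_FOV_DEG = (100, 100)   # horizontal, vertical field of view per eye

w, h, total = pixels_for_fov(*ASSUMED_FOV_DEG, ASSUMED_ACUITY_PPD)
print(f"{w} x {h} = {total / 1e6:.0f} megapixels per eye")
```

Under these assumptions the result is 6000 × 6000 pixels per eye, far beyond what a phone-class display offers, which is why pixel quantity gets so much emphasis.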

Can you see me now?

In a VR headset, the display is brought close to the eyes, and biconvex lenses in the headset help magnify the screen further so that the virtual world is the entire FOV. As the screen takes up more of the FOV, pixel density and quality must increase to maintain presence. Otherwise, you will see individual pixels (known as the screen door effect) and no longer feel present in the virtual world; you’ll be looking at it, instead of being in it.

One potential approach to help reduce the quantity of pixels processed is foveated rendering. Foveated rendering exploits the falloff of acuity in the visual periphery by rendering high resolution where the eye is fixated and lower resolution everywhere else, thus reducing the total pixels rendered.
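
A rough sketch of the potential saving, under simplifying assumptions that are not from the article: a square field of view, a square full-resolution inset around the fixation point, and a periphery rendered at a fixed fraction of the full linear resolution. The function name and the example values are hypothetical.

```python
def foveated_pixel_fraction(fov_deg, fovea_deg, periphery_scale):
    """Fraction of the full-resolution pixel count that is actually rendered.

    fov_deg:         side of the (assumed square) field of view, in degrees
    fovea_deg:       side of the (assumed square) full-resolution inset, in degrees
    periphery_scale: linear resolution of the periphery relative to the inset (0..1)
    """
    full_area = fov_deg ** 2
    inset_area = fovea_deg ** 2
    # Pixel count scales with the square of the linear resolution.
    periphery_pixels = (full_area - inset_area) * periphery_scale ** 2
    return (inset_area + periphery_pixels) / full_area

# Example: 100-degree FOV, 20-degree inset, periphery at half linear resolution.
fraction = foveated_pixel_fraction(100, 20, 0.5)
print(f"Rendered pixels: {fraction:.0%} of the full-resolution count")
```

With these example values only 28% of the full-resolution pixels are rendered. Real implementations typically use several resolution bands and need eye tracking to know where the inset belongs.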

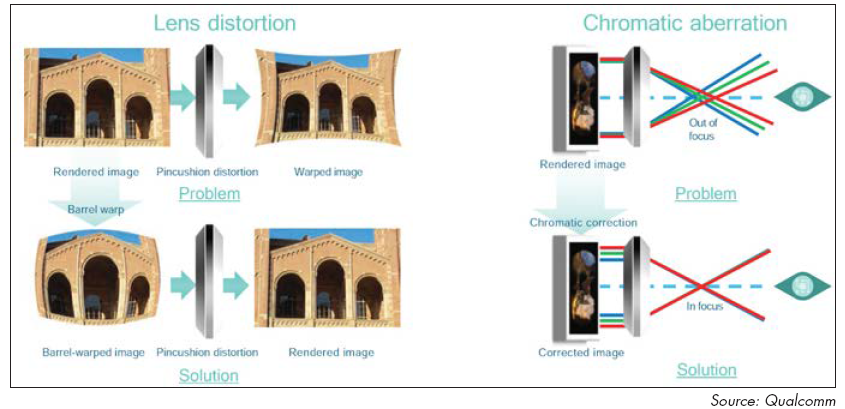

It’s not easy. A wide-angle biconvex lens creates pincushion distortion, so a barrel distortion must be applied to the rendered image to compensate. In addition, further visual processing is required to correct for chromatic aberration, which causes colors to be focused at different positions in the focal plane.
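
Both corrections can be sketched as a radial warp of normalized image coordinates. This is a minimal illustration using a common polynomial distortion model, not Qualcomm's implementation; the coefficient `ASSUMED_K1` and the per-channel scale factors are made-up values that a real system would derive from the measured lens characteristics.

```python
def radial_warp(x, y, k1, k2=0.0):
    """Scale a point radially about the lens center at (0, 0).

    With negative coefficients, points far from the center are pulled inward,
    which produces the barrel shape that offsets the lens's pincushion distortion.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale

# Hypothetical values for illustration only.
ASSUMED_K1 = -0.2
ASSUMED_CHANNEL_SCALE = {"red": 0.99, "green": 1.0, "blue": 1.01}

def prewarp_per_channel(x, y):
    """Apply the barrel warp, then a slightly different radial scale per color
    channel so the lens refocuses the three colors onto the same point."""
    wx, wy = radial_warp(x, y, ASSUMED_K1)
    return {ch: (wx * s, wy * s) for ch, s in ASSUMED_CHANNEL_SCALE.items()}

print(prewarp_per_channel(1.0, 0.0))
```

The center of the image is left untouched, and the correction grows toward the edges, where both lens distortion and color fringing are strongest.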

Qualcomm’s View of the VR World

While wearing a VR headset, the user is free to look anywhere in the virtual world. As a result, VR needs to provide a full 360-degree spherical view, which means generating even more pixels as compared to a fixed view of the world. Although a 360-degree spherical view is not new for gaming, it is new for video and is being driven by VR. Multiple 360-degree spherical video formats exist in the industry, such as equirectangular, cube-map, and pyramid-map, so it is important to support them all. Besides the video decoder being able to handle a high bit-rate, the GPU must also warp each image of the video so that it maps correctly to the display. Most premium 360-degree spherical video will be high resolution and content protected, so it is also important that the GPU supports digital rights management (DRM) extensions.
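
As an illustration of the warp step for the equirectangular format, the sketch below maps a view direction to texture coordinates in the video frame. The axis convention (negative z forward, positive y up) and the function name are assumptions made for this example; on a real device this lookup would run per pixel on the GPU.

```python
import math

def direction_to_equirect_uv(x, y, z):
    """Map a unit view direction to (u, v) in [0, 1] on an equirectangular frame.

    Assumed convention: -z is forward, +y is up, +x is right.
    u = 0.5, v = 0.5 is the point straight ahead; v = 0 is the top of the frame.
    """
    longitude = math.atan2(x, -z)                    # -pi..pi, 0 is straight ahead
    latitude = math.asin(max(-1.0, min(1.0, y)))     # -pi/2..pi/2, clamped for safety
    u = longitude / (2.0 * math.pi) + 0.5
    v = 0.5 - latitude / math.pi
    return u, v

print(direction_to_equirect_uv(0.0, 0.0, -1.0))  # looking straight ahead
print(direction_to_equirect_uv(1.0, 0.0, 0.0))   # looking to the right
```

Cube-map and pyramid-map formats need a different lookup, which is one reason supporting every format adds work.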

Seeing the world in 3D is key to immersion. A VR headset shows slightly different images to each eye. These images are generated from different viewpoints, ideally the actual separation between the human eyes, so that objects in the scene appear at the right depth. Since stereographic display requires generating and processing an image per eye, this is another reason for extreme pixel quantity. For VR, you need to generate the appropriate view for each eye with stereographic rendering and video.
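
A minimal sketch of how the two viewpoints can be derived from a single head position. The interpupillary distance of 63 mm is an assumed typical adult value, and the function and parameter names are hypothetical.

```python
ASSUMED_IPD_M = 0.063  # assumed interpupillary distance in meters

def eye_positions(head_pos, right_axis, ipd=ASSUMED_IPD_M):
    """Return (left_eye, right_eye) positions for stereo rendering.

    head_pos:   (x, y, z) midpoint between the eyes
    right_axis: unit vector pointing to the wearer's right
    """
    half = ipd / 2.0
    left = tuple(p - half * r for p, r in zip(head_pos, right_axis))
    right = tuple(p + half * r for p, r in zip(head_pos, right_axis))
    return left, right

left_eye, right_eye = eye_positions((0.0, 1.7, 0.0), (1.0, 0.0, 0.0))
print(left_eye, right_eye)
```

The scene is then rendered once from each position, which is what doubles the pixel workload.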

Problem & Solution for both lens distortion and chromatic aberration

And then there’s sound

VR also requires high-resolution audio and 3D audio. The sampling frequency and bits per sample need to match human hearing capabilities to create high-fidelity sound that is truly immersive.

Realistic 3D positional audio makes sound accurate to the real world and much more immersive. This goes beyond surround sound because the sound adjusts dynamically based on your head position and the location of the sound source. A head-related transfer function (HRTF) attempts to model how humans hear sound. The HRTF takes into account typical human facial and body characteristics, such as the location, shape, and size of the ear, and is a function of frequency and three spatial variables. Positional audio requires the HRTF and a 3D audio format, such as scene-based audio or object-based audio, so that the sound arrives properly to the ears.

Interacting/interfacing in VR

The primary VR headset input method is head movement. Turning your head to look around is natural and intuitive. Other input methods, such as gestures, a control pad, and voice, are also being investigated by the VR industry. Figuring out the right input method is an open debate and a large research area.

A headset should be free from wires so that the user can move freely and not be tethered to a fixed-location power outlet or computer. And since the headset is a wearable device directly contacting the skin of your head, it must remain cool to the touch.

Also, a high-bandwidth wireless connection is required. High-bandwidth technologies like LTE Advanced, 802.11ac Wi-Fi, and 802.11ad Wi-Fi allow for fast downloads and smooth streaming.

Head and motion tracking

The user interface needs accurate on-device motion tracking so that the user can interact with and move freely in the virtual world. For example, when turning your head to explore the virtual world, accurate head tracking is needed to provide the pose to generate the proper visuals (and sounds). Similarly, if your head is stable and not moving, the visuals need to stay completely still; otherwise it may feel like you are on a boat. Motion is often characterized by how many degrees of freedom are possible in movement: either 3 degrees of freedom (3-DOF) or 6 degrees of freedom (6-DOF).

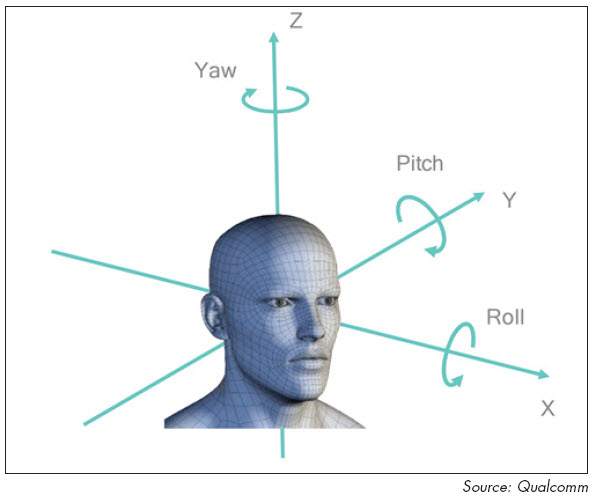

3DOF (X-Y-Z) and 6DOF 3 plus yaw, roll and pitch

Rotational movement around the X, Y, and Z axes is detected by 3-DOF—the orientation. For head movements, that means being able to yaw, pitch, and roll your head, while keeping the rest of your body in the same location. Rotational movement and translational movement are detected by 6-DOF—the orientation and position.
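
The difference can be expressed as a small data-structure sketch. The class and field names are hypothetical and are not taken from any SDK.

```python
from dataclasses import dataclass

@dataclass
class Pose3DOF:
    """Orientation only: rotation about the three axes, in degrees."""
    yaw: float
    pitch: float
    roll: float

@dataclass
class Pose6DOF(Pose3DOF):
    """Orientation plus position: adds translation along the three axes, in meters."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0

# Turning the head changes only the rotational fields.
looking_left = Pose3DOF(yaw=-45.0, pitch=0.0, roll=0.0)
# Stepping forward changes the position, which only a 6-DOF tracker can report.
stepped_forward = Pose6DOF(yaw=-45.0, pitch=0.0, roll=0.0, z=-0.5)
print(looking_left, stepped_forward)
```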

One solution to provide precise on-device motion tracking is visual-inertial odometry (VIO). VIO fuses on-device camera and inertial sensor data to generate an accurate 6-DOF pose. The on-device solution allows the VR headset to be completely mobile, so you can enjoy room-scale VR and not worry about being tethered to a PC or getting tangled in wires.

Oooh, I don’t feel so good

Any lag in the user interface, whether it is the visual or audio, will be very apparent to the user and impact the ability to create immersion—plus, it may make the user feel sick. Reducing system latency is key to stabilizing the virtual world as the user moves. One of the biggest challenges for VR is the amount of time between an input movement and the screen being updated, which is known as “motion-to-photon” latency. The total motion-to-photon latency must be less than 20 ms for a good user experience in VR. To put this challenge in perspective, a display running at 60 Hz is updated every 17 ms, and a display running at 90 Hz is updated every 11 ms.
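
The refresh intervals quoted above follow directly from the refresh rate. The short sketch below reproduces them and shows how little of the 20 ms budget is left once a single refresh interval is accounted for; the function name is hypothetical.

```python
MOTION_TO_PHOTON_BUDGET_MS = 20.0  # target stated in the text

def frame_period_ms(refresh_hz):
    """Time between display updates, in milliseconds."""
    return 1000.0 / refresh_hz

for hz in (60, 90):
    period = frame_period_ms(hz)
    remaining = MOTION_TO_PHOTON_BUDGET_MS - period
    print(f"{hz} Hz: {period:.1f} ms per refresh, "
          f"{remaining:.1f} ms of the budget left after one refresh")
```

At 60 Hz a single refresh interval consumes about 16.7 ms, leaving roughly 3.3 ms for everything else, which is why higher refresh rates and the latency shortcuts discussed next matter so much.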

There are many processing steps required before updating the display. The end-to-end path includes sampling the sensors, sensor fusion, view generation, render/decode, image correction, and updating the display. To minimize the system latency, an end-to-end approach that reduces the latency of individual processing tasks, that runs as many tasks in parallel as possible, and that takes the appropriate shortcuts for processing tasks is needed. An optimized solution requires hardware, software, sensors, and display all working in harmony. Knowledge in all these areas is required to make the appropriate optimizations and design choices. Possible optimizations to reduce latency include techniques such as high sensor-sampling speeds, high frame rates, hardware streaming, late latching, asynchronous time warp, and single-buffer rendering.
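
Asynchronous time warp, one of the techniques listed above, re-projects an already rendered frame using the most recent head pose just before it is shown. The sketch below is a deliberately simplified, yaw-only version that approximates the re-projection as a horizontal pixel shift; the function name, the uniform pixels-per-degree assumption, and the example values are all illustrative and do not describe a specific implementation.

```python
def timewarp_shift_px(render_yaw_deg, latest_yaw_deg, pixels_per_degree):
    """Horizontal shift (in pixels) to apply to a frame rendered at render_yaw_deg
    so that it matches the head pose latest_yaw_deg sampled just before scan-out.

    Positive yaw is assumed to mean turning right; the image then has to move
    left, so the returned shift is negative.
    """
    # Wrap the difference into [-180, 180) so that 359 -> 1 degree counts as +2.
    delta = (latest_yaw_deg - render_yaw_deg + 180.0) % 360.0 - 180.0
    return -delta * pixels_per_degree

# The head turned 2 degrees to the right while the frame was being rendered.
print(timewarp_shift_px(10.0, 12.0, 15.0))
```

A full implementation handles pitch and roll as well and warps the image on the GPU, but the principle is the same: correct the finished frame with pose data that is newer than the data used to render it.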

Wrapping it up into an SDK

Qualcomm has been thinking about and working on these issues for years. The company thinks the time is right for VR and is introducing the Snapdragon VR SDK. It should be available to developers in the second quarter of 2016. Developers will be able to use commercially available Snapdragon 820 VR devices to see how VR applications run on real hardware. The Snapdragon VR SDK introduces new APIs optimized for VR, including:

- DSP sensor fusion: Provides precise low-latency 6-DOF motion tracking, real time and predicted, via fusion processing on the Hexagon DSP for improved responsiveness

- Asynchronous time warp: Warps the image based on the latest head pose just prior to scan-out

- Chromatic aberration correction: Corrects color distortion based on lens characteristics

- Lens distortion correction: Barrel warps the image based on lens characteristics

- Stereoscopic rendering: Generates left and right eye view, up to 3200 × 1800 at 90 fps

- Single-buffer rendering: Renders directly to the display buffer for immediate display scan out

- UI layering: Generates menus, text and other overlays such that they appear properly in a VR world (undistorted)

- Power and thermal management: Qualcomm Symphony System Manager SDK for CPU, GPU, and DSP power and performance management to consistently hit 90 fps
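
To put the stereoscopic rendering figure in the list above in perspective, the sketch below works out the raw pixel throughput it implies. It assumes that 3200 × 1800 is the combined resolution for both eyes, which the list does not state explicitly.

```python
def pixel_throughput(width, height, fps):
    """Return (pixels per frame, pixels per second) for a given render target."""
    per_frame = width * height
    return per_frame, per_frame * fps

per_frame, per_second = pixel_throughput(3200, 1800, 90)
print(f"{per_frame / 1e6:.2f} megapixels per frame, "
      f"{per_second / 1e6:.1f} megapixels per second")
```

That works out to 5.76 megapixels per frame and 518.4 megapixels per second, before lens correction and time warp touch the same pixels again.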

Other new Snapdragon 820 features that benefit VR include:

- Several enhancements to achieve < 18 ms motion-to-photon latency

- High-quality 360-degree spherical video at 4K 60 fps, for both HEVC and VP9

- 3D positional audio for more immersive video and gaming experiences

- Ultra-fast connectivity through LTE Advanced, 802.11ac, and 802.11ad

Qualcomm thinks we’re on the verge of consumer VR becoming a reality. After several false starts, ecosystem drivers and technology advancements are aligning to make VR possible, and the mobile industry will lead the way. The company thinks it is well positioned to meet these extreme requirements by designing efficient solutions through custom-designed processing engines, efficient heterogeneous computing, and optimized end-to-end solutions.

– Jon Peddie