The Nvidia keynote was the opening keynote of CES, so the first part of the session was a pitch for CES itself, highlighting the show’s 50-year history. CTA president Gary Shapiro then came on to welcome the keynote speaker, Jen-Hsun Huang of Nvidia, who appeared in his regulation black leather jacket. Gaming has moved from a niche to the biggest entertainment business in the world, Huang said, and Nvidia’s GPU has not only enabled graphics but has also become essential for high performance computing and AI.

Next we got the obligatory video showing how technology could be used for medicine, automotive and space exploration.

Now is an exciting time, Huang continued: what was once science fiction is rapidly becoming real. He said there are four areas that he wanted to focus on. Video games are the largest and most processing-intensive computing market, but even the power used in that application has to be boosted to create VR, AR and mixed reality. You also need AI computing and cloud technology; supercomputing is a big area, and every query on the internet uses AI in the cloud. The fourth area is autonomous cars.

Deep Learning

The development of ‘deep learning’ has brought a big change, as researchers and developers use neural networks to boost performance. This has led to a breakthrough in artificial intelligence (AI). As an example, Huang explained how layers of knowledge and analysis are built up in face recognition, a typical application that has benefited from neural networks. There was one enormous challenge, he said: the sheer amount of computing power needed. However, when researchers discovered the power of the GPU, that helped a lot.

Computers can now learn how to play ‘Go’ (the most complex human game), how to play ‘Doom’ and even how to learn the painting styles of the great masters. Voices can be synthesised, and a network can recognise an image, understand the context and capture data and actions. Networks have learned how to translate languages; robots have learned how to walk; even cars have been taught how to drive, an amazingly complex process that few of us could easily describe. All these things were impossible until recently. Video, audio and language can combine in new ways, enabled by the technology.

GPUs are the Drivers (pun intended!)

GPU technology development is the driver of this progress, and GPU technology was driven by game playing. The gaming market has doubled in value in the last five years, Huang said. Nvidia estimates that there are 200 million GeForce gamers globally and ‘several hundred million’ core gamers. Game visuals have improved tenfold in five years, and eSports is now the largest participant sport; eventually, Huang believes, the number of people involved will make eSports as big as all other sports put together. There are 600 million viewers of game play, reflecting huge growth. All of that activity and interest means money for R&D, which allows the development of better technology.

Huang then made his first announcement: a deal between GeForce and Facebook Live for live game sharing and viewing.

Huang then showed a video of “Mass Effect Andromeda” and introduced Aaron Flynn of developer BioWare, who said that the game had been in development for five years. There was also a preview running on the Nvidia GTX 1080. The game will be released on March 21st. The graphics were striking, and the audience, clearly keen gamers, were impressed.

Gaming Finally in the Cloud?

However, there are a billion PC users whose machines are not ‘game ready’, while another billion are ‘game ready’. So Nvidia considered running GPUs in the cloud (we’ve reported on developments in this area for several years – Man. Ed.). The level of compute power needed is high, and responsiveness has to be very fast. A new platform, ‘GeForce Now’, turns ‘any’ PC into a GPU gaming PC, Huang claimed. Many game platforms work very well and can be accessed on GeForce Now; there was a demo of the Steam gaming platform running on a PC or a Mac.

Rise of the Tomb Raider was demoed. It looked good but, of course, the question is latency. The cloud-based service will be available for ‘early users’ in March and will cost, typically, $25 per 20 hours of play. There are different grades of service and performance available according to the level of power needed.

Nvidia showed Tomb Raider in the cloud

TV via the New Shield

Huang then turned to TV and said that it was more than a year ago that Nvidia joined with Google to develop TV content delivery via the Shield device. He announced a new Shield that supports both UltraHD and HDR and will support Netflix and Amazon with both features. It will also support YouTube and Google Play. There will also be a Steam app on Shield, so users will be able to play on their TVs while the game is actually running on a PC via Valve’s Steam. There are also more than 1,000 games on the Nvidia store for those who don’t have a PC suitable for gaming.

Nvidia’s New Shield supports UltraHD & HDR

In Huang’s view, Amazon Echo represents a new platform, so Nvidia has worked with Google and there is a new Google Assistant for TV. Wouldn’t it be nice if the AI agent was everywhere in the home, not just near the coffee table, Huang asked? So Nvidia has made an AI mic, the Nvidia Spot, that can work with Alexa. The microphone can detect voices from 20′ (6m) away, and multiple mics can be used for beamforming to detect who is talking. Huang showed an impressive video. It will change how we interact with the house, he said, adding that the Nvidia system would work with the SmartThings Hub. The Shield will cost $199 and is available for pre-order online; however, the details of the Spot, such as pricing, have yet to be decided.

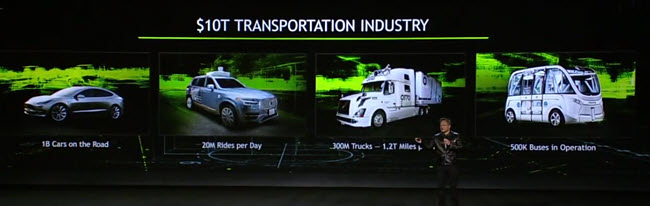

Transport is a Massive Opportunity

Transportation is a massive opportunity: globally there are a billion cars and 300 million trucks. ‘Ride-sharing’ shows the scale, with 20 million Uber and Lyft trips per day. There are 500,000 buses in the world. Transportation is essential to our lives, but there is a huge amount of waste in time and energy. Cars really shouldn’t be driven by humans (or at least humans should be helped with driving), and there is enormous waste from accidents. Parked cars everywhere ruin the environment. Autonomous vehicles would allow ‘the re-invention of society’, Huang believes. Your car can become your personal robot, he said, and Nvidia has already been working on automotive applications for ten years.

Nvidia’s GPU-based deep learning has made autonomous vehicles possible. As he said earlier, driving is a difficult process. Deep learning is about perception, AI can provide good predictions of how situations will evolve, and cars can learn how to drive. That power can be combined with HD maps in the cloud (which can work to the accuracy of the edge of the road – Man. Ed.). Mapping has to be continuous, and that needs AI computing (and 5G networks, according to Qualcomm). Huang then talked about the Xavier supercomputer for cars.

Nvidia Has a Reference Design for Vehicles

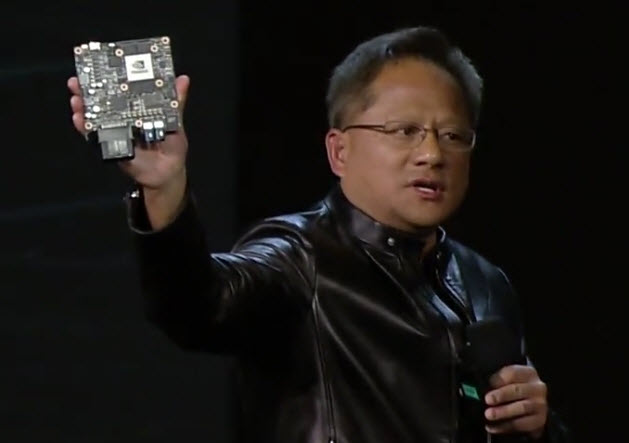

Nvidia has a new OS called ‘DriveWorks’ that can run on Nvidia’s new reference design for a vehicle computer, which has a 512-core Volta GPU. There are 8 CPU cores and ASIL D functional safety (ASIL, the Automotive Safety Integrity Level, is an automotive functional safety rating defined in ISO 26262, and D is its most stringent level – Man. Ed.). Although the system uses only 30W, it can perform 30 TOPS, an amazing level of performance, Huang said.

The Xavier Auto computer from Nvidia

He then showed a video of the BB8 AI car. The car can determine its own ‘confidence level’, so that it can hand back to the driver if it feels that the situation needs a human and is out of its ‘comfort zone’. The car has a new capability, an AI co-pilot, and has sensors, speakers and mics all around. The AI could be running all the time: at times it could be driving you, or it could just be looking out for you and warning of tricky situations.

Using gaze recognition and head tracking, the car knows where you are looking, so it knows if you may not be aware of something, such as a bicycle or motorbike in an adjacent lane, and can draw the driver’s attention to it. Huang said that, as well as these sensing technologies, AI can reach 95% accuracy in lip reading, better than humans can achieve.

Nvidia has a range of driver awareness tools so that the car knows if you are paying attention.

Nvidia has developed an AI Car Platform. Time is critical in the car, so the system must be very fast, and natural language processing has to be both accurate and fast.

Mapworks is a mapping platform that interacts with different mapping companies in different countries. Baidu is doing the mapping in China, while TomTom is strong in Europe and Nvidia works with Zenrin in Japan. Huang said that mapping in Japan, in particular, is very complex to integrate into map algorithms.

Nvidia Wins Bosch, Audi and ZF

Nvidia is working with ZF (said to be the fifth-largest automotive systems supplier), which will adopt the Nvidia computer and will be the first to market with production this year. The top technology supplier in the automotive business, Bosch, will also adopt the Nvidia Drive computer. Huang said that Bosch supplies every automotive company globally.

The momentum in the market for autonomous vehicles is accelerating rapidly. It has been a huge undertaking to build automotive computers, and it’s hard without an automotive company as a partner. Audi will be the first Nvidia partner to build autonomous cars, in 2020. Scott Keogh of Audi was then introduced, and he said that the implications of the development of autonomous cars are massive. He also said that Audi would reach level 4 automation by 2020.

Booth Visit Shows HDR Monitors

Most of the Nvidia booth, in the North Hall along with other automotive players, was devoted to automotive, but in the back corner was a demonstration of a new monitor with 1,000 cd/m² of brightness and support for HDR (the monitor was in five different locations at CES, we heard, but we didn’t spot them!). We thought that the monitor, badged Nvidia, was using the high-brightness LG Display IPS panel that we saw in LG Display’s meeting room, but we later found that it uses an AUO 27″ UltraHD IPS panel. The monitor will be brought to market in Q2 by Asus and by Acer. Games will need to offer support for HDR, although staff on the booth couldn’t explain what this support involves; it seemed to be HDR10. (I have previously heard that most games are developed with 10-bit or higher greyscales but are already tone mapped for display, so I suspect that the support is really about having tested the HDR performance – Man. Ed.). The monitor has 384 lighting zones for local dimming, and the HDR data is sent via DisplayPort.

As we understand it, the monitor uses a new version of the G-Sync scaler, so it needs a special chip to support HDR, and it can run at up to 144Hz. No pricing or other data was confirmed, although Nvidia said that colour performance is ‘close to DCI-P3’, using QDEF film. There are unconfirmed reports that the Asus monitor will cost $1,200, with the Acer at just below $1,000.

One of the first games to support HDR on the monitor will be Mass Effect Andromeda. The Asus monitor has the model reference PG27UQ and is slated to arrive in the US in Q3, although Nvidia was talking about Q2. The Acer is said to be the Acer Predator XB272-HDR.

Analyst Comment

The HDR monitor looked very good, although it may be a bit expensive, initially, for all but the most enthusiastic gamers. However, there’s no question that gamers who see SDR and HDR monitors side by side will understand why they want one. (BR)