The concept of better pixels means expanding the frame rates, color gamut, resolution and dynamic range of HD video to something “more.” But the human visual system is very complex, and as these features are implemented, one must consider what they do to our perception of the images.

Sean McCarthy from Arris presented a very nice paper that summarized the complex interactions the human eye and brain have with electronic images. He noted that perception of motion is influenced by display size, viewing distance, frame rate, refresh rate, luminance, and the current adaptation state of the photoreceptors in the retina. Similarly, perceived hue is not uniquely defined by the spectral composition of the light coming from a display: It also depends on luminance, adaptation, and the composition of the scene. His paper explored the following:

- The interaction of field-of-view and frame rate on smooth high acuity motion tracking

- The interaction of luminance and screen size on flicker perception

- The interaction of luminance, perceived contrast, and color appearance

- The impact of the speed of visual adaptation on scene changes, program changes, and commercials

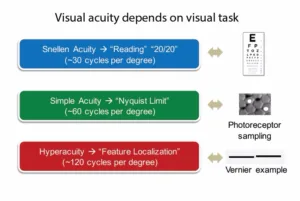

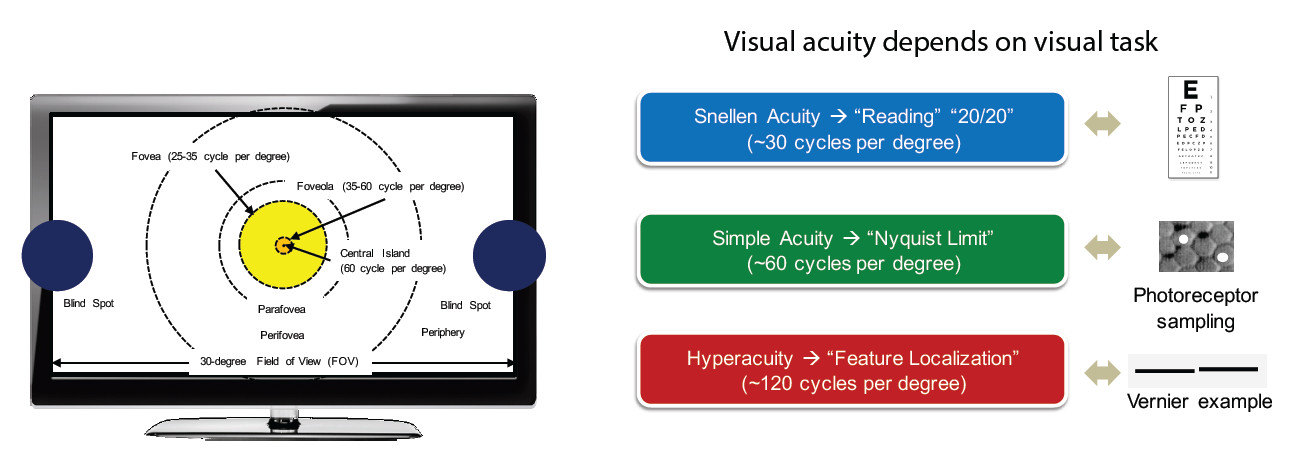

Based on the physical layout of the rods and cones in the eye, McCarthy described Snellen (20/20) reading acuity as the ability to recognize symbols and their orientation, which corresponds to a perception level of about 30 cycles per visual degree (roughly 60 pixels per degree, since each cycle needs a light and a dark pixel). Simple acuity refers to the retina’s maximum Nyquist limit, which is about 60 cycles/degree. Hyperacuity is the ability to notice even finer details, such as the misalignment of line segments (vernier acuity), beyond 60 cycles/degree.

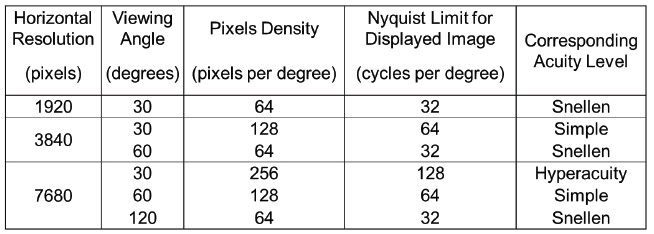

What we “see” from a display depends upon the native resolution of the display and how far away we are, which together determine the viewing angle and the cycles/degree delivered to the eye. The table below shows various combinations of display resolution and viewing angle.
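A minimal sketch of that relationship, assuming a flat screen viewed on-axis and using the average pixel density across its width (the helper names and the example screen size are hypothetical, not from McCarthy's paper): the screen's horizontal FOV follows from its width and the viewing distance, dividing the horizontal pixel count by that angle gives pixels per degree, and halving that gives cycles per degree, which can be compared with the roughly 30 and 60 cycles/degree thresholds quoted above.

```python
import math

# Approximate acuity thresholds quoted in the text (cycles per degree).
SNELLEN_CPD = 30.0   # 20/20 reading acuity
NYQUIST_CPD = 60.0   # retinal Nyquist limit ("simple acuity")


def horizontal_fov_deg(screen_width: float, distance: float) -> float:
    """Horizontal angle subtended by a flat screen viewed on-axis.

    screen_width and distance must be in the same unit (e.g. metres).
    """
    return math.degrees(2.0 * math.atan(screen_width / (2.0 * distance)))


def pixels_per_degree(h_pixels: int, fov_deg: float) -> float:
    """Average horizontal pixels per degree across the screen."""
    return h_pixels / fov_deg


def cycles_per_degree(ppd: float) -> float:
    """One cycle is a light/dark pair, so it needs at least two pixels."""
    return ppd / 2.0


if __name__ == "__main__":
    # Hypothetical example: a 1.2 m wide screen viewed from 2.0 m.
    fov = horizontal_fov_deg(1.2, 2.0)
    for name, width_px in [("HD", 1920), ("UHD", 3840)]:
        cpd = cycles_per_degree(pixels_per_degree(width_px, fov))
        print(f"{name}: FOV {fov:.1f} deg, {cpd:.1f} cycles/deg, "
              f"meets Snellen: {cpd >= SNELLEN_CPD}, "
              f"meets Nyquist: {cpd >= NYQUIST_CPD}")
```

Because pixels near the screen edges subtend slightly smaller angles than those at the center, the per-screen average is only an approximation of the local density.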

Besides acuity, humans “build” their understanding of the image from additional cues. These include binocular depth cues, dynamic range, color, and perceived motion vectors. Motion was the next topic McCarthy discussed, but human motion capture is not the same as camera capture: a camera captures discrete frames, whereas the human visual system processes a continuous stream of information in the brain.

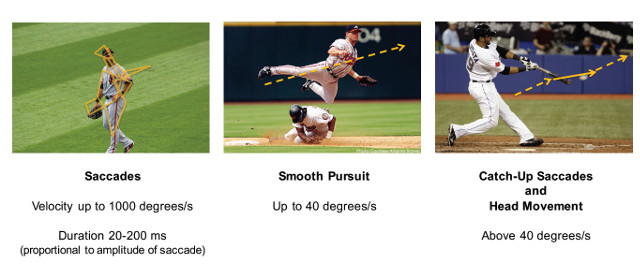

McCarthy described three types of eye and head motion. Saccades are rapid, involuntary eye movements that scan a subject, jumping between areas of interest. The second, smooth pursuit, is the ability to track a moving object smoothly. The third combines head movement with saccadic eye movement.

McCarthy noted that higher frame rates reduce blurring, because the amount of blur depends on the speed of the object relative to the capture frame rate. His further point, however, was that display FOV also affects perception. Suppose content contains an object moving at the limit of the eye’s smooth pursuit regime (about 40 degrees of visual field per second) on a display that subtends a 30-degree FOV. On a display that subtends 60 degrees, the same object still crosses the screen from edge to edge in the same time, so it must sweep twice the visual angle, about 80 degrees per second, which is beyond what smooth pursuit can follow. The 60-degree FOV display would therefore reduce the visual acuity of the moving object, and more motion artifacts would be visible.
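The FOV argument can be sketched numerically. This sketch assumes the same content is shown on both displays, so the object takes the same time to cross the screen, and that the blur length roughly equals the distance the object moves per frame (true only for a shutter open for the whole frame period). The function names and the 0.75 s crossing time are illustrative assumptions; the 40 degrees/second pursuit limit is the figure quoted above.

```python
SMOOTH_PURSUIT_LIMIT_DEG_PER_S = 40.0  # figure quoted in the text


def angular_speed_deg_per_s(fov_deg: float, crossing_time_s: float) -> float:
    """Angular speed of an object that crosses the full screen width."""
    return fov_deg / crossing_time_s


def step_per_frame_deg(speed_deg_per_s: float, frame_rate_hz: float) -> float:
    """Angle moved between frames (approx. blur extent with a full-frame shutter)."""
    return speed_deg_per_s / frame_rate_hz


def trackable(speed_deg_per_s: float) -> bool:
    """True if the speed is within the quoted smooth-pursuit limit."""
    return speed_deg_per_s <= SMOOTH_PURSUIT_LIMIT_DEG_PER_S


if __name__ == "__main__":
    crossing_time_s = 0.75  # hypothetical: same content on both screens
    for fov in (30.0, 60.0):
        speed = angular_speed_deg_per_s(fov, crossing_time_s)
        for fps in (60.0, 120.0):
            print(f"FOV {fov:.0f} deg at {fps:.0f} fps: {speed:.0f} deg/s, "
                  f"{step_per_frame_deg(speed, fps):.2f} deg/frame, "
                  f"trackable: {trackable(speed)}")
```

In this toy model, doubling the FOV doubles both the angular speed and the per-frame step, so the 60-degree display would need twice the frame rate just to keep the same per-frame step, and the object would still be moving faster than smooth pursuit can follow.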

McCarthy then turned to flicker perception, noting that it is related to the logarithm of the luminance. In other words, as the brightness of the display increases, so does our perception of flicker. It also depends on the field of view: flicker perception is lower in the center of the visual field than in the periphery at all luminance levels. Strobing effects likewise increase with FOV, so 24 fps content might look fine on a 50” display but flickery on a 100” display viewed from the same distance.
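This logarithmic relationship is commonly described by the Ferry-Porter law: the critical flicker frequency (CFF), above which a flashing light appears steady, rises linearly with the logarithm of luminance. The sketch below assumes that form; the slope and intercept are placeholder values for illustration only, not measurements from McCarthy's paper, and in practice they vary with retinal position and stimulus size.

```python
import math


def critical_flicker_frequency_hz(luminance_nits: float,
                                  slope_hz_per_decade: float,
                                  intercept_hz: float) -> float:
    """Ferry-Porter form: CFF = slope * log10(L) + intercept."""
    if luminance_nits <= 0:
        raise ValueError("luminance must be positive")
    return slope_hz_per_decade * math.log10(luminance_nits) + intercept_hz


def flicker_visible(flash_rate_hz: float, cff_hz: float) -> bool:
    """Flicker is predicted to be visible when the flash rate is below the CFF."""
    return flash_rate_hz < cff_hz


if __name__ == "__main__":
    # Placeholder parameters, chosen only to show the trend.
    SLOPE, INTERCEPT = 10.0, 30.0
    for nits in (100.0, 1000.0, 4000.0):
        cff = critical_flicker_frequency_hz(nits, SLOPE, INTERCEPT)
        print(f"{nits:.0f} nits: CFF ~ {cff:.1f} Hz, "
              f"48 Hz flashing visible: {flicker_visible(48.0, cff)}")
```

Each tenfold increase in luminance raises the predicted CFF by the slope, which is why a brighter display makes a given flash rate more likely to flicker visibly.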

It is also well known that as the brightness of a display changes, so does our perception of color. Colors appear more colorful (vivid and intense) as overall luminance increases; conversely, more saturated colors appear brighter than less saturated colors of the same luminance (the Helmholtz-Kohlrausch, or HK, effect). The Bezold-Brücke effect describes how two lights of the same wavelength can appear as different hues (a hue shift) if one is brighter than the other. The amount of shift depends on the color.

Finally, McCarthy discussed the impact of light adaptation, particularly with HDR content, which has a wider luminance range and may be mixed with commercials at differing light levels. He asked what happens when a dark-adapted viewer is suddenly hit with a very bright commercial. Human visual adaptation is complex and includes changes in pupil diameter; a progressive shift from rod-dominated to cone-dominated vision; changes in the gain of the photoreceptor response; and changes in the concentration of light-sensitive photopigments in the photoreceptors. Some of these responses in turn depend on the field of view and the overall luminance level. These adaptation mechanisms are not instantaneous: they act on time scales ranging from less than a second to several seconds.

Tim Borer from the BBC Research Group provided additional details on human vision in his paper. He said that human viewers can reliably detect changes in brightness of between 2% and 3% at mid and high luminance levels. This influences the shape of the Opto-Electronic Transfer Function (OETF) of the capture camera. Borer says that if the signal is proportional to the logarithm of the relative luminance and is quantized with enough bit depth, the luminance steps between adjacent code values will not be noticeable (no banding or contouring).
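To make the bit-depth argument concrete: with logarithmic encoding every code step is the same luminance ratio, so if each step must stay below a Weber fraction w (2% here, the lower end of Borer's range), the number of steps needed to span L_min to L_max is ln(L_max/L_min) / ln(1 + w). A minimal sketch, assuming an illustrative 25 to 10,000 nit range for the logarithmic part of the curve; the helper names are hypothetical.

```python
import math


def log_steps_needed(l_min: float, l_max: float, weber_fraction: float) -> int:
    """Steps needed so adjacent log-encoded levels differ by <= weber_fraction."""
    return math.ceil(math.log(l_max / l_min) / math.log1p(weber_fraction))


def bits_needed(code_values: int) -> int:
    """Smallest bit depth that provides at least `code_values` levels."""
    return math.ceil(math.log2(code_values))


if __name__ == "__main__":
    for w in (0.02, 0.03):
        steps = log_steps_needed(25.0, 10_000.0, w)
        codes = steps + 1  # N steps need N + 1 code values
        print(f"Weber fraction {w:.0%}: {codes} code values, "
              f"{bits_needed(codes)} bits")
```

The count grows only with the logarithm of the luminance range, which is why a log-shaped curve can cover a wide range of highlights with a modest bit depth.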

But at low light levels, between 0.04 and 25 nits, a different vision regime comes into play. Here, the OETF should make the signal proportional to the square root of the relative luminance to match human perception of changes in luminance. Borer points out, however, that this is only a best-fit approximation: perception is more complex and depends on other factors such as the size of the screen, the duration of the stimulus, and its frequency.
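Why a square-root curve suits this low-light regime can be seen from the step size it produces. If the signal is V = sqrt(L), with L normalized to 1 at peak white, and is quantized in steps of dV, adjacent code values differ in luminance by a relative amount of about 2·dV/sqrt(L), which grows as L falls instead of staying constant as it does with log encoding. The sketch below computes that relative step exactly, assuming 10-bit quantization; the function name is hypothetical.

```python
import math


def sqrt_relative_step(luminance_rel: float, bits: int = 10) -> float:
    """Relative luminance change for one code step of a sqrt-encoded signal.

    luminance_rel is linear luminance normalized to 1.0 at peak white.
    """
    step_v = 1.0 / (2 ** bits - 1)          # one code value in signal units
    v = math.sqrt(luminance_rel)             # encoded signal value
    next_luminance = (v + step_v) ** 2       # decode one code step higher
    return (next_luminance - luminance_rel) / luminance_rel


if __name__ == "__main__":
    for lum in (0.25, 0.04, 0.01, 0.0025):
        print(f"L = {lum:<6}: relative step = {sqrt_relative_step(lum):.2%}")
```

Quartering the luminance roughly doubles the relative step, so the curve spends fewer code values in the dark, where the eye tolerates larger relative changes.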

These two regimes shape the OETF curve for SDR content. They also influenced the development of the Hybrid Log-Gamma (HLG) curve and other HDR curves such as the PQ curve (SMPTE ST 2084).
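HLG makes the combination explicit. The HLG OETF published in ARIB STD-B67 and ITU-R BT.2100 uses a square-root segment for the lower part of the normalized scene-light range and a logarithmic segment above it. The sketch below implements that published formula; the constants come from the standard, while the function name is illustrative.

```python
import math

# HLG OETF constants from ITU-R BT.2100.
A = 0.17883277
B = 1.0 - 4.0 * A                    # 0.28466892
C = 0.5 - A * math.log(4.0 * A)      # 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalized scene-linear light E in [0, 1] to the HLG signal E' in [0, 1]."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("E must be in [0, 1]")
    if e <= 1.0 / 12.0:
        return math.sqrt(3.0 * e)          # square-root segment
    return A * math.log(12.0 * e - B) + C  # logarithmic segment


if __name__ == "__main__":
    for e in (0.0, 0.01, 1.0 / 12.0, 0.25, 1.0):
        print(f"E = {e:.4f} -> E' = {hlg_oetf(e):.4f}")
```

At E = 1/12 both segments give 0.5, so the curve is continuous; the square-root segment covers the darker part of the scene and the logarithmic segment compresses the highlights.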

Clearly, vision science is a complex topic that becomes more relevant in the HDR, WCG and HFR era, but it also opens up new possibilities for creative uses.