Canatu is a company that we have been tracking for a long time, as it was one of the companies pursuing the replacement of ITO, in its case with carbon nanobuds. As well as in displays, where the material's flexibility and lack of haze or diffusion are a big advantage (although that market has still to develop in size), the company has found great interest from the automotive market, which loves the flexibility. For example, it is working with a high-end leather company that makes automotive interiors. In this application, the leather is often stretched, and the Canatu sensors can cope with this, which allows auto makers to develop touch surfaces using traditional materials. Canatu now has driver technology from Nissa and Cypress.

Separately, we noted that Continental was in the CES Innovation Awards area for the kind of textured touch surface that Canatu was showing.

Canatu can touch-enable this kind of control cluster without mechanical switches. Image: Meko

Imagination Technologies has moved out of the meeting room that it had used for several years and was in a suite in the Venetian, where it was highlighting how much it can do in TVs. The company has very good virtualisation technology, with applications running in isolated memory space, which means that PINs and encryption keys can be kept private, a feature that is attractive to content owners. Apps have no access to the hypervisor. The company can support native UltraHD performance (XEP/XT cores), for example in the GUI, but also has lower power cores (XE/XM) that are optimised for 2K resolution with scaling.

The latest cores were supplied for evaluation in September, with RTL available now.

Imagination also has a neural network processor design. The technology can be used as an accelerator and for object or individual identification as well as age detection and checking engagement or mood. As we have reported before, Imagination has some clever ‘region of interest’ technology which allows encoding of video giving priority for quality to areas of the image that are most important – e.g. the face in video conferencing. This technique can be exploited in TV scaling and the company has a range of other technologies for dynamic video enhancement and scaling. The Series2NX NNA core is now running in FPGAs.
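As a rough illustration of the general 'region of interest' idea (not Imagination's implementation, which was not described to us in detail), an encoder can be given a per-block quantisation map in which blocks inside the region receive a lower quantisation parameter (QP), and so more bits. The function name, block grid and QP values in this Python sketch are all assumptions chosen for illustration.

```python
def roi_qp_map(width_blocks, height_blocks, roi, base_qp=32, roi_qp_offset=-6):
    """Build a per-block QP grid for region-of-interest encoding.

    Hypothetical helper: a lower QP means finer quantisation and higher quality.
    `roi` is (x0, y0, x1, y1) in block coordinates, with x1 and y1 exclusive.
    The default QP values are illustrative assumptions, not vendor settings.
    """
    x0, y0, x1, y1 = roi
    return [
        [
            base_qp + roi_qp_offset if (x0 <= x < x1 and y0 <= y < y1) else base_qp
            for x in range(width_blocks)
        ]
        for y in range(height_blocks)
    ]


# Example: an 8x4 block frame with a face occupying blocks x=2..4, y=1..2.
qp = roi_qp_map(8, 4, roi=(2, 1, 5, 3))
for row in qp:
    print(" ".join(str(value) for value in row))
```

In a real encoder a map like this would be passed to the rate control stage; here it is only printed so that the higher quality region is visible in the grid.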

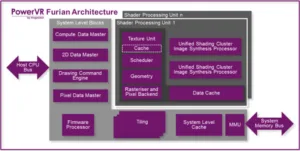

Imagination has always had a lot of skill in heavy duty graphics processing with low power usage, so the company sees real opportunities in VR and AR – last year the company showed us its technology for foveated rendering. The company has developed a new architecture, called PowerVR Furian, which can support UltraHD at up to 120 fps as well as HDR. However, this performance is delivered without a huge power budget.

The PowerVR Furian architecture is optimised for high performance in VR

As well as its GPU and video processing, Imagination has been developing its wireless technology, including its Ensigma radio designs, which are being extended to demodulators for TV sets. The company is looking to develop its business in the TV space, with the backing of its private equity owners. Watch out, also, for announcements of a new GPU targeting automotive applications.

The company has usually managed to have at least one major customer in the TV space for its architecture at any one time, but is looking to see its cores more widely used in that application.

Intel had its usual big booth and was focusing very strongly on VR and 5G. It was showing 4K video streaming and VR over 5G. There was a keynote talk by Brian Krzanich that again highlighted the use of Intel’s RealSense technology and the Intel Movidius chip in gesture-based music, with players using virtual instruments in a band known cheesily as Algorithm and Blues. (Speaking as a musician, the demo was enough to encourage ‘old school’ instruments! – Man. Ed.) He started by dealing with the recently discovered security bugs, Spectre and Meltdown.

Krzanich said that the big resource of the future is not material, it’s data. Chips are now 50 years old and we are at an inflection point driven by huge amounts of data. Cars, aircraft and factories will produce data in terabytes and petabytes per day. AI, Autonomous Vehicles, Smart Cities and many other topics at CES are driven by this data. Krzanich showed how Intel is working on ‘volumetric video’, which uses multi-camera capture to create models of the scenes that are being filmed. It uses Intel’s True View technology. The cameras are used to create billions of voxels, allowing the viewer to truly enter the scene. Data is captured at 3 terabytes per minute! The technology can make a ‘viewer’ feel as though they are actually at the event. The technology will be used at the Winter Olympics in a few weeks.
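To put the quoted capture rate in perspective, the short Python sketch below converts 3 terabytes per minute into per-second figures. It assumes decimal units (1 TB = 10^12 bytes), which the keynote did not specify, and the helper name is our own.

```python
# Assumption: decimal units (1 TB = 10**12 bytes); the keynote did not specify.
BYTES_PER_TB = 10**12


def capture_rate(tb_per_minute):
    """Convert a capture rate in TB/minute to GB/s and Gbit/s (hypothetical helper)."""
    bytes_per_second = tb_per_minute * BYTES_PER_TB / 60
    return {
        "gigabytes_per_second": bytes_per_second / 10**9,
        "gigabits_per_second": bytes_per_second * 8 / 10**9,
    }


rate = capture_rate(3)
print(rate)
```

On those assumptions, the capture rate works out to 50 GB/s, or 400 Gbit/s.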

https://www.intel.com/content/www/us/en/events/ces/keynote.html 43:26

Intopix is a company that we are used to seeing with its codec technology at IBC, ISE and Infocomm, but not at CES. The company was at CES because, as well as compressing video (the technology is in some new JPEG standards), its technology can be used for sensor data and for the video coming from the cameras in autonomous vehicles. 8K video is also a challenge and the company sees opportunities in its development. The company told us that its technology is used by Crestron.

We stopped by Sigma Designs, but there were no TV products on show. The company is currently being acquired by Silicon Labs, which is interested in the wireless and Z-Wave business, but is not interested in TV. Staff told us that the deal is ‘contingent on the sale or closure of the TV business’, so the TV business did not attend CES. The deal has a condition that if the acquisition of the whole company doesn’t complete for specified reasons, Silicon Labs will buy the Z-Wave business.

Tobii was showing the increased support for its gaze recognition technology at CES Unveiled and also at the main show. The company was showing how the technology is being used in gaming. However, we discussed with the firm the use of gaze to enable foveated rendering to optimise the generation of 3D images for VR. We heard that five major OEMs and ten others will introduce products featuring the technology over the next year or so. That’s impressive! Adoption of gaze in PCs has been slow because of the cost – the chicken and egg issue that has held back gaze for some time.