Douglas Lanman of Facebook Reality Labs/Oculus was at the event to talk about AR and VR under the title “Reactive Displays”. He quoted Gabriel Lippmann, a photographic pioneer, who set the target of making a “window on the world” that is as good as a real window. That hasn’t been achieved yet.

3D is missing from the solution at the moment, and there have been many different attempts to add it. Lanman was involved in the Tensor display, which used multiple LCDs and computational imaging (and was mentioned in an SID keynote back in 2012 – Display Monitor Vol 19 #24).

Lanman is now a research scientist at Oculus. Although his first reaction to the idea of VR was “that’s cheating”, he saw a demo of VR and moved to Facebook to develop the idea further.

One option is to turn living rooms into CAVEs. That might be the home theatre of the future, but it won’t be for everyone. He believes that “Lippmann’s window” today is the smartphone rather than the photographic print, and that personal mobile displays are the future. They will have to be wearable displays, he believes.

Oculus was working on resolution, HDR etc., but these developments were ‘straight lines’. A problem is that no 3D TV supports motion parallax properly, and that breaks the realism.

Something needs to be fixed: resolution needs to be good in the near field as well as the far field, and the attempt to achieve this has taken a lot of engineers so far. Lanman explained the vergence/accommodation problem. The two are linked in lock step in the human visual system (HVS), but that link doesn’t work properly in VR because of the flat display problem.

Blur is a subtle cue to vergence, but the image in VR is always in focus at around 2m away. Lanman explained that presbyopia (the problem with near vision in older people such as our editor) is good for VR! (We’ll all have implants eventually to solve the problems, Lanman believes).
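
As a rough illustration of the size of the mismatch, the sketch below expresses distances in dioptres (1 divided by the distance in metres) and compares a virtual object's distance with a fixed focal distance. The 2m default comes from the figure quoted in the talk; the function names and the sample distances are our own assumptions, not anything Lanman presented.

```python
def diopters(distance_m: float) -> float:
    """Optical power, in dioptres, that corresponds to focusing at distance_m."""
    return 1.0 / distance_m


def va_conflict(virtual_distance_m: float, focal_distance_m: float = 2.0) -> float:
    """Gap between where the eyes converge (the virtual object) and where
    they must focus (the headset's fixed focal distance), in dioptres.
    The 2 m default is the approximate figure quoted in the talk."""
    return abs(diopters(virtual_distance_m) - diopters(focal_distance_m))


if __name__ == "__main__":
    # Sample distances are illustrative assumptions.
    for d in (0.25, 0.5, 1.0, 2.0, 10.0):
        print(f"object at {d:5.2f} m: conflict {va_conflict(d):.2f} D")
```

The mismatch grows quickly as a virtual object comes closer than the fixed focal distance, and stays small for objects further away.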

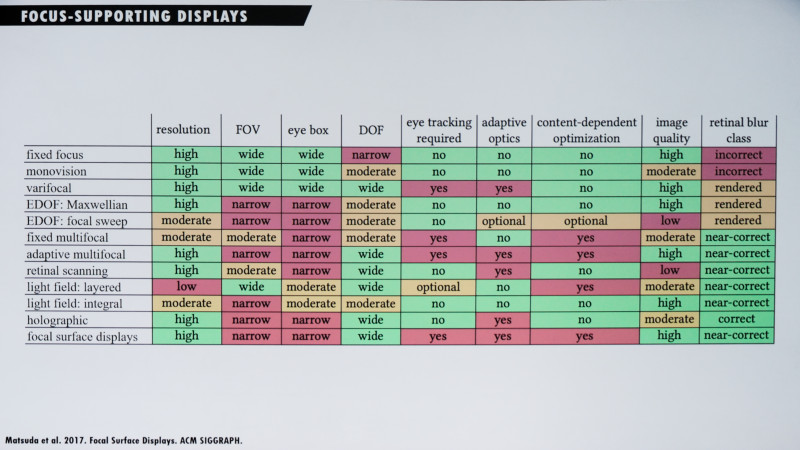

There have been many attempts to develop focus-supporting displays but he said that “light fields are now not looking good as a solution” and “holography is always 10 years away”.

There are a lot of different approaches to solving the accommodation/vergence conflict issue. Image:Meko

One way is to keep objects out of arm’s reach. Varifocal displays are also a possibility, along with adaptive optics and eye tracking. Varifocal displays have been developed in labs, but the solutions can be complex, so Oculus made a series of prototypes to investigate the technology.
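
To make the link between eye tracking and a varifocal display concrete, here is a minimal sketch of one textbook way to turn a measured vergence angle into a focus setting. It assumes the viewer fixates straight ahead (symmetric vergence) and an interpupillary distance of 63mm; both assumptions and all names are ours, and this is not a description of how the Oculus prototypes work.

```python
import math


def vergence_distance_m(vergence_angle_deg: float, ipd_m: float = 0.063) -> float:
    """Fixation distance for a symmetric vergence angle.
    Each eye rotates inward by half the angle, so
    tan(angle / 2) = (ipd / 2) / distance.
    The 63 mm IPD default is an assumed typical value."""
    if vergence_angle_deg <= 0.0:
        return math.inf  # parallel gaze: fixating at infinity
    half_angle = math.radians(vergence_angle_deg) / 2.0
    return (ipd_m / 2.0) / math.tan(half_angle)


def focus_setting_diopters(vergence_angle_deg: float, ipd_m: float = 0.063) -> float:
    """Focal power a varifocal display would target for that fixation."""
    distance = vergence_distance_m(vergence_angle_deg, ipd_m)
    return 0.0 if math.isinf(distance) else 1.0 / distance


if __name__ == "__main__":
    for angle in (0.0, 1.0, 3.6, 7.2):  # illustrative angles, in degrees
        print(angle, vergence_distance_m(angle), focus_setting_diopters(angle))
```

The closer the fixation point, the larger the vergence angle, which is why gaze data alone can in principle drive the focus setting.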

The first prototype tried to answer the relatively simple question: can you make it? The solution was big and noisy, but it worked. One year later the group added eye tracking; Lanman believes it was the first group in the world to do that in a headset, and that was two years ago. Solving focus matters in VR, he said, and it will eventually be a core problem. He thinks the solution is not mechanical, as the mechanical version was very noisy and the second prototype was very over-engineered. Oculus’s mechanical engineers then came in to make a prototype that was dramatically better: it was very quiet and almost vibration free.

The Oculus Prototype 2 – Image:Meko

His group at that point was working on many different concepts. For example, you can work on a kind of ‘half dome’ if you want more field of view, and Oculus doubled the FoV in its ‘Half Dome’ prototype. Reading in virtual reality is a big challenge.

Oculus made this Half Dome VR headset. Image:Meko

Do we really need eye tracking, he asked? Can it be avoided? It is better to attack just one problem, Lanman said. One idea is to develop multifocal displays using stacked sequential displays. You need multiple planes to present the different dioptres of focus correctly, and there will always be distances between layers. Adaptive multifocal technology is one solution, but potentially a complex one.
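
The point about distances between layers can be sketched numerically. The example below spaces a handful of focal planes evenly in dioptres and reports the focus error left over when an object is shown on its nearest plane. The plane count, the 0.25m near limit and the even spacing are illustrative assumptions on our part, not figures from the talk.

```python
import math


def plane_powers(n_planes: int, near_m: float = 0.25, far_m: float = math.inf) -> list:
    """Focal planes spaced evenly in dioptres between far_m and near_m.
    The 0.25 m near limit and the even spacing are assumed for illustration."""
    near_d = 1.0 / near_m
    far_d = 0.0 if math.isinf(far_m) else 1.0 / far_m
    if n_planes == 1:
        return [(near_d + far_d) / 2.0]
    step = (near_d - far_d) / (n_planes - 1)
    return [far_d + i * step for i in range(n_planes)]


def nearest_plane(depth_m: float, planes: list) -> tuple:
    """Index of the plane closest (in dioptres) to an object at depth_m,
    plus the residual focus error in dioptres."""
    target = 1.0 / depth_m
    idx = min(range(len(planes)), key=lambda i: abs(planes[i] - target))
    return idx, abs(planes[idx] - target)


if __name__ == "__main__":
    planes = plane_powers(6)  # six planes is an arbitrary choice
    for depth in (0.3, 0.7, 1.0, 3.0):
        print(depth, nearest_plane(depth, planes))
```

With evenly spaced planes the worst-case error is half the spacing between them, so fewer planes means a larger residual error unless the planes can adapt to the content or to where the viewer is looking.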

Oculus built an “amazing tester” with eye tracking and accommodation adjustment. It has six displays with varifocal performance, can measure the user’s prescription and can run at 30 to 60 fps. You have to eye-track to get the best out of the system.

Lanman said that his group are ‘stubborn researchers’ – check out the paper “Content-adaptive focus configuration for near-eye multi-focal displays” Wu et al 2016.

The group developed a focal surface display, he said, based on the question “why not just vary the focus according to the depth map of the image?” The group used a spatial light modulator (SLM), which he said should be used with coherent light.

The company has built what Lanman described as a digital complex lens, using a Jasper display – at the moment, he said, the SLM is reflective.

Eye tracking helps with differential focus. Image:Meko

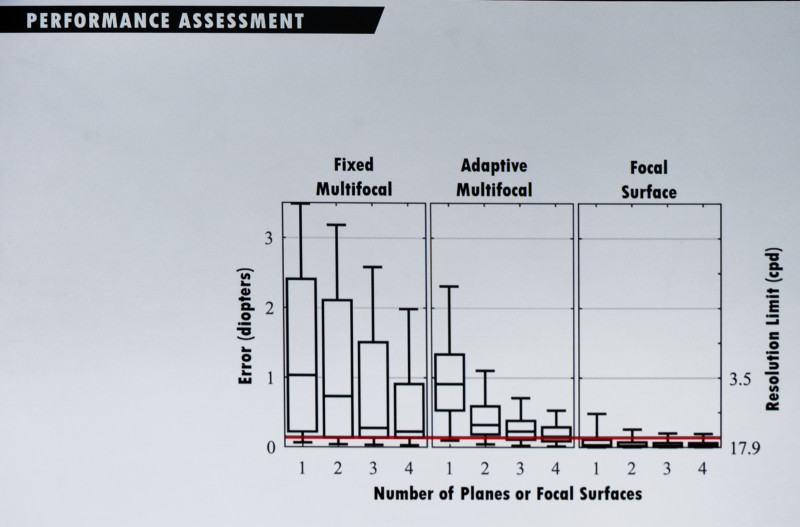

Can you get away with one focal surface, Lanman’s group asked? The team used AI to check a data table of scenes. The use of a focal surface might get rid of the need for eye tracking, but not yet. The team found that you really need two surfaces, and that means you must have tracking at the moment.

Oculus test results show that just two planes get most of the benefit. Image:Meko

Mobile, theatrical-quality wearable displays will be a huge challenge, he believes. The advantage of VR is that you can build a display for a specific eye, not just for a pair: the left and right displays can differ if you want them to.

Lanman finished by saying that his group is “creating a canvas for infinite stories”.

Analyst Comment

Our notes said that this was a very good keynote. Although we would have liked a slightly slower explanation of the technology issues, the keynote looked at developments rather than just bringing us up to date with the status quo. Matt also did a round up of other work in this area at SID (NEDs with Eye Focus Cues at Display Week – Roundup) (BR)