Bill Mandel of the Samsung Research Center in Irvine, California, helped deliver a presentation, co-authored with Yeong Taeg Kim, that provided more insight into the HDR10+ standard.

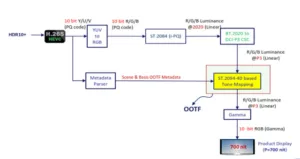

Samsung’s dynamic metadata approach has been standardized as SMPTE ST 2094-40; HDR10+ is the practical implementation of that standard and is not as full-featured. In essence, HDR10+ uses a series of Bezier basis functions to adjust the tone mapping curve on a frame-by-frame basis. The curve has a linear portion where it follows the PQ curve exactly; from the end of that portion it tone maps up to the peak luminance capability of the target TV or display. The data required for this is small and fits easily within the SEI part of the HEVC encode or the InfoFrame component of the HDMI stream.

The key benefit of HDR10+, or of any dynamic metadata approach, is that it better preserves the HDR “look” on displays that perform below the level of the mastering display. Many HDR TVs on the market will have 300-500 cd/m² of light output, yet they will receive content mastered on monitors with a luminance of 1000 cd/m² or higher.

“HDR10+ is designed to use the metadata to more accurately tone map the content for the TV while preserving hues, flesh tones and highlight details,” said Mandel. The format is also backwards compatible with HDR10, so both static and dynamic metadata are included in the content.

Mandel then provided some examples of image quality issues that arise when only static metadata is used, such as using MaxCLL data to scale the tone curve. If just one pixel has a very bright luminance value, it can skew the tone mapping for the whole piece of content. In addition, without scene-by-scene metadata, the TV has to decide for itself how to tone map the content. Sometimes this preserves the darker and mid tones but saturates the highlights, and sometimes it does just the opposite.
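The single-bright-pixel problem can be illustrated with a small sketch. Everything here is an assumption made for illustration: the pixel values, the 500 cd/m² display peak, the helper name `nearest_rank_percentile`, and above all the naive linear scaling, which stands in for a real TV's far more sophisticated tone mapping.

```python
import math

def nearest_rank_percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]

# Hypothetical content: 9,999 pixels at 100 cd/m² plus one spurious 4,000 cd/m² pixel.
pixels = [100.0] * 9_999 + [4000.0]

content_max = max(pixels)                    # the kind of peak a MaxCLL-style value reports
p99 = nearest_rank_percentile(pixels, 99.0)  # a percentile ignores the lone outlier

display_peak = 500.0  # hypothetical target display, in cd/m²

# Naive linear scaling (illustration only): fit the reported peak into the display range.
scale_from_max = min(1.0, display_peak / content_max)
scale_from_p99 = min(1.0, display_peak / p99)

print(f"scale from max: {scale_from_max}, typical pixel shown at {100.0 * scale_from_max} cd/m2")
print(f"scale from p99: {scale_from_p99}, typical pixel shown at {100.0 * scale_from_p99} cd/m2")
```

In this toy case, scaling from the maximum dims every ordinary pixel to an eighth of its intended level because of one outlier, while the percentile-based figure leaves the picture untouched.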

Functionally, ST 2094-40 specifies the use of a histogram of the image luminance values within a frame, expressed as percentiles. A normalized Bezier curve is a key function of the basis OOTF, which is a linear combination of Bernstein basis functions. This approach is simple and is tolerant of spurious bright pixels. Once data such as the knee point and coefficients have been passed to the display, a tone mapping curve can be easily computed and applied to the frame.

Most importantly, the tone mapping does not have to be a simple roll-off from the knee to the peak luminance point. Complex shapes are possible to help preserve skin tone and/or highlight details.
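A minimal sketch of such a curve, under stated assumptions, might look like the following. It is loosely modeled on the description above (a linear segment up to a knee point, then a normalized Bezier curve built from Bernstein basis functions) and is not the normative formula from the standard. The function name `bezier_tone_curve`, its parameters, and the example knee and anchor values are all hypothetical, and inputs and outputs are assumed to be normalized to [0, 1].

```python
from math import comb

def bezier_tone_curve(x, knee_x, knee_y, anchors):
    """Map a normalized input level x in [0, 1] to a normalized output level.

    Below the knee the curve is a straight line from (0, 0) to (knee_x, knee_y).
    Above the knee it is a Bezier curve with control values 0, *anchors, 1,
    rescaled so that it runs from (knee_x, knee_y) to (1, 1).
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be normalized to [0, 1]")
    if not 0.0 <= knee_x < 1.0:
        raise ValueError("knee_x must lie in [0, 1)")
    if x < knee_x:
        return x * knee_y / knee_x  # linear segment

    points = [0.0, *anchors, 1.0]   # fixed end points plus the transmitted coefficients
    n = len(points) - 1             # order of the Bezier curve
    t = (x - knee_x) / (1.0 - knee_x)
    # Linear combination of Bernstein basis functions C(n, k) * t^k * (1 - t)^(n - k).
    b = sum(comb(n, k) * t**k * (1.0 - t) ** (n - k) * p for k, p in enumerate(points))
    return knee_y + (1.0 - knee_y) * b

# Hypothetical metadata for one frame: knee at (0.25, 0.5) with two anchors.
curve = [bezier_tone_curve(i / 10, 0.25, 0.5, [0.5, 0.8]) for i in range(11)]
print([round(y, 3) for y in curve])
```

Changing the anchor values reshapes the segment above the knee without touching the linear portion, which is how a curve of this form can depart from a simple roll-off.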

Mandel then explained some of the HDR10+ workflow for generating the metadata. It starts with the high-luminance master, and the first step is the generation of a histogram of the luminance levels on a frame-by-frame basis. Samsung algorithms then generate the metadata parameters for a new target display, for example one at 300 cd/m². A colorist can then review and adjust the parameters as needed to finalize the metadata. The scene is then played back on the target display to validate the desired tone mapping. HDR10+ and HDR10 metadata are generated at the same time.
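The first step of this workflow, the per-frame luminance analysis, can be sketched roughly as follows. This is only an illustration: the dictionary keys, the choice of percentiles, and the two synthetic frames are assumptions, and Samsung's actual parameter-generation algorithms are not described in the presentation and are not reproduced here.

```python
from statistics import fmean, quantiles

def frame_stats(frame_nits):
    """Summarize one frame's luminance samples (in cd/m²) with a few percentiles."""
    # quantiles(..., n=100) returns 99 cut points: the 1st through 99th percentiles.
    cuts = quantiles(frame_nits, n=100, method="inclusive")
    return {
        "max": max(frame_nits),
        "mean": fmean(frame_nits),
        "p50": cuts[49],
        "p90": cuts[89],
        "p99": cuts[98],
    }

# Two hypothetical frames given as flat lists of pixel luminances:
# a dim scene (0-49 cd/m²) followed by a bright one (0-999 cd/m²).
dark_frame = [float(v % 50) for v in range(2_000)]
bright_frame = [float(v % 1000) for v in range(2_000)]

# One summary per frame, which is what makes the resulting metadata "dynamic".
per_frame = [frame_stats(frame) for frame in (dark_frame, bright_frame)]
for index, stats in enumerate(per_frame):
    print(index, stats)
```

A downstream step would turn each summary into curve parameters for the chosen target display, after which a colorist could review the result.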

At the TV, the HEVC signal is decoded and the metadata is parsed into static and dynamic components. Color conversion and volume remapping happen before the dynamic metadata is applied for tone mapping. Finally, these values are mapped to the native gamma of the display panel.

Mandel concluded by noting that Samsung is engaging throughout the full content acquisition, production, distribution, and consumption ecosystem.