goHDR is a UK-based company that was at NAB 2015 to describe and show its HDR encoding solution. The company demonstrated real-time capture, encode/decode and display of HDR content on an HDR display.

goHDR is developing an approach that it will propose to the COST Action group (IC1005), a European consortium looking at HDR problems, as well as to the MPEG group (proposal to be submitted this July).

The problem it is trying to solve is HDR capture at the camera. For cameras with more than about 12-13 f-stops, you need 16-bit capture to show the full range of gray scale in the image. Managing such large RAW files may be OK in a post-production process, but the data rates are just too high for a conventional video processing path, and for live HDR.
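To get a feel for the data rates involved, the arithmetic can be sketched as follows. The 1920×1080 resolution, the 30 fps frame rate and the three samples per pixel are illustrative assumptions, not figures quoted by goHDR.

```python
def uncompressed_bit_rate(width, height, fps, bits_per_sample, samples_per_pixel=3):
    """Return the uncompressed video bit rate in bits per second."""
    return width * height * samples_per_pixel * bits_per_sample * fps


def contrast_ratio(f_stops):
    """Each f-stop doubles the light, so N stops span a 2**N : 1 range."""
    return 2 ** f_stops


# Assumed example format: 1080p at 30 fps, three samples per pixel.
rate_16 = uncompressed_bit_rate(1920, 1080, 30, 16)
rate_8 = uncompressed_bit_rate(1920, 1080, 30, 8)

print(f"16-bit capture: {rate_16 / 1e9:.2f} Gbit/s")
print(f" 8-bit capture: {rate_8 / 1e9:.2f} Gbit/s")
print(f"13 f-stops span a {contrast_ratio(13)}:1 range")
```

Under these assumptions, 16-bit capture runs at close to 3 Gbit/s before any compression, twice the rate of an 8-bit path at the same resolution and frame rate.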

One approach companies can use is to apply tone mapping at the camera. Tone mapping at this point is designed to shrink the 16-bit data into an 8-bit or 10-bit format by applying a gamma curve that better matches human visual perception. A number of companies have proposed solutions here, including Dolby, whose PQ curve has been adopted by SMPTE. This is a display-referred encoding scheme that provides a 10-bit image if the peak luminance of the intended display is 1000 nits and a 12-bit image if the intended display is 10,000 nits. This means that the capture devices must have the display device in mind when they acquire the data.
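For readers who want to see what a display-referred curve looks like in practice, here is a minimal sketch of the PQ curve, using the constants as published in SMPTE ST 2084. The function names and the full-range quantization in `to_code_value` are assumptions made for illustration; broadcast systems typically use a narrow-range mapping instead.

```python
# PQ constants as published in SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # absolute luminance that maps to a signal of 1.0


def pq_encode(nits):
    """Map absolute display luminance (cd/m^2) to a PQ signal in [0, 1]."""
    y = max(nits, 0.0) / PEAK_NITS
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_decode(signal):
    """Map a PQ signal in [0, 1] back to absolute luminance (cd/m^2)."""
    e = signal ** (1.0 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def to_code_value(signal, bits):
    """Quantize a [0, 1] signal to an integer code (full range, assumed)."""
    return round(signal * (2 ** bits - 1))


for nits in (100.0, 1000.0, 10000.0):
    signal = pq_encode(nits)
    print(nits, round(signal, 4), to_code_value(signal, 10), to_code_value(signal, 12))
```

Because the signal is defined in terms of absolute display luminance, the encoded values only make sense relative to a target display, which is the dependency the article describes.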

The problem with this approach, notes CEO Alan Chalmers, is that it “bakes in” the tone mapping at the beginning of the process, complicating or frustrating manipulation later in the chain, should that be necessary. Chalmers thinks a scene-referred approach, one that is not tied to a specific end display device, is better.

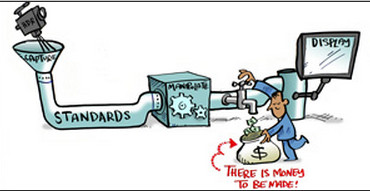

It is better, argues Chalmers, to deliver the full bit depth to later-stage processing. To do this in an efficient manner requires compression at the camera. goHDR’s approach allows this with compression ratios that can vary from 150:1 to 500:1. It is a dual-stream approach that separately compresses the chroma and luminance channels. These channels can be compressed at different ratios and the two merged into a single transport stream. This approach is also backwards compatible with existing displays and delivery systems.
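The article does not describe goHDR's algorithm in detail, so the following is only a generic sketch of how an HDR pixel can be separated into a luminance channel and a chroma channel that are then coded independently. The Rec. 709 luminance weights, the logarithmic 10-bit quantizer, the luminance limits and all function names are assumptions chosen for illustration, not goHDR's method.

```python
import math

# Rec. 709 luminance weights, used here only as a convenient assumption.
WEIGHTS = (0.2126, 0.7152, 0.0722)


def split_pixel(rgb):
    """Split a linear RGB pixel into luminance and a per-channel chroma ratio."""
    lum = sum(w * c for w, c in zip(WEIGHTS, rgb))
    if lum <= 0.0:
        return 0.0, (0.0, 0.0, 0.0)
    return lum, tuple(c / lum for c in rgb)


def encode_luminance(lum, min_lum=1e-4, max_lum=1e4, bits=10):
    """Log-quantize luminance into an integer code of the given bit depth."""
    lum = min(max(lum, min_lum), max_lum)
    t = math.log(lum / min_lum) / math.log(max_lum / min_lum)
    return round(t * (2 ** bits - 1))


def decode_luminance(code, min_lum=1e-4, max_lum=1e4, bits=10):
    """Invert encode_luminance, up to quantization error."""
    t = code / (2 ** bits - 1)
    return min_lum * (max_lum / min_lum) ** t


def merge_pixel(lum, chroma):
    """Recombine luminance and chroma ratios into linear RGB."""
    return tuple(lum * c for c in chroma)


pixel = (1200.0, 900.0, 300.0)  # hypothetical bright scene-referred pixel
lum, chroma = split_pixel(pixel)
code = encode_luminance(lum)
rebuilt = merge_pixel(decode_luminance(code), chroma)
print(code, rebuilt)
```

In this sketch only the luminance is quantized; a real system would also pass the chroma channel through its own encoder, possibly at a different compression ratio.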

“One of the big advantages of this approach is that we can use off-the-shelf 8- or 10-bit encodes for the luma and chroma channels”, says Chalmers. “By delivering the full range of dynamic range we create a scene-referred processing chain that does a better job of preserving the creator’s intent”.

Chalmers says that metadata is also needed to allow more flexibility in HDR processing. As an example, he suggested coverage of a golf tournament where the golf ball disappears against a blue sky. If this is encoded using his scheme, the broadcaster can change the exposure of the scene to show the ball – something that may not be possible if the capture camera overexposed the shot or tone mapping was applied at the camera.
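The golf ball example can be illustrated with a toy calculation. The luminance values below are hypothetical, expressed in linear scene units where 1.0 is the brightest level the display path can show; the function names are likewise made up for this sketch.

```python
def apply_exposure(linear_value, stops):
    """Scale a linear scene value by a number of f-stops (negative darkens)."""
    return linear_value * (2.0 ** stops)


def to_display(linear_value):
    """Clip a linear value to the displayable range [0, 1]."""
    return min(max(linear_value, 0.0), 1.0)


sky, ball = 4.0, 5.0  # hypothetical scene values, both above display white

# Tone mapping baked in at the camera: both values clip to the same level.
baked_sky, baked_ball = to_display(sky), to_display(ball)

# Scene-referred data: the broadcaster pulls exposure down 3 stops first.
graded_sky = to_display(apply_exposure(sky, -3))
graded_ball = to_display(apply_exposure(ball, -3))

print("baked in:", baked_sky, baked_ball)
print("regraded:", graded_sky, graded_ball)
```

Once both values have been clipped to the same level, no later adjustment can separate them again, whereas the scene-referred values remain distinct after the exposure change.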

In the Futures Park at NAB 2015, goHDR was showing live capture with a Canon HDR camera that allowed bright highlights or dark details to be extracted in real time and shown on an HDR display. (CC)

Analyst Comment

Notwithstanding the potential improvement over a single-stream approach, critics were quick to say that production and transmission infrastructures are not equipped to handle the parallel content streams needed with a two-stream approach, and that operators would likely be unwilling to commit the capex needed for it. Indeed, enhancement-stream codecs, such as MPEG-SVC, have historically succumbed to the reality that higher capacity (and increasing horsepower to handle more complex compression) always seems to trump any perceived advantages. (agc)