Craig Peterson is CEO of VEFXi, a company working in VR that we have reported on several times this year, starting with MWC.

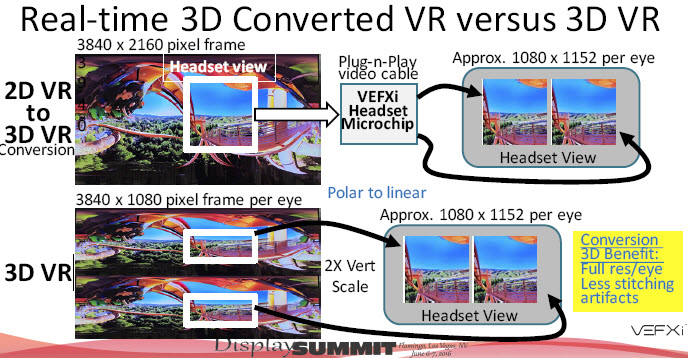

He started by saying that there are two kinds of VR. Animated VR is often 3D, but real-world VR is mostly 2D. 3D content normally has half the vertical resolution, and filming VR in 3D is harder than filming it in 2D. Real-time 3D capture takes a lot of computing power, so nobody is really doing it yet.
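As a rough illustration of where the half vertical resolution comes from: stereo VR video is often packed over-under (top-bottom), with both eye views stacked in a single frame, so each eye gets the full width but only half the height. The sketch below assumes that packing and assumes the left eye sits in the top half; the function names are hypothetical.

```python
# Minimal sketch, assuming over-under (top-bottom) stereo packing with the
# left-eye view in the top half. A frame is modelled as a list of pixel rows.


def per_eye_resolution(width, height, packing="over-under"):
    """Return the (width, height) available to each eye for a packed frame."""
    if packing == "over-under":
        return width, height // 2      # full width, half height
    if packing == "side-by-side":
        return width // 2, height      # half width, full height
    raise ValueError(f"unknown packing: {packing}")


def split_over_under(frame):
    """Split an over-under frame (list of rows) into (left, right) views."""
    if len(frame) % 2:
        raise ValueError("frame height must be even")
    half = len(frame) // 2
    # Top half -> left eye (assumed convention), bottom half -> right eye.
    return frame[:half], frame[half:]


if __name__ == "__main__":
    print(per_eye_resolution(3840, 2160))   # (3840, 1080)
    tiny = [[row] * 4 for row in range(6)]  # 4 x 6 toy frame
    left, right = split_over_under(tiny)
    print(len(left), len(right))            # 3 3
```

Side-by-side packing is included for comparison: it halves the horizontal resolution instead.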

You can see a 96° field of view in the Oculus Rift. The total format for 360° is 3840 x 2160, which is why the displays are 1080 x 1152 per eye. You can record 3D at half resolution or create 3D from full-resolution 2D (which is what VEFXi does).
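One way to sanity-check these figures, assuming the 3840 x 2160 frame is an equirectangular projection (3840 pixels spanning 360° horizontally, 2160 pixels spanning 180° vertically) and that the 96° view applies on both axes, is to work out how many source pixels fall inside the view. The helper name is hypothetical.

```python
# Back-of-envelope check, assuming an equirectangular 360° frame in which the
# 3840 horizontal pixels span 360° and the 2160 vertical pixels span 180°.


def pixels_for_fov(format_px, format_deg, fov_deg):
    """Source pixels that fall inside a field of view of fov_deg degrees."""
    return format_px * fov_deg / format_deg


if __name__ == "__main__":
    horizontal = pixels_for_fov(3840, 360, 96)  # 1024.0
    vertical = pixels_for_fov(2160, 180, 96)    # 1152.0
    print(f"{horizontal:.0f} x {vertical:.0f} source pixels in a 96° view")
```

On these assumptions the vertical result matches the 1152 quoted, while the horizontal result (1024) is close to, but not the same as, 1080, so treat this as a plausibility check rather than a description of how the displays were specified.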

There are challenges in 3D VR filming. For example, one problem for camera stitching is that the stitch for one eye falls in the sweet spot of the other eye. The alternative is to reconfigure the cameras, but that is also challenging.

Another problem, Peterson said, is that there are no stitching algorithms that take depth into account: all the current ones are designed with focus at infinity, and that causes problems when you move your point of view. You also have to deal with differences between the left and right cameras. It is also hard to make the cameras behave as though you are looking straight ahead when you are looking at a part of the image that is off axis. 3D conversion from 2D content can sort that out, provided it is good enough.
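To see why stitching that assumes infinity breaks down for nearby objects, consider a simplified model (an assumption, not Peterson's analysis): two adjacent cameras a baseline apart both see a point on the line midway between them. The angle the baseline subtends at the point is the direction mismatch a stitch at infinity ignores, and it grows quickly as the point gets closer. The 0.1 m baseline and the 3840-pixel panorama width used below are illustrative values, and the function names are hypothetical.

```python
import math

# Simplified model (assumption): a point on the perpendicular bisector between
# two camera centres baseline_m apart, at distance_m. A stitch that assumes
# infinity treats both cameras' rays to the point as having the same direction;
# the angle between those rays shows up as misalignment at the seam.


def seam_error_deg(baseline_m, distance_m):
    """Angle (degrees) subtended by the camera baseline at the point."""
    return math.degrees(2 * math.atan(baseline_m / (2 * distance_m)))


def seam_error_px(baseline_m, distance_m, pano_width_px=3840):
    """Seam misalignment in pixels for an equirectangular panorama."""
    px_per_degree = pano_width_px / 360.0
    return seam_error_deg(baseline_m, distance_m) * px_per_degree


if __name__ == "__main__":
    for d in (1, 10, 100):
        print(f"object at {d:>3} m: ~{seam_error_px(0.1, d):.1f} px seam error")
```

The error falls roughly in proportion to distance, which is why a stitch tuned for infinity looks fine on distant scenery and fails on close subjects.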

Peterson is not sure whether headsets will be used in high volume in the long term, but 360° images will be (for example, captured by sweeping a smartphone around). There will be new devices next year for viewing this kind of 360° content.

Hand-crafted 3D can look great, but costs up to $30K per minute, according to Peterson. VEFXi was doing 3D conversion, but found it hard to get good quality with ‘pop’, so it started again with its technology. The new solution has better depth analysis and can generate more extreme 3D with few artefacts. For live gaming, the processing has to be done in under 4 ms, he said, and that is a challenge.
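To put the 4 ms figure in context: a converter sitting between the system and the display presumably adds its processing time on top of rendering, so it consumes part of each frame interval. The sketch compares 4 ms with the frame time at a few refresh rates; 60, 90 and 120 Hz are chosen here for illustration only, since the talk did not specify one.

```python
# Rough latency-budget check. The refresh rates are illustrative assumptions;
# the source only states the < 4 ms processing target.


def frame_time_ms(refresh_hz):
    """Duration of one frame in milliseconds."""
    return 1000.0 / refresh_hz


def budget_share(processing_ms, refresh_hz):
    """Fraction of one frame interval consumed by processing_ms."""
    return processing_ms / frame_time_ms(refresh_hz)


if __name__ == "__main__":
    for hz in (60, 90, 120):
        share = budget_share(4.0, hz)
        print(f"{hz:>3} Hz: frame {frame_time_ms(hz):5.2f} ms, 4 ms = {share:.0%} of it")
```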

So the company developed a new chip with a dataflow-type architecture and with quality comparable to hand-crafted 3D. From a hardware point of view, it appears to the system as though it were just an LCD video display cable.
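VEFXi did not describe how its chip generates the second view. For readers unfamiliar with 2D-to-3D conversion, a common textbook approach is depth-image-based rendering (DIBR): estimate a depth value for each pixel, turn depth into horizontal disparity, shift pixels left and right to build the two eye views, and fill the holes left behind. The sketch below works on a single scan line, with depth normalized so that 0.0 is nearest and 1.0 farthest. It is a generic illustration, not VEFXi's method, and the parameters `max_disparity` and `convergence` are hypothetical. A larger `max_disparity` gives more ‘pop’ but also larger holes, one source of the artefacts mentioned above.

```python
# Generic depth-image-based rendering (DIBR) for a single scan line.
# Illustrative sketch only; not VEFXi's (undisclosed) algorithm.


def fill_holes(line):
    """Fill None gaps with the last valid pixel to the left (or the first valid one at the start)."""
    out = list(line)
    last = None
    for i, value in enumerate(out):
        if value is None:
            out[i] = last
        else:
            last = value
    first = next((v for v in out if v is not None), None)
    for i, value in enumerate(out):
        if value is not None:
            break
        out[i] = first  # only leading gaps remain at this point
    return out


def render_stereo_line(pixels, depth, max_disparity=8.0, convergence=0.5):
    """
    pixels: pixel values for one scan line.
    depth: same length, 0.0 = nearest, 1.0 = farthest (hypothetical convention).
    Points nearer than `convergence` get crossed disparity and appear in front
    of the screen; farther points appear behind it.
    Returns (left_line, right_line).
    """
    width = len(pixels)
    views = {"left": [None] * width, "right": [None] * width}
    zbuf = {"left": [float("inf")] * width, "right": [float("inf")] * width}
    for x, (value, z) in enumerate(zip(pixels, depth)):
        disparity = max_disparity * (convergence - z)  # > 0 for near points
        shift = round(disparity / 2)
        # Crossed disparity: the left-eye image of a near point sits to the right.
        for eye, target in (("left", x + shift), ("right", x - shift)):
            if 0 <= target < width and z < zbuf[eye][target]:
                views[eye][target] = value  # nearer points win (occlusion)
                zbuf[eye][target] = z
    return fill_holes(views["left"]), fill_holes(views["right"])
```

With the depth set to the convergence value everywhere, both outputs equal the input line (a flat image at screen depth). Practical converters add far more sophisticated depth estimation and hole filling than this.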

The company had worked on a ‘deep thinking’ architecture based on neural nets, but that was not stable enough: the network could be trained to produce 3D, but different images did different things. So, again, the company changed direction, this time to an ‘application-specific neural net’ concept.

The firm also needed a good high-resolution display, and these have now started to arrive. New lenses for these displays are also just arriving, so there should be a boost in the VR market over the next year.

What if you could watch 2D images in 3D? Could it be a kind of AR? Yes, Peterson said, if you use touchless haptics to guide the user.

Peterson then went through applications for 3D, including arcade games and advertising. At the Samsung developers’ conference, the company talked about VR ‘holodecks’, which indicates a trend. Peterson also showed 3D autostereo systems using LEDs.

Analyst Comment

The company demonstrated automatic 3D creation from 2D content, with its chip inserted between the system and the display, and I have to admit that I was impressed by the quality. (BR)