A special symposium on Virtual Reality (VR) was held to open the 2015 SMPTE fall conference here in Hollywood, California. There were a number of good papers, a nice panel and some demos that showed the level of activity and interest in VR. However, despite all the interest in the topic, there are no SMPTE working groups developing standards – even though the need exists.

Where are standards needed? The format of the delivered pixels is one area, and how signals will be encoded and distributed is another, noted Lucas Wilson of Assimilate. He has done almost 20 VR shoots and has learned a lot about what works, what doesn’t and where the holes are. For example, he said that there are a number of announced VR cameras out there, but few actual high-quality rigs. Jaunt has the Jaunt One, NextVR uses a 3D rig based on RED cameras and there are a host of GoPro rigs, but these fall short for higher-quality applications. Nevertheless, one of the suppliers of platforms for mounting GoPros – 360 Heros – says it has sold 10,000 of these platforms to date, so there are a lot of people experimenting with VR capture – at least at this level.

Another key takeaway – VR is a mixture of passive TV and film production tools and techniques and active game engine tools and techniques. That makes creating VR content really hard, as the tools that blend these separate elements don’t really exist. Tool development has to advance to improve efficiency and reduce costs – and standards may help here as well.

The VR workflow is not that different from the traditional one, apart from the insertion of the stitching steps, noted Wilson. But stitching is perhaps the biggest problem, according to visual effects specialist Vicki Lau. And movement across cameras can be even more difficult – especially in 3D. It also appears that most lighting and camera effects don’t work well in VR. For example, a lens flare can look very odd when you move your head, as can lighting effects. It may sometimes be best to replace these natural elements with CG elements that can better imitate a more natural experience.

A speaker from GoPro described the company’s new Odyssey 360-degree camera rig, developed with Google for VR capture. Slapping a bunch of GoPros together with rubber bands and gaffer tape is easy, but shrinking the rig into a tight package that controls thermal variations and has pixel-level synchronization is not. A 4K image is barely enough pixels, so the Odyssey creates an 8K 3D image (32 Mpixels) in an over/under format. But for long-term needs, 64 or 128 Mpixel images will be needed to truly suspend disbelief and give a real feeling of presence. Naturally, this puts a big strain on any delivery pipeline, let alone the processing pipeline.
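To get a rough feel for that strain, here is a back-of-the-envelope sketch of the uncompressed data rate at the frame sizes mentioned above. The 30 frames per second and 24 bits per pixel are assumptions chosen for illustration, not figures from the talk, and real pipelines would of course compress the signal.

```python
def raw_bitrate_gbps(megapixels, fps=30, bits_per_pixel=24):
    """Uncompressed video data rate in gigabits per second.

    fps and bits_per_pixel are illustrative assumptions, not figures
    quoted at the symposium.
    """
    bits_per_frame = megapixels * 1_000_000 * bits_per_pixel
    return bits_per_frame * fps / 1e9


# Frame sizes quoted in the article: 32 Mpixels today, 64 or 128 Mpixels later.
for mp in (32, 64, 128):
    print(f"{mp} Mpixels/frame -> {raw_bitrate_gbps(mp):.2f} Gbit/s uncompressed")
```

Under these assumptions, a 32 Mpixel frame already works out to about 23 Gbit/s before compression, and the rate scales linearly with pixel count.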

Jan Nordmann from Fraunhofer USA highlighted the need to include immersive audio in the experience. Fraunhofer was demonstrating the new MPEG-H standard, which allows the delivery of object- or channel-based audio to mobile users. In a VR environment, most people will use headphones, and here the requirement is to deliver binaural rendering that can place a sound at any point around the user and tie the rendering to the head tracking. That means that some sounds react as you move your head (a voice continues to come from a particular direction, for example) and others do not (background music or crowd noise).
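The distinction between sounds that react to head movement and sounds that do not can be sketched in a few lines. This is a simplified illustration of the idea only, not the MPEG-H API or Fraunhofer's renderer: the function name, the `world_locked` flag and the yaw-only geometry are all hypothetical, and a real binaural renderer would go on to apply head-related filtering for the resulting direction.

```python
def rendered_azimuth(source_azimuth_deg, head_yaw_deg, world_locked):
    """Direction (degrees, relative to the listener's nose) at which to render a sound.

    Hypothetical helper for illustration. Angles are measured clockwise,
    so positive values are to the listener's right.
    """
    if not world_locked:
        # Head-locked sound (e.g. background music): ignores head tracking.
        return source_azimuth_deg
    # World-locked sound (e.g. a voice): counter-rotate by the head yaw,
    # then wrap the result into the range [-180, 180).
    relative = source_azimuth_deg - head_yaw_deg
    return (relative + 180.0) % 360.0 - 180.0


# A voice fixed 30 degrees to the right in the scene.
print(rendered_azimuth(30.0, 0.0, world_locked=True))   # listener facing forward
print(rendered_azimuth(30.0, 30.0, world_locked=True))  # listener turned toward the voice
# Background music stays put no matter where the listener looks.
print(rendered_azimuth(0.0, 90.0, world_locked=False))
```

When the listener turns 30 degrees toward the voice, the voice is rendered straight ahead, while the music keeps its position relative to the head.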

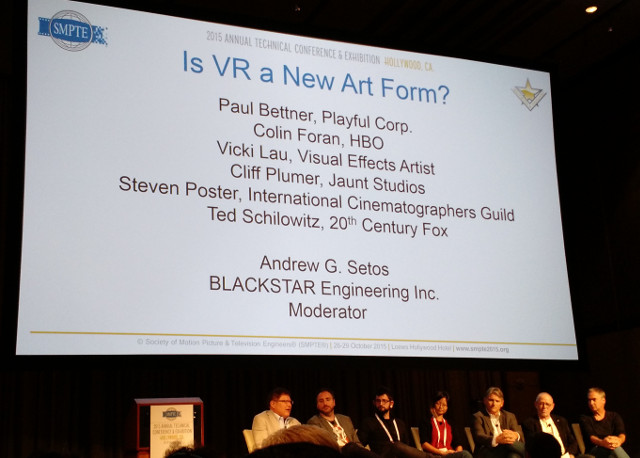

In a lively panel discussion, the group was asked, “Is VR a new Art Form?” The answers were yes and no, depending upon who responded. Those who say it is point to the need to create new storytelling techniques, as most of the basic filmmaking tools and techniques do not apply in VR. Others noted that spherical content and immersive entertainment have existed for years – mostly at theme parks or planetariums – so there is precedent for creating this type of content.

Ted Schilowitz from 20th Century Fox described how the studio is working on a VR experience based on the movie The Martian, to be released in early 2016. He noted that people engage in VR experiences only for short periods now, but this will likely expand in the future. Fox is planning a 20-30 minute basic VR experience, but this could last for 2 hours if you explore all the branches, he said. (Note that work in Germany, as we reported, is showing real human factors problems with extended use of VR [3IT5 AR Needs Touch and Short Sessions]. – Man. Ed.)

In the companion demo room, Fox showed the first VR short it made, based on the movie Wild, on a Gear VR headset. Most liked the experience, but the very mediocre image quality was quite apparent, as was the lack of good spatial audio cues. One good thing I noticed was that the characters appeared life-sized in the 3D video, so I felt like I was sitting on a rock next to them.

Schilowitz made an interesting comment about this clip, noting that many people have told him that they keep thinking about the VR experience for days afterwards and that the memory of it imprints differently in the brain. I will have to see if that is true for me as well.

All agreed that the tools and hardware are getting better very quickly, but it is the creative talents that need to drive development, not technology.

The panel talked a lot about the entertainment and gaming value of VR, but also said that its use in journalism and tourism could be big commercial drivers – as well as pornography.

Steve Poster, ASC, worried about the sociological implications of being immersed in a VR environment for hours at a time. “Should we be doing brain scans of people experiencing VR to see what is being activated?” he asked.

VR also presents a different set of priorities in production and display compared to traditional methods. For example, small quantization errors that lead to banding, or differences in the gamma curve between the left- and right-eye images, are more visible in VR than on a direct-view display. On the other hand, viewers seem to be less sensitive to variations in color temperature and brightness, even from eye to eye, in the VR environment.

But we have a long way to go to get to compelling VR experiences, said Layla Mah, lead architect for VR and advanced rendering at AMD, in her keynote address at the event. Most important is the compute power needed – a petaflop, or a quadrillion floating point operations per second. We are at teraflops today. And HMDs have to evolve to “untethered devices with a form factor somewhere between a contact lens and a pair of regular glasses.” But we probably need to move faster than Moore’s Law to get to this level in a reasonable time frame.
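A quick calculation shows why Moore's Law pacing looks too slow for the gap between teraflops and a petaflop. The sketch below assumes a starting point of one teraflop and a doubling of compute every two years; both numbers are illustrative assumptions rather than figures from the keynote, and Moore's Law strictly describes transistor counts rather than delivered compute.

```python
import math


def years_to_reach(target_flops, current_flops, doubling_period_years=2.0):
    """Years needed to grow from current_flops to target_flops,
    assuming compute doubles once every doubling_period_years."""
    doublings = math.log2(target_flops / current_flops)
    return doublings * doubling_period_years


# Assumed starting point: 1 teraflop (1e12). Target: 1 petaflop (1e15).
years = years_to_reach(1e15, 1e12)
print(f"About {years:.1f} years at one doubling every two years")
```

A 1,000-fold increase takes roughly ten doublings, so under these assumptions the wait is on the order of two decades.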

Analyst Comment

VR is clearly a very hot topic in Hollywood and around the globe, with lots of money pouring into products, tools and content. But it is very early still, and the narrative techniques, hardware, software, business models and more are all experiencing rapid change and experimentation. In many ways it feels like the rush to 3D a few years back, so we have to wonder if headsets with insufficient image and audio quality will come to market with immature content and make a thud of a debut with consumers. 3D receded for these reasons and because of the need to wear glasses. VR requires a full HMD, so will things be different this time once you get past that first wow experience? I really don’t know. (CC)