Camera/Camcorder – Resolution of 4K or more, wide color gamut (WCG) and high frame rates (HFR) are only some of the requirements a camera must meet to acquire the next generation of images; high dynamic range (HDR) is needed as well.

This is a pressing topic in the SMPTE community, as evidenced by Chris Chinnock’s recent six-part article on HDR for Display Central, written after the SMPTE conference held October 20 – 23 in Hollywood. Besides his HDR articles, he also wrote four articles on WCG and three on HFR, plus several others, for a total of 19 on the SMPTE conference.

In the first of these articles, he said, “Nevertheless, a panel of colorists, DPs and special effects experts all seemed to agree that if you are going to create HDR content, it is best to capture in HDR rather than try to create HDR content in a post-production process. For example, once a bright area is clipped in capture, there is not much that can be done to restore the details in that bright area in post. The same goes for dark details”.

This would seem to go almost without saying, but how do you capture high dynamic range content without clipping the highlights or excessive noise in the low lights?

In the Dolby Vision system, the luminance range is from about 0.01 – 0.02 nits to 2000 nits, a total of about 17 – 18 stops of dynamic range in camera parlance. Conventional high-end digital cinema cameras have 11 – 14 stops of dynamic range. For example, the specifications for the ARRI Alexa 65 camera, perhaps the highest-end camera available for cinema acquisition today, say it has “>14 stops” of dynamic range. How do you acquire these images without either saturating the bright scenes or having excessive noise (or no image at all) in the dark scenes? The ARRI Alexa 65 leaves a gap of about 3 – 4 stops (8x – 16x in light levels) between what it can acquire and Dolby HDR targets.
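The stop arithmetic in this paragraph is easy to check. The following is a minimal Python sketch, assuming only that one stop is a factor of two in light level; the function name `stops` is illustrative and does not come from any camera or Dolby tool.

```python
import math

def stops(max_level: float, min_level: float) -> float:
    """Dynamic range in stops: each stop is a doubling of light."""
    return math.log2(max_level / min_level)

# Display luminance range quoted in the text, in nits.
peak_nits = 2000.0
for black_nits in (0.01, 0.02):
    dr = stops(peak_nits, black_nits)
    print(f"{black_nits} to {peak_nits:.0f} nits: {dr:.1f} stops")

# Gap between a 14-stop camera and a 17- or 18-stop target,
# expressed as a ratio of light levels.
camera_stops = 14
for target_stops in (17, 18):
    gap = target_stops - camera_stops
    print(f"gap of {gap} stops = {2 ** gap}x in light level")
```

With the quoted luminance figures, the range lands between roughly 16.6 and 17.6 stops, consistent with the approximate figure in the text.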

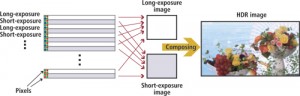

One approach to this is to acquire each image twice: once underexposed and once overexposed. Throw away the clipped bright regions of the overexposed frame and what remains is the low-light detail with low noise. Throw away the noisy dark regions of the underexposed frame and what remains is the highlight detail, without clipped whites. Combining the two gives a single image that covers the full range.

This approach works but it has two problems. First, it involves acquiring the image twice and requires at least double the frame rate to implement. The Alexa 65 is a good camera, but it won’t do the 48 Hz acquisition this approach requires for 24 fps cinema, let alone the 120 Hz acquisition that would be required for 60 fps TV. The second problem is related: you acquire two different frames. If there is motion in the scene, the two frames won’t show the same image. Motion-compensated image processing will mostly fix this, but that’s one more step along the way to HDR content.
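The two-exposure merge can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not any camera maker's pipeline: pixel values are linear and normalized to 0..1, the scene is static, and the names `merge_bracketed`, `exposure_ratio` and `clip` are hypothetical.

```python
def merge_bracketed(under, over, exposure_ratio, clip=0.95):
    """Merge two linear frames (values 0..1) of the same static scene.

    under: short-exposure frame (keeps highlights, noisy shadows)
    over:  long-exposure frame (clean shadows, clipped highlights)
    exposure_ratio: how many times more light 'over' received than 'under'
    Returns values on the scale of the long exposure, so results may exceed 1.0.
    """
    merged = []
    for u, o in zip(under, over):
        if o < clip:
            merged.append(o)                   # long exposure valid: low-noise value
        else:
            merged.append(u * exposure_ratio)  # clipped: rescale the short exposure
    return merged

# One row of pixels; the long exposure received 16x (4 stops) more light.
under = [0.001, 0.01, 0.05, 0.50, 0.90]
over = [0.016, 0.16, 0.80, 1.00, 1.00]
print(merge_bracketed(under, over, exposure_ratio=16))
```

The hard threshold keeps the example short; a practical merge would blend the two frames near the clip point and would first compensate for any motion between them.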

Current photography, the Internet and your computer monitor can’t accurately capture the difference Dolby Vision makes. But these two images, with the Dolby Vision versions on the right, provide a taste of the difference between a display using Dolby Vision and standard HD video. (Photo Credit: Dolby)

Manufacturers of CMOS camera sensors, such as AWAIBA, are working on this issue, developing sensors with higher dynamic range. So far, these sensors have been targeted more at security, medical and scientific applications than entertainment, but no doubt there will be HDR CMOS sensors specifically for TV, cinema and the consumer. As Chinnock pointed out, CMOS sensors have a 2x advantage in speed and a 2.5x advantage in dynamic range over CCD sensors, so CMOS seems to be the logical choice for high-end entertainment applications.

Image (left) produced by an AWAIBA CMOS sensor. The high dynamic range scene is without saturation and artefacts and was acquired by one of the company’s CMOS image sensors. The image shows a human eye under inspection with a slit lamp. (Photo Credit: AWAIBA)

Some CMOS sensor designers use two different classes of pixels, one designed for highlights and the other for low light. This can be done by giving the two types of pixels different integration times. Because both classes are exposed within the same frame, this would largely solve the motion artifact issue, but it would require 2x the pixel count. Toshiba, for example, implements this by using different integration times for alternate rows. Fairchild Imaging has a similar scheme, but uses alternate columns rather than alternate rows.
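To make the alternate-row idea concrete, here is a rough Python sketch. It is an assumption-laden illustration, not Toshiba's or Fairchild's actual processing: even rows are taken to be long-exposure rows, odd rows short-exposure rows, values are linear in 0..1, and the function name `reconstruct_interleaved` is hypothetical.

```python
def reconstruct_interleaved(frame, ratio, clip=0.95):
    """Rebuild one HDR frame from rows with alternating integration times.

    frame: list of rows of linear values in 0..1
    Even rows used a long integration time; odd rows used one 'ratio' times shorter.
    Returns all rows on the long-exposure scale.
    """
    # Bring every row to a common scale first.
    scaled = [row if y % 2 == 0 else [v * ratio for v in row]
              for y, row in enumerate(frame)]
    out = [list(row) for row in scaled]
    height = len(frame)
    for y in range(0, height, 2):              # long-exposure rows only
        for x, v in enumerate(frame[y]):
            if v >= clip:                      # clipped: borrow from short rows
                neighbours = [scaled[n][x] for n in (y - 1, y + 1)
                              if 0 <= n < height]
                if neighbours:
                    out[y][x] = sum(neighbours) / len(neighbours)
    return out

frame = [
    [0.20, 1.00],     # long exposure, second pixel clipped
    [0.0125, 0.25],   # short exposure
    [0.20, 1.00],     # long exposure, second pixel clipped
    [0.0125, 0.30],   # short exposure
]
for row in reconstruct_interleaved(frame, ratio=16):
    print(row)
```

The sketch only repairs clipped highlights in the long-exposure rows; the dark pixels in the short-exposure rows remain noisy, which is one reason each exposure effectively has half the vertical resolution.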

(Right) Toshiba uses a single frame method in which different exposure times are applied to different lines in a frame. (Photo Credit: Vision Systems Design)

CMOSIS has taken a different approach and developed new CMOS image sensor pixels that allow readout of a photodiode with a wide dynamic range while maintaining a linear response to light. After exposure, the photodiode is read out via two transfer gates to two sense nodes. Two signals are then read from each pixel. The first signal reads only the charge transferred to the first sense node, with maximal gain. This sample is used for small charge packets and is read with low read noise. The second sample reads the total charge transferred to both sense nodes, with a lower gain. Pixels with a read noise of 3.3 electrons and a full well charge of 100,000 electrons have been demonstrated, resulting in a linear dynamic range of 90 dB, which corresponds to about 15 camera stops. This is more than the 11 – 14 stops of conventional high-end cameras, although still short of the 17 – 18 stops cited above for Dolby Vision.
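As a check on the arithmetic, the dynamic range implied by the quoted read noise and full well charge can be computed directly. The sketch below assumes the usual sensor convention of 20·log10 of the full-well to read-noise ratio for decibels and log2 of the same ratio for stops. The per-pixel selection function is purely illustrative: `combine_dual_gain` and its `first_node_capacity_e` threshold are hypothetical and not taken from CMOSIS documentation.

```python
import math

def dynamic_range(full_well_e: float, read_noise_e: float):
    """Return (decibels, stops) for a linear sensor."""
    ratio = full_well_e / read_noise_e
    return 20 * math.log10(ratio), math.log2(ratio)

db, stops = dynamic_range(100_000, 3.3)
print(f"{db:.1f} dB, {stops:.1f} stops")

def combine_dual_gain(high_gain_e, low_gain_e, first_node_capacity_e):
    """Choose one of the two readouts for a pixel, both already in electrons.

    high_gain_e: low-noise sample from the first sense node only
    low_gain_e:  sample of the total charge on both sense nodes
    first_node_capacity_e: hypothetical charge level above which the
                           high-gain sample is no longer valid
    """
    if low_gain_e < first_node_capacity_e:
        return high_gain_e   # small charge packet: use the low-noise sample
    return low_gain_e        # large charge packet: use the total-charge sample

print(combine_dual_gain(120.0, 125.0, first_node_capacity_e=5_000))
print(combine_dual_gain(5_000.0, 60_000.0, first_node_capacity_e=5_000))
```

With the quoted figures this gives roughly 89.6 dB, or just under 15 stops.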

Andrew Wilson has written an article for Vision Systems Design that goes into some of the details of current and next-generation HDR CMOS sensors. It is a good next step for anyone interested in understanding HDR cameras. – Matthew Brennesholtz