It’s been a while since we met with Synaptics, so we had a lot to catch up on. Historically, the company was known for its touch technology, which is widely used in the touch pads of notebook systems. About two years ago (June 2014), the firm bought the display driver business from Renesas and has since been working on integrating its touch and display circuitry.

The first demonstration that we looked at was of force sensing, using a separate sensor to measure the deflection of a panel. It uses a capacitive (but not procap) sensor technology that is also controlled by a single Synaptics chip, which provides a single data stream with X, Y and Z data.

Next, we looked at a Xiaomi phone that supports touch gestures made on the sides of the display. Synaptics said that it already has to track touch on the edges of displays so that unintended edge touches can be rejected. However, by optimising the sensitivity, gestures along the edge of the phone can be used for input. (This reminded me of the use of edge touch shown by Sharp at SID last year and suggested for automotive applications – Man. Ed.)

Pens and styli are becoming more important in the market and we looked at the development of pen input. The firm can detect passive styluses down to 1.5mm but is also developing a new stylus/pen that is “active” or “hybrid passive”. The pen does not have any real power source, but “does have electronics”. The pen can, effectively, report a number of “states”. At MWC, these states were being used to communicate pen colours, which are set by a switch on the pen.

There is a lot of work going on in the background, we heard, to progress the Universal Stylus Initiative, which is developing a standardised API to allow styluses to be used across a range of compatible hardware platforms (in the same way that USB works the same over multiple platforms).

Next, we had a quick look at the way that Synaptics supports swiping on the keyboard of the Blackberry Priv. This effectively turns the keyboard into an additional surface for simple touch gesture input. For example, swiping from left to right can mean ‘next page’.

The company has also had some success with wearables, especially smartwatches, and has design wins in the Fossil Q and the very expensive Tag Heuer device. It also has a design win in the Google Pixel C, a convertible system that uses USB Type-C and runs Android on a 10.2″ 2560 x 1800 display. It uses the Synaptics S3370 chip, which integrates the touch controller into the TCon. Synaptics told us it is trying to “make tablets more like smartphones” in terms of the architecture.

Turning to dedicated display drivers (DDICs), the push has been to reduce power consumption. The company was also highlighting its ClearView technology, which makes for smoother gradients in colours (unfortunately, the image we took doesn’t show this as clearly as it was ‘in real life’). New software technology called “Image Studio” has been developed to allow much quicker and easier display calibration. For example, a company may want to get a particular ‘look’ for its brand or product, or to match performance where different displays are being sourced for a single device. Staff told us that the software can quickly get customers ‘90% of the way’ using field engineers.

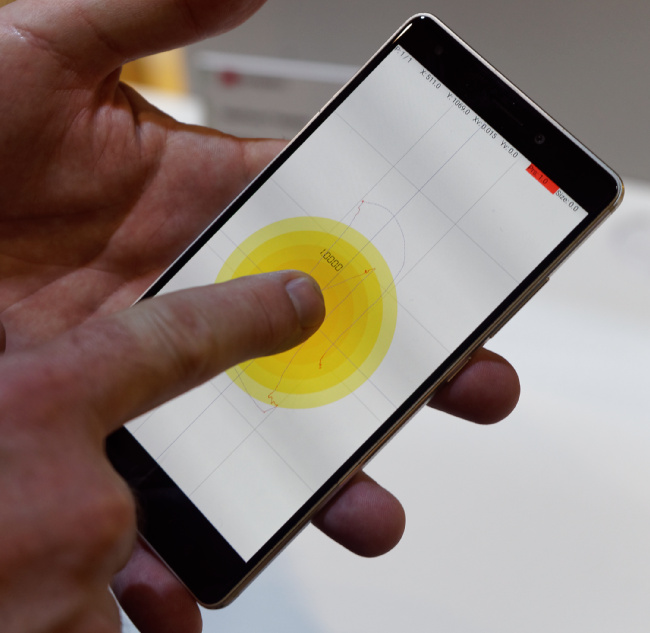

Synaptics has developed force-sensitive sensing using in-cell touch technology. The company told us that it started by looking at the mechanical deflection as a way to detect force, but needed to allow for the change in cell gap in the LCD. Once the firm started to measure that gap, it realised that it could track the force from that data alone. The system can sense 256 levels of force, up to five newtons. It is working with LG, Google, Huawei and ZTE.
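
To put the 256 levels and five newtons in perspective, the arithmetic can be sketched as below. This is only a rough illustration that assumes the levels are spread linearly from zero to five newtons; Synaptics did not describe the actual transfer curve, and the names `FORCE_LEVELS`, `MAX_FORCE_N` and `level_to_newtons` are ours.

```python
FORCE_LEVELS = 256   # number of force levels quoted by Synaptics
MAX_FORCE_N = 5.0    # maximum sensed force quoted, in newtons


def level_to_newtons(level: int) -> float:
    """Map a raw level (0..255) to newtons, assuming a linear scale (our assumption)."""
    if not 0 <= level < FORCE_LEVELS:
        raise ValueError("level out of range")
    return level * MAX_FORCE_N / (FORCE_LEVELS - 1)


# Size of one step in millinewtons under the linear assumption
step_mn = 1000 * MAX_FORCE_N / (FORCE_LEVELS - 1)
print(f"{step_mn:.1f} mN per level")  # prints "19.6 mN per level"
```

On that assumption, each level corresponds to roughly the weight of a two gram mass.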

A different development is work between the company and Leia3D (www.leia3d.com), which produces 3D displays. Synaptics is working with Leia to allow the use of hover gestures to manipulate the 3D images. The technology was being shown on a 5″ 2760 x 1440 display where each of the 3D views (there are 64) is just one pixel in an 8 x 8 array. That means that the 3D resolution is much lower than that of the display, which works by controlling the direction of the backlight and uses a standard LCD cell. The AS3D effect was quite good, but not quite completely seamless between the different views.
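
The resolution penalty can be worked out from the figures quoted. The sketch below simply divides the panel resolution by the 8 x 8 view array; the function name `per_view_resolution` is ours, and the calculation assumes that every view gets exactly one pixel per 8 x 8 block, as described.

```python
from typing import Tuple


def per_view_resolution(width: int, height: int,
                        views_x: int, views_y: int) -> Tuple[int, int]:
    """Pixels available to each 3D view when views are tiled in a views_x x views_y array."""
    return width // views_x, height // views_y


# Panel resolution and view array as quoted at the demonstration
w, h = per_view_resolution(2760, 1440, 8, 8)
print(f"{w} x {h} pixels per view")  # prints "345 x 180 pixels per view"
```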

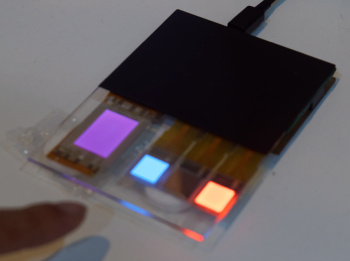

Another demonstration showed passive area OLEDs being used as illuminated switches; Synaptics has combined the control of the OLED with touch detection (pictured).

The firm has been working in automotive applications and has even developed touch for a variety of surfaces, including the steering wheel. An SDK has been developed for apps that can use force input. For example, in this kind of application, pushing with two fingers can be used to zoom out. Alternatively, touch can be used to select a function while pressure is used to activate the selected option.
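
The "touch to select, press to activate" idea can be sketched in a few lines. This is a hypothetical illustration and not the Synaptics SDK: the `TouchSample` type, the `interpret` function, the threshold of 128 and the row of equal-width menu items are all our assumptions.

```python
from dataclasses import dataclass
from typing import Tuple

PRESS_THRESHOLD = 128  # hypothetical force level (0..255) that counts as a press


@dataclass
class TouchSample:
    x: int  # horizontal position in pixels
    y: int  # vertical position in pixels
    z: int  # force level reported alongside the position


def interpret(sample: TouchSample, item_width: int,
              threshold: int = PRESS_THRESHOLD) -> Tuple[int, str]:
    """Return (item index, action): a light touch selects, a firm press activates."""
    item = sample.x // item_width  # which menu item lies under the finger
    action = "activate" if sample.z >= threshold else "select"
    return item, action


print(interpret(TouchSample(250, 40, 30), item_width=100))   # (2, 'select')
print(interpret(TouchSample(250, 40, 200), item_width=100))  # (2, 'activate')
```

A real implementation would probably add hysteresis around the threshold so that a finger hovering near the boundary does not flicker between the two actions.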

Synaptics has developed a high resolution (QHD) display with a touch system that can be used by hobbyists and developers who are interested in developing more specialist devices. The company will develop interfaces for the Raspberry Pi and Arduino. It believes that making the kit available will allow some interesting new applications of high res displays.

Finally, we looked at the fingerprint recognition that is under development. There is a strong move away from ‘swipe’ actions to ‘area sensors’ that detect the fingerprint without movement. The company has developed a sensor that can be operated under as much as 350μm of glass. This may eliminate the need for visible detectors on the front of the phone.

The company also announced a 3.5mm fingerprint detector that can fit on the side of a smartphone.