ST 2094 is quite flexible, allowing windows to be used for specific transforms. For example, one window may apply a different transform to a bright highlight or a dark region of the scene. Metadata sets can also be created for several types of display target. This allows grades to be optimized for an OLED or LCD display with various levels of peak luminance, or, for example, an SDR display with a 709 gamut and 300 nits peak luminance.
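
The window idea can be illustrated with a minimal sketch. It assumes rectangular windows in pixel coordinates and a "first matching window wins" rule; the `Window` class and `apply_windows` function are hypothetical and are not the data structure ST 2094 actually defines.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

RGB = Tuple[float, float, float]
Transform = Callable[[RGB], RGB]


@dataclass
class Window:
    # Hypothetical rectangular window: x0 <= x < x1, y0 <= y < y1 (pixel coordinates).
    x0: int
    y0: int
    x1: int
    y1: int
    transform: Transform

    def contains(self, x: int, y: int) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


def apply_windows(image: List[List[RGB]], windows: List[Window], default: Transform) -> List[List[RGB]]:
    """Apply the first matching window's transform to each pixel, else the default transform."""
    out = []
    for y, row in enumerate(image):
        new_row = []
        for x, pixel in enumerate(row):
            transform = default
            for w in windows:  # assumed priority: earlier windows win
                if w.contains(x, y):
                    transform = w.transform
                    break
            new_row.append(transform(pixel))
        out.append(new_row)
    return out


# Usage: compress a highlight in the top-left pixel, leave the rest untouched.
image = [[(1.0, 1.0, 1.0), (0.2, 0.2, 0.2)], [(0.4, 0.4, 0.4), (0.8, 0.8, 0.8)]]
highlight = Window(0, 0, 1, 1, lambda p: tuple(c * 0.5 for c in p))
print(apply_windows(image, [highlight], lambda p: p))
```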

ST 2094 is aimed at file-based, non-real-time HDR workflows, i.e., not live TV. Live TV is a more difficult use case because broadcast facilities currently have no means of carrying the metadata; SDI transport does not specify a method. As a result, alternative HDR approaches, such as the Hybrid Log Gamma (HLG) concept championed by the BBC and NHK, will get serious attention (see related coverage).

ST 2094 notes that dynamic metadata can be carried in an IMF container. Color Remapping Information (CRI) metadata can also be included in the SEI messages of an HEVC bitstream. This enables a single-layer HDR stream, such as one following the new Ultra HD Blu-ray specification, to deliver metadata that remaps the color volume of the HDR content to other color volumes, such as 709.

SMPTE ST 2094 could be ready in early 2016 and will specify a data structure, a general representation and several “flavors” of color volume transform. These are the -10, -20 and related extensions. The flavors differ in their simplicity of implementation and in the level of control they offer over color rendering.

A number of papers dealt with ST 2094 and related aspects of color transforms. For example, in his presentation, Adobe’s Lars Borg described the two approaches being discussed in ST 2094 as “virtual master” approaches. Their main benefit is that a single HDR grade plus metadata can be used to create HDR and SDR grades for multiple target displays. The file is editable, has no synchronization issues and requires only one archive copy (HDR plus metadata).

The trade-off is that you need smart conversion at or near the target display whenever that display does not match the mastering display. This conversion can be done before the content reaches the home, for example to create an SDR channel, or in the home itself. Smart conversion requires an HDR TV that supports dynamic metadata decoding, or a device such as a set-top box, AVR or gateway. These outboard products can then connect to a legacy SDR TV, using the display’s EDID data to determine how to apply the metadata in the conversion.
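
How an outboard device might pick among several metadata sets can be sketched as follows. This is a hypothetical illustration: the `MetadataSet` and `DisplayCaps` fields stand in for values that would come from the metadata and from the display's EDID, and the rule (match the gamut, then choose the closest peak luminance on a log scale) is an assumed design choice, not behavior specified by ST 2094.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MetadataSet:
    # Hypothetical summary of the target display a metadata set was graded for.
    name: str
    target_peak_nits: float
    target_gamut: str  # e.g. "709" or "2020"


@dataclass
class DisplayCaps:
    # Hypothetical capabilities assumed to be parsed from the display's EDID.
    peak_nits: float
    gamut: str


def select_metadata_set(sets: List[MetadataSet], display: DisplayCaps) -> Optional[MetadataSet]:
    """Pick a set matching the display gamut whose peak luminance is closest in log2 terms."""
    candidates = [s for s in sets if s.target_gamut == display.gamut]
    if not candidates:
        return None  # no suitable set: fall back to some default conversion
    return min(candidates, key=lambda s: abs(math.log2(s.target_peak_nits / display.peak_nits)))


sets = [
    MetadataSet("SDR 709 100 nits", 100, "709"),
    MetadataSet("SDR 709 300 nits", 300, "709"),
    MetadataSet("HDR 2020 1000 nits", 1000, "2020"),
]
print(select_metadata_set(sets, DisplayCaps(250, "709")).name)
```

Comparing luminance on a log scale reflects the roughly logarithmic way brightness is perceived; a linear difference would be a simpler alternative.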

Fortunately, Borg noted, transmitting the metadata requires little bandwidth: about 300 bps, far less than closed captioning data at 9600 bps.

In his paper, Jim Houston of Starwatcher Digital compared the 2020 and 709 color primaries in the CIE 1976 (CIELUV) color space. He found that, in general, very little change in the blue primary is needed to map between 2020 and 709, whereas the red and green primaries need adjustment to fit the smaller color volume. The implication is that a simple RGB gamut shrink from 2020 to 709 would significantly desaturate blues, while reducing only the red and green primaries can introduce hue distortions. Color volume remapping is therefore best done in a color space other than RGB.
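
The primary comparison can be reproduced roughly by converting the published BT.709 and BT.2020 xy chromaticities to CIE 1976 u'v' and measuring how far each primary moves. This is a simplified sketch that only measures chromaticity distance between primaries; it is not necessarily the analysis Houston performed.

```python
import math

# CIE 1931 xy chromaticities of the primaries from ITU-R BT.709 and BT.2020.
BT709 = {"R": (0.640, 0.330), "G": (0.300, 0.600), "B": (0.150, 0.060)}
BT2020 = {"R": (0.708, 0.292), "G": (0.170, 0.797), "B": (0.131, 0.046)}


def xy_to_upvp(x: float, y: float) -> tuple:
    """Convert CIE 1931 xy to CIE 1976 u'v' (the chromaticity coordinates of CIELUV)."""
    d = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / d, 9.0 * y / d


def primary_shift(a: tuple, b: tuple) -> float:
    """Euclidean distance between two xy chromaticities, measured in u'v'."""
    ua, va = xy_to_upvp(*a)
    ub, vb = xy_to_upvp(*b)
    return math.hypot(ua - ub, va - vb)


shifts = {c: primary_shift(BT709[c], BT2020[c]) for c in "RGB"}
for c, d in shifts.items():
    print(f"{c}: {d:.3f}")
```

In this measure the blue primary moves roughly half as far as green and about a third as far as red, consistent with the observation that blue needs the least adjustment.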

William Redmann of Technicolor described the company’s Color Remapping Information (CRI) metadata process, which allows an HDR deliverable plus metadata to generate an SDR grade.

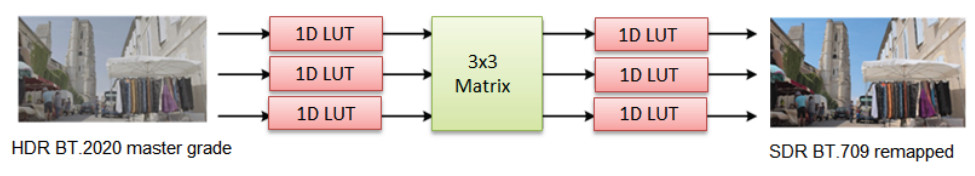

Color transforms are routinely done in professional production today using 3D (RGB) color look-up tables (CLUTs), but Redmann said Technicolor’s discussions with TV SoC providers suggested these were too complex and memory-intensive for consumer electronics devices. The model he presented appears to address these concerns. In fact, CRI has already been adopted in the HEVC edition 2 specification as a Supplemental Enhancement Information (SEI) message. This allows optional carriage of dynamic metadata for creating the SDR grade from the HDR master. CRI, along with appropriate tone mapping of the luminance, is one of the “flavors” being considered for standardization in ST 2094.
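
A back-of-the-envelope count shows why a full 3D CLUT is heavy for a TV SoC compared with a CRI-style model. The sketch assumes a 33×33×33 3D LUT (a common production grid size) and 33-point 1D LUTs for the CRI pre- and post-LUTs, and it counts only stored output values, ignoring pivot inputs and bit depth.

```python
def lut3d_entries(grid: int, channels: int = 3) -> int:
    """Stored output values in a 3D LUT with `grid` nodes per axis."""
    return grid ** 3 * channels


def cri_entries(lut_points: int, channels: int = 3) -> int:
    """Stored values for per-channel pre- and post-LUTs plus one 3x3 matrix."""
    return 2 * channels * lut_points + channels * channels


print(lut3d_entries(33))  # 107811
print(cri_entries(33))    # 207
```

Under these assumptions the CRI model needs roughly 500 times fewer stored values.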

The CRI approach is shown in the figure below. The CRI metadata generation process, conducted during mastering, consists of determining the appropriate parameters for the pre- and post-tone-mapping 1D LUTs and the 3×3 color remapping matrix.
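
The structure of the CRI model (per-channel 1D LUT, then a 3×3 matrix, then another per-channel 1D LUT) can be sketched in a few lines. This is a floating-point simplification with normalized values in [0, 1] and piecewise-linear LUTs; the actual HEVC SEI message carries integer code values and fixed-point coefficients, which this sketch does not model.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

# A 1D LUT as (ascending input pivots, output values); assumed piecewise-linear.
LUT = Tuple[Sequence[float], Sequence[float]]


def apply_lut(x: float, lut: LUT) -> float:
    """Interpolate linearly between pivots; clamp inputs outside the pivot range."""
    xs, ys = lut
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x) - 1
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])


def apply_cri(rgb: Sequence[float], pre: List[LUT], matrix: List[List[float]], post: List[LUT]) -> List[float]:
    """Pre-LUT per channel, then the 3x3 remapping matrix, then post-LUT per channel."""
    a = [apply_lut(rgb[c], pre[c]) for c in range(3)]
    b = [sum(matrix[r][c] * a[c] for c in range(3)) for r in range(3)]
    return [apply_lut(b[c], post[c]) for c in range(3)]


identity_lut = ([0.0, 1.0], [0.0, 1.0])
identity_matrix = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(apply_cri([0.2, 0.5, 0.8], [identity_lut] * 3, identity_matrix, [identity_lut] * 3))
```

During mastering, the job is to choose the LUT pivots and matrix coefficients; at the display, only these cheap per-pixel steps remain.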

In practice, this approach falls into the reference-based numerical derivation method described above. Redmann acknowledged that Technicolor’s simplified approach does not perform as well as global optimization, but the latter is too computationally intensive, so “better” may be preferred to “best”.
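
One simple form of reference-based derivation is to fit the 3×3 matrix by ordinary least squares from pairs of HDR-derived pixels and the corresponding reference SDR pixels. This is an illustrative sketch, not Technicolor’s algorithm: it assumes the 1D LUTs are already fixed, so `src` holds pre-LUT outputs and `ref` holds reference values mapped back through the inverse of the post-LUT.

```python
from typing import List, Sequence


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= f * m[col][k]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][k] * x[k] for k in range(r + 1, 3))) / m[r][r]
    return x


def fit_matrix(src: Sequence[Sequence[float]], ref: Sequence[Sequence[float]]) -> List[List[float]]:
    """Least-squares 3x3 matrix M minimizing the sum of ||M s - r||^2 over (s, r) pairs."""
    # Normal equations (S^T S) m_row = S^T r_channel, solved once per output channel.
    ata = [[sum(s[i] * s[j] for s in src) for j in range(3)] for i in range(3)]
    rows = []
    for out in range(3):
        atb = [sum(s[i] * r[out] for s, r in zip(src, ref)) for i in range(3)]
        rows.append(solve3(ata, atb))
    return rows
```

A global optimization would instead adjust the LUTs and matrix jointly against the reference, which is exactly the more expensive route Redmann set aside.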

To develop the CRI algorithms, Redmann used reference material graded in both HDR and SDR. The CRI algorithms were applied to the HDR images to generate SDR images, which were compared with the reference SDR grade using PSNR, SSIM and DeltaE to assess how faithful the conversion was. This process was repeated until suitable images were obtained. Redmann showed results for about a dozen pieces of content; half of the sequences showed a DeltaE of one JND, meaning the color changes are imperceptible. Human evaluation of the images also produced favorable results.
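
Two of the comparison metrics are simple enough to sketch. The article does not say which DeltaE formula was used; the sketch uses the CIE 1976 formula (Euclidean distance in CIELAB) for simplicity, whereas CIEDE2000 would be more perceptually uniform. SSIM is omitted for brevity.

```python
import math
from typing import Sequence, Tuple

Lab = Tuple[float, float, float]


def psnr(a: Sequence[float], b: Sequence[float], peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equal-length sample sequences."""
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def delta_e76(p: Lab, q: Lab) -> float:
    """CIE 1976 color difference: Euclidean distance between two CIELAB colors."""
    return math.dist(p, q)


print(psnr([0.0, 0.0], [0.1, 0.1]))
print(delta_e76((50.0, 0.0, 0.0), (50.0, 3.0, 4.0)))
```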

The butterfly image below shows the original SDR grade on the left and the derived SDR grade on the right.

Redmann concluded by noting that CRI is useful not only for file-based workflows; it should be considered for real-time workflows as well.