Four years ago, Snap acquired Adshir’s real-time software-based ray-tracing technology and has now deployed it for use on any smartphone. It may not get the big press that Meta gets with every VR pronouncement, but in many ways, Snap AR is doing a much better job of helping its audience transition to a mixed reality future.

The significance of ray-tracing on smartphones is in the perception. On more powerful devices, like a PC, consumer real-time ray-tracing is driven by gaming, where it’s kind of a badge of honor to say that you have enough hardware power to do it. And, yes, it adds a noticeable bump in image quality and realism. It also comes with the benefit of very expensive GPU power to support it and built-in drivers that let game developers take advantage of the process without loss of game performance.

In this instance, Snap AR is adding the capability in software for use in a social media setting, where it may not need the accuracy of a fully rendered scene, high frame rates, or zero latency. But boy, can it use the shine that you get off of a ray-traced image. Snap AR is using its own proprietary shortcuts in software to create enough of an illusion of ray-tracing to make it a meaningful addition. The addition of ray-tracing helps Snap AR application developers add more complexity and dynamism to their software which, in turn, should increase adoption. While most of that adoption is likely to be contained within Snap’s social media app and viewed on a smartphone, it gives an additional bump in capability to Snap Spectacles, a product that is experiential rather than scientific, meaning it isn’t designed to be a graphics workhorse. What I like about Snap’s approach, as compared to Meta’s, is that it is not looking for a slam dunk, just an AR-adjacent strategy that is enough to create further engagement among its core user base. That still doesn’t mean it will pan out for Snap, but it probably won’t cost them $20 billion either.

The Enormous Cost of Ray-Tracing

Ray tracing is a rendering technique traditionally used in computer graphics to simulate the behavior of light and how it interacts with objects in a 3D environment. It’s devilishly complicated, and that’s one of the reasons the computational workload is so high. The book of tricks and compromises to get an almost ray-traced object within a scene (that may or may not be ray-traced) is a thick one, and pages are being added to it every day. And all that is just for a single static scene. When you add camera and character movement, the workload goes up by the cube at the least. Then increase the display resolution and color depth, and a supercomputer begins to break out in a sweat. However, ray-tracing is used for more than image generation.
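To put rough numbers on that workload, here is a back-of-the-envelope sketch. It is not how Snap or any particular renderer counts rays; the function name `ray_budget_per_second` and the sample and bounce counts are assumptions chosen only for illustration, and the count ignores scene complexity entirely.

```python
def ray_budget_per_second(width, height, samples_per_pixel, max_bounces, fps):
    """Rough upper bound on rays traced per second (illustrative only).

    Assumes every sample spawns one primary ray plus one ray per bounce.
    Real renderers cut this down with many tricks, and the cost of each
    ray also depends on the scene, which this count leaves out.
    """
    rays_per_pixel = samples_per_pixel * (1 + max_bounces)
    return width * height * rays_per_pixel * fps


# One static 1080p frame: 1 sample per pixel, 2 bounces.
still_1080p = ray_budget_per_second(1920, 1080, 1, 2, 1)

# The same settings at 4K and 60 frames per second.
realtime_4k = ray_budget_per_second(3840, 2160, 1, 2, 60)

print(still_1080p)                 # 6220800
print(realtime_4k)                 # 1492992000
print(realtime_4k // still_1080p)  # 240
```

Even under these generous assumptions, moving from a single 1080p frame to 4K at 60 frames per second multiplies the ray count by 240, before any added cost from a moving camera or animated characters.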

In autonomous vehicles, ray-tracing is used to build an accurate 3D model of what the vehicle is “seeing,” one that provides better position and size values for the objects in its environment. Light detection and ranging (lidar) sensors send out laser beams and calculate the features of an object based on the signal that is bounced back. That requires a tremendous amount of computational power, given the movement of the observer in relation to the object being observed, and it is the subject of extensive research on the use of networked computational power, AI, and built-in graphics processing power. A realistic mixed reality situation would combine the exacting quantification of real-world values with ray-tracing algorithms and processes to create superimposed AR imagery that mimics the lighting conditions of its real-world background. That isn’t happening any time soon.
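The ranging part of lidar rests on simple time-of-flight arithmetic: the pulse travels to the object and back, so the distance is half the round trip at the speed of light. A minimal sketch follows; the function name `lidar_range_m` and the 200-nanosecond example are assumptions for illustration, not values from any specific sensor.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # defined value, in vacuum


def lidar_range_m(round_trip_seconds):
    """Distance to a target from a pulse's round-trip time (illustrative).

    The pulse covers the distance twice (out and back), hence the
    division by two. Motion of the sensor and atmospheric effects
    are ignored.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# A hypothetical echo arriving 200 nanoseconds after the pulse was sent
# corresponds to a target roughly 30 meters away.
print(round(lidar_range_m(200e-9), 2))
```

The hard part is everything this sketch leaves out: doing it for a very large number of returns while both the vehicle and the objects around it are moving.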

Ray-tracing AR glasses, if there were such a thing in the truest sense, could provide very realistic and immersive AR experiences, rendering virtual objects in the user’s field of view. However, one major technical challenge is computational power: AR glasses using ray tracing would need powerful hardware, such as a dedicated GPU, to render images at a frame rate high enough to avoid lag or stuttering that would spoil the user’s experience.

Another challenge is display resolution. To create highly realistic images, ray-tracing requires a high level of detail, which means the display resolution needs to be high enough to render the intricate details of virtual objects. Current AR glasses have relatively low display resolutions. Size and weight are also an issue: to house the hardware and battery needed to support ray-tracing, AR glasses may need to be bulky and heavy. Finally, there are concerns about cost. The expense of the hardware required to support ray tracing, as well as the need for high-resolution displays, would make such glasses unaffordable or, at least, only something an Apple stan would pay for. While Snap Spectacles are never going to deliver ray-tracing, they do deliver on price, weight, and applications in the world of Snap.

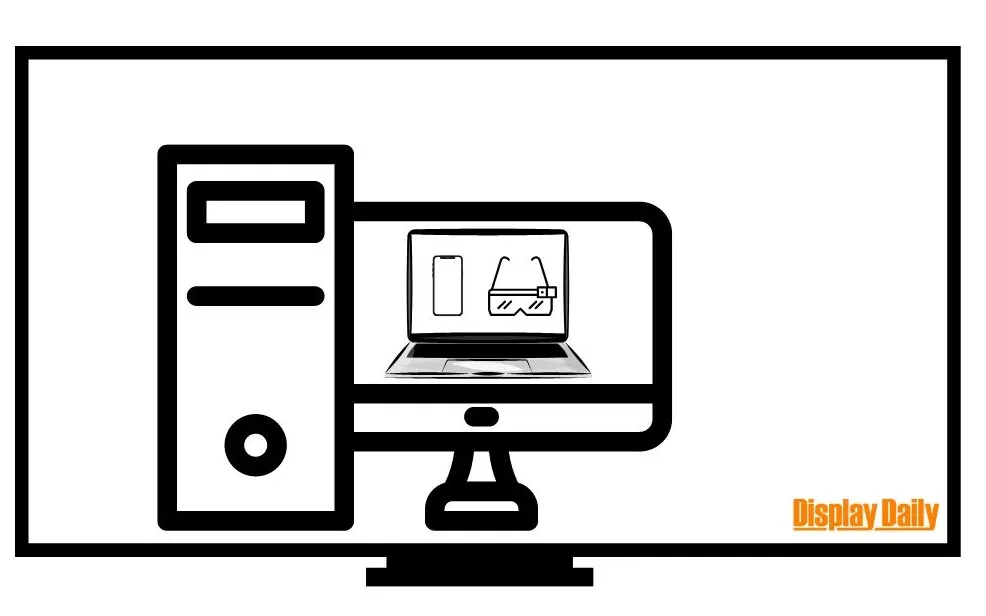

The Matryoshka Doll of Displays

There is a lot of skepticism about the future role of mixed reality and its likely adoption, but even without Spectacles or headsets, Snap users are using the concepts of AR to create posts and share their creativity. We may have to start rethinking the role of devices within the context of mixed reality interfaces. Just as smartphones moved us away from desktops and laptops, mixed reality devices will move us away from smartphones. Yet we will still be tethered to all of these devices, with most of the computational power for each successive iteration of a display coming from the more powerful preceding generation of devices. In our lifetimes, it is unlikely that we will see anywhere near the kind of computational power that is needed for a true mixed reality experience from a headset, never mind AR glasses.

Desktops didn’t disappear because of smartphones. Laptops didn’t disappear because of smartphones. Smartphones won’t disappear because of mixed reality. They all feed off each other. We’re just building a matryoshka doll of devices to which we can add mixed reality headsets and glasses. Don’t be a skeptic. It’s happening.