In his keynote at the GPU Technology Conference (GTC), Jen-Hsun Huang, CEO of Nvidia, said that his company has developed a version of its iRay ray-tracing software that can be used to create photorealistic content for virtual reality. Huang said that iRay VR would be available in June.

In the announcement, Huang explained that real-time, high-quality ray tracing is simply not possible yet because of the computing power needed, so the approach of iRay VR is to take a number of points in the scene (his example used 100 light points) and create a 4K lightfield for each of these points. Each render point takes an hour of computing time on an 8-GPU server, so his demonstration took 100 hours to render.
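The arithmetic behind that 100-hour figure can be written out as a short sketch. The function name and the `num_servers` parameter are hypothetical; the keynote only gave the single-server case, and the idea that probes could be split evenly across several identical servers is an assumption made here for illustration.

```python
def total_render_hours(num_probes: int, hours_per_probe: float, num_servers: int = 1) -> float:
    """Estimate wall-clock hours to pre-render every light probe.

    Assumes probes render independently and are split as evenly as possible
    across identical servers (an assumption, not something stated in the keynote).
    """
    if num_probes < 0 or hours_per_probe < 0 or num_servers < 1:
        raise ValueError("counts must be non-negative and num_servers at least 1")
    # Ceiling division: the busiest server renders this many probes back to back.
    probes_on_busiest_server = -(-num_probes // num_servers)
    return probes_on_busiest_server * hours_per_probe


# The case quoted in the keynote: 100 probes, one hour each, one 8-GPU server.
print(total_render_hours(100, 1.0))
```

Under the same assumptions, eight such servers working in parallel would need 13 hours, because the busiest server would still render 13 of the 100 probes.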

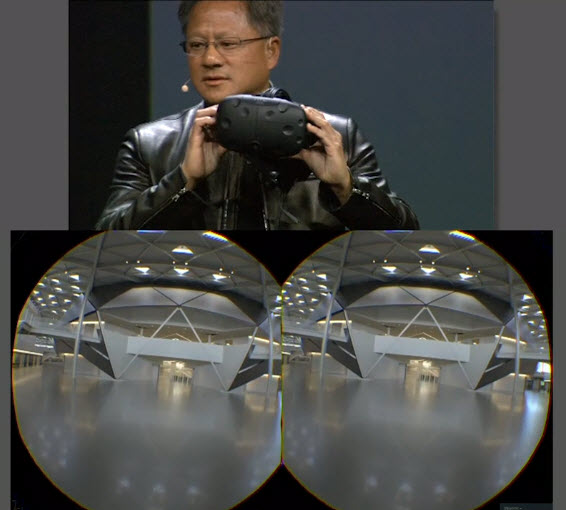

Nvidia uses a Quadro M6000 with a very large frame buffer (reported as up to 24GB), which can hold the data for all the light probes in memory, to work out the viewpoint of each eye and which of the lightfields is most appropriate. The system uses the data to render an image for that eye in real time. The M6000 does the composition and rasterisation and produces a very low-latency pair of images for the VR headset. Huang showed a demonstration of a render of Nvidia’s new headquarters building in California, displayed in real time on an HR headset. The visual quality was impressive and Huang expects architects and car designers to love the technology.
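Huang did not describe how the "most appropriate" lightfield is chosen for each eye. As a rough illustration only, the sketch below assumes the simplest possible rule: pick the pre-rendered probe closest to each eye position. The function names, the 64 mm eye separation and the probe coordinates are all hypothetical and are not taken from iRay VR.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def eye_positions(head: Vec3, right_dir: Vec3, ipd: float = 0.064) -> Tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye) offset from the head centre.

    right_dir is assumed to be a unit vector pointing to the viewer's right;
    ipd is the eye separation in metres (0.064 is an illustrative value).
    """
    half = ipd / 2.0
    left = tuple(h - half * r for h, r in zip(head, right_dir))
    right = tuple(h + half * r for h, r in zip(head, right_dir))
    return left, right


def nearest_probe(eye: Vec3, probes: Sequence[Vec3]) -> int:
    """Index of the probe closest to the eye (assumed selection rule)."""
    if not probes:
        raise ValueError("at least one probe is required")
    return min(range(len(probes)), key=lambda i: math.dist(eye, probes[i]))


# Hypothetical probes placed one metre apart at head height.
probes = [(0.0, 1.6, 0.0), (1.0, 1.6, 0.0), (2.0, 1.6, 0.0)]
left_eye, right_eye = eye_positions((0.5, 1.6, 0.0), (1.0, 0.0, 0.0))
print(nearest_probe(left_eye, probes), nearest_probe(right_eye, probes))
```

With the head halfway between two probes, the two eyes pick different probes. A real system would presumably blend or reproject between lightfields to avoid visible switching, but the keynote gave no detail on that step.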

However, VR is going to be widely available, even where the power of a Quadro is not, so Nvidia has also developed iRay VR Lite, which can take models from 3ds Max (or other iRay-supporting software) and directly create 3D VR experiences for downloading onto mobile devices and lower-end displays. It needs a buffer of up to 8GB. An attendee at the event said that iRay VR Lite was run on a big server at Nvidia’s office, using an internet connection to stream it, but that even then “real time was a stretch”.

Huang also talked a lot about the importance of recent developments, especially “Deep Learning”. AI systems (driven by GPUs, of course) are now starting to be able to do things that programmers may not be able to program directly at all. Systems may be able to support “superhuman” performance without needing a superhuman teacher.

Huang also showed an impressive demonstration of a neural network being used to analyse landscape paintings. After the computer was shown a lot of images, it was able to create surprisingly natural-looking images of “landscapes” or “forests”. Impressive (BR).