Recently I wrote a Display Daily titled “VR/AR Standards – Are We Confused Yet?” Then I attended a SMPTE meeting on the growing body of standards for Video over IP…

One of the problems with Video over IP is that so many standards are required to fit video, which is by definition continuous and very time sensitive, into the existing body of standards for Internet Protocol (IP), which is by definition packetized as well as asynchronous and not time sensitive, at least not to the microsecond level of video. Another problem is relating new Video over IP standards to legacy standards. These legacy standards include digital video, video compression schemes (old and new), video interfaces such as SDI, HDMI and DisplayPort, etc. “Digital video” is a broad topic in itself and includes topics such as video sub-sampling (4:4:4, 4:2:2, 4:2:0, etc.), interlace vs. progressive, aspect ratios, etc.
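To give a sense of scale for the sub-sampling schemes mentioned above, here is a rough Python sketch of the active-picture data rate of uncompressed video. The function name and the choice of 1080p60 at 10 bits per sample are my own illustrative assumptions, not figures from the SMPTE meeting, and the calculation ignores blanking, audio, ancillary data and packet overhead.

```python
# Average number of samples per pixel for common Y'CbCr sub-sampling schemes.
# 4:4:4 keeps full chroma; 4:2:2 halves it horizontally; 4:2:0 halves it
# both horizontally and vertically.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_video_bits_per_second(width, height, fps, bit_depth, subsampling):
    """Return the raw data rate of the active picture in bits per second."""
    bits_per_pixel = SAMPLES_PER_PIXEL[subsampling] * bit_depth
    return width * height * fps * bits_per_pixel


if __name__ == "__main__":
    # Illustrative format only: 1920x1080, 60 frames/s, 10 bits per sample.
    for scheme in ("4:4:4", "4:2:2", "4:2:0"):
        rate = active_video_bits_per_second(1920, 1080, 60, 10, scheme)
        print(f"1080p60 10-bit {scheme}: {rate / 1e9:.2f} Gbit/s")
```

Under these assumptions a single 4:2:2 HD stream works out to roughly 2.5 Gbit/s before any overhead, which is why sub-sampling and timing matter so much once video has to share a packet network.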

Sound and its synchronization with video is another major issue. In addition, you can’t forget ancillary data, aka metadata, that travels along with video. In a broadcast environment, this metadata carries information such as closed-captioned subtitles or instructions on how to process Dolby Vision or HDR10 high dynamic range (HDR) content for display. Fail to carry or show the closed-captioned subtitles correctly and you’re in violation of US law. Fail to transmit HDR metadata correctly and Dolby and many other companies will refuse to use your products. In a production environment, the metadata is different but you still must carry it correctly. This metadata will include such things as the camera used, camera settings, etc. Get these wrong and the video production and post-production communities will refuse to use your products.

At this point let me make it clear: I’m not talking about streaming video over the Internet to consumers. Streaming is big and will be getting much bigger. Cisco has forecast that online video will be responsible for 82% of all consumer Internet traffic in 2021, and live video alone, which is forecast to be about 13% of this consumer traffic, will represent 25 exabytes (25 billion gigabytes) per year. Still, highly compressed streamed video is not the same as the real-time uncompressed or lightly compressed professional video targeted by the SMPTE standards.

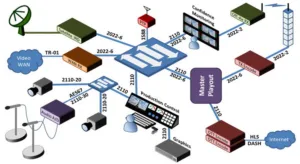

IP Video Ecosystem for Production, Post-Production and Broadcast (Image © 2017 by Wesley D. Simpson. Used with permission.)

At the most recent Society of Motion Picture and Television Engineers (SMPTE) New York section meeting, Wes Simpson, President of Telecom Product Consulting, presented a talk “A Field Guide to IP Video.” He discussed several of the major standards needed to make Video over IP work in a production, post-production and broadcast environment. The ecosystem for these standards is shown in the image, with the labels on the blue connecting lines indicating the standards needed to provide that connectivity. The key standards include:

- SMPTE ST 2022-1, -2
    - Common name: Compressed Video Over IP
    - Released: 2007 (descendant of Pro-MPEG CoP3)
    - Comment: Primarily found in long-haul networks. A single source can feed thousands of destination points. Commonly used for IPTV systems that feed video to consumers and to enterprise desktop applications.

- SMPTE ST 2022-5, -6
    - Common name: Uncompressed SDI over IP
    - Released: 2013
    - Comment: This standard was originally developed for transporting uncompressed video across long-haul networks, such as contribution feeds from remote sports venues, although it is sometimes also used in studios. The standard includes forward error correction (FEC) for protection against short bursts of packet loss.

- SMPTE ST 2110-10 (System)
    - Common name: Professional Media over IP Networks (System and Timing)
    - Released: Expected in 2017 (based on VSF TR-03)
    - Comment: This standard is primarily for in-studio use and forms the foundation of the 2110 series of standards. A variety of other standards in the 2110 series are being drafted to provide detailed specifications on individual media types, such as uncompressed video, uncompressed multi-channel audio, VANC data, etc.

- SMPTE ST 2110-20 (Video)
    - Common name: Professional Media over IP – Uncompressed Active Video
    - Released: Expected in 2017
    - Comment: Primarily for use inside the production facility. Uncompressed, raw pixel data is inserted directly into RTP packets. Carries only the active pixels in a video image. Can transport HDR, WCG, SD, HD, UHD-1, UHD-2, etc. Does not include transport of audio or ancillary data.

- SMPTE ST 2110-21 (Video Timing)
    - Common name: Professional Media over IP – Timing Model for Uncompressed Active Video
    - Released: Expected in 2017
    - Comment: For in-studio use. This standard defines the allowed amounts of variability in packet stream delivery rates for ST 2110-20 senders. Three different models are currently proposed, and these models are especially important in buffer management.

- SMPTE ST 2110-30 (Audio)
    - Common name: Professional Media over IP Networks – PCM Digital Audio
    - Released: Expected in 2017 (derived from AES67)
    - Comment: Provides a common, interoperable format for audio transport. This has been implemented (as AES67) by a large number of device and system manufacturers. Allows fine-grained synchronization between multiple audio channels to preserve sound-field integrity and maintain stereo audio phase relationships.

- SMPTE ST 2110-40 (Ancillary)
    - Common name: Professional Media over IP Networks – Ancillary Data
    - Released: Expected in 2017
    - Comment: Based on the IETF draft document draft-ietf-payload-rtp-ancillary which is, in turn, based on SMPTE ST 291-1. The ST 291-1 ancillary data for SDI is converted into IP packets.

- IEEE 1588-2008
    - Common name: PTP – Precision Time Protocol ver. 2
    - Released: 2008
    - Comment: Part of the IP Studio Network Infrastructure and has applications outside the broadcast community. This standard distributes an accurate clock (within 1 microsecond) to video, audio and other devices to permit synchronization between sources, processors and receivers.

- IETF RFC 4566 (SDP)
    - Common name: SDP – Session Description Protocol
    - Released: 2006
    - Comment: SDP descriptions are text files supplied by senders to give receivers the information they need to properly connect to and identify IP video streams. Information includes IP addresses, UDP port numbers, RTP payload types, clock identifiers, media sampling/channels, etc. As text files, they are human readable, not just machine readable.

- AMWA – NMOS
    - Common name: NMOS – Networked Media Open Specifications
    - Released: Ongoing (releases as needed)
    - Comment: Provides a way for devices to register themselves either with a central registry or on an ad-hoc basis; discover other devices in the network; establish connections between devices; provide identity information about and within streams; and manage timing relationships between streams.
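To show what the human-readable SDP text in the list above looks like, the following Python sketch parses a small session description. The sample description, including its addresses, port and payload type, is made up for illustration and is not taken from any real device or from the ST 2110 documents; only the general `type=value` line layout comes from RFC 4566. The `parse_sdp` helper is likewise hypothetical.

```python
# Hypothetical SDP text; the addresses, port and payload type are invented.
EXAMPLE_SDP = """\
v=0
o=- 123456 1 IN IP4 192.0.2.10
s=Example camera feed
c=IN IP4 239.1.1.1/64
t=0 0
m=video 5004 RTP/AVP 96
a=rtpmap:96 raw/90000
"""


def parse_sdp(text):
    """Extract the fields a receiver needs to join one media stream."""
    info = {"attributes": []}
    for line in text.splitlines():
        if len(line) < 2 or line[1] != "=":
            continue  # skip blank or malformed lines
        kind, value = line[0], line[2:]
        if kind == "s":
            info["session_name"] = value
        elif kind == "c":
            # c=<nettype> <addrtype> <address>[/<ttl>]
            info["address"] = value.split()[2].split("/")[0]
        elif kind == "m":
            # m=<media> <port> <proto> <payload type>
            media, port, proto, payload_type = value.split()[:4]
            info.update(media=media, port=int(port), protocol=proto,
                        payload_type=int(payload_type))
        elif kind == "a":
            info["attributes"].append(value)
    return info


if __name__ == "__main__":
    print(parse_sdp(EXAMPLE_SDP))
```

A real receiver would need to handle multiple media sections and many more attributes; this sketch only pulls out the destination address, UDP port and RTP payload type from a single-stream description.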

As you can see, not all of these standards have been released yet and not all of them are SMPTE standards. Still, they are sufficiently close to final formalization that multiple companies have designed hardware and software to meet these standards. This was demonstrated at NAB by the Joint Task Force on Networked Media (JT-NM) in association with the Alliance for IP Media Solutions (AIMS), as shown in the photo.

JT-NM Interop based on proposed SMPTE ST 2110 standard at NAB 2017 (Photo Credit: Bob Ruhl)

The JT-NM is sponsored by the Advanced Media Workflow Association (AMWA), the European Broadcasting Union (EBU), the Society of Motion Picture and Television Engineers (SMPTE) and the Video Services Forum (VSF). Bob Ruhl is the VSF Operations Manager and currently the JT-NM Secretary. The purpose of JT-NM is to coordinate the standards developed by these four organizations to make sure they are compatible with each other.

AIMS has 32 full members ranging from 21st Century Fox to Utah Scientific and 42 Associate members ranging from Advantech Co. Ltd. to Xilinx, Inc. AIMS Members have worked together to write Application Programming Interfaces (APIs) to facilitate interoperability of their hardware. The overall AIMS goal is to speed the switch from SDI to IP video.

Besides promotion, what AIMS adds to the JT-NM standards is, to a large extent, the APIs. The jointly developed APIs have been tested during multi-vendor developer interoperability tests and were successfully demonstrated at IBC 2016 and NAB 2017. One function of the API is to act as a “registration service.” When a device from one AIMS member needs to connect to a device from another AIMS member, the first device can check with the registration service to determine the second device’s capabilities, whether a connection is possible and what settings to use to connect.
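The registration-service idea described above can be sketched in a few lines of Python. This is a toy in-memory model only: the `Registry` class, its method names, the device names and the format strings are all hypothetical and do not reflect the actual AIMS or NMOS APIs.

```python
class Registry:
    """Toy registration service mapping device names to capability sets."""

    def __init__(self):
        self._devices = {}

    def register(self, name, formats):
        # A device announces which stream formats it can send or receive.
        self._devices[name] = set(formats)

    def common_formats(self, sender, receiver):
        # Formats both devices support; an empty set means no connection.
        return self._devices[sender] & self._devices[receiver]


if __name__ == "__main__":
    registry = Registry()
    registry.register("camera-a", ["1080p60-422-10bit", "2160p60-422-10bit"])
    registry.register("monitor-b", ["1080p60-422-10bit"])
    shared = registry.common_formats("camera-a", "monitor-b")
    print(sorted(shared) if shared else "no compatible format")
```

The point of the sketch is the lookup step: a sender asks the registry what the receiver supports before attempting a connection, rather than discovering a mismatch after the stream has started.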

At the NAB Interop demo, 43 different vendors demonstrated that their 60+ products could correctly exchange Video over IP. This was based not only on the SMPTE and other standards, but also on the APIs developed by AIMS members.

It appears as though Video over IP based on SMPTE and other standards is, in fact, ready for use by the Production and Broadcast communities.

The SDVoE Alliance was announced at ISE in February, 2017. (Photo credit: M. Brennesholtz)

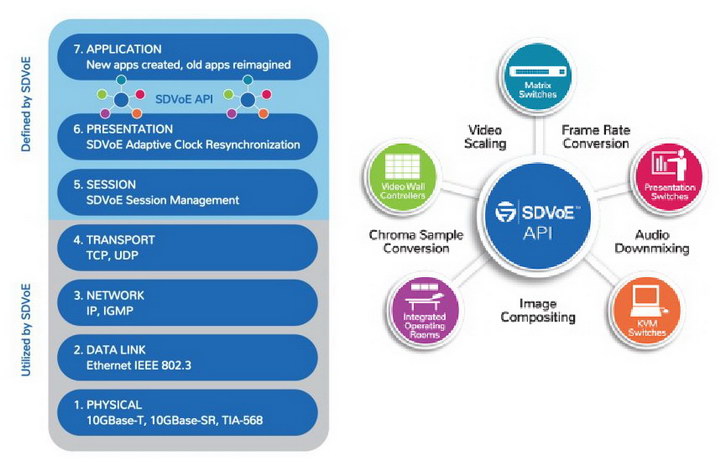

Unfortunately, this isn’t the end of the story. The SDVoE (Software Defined Video over Ethernet) Alliance promotes another system for transferring real-time video over standard Ethernet with very low latency. I first encountered the SDVoE at their founding press conference at ISE in February, and they have a major presence at InfoComm (Booth 3729), which is going on as this is published. I’ve seen multiple press releases from SDVoE members introducing products this week at InfoComm. SDVoE’s main standard is an API that unites all of the compatible products. Some SDVoE member companies also produce dedicated hardware. For example, AptoVision, one of the SDVoE co-founders, makes chipsets that implement SDVoE protocols.

Left: Layers in a SDVoE system. Layers 1-4 are defined by standards organizations. Layers 5-7 are layers based on SDVoE protocols and APIs. Right: Some functions of the SDVoE API. (Image credit: SDVoE)

Justin Kennington, President of the SDVoE Alliance, took the time two days before the start of InfoComm to talk to me about SDVoE. He says his organization’s focus is on Professional AV, not broadcast, hence his organization’s presence at InfoComm. The SDVoE system is not based on SMPTE 2110, although Kennington said it could be incorporated in the future if required. He also said the IEEE 1588 PTP is not used because it is not required by Pro-AV users. SDVoE protocols support a variety of interfaces, including SDI, HDMI, DisplayPort and VGA.

One interface it does not and will not support is HDBaseT. Kennington dismissed HDBaseT as HDMI that uses CAT-5 cables instead of HDMI cables. The SDVoE takes the reference to Ethernet seriously. According to Kennington, while HDBaseT can use CAT-5 Ethernet cabling, it is not “Ethernet” itself. He said that even at the hardware level, it does not use Ethernet protocols. He said if you use a standard Ethernet router in an HDBaseT system you get nothing. Use one in a SDVoE system, and your video passes through transparently.

If you are really, really serious about learning how to move video over Ethernet (e.g. you are the IT manager in an SDI-based post-production house), SMPTE offers an on-line seven-week training course titled “Moving Real Time Video and Audio over Packet Networks.” This includes both on-line studying and coaching by an expert, i.e. Wes Simpson. Upcoming course start dates are July 10th, October 2nd and November 20th. Classes are limited to 20 people and the fee is $450 for a SMPTE member and $550 for a non-member. If you are more interested in SDVoE, they are offering two free training sessions on Thursday, June 15 at InfoComm.

Analyst Comment

It is unfortunate there are two different islands of Video over IP, one from the JT-NM for production and broadcast and the other from the SDVoE Alliance for Pro-AV. What happens if you want to use a broadcast camera and other production equipment at a live Pro-AV event? This happens all the time, e.g. at Award Shows such as the Oscars. What if you are a rental company with customers in both the Rental and Staging and Broadcast communities? I assume there are or will be translators from JT-NM to SDVoE and vice versa, but isn’t that an unnecessary complication to an already complicated business? –Matthew Brennesholtz