The stereotypical view of a video game designer is a 25-year-old male who has been heavily into video games since he was 11. At 14 he created his first video game to share with his friends. Now he drinks Red Bull, eats too many potato chips and works half the night coding in C#. When he isn’t coding, he’s playing video games himself.

I don’t know if that stereotype is accurate or not, but I don’t match any portion of it anyway. So why am I writing a Display Daily article on developing VR games? Primarily because, as far as I can tell, most of the writers for Display Daily are like me and have never developed a video game, let alone a video game targeting VR HMDs. I actually did write a game once, in BASIC, for the HP-85 computer my company gave me because it was already obsolete. My kids even played it a little. At the time, any game, no matter how lame, running on any computer was a novelty.

HP-85 computer from the 1980s with advanced features such as built-in tape drive, thermal printer and CRT. (Credit: Wolfgang Stief via Wikipedia, downloaded 21 Aug 2017)

Game players today are a little more discerning than that. The latest high-powered Intel processors coupled to the latest high-powered Nvidia graphics processors and cards can provide real-time images of surprising quality and realism. Typically, these images are shown on a computer monitor with a rectangular frame, i.e., a monitor much like the one you are reading this article on. This limitation to a flat panel comes from the software and the graphics card, not from the underlying 3D computer graphics data used to produce the images. Even with a flat panel monitor, in most games the player can rotate the image to see what is behind his avatar. In fact, to win the game, the player often must look behind him; otherwise the Bad Guy will sneak up on him.
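The point that the scene data already surrounds the player can be made concrete: turning to look behind the avatar is only a change in camera orientation, not new graphics data. The following is a minimal Python sketch (not engine code) that assumes a Unity-style convention, with y up and +z as the forward direction at zero yaw; the function name `forward_from_yaw` is hypothetical.

```python
import math


def forward_from_yaw(yaw_degrees: float) -> tuple[float, float, float]:
    """Return the unit view direction of a camera turned yaw_degrees about the vertical axis.

    Assumes a Unity-style convention: y is up, +z is forward at yaw 0,
    and a positive yaw turns the view toward +x (to the right).
    """
    yaw = math.radians(yaw_degrees)
    return (math.sin(yaw), 0.0, math.cos(yaw))


# Looking straight ahead, then turning around to check behind the avatar.
ahead = forward_from_yaw(0.0)     # (0.0, 0.0, 1.0)
behind = forward_from_yaw(180.0)  # approximately (0.0, 0.0, -1.0)
print(ahead, behind)
```

On an HMD, the same yaw (together with pitch and roll) comes from head tracking instead of a mouse or thumbstick; the scene data itself is unchanged.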

If many games already have 360° data for the graphics and allow inputs to rotate the image to look in any direction, they should be VR HMD ready, right? My understanding is that some are but most aren’t. If you are serious about video games, you don’t want a kludge – you want your game to run right and run fast – very fast. I’ve been told that 85 frames per second (FPS) is considered a video gaming minimum and 120 FPS is better. Plus, you had better not have any ripping and tearing in the image, whether it’s on a flat panel or a VR HMD. While VR is growing fast, the main money in video games is still in PCs and flat panel monitors, so there are relatively few sophisticated games that run only on VR HMDs.
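Those frame rates are easier to reason about as a time budget: at a given FPS, all game logic and rendering (for both eyes, on an HMD) has to finish within 1000/FPS milliseconds. Here is a small Python sketch of that arithmetic; `frame_budget_ms` is a hypothetical helper name, and 90 FPS is included because that figure comes up later as a VR recommendation.

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


for target in (85, 90, 120):
    # Prints 11.76, 11.11 and 8.33 ms respectively
    print(f"{target} FPS -> {frame_budget_ms(target):.2f} ms per frame")
```

Going from 85 to 120 FPS cuts the budget by roughly 3.4 ms per frame, and every millisecond saved elsewhere is time the renderer can spend on image quality.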

So you have a video game and you want to port it to a VR HMD. What do you do? First of all, you need access to the source code, including the code for the video graphics. Porting a game to an HMD isn’t a job for the end user unless the end user is a very, very talented game hacker, and a hacker that talented is probably working for a game developer and has access to the source code anyway.

A good place for an amateur or professional PC game developer to start is at the Intel VR Bootcamp website. Not surprisingly, most of the discussion there is about VR games for a Windows-based system using an Nvidia graphics processor. This isn’t much of a problem – high-end PC-based video games mostly use a platform like this (and, of course, the PlayStation and Xbox use basically the same architecture these days – Man. Ed.). This Bootcamp site also has a link to the Intel YouTube VR channel. There are 38 videos posted there, some from the recent SIGGRAPH 2017. These videos are mostly examples of what VR can do for your game, not the nuts and bolts of how to do it.

Where to Start

If you actually want to create a VR game from scratch, and your programming skills are up to it, the article “Introduction to VR,” subtitled “Creating a First-Person Player Game for the Oculus Rift” and posted online on July 18, 2017, could be the place to start. Besides all the Intel tools and your own skill set, this tutorial says you need the Unity game engine from Unity3D and an Oculus Rift HMD. It goes without saying that you also need a high-end PC with an Intel Core i7 processor coupled to a high-end graphics processor.

The author of this article, Praveen K. of Intel, says there are five easy starting steps needed to integrate your Oculus system with the Unity game engine:

- Download the Oculus utilities for Unity 5.

- Import the Unity package into your Unity project.

- Remove the Main Camera object from your scene. It’s unnecessary because the Oculus OVRPlayerController prefab already comes with a custom VR camera.

- Navigate to the Assets/OVR/Prefabs folder.

- Drag and drop the OVRPlayerController prefab into your scene. You can also work with the OVRCameraRig prefab; the original article links to a description of these prefabs and an explanation of their differences. The sample shown below was implemented using OVRPlayerController.

Simple, isn’t it? Just keep in mind the camera in step 3 is a virtual camera, not a real one. Also, remember these are the starting steps. My favorite comment embedded in the code used as an example in this article is “Teleporting is set to true when Button A is pressed on the controller.” This becomes two lines of C# code:

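```csharp
// Earliest time (in seconds) at which the action may fire again
nextfire = Time.time + fireRate;
// Cast the ray; the true argument presumably selects teleport mode
RayCastShoot(true);
```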

If only teleporting were as easy in real life as it is when you are using a VR HMD and the Unity game engine!
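The `nextfire = Time.time + fireRate` line is a common cooldown pattern: record the earliest time an action may repeat, and ignore input until then. The excerpt does not show the check that compares the current time against `nextfire`, so that part is an assumption here. Below is a minimal, engine-independent Python sketch; the `ActionCooldown` class and its method names are hypothetical, and `now` stands in for Unity’s `Time.time`.

```python
class ActionCooldown:
    """Allow an action (shooting, teleporting) at most once per `rate` seconds."""

    def __init__(self, rate: float) -> None:
        self.rate = rate
        self.next_fire = 0.0  # earliest time the action is allowed again

    def try_fire(self, now: float) -> bool:
        """Return True and restart the cooldown if the action is allowed at time `now`."""
        if now < self.next_fire:
            return False  # still cooling down: ignore the button press
        self.next_fire = now + self.rate  # same idea as nextfire = Time.time + fireRate
        return True


teleport = ActionCooldown(rate=0.5)
print(teleport.try_fire(0.0))  # True: first press
print(teleport.try_fire(0.2))  # False: only 0.2 s have passed
print(teleport.try_fire(0.6))  # True: the 0.5 s cooldown has expired
```

Storing a timestamp rather than counting down a timer every frame keeps the per-frame cost to a single comparison, which matters when the frame budget is only about 11 ms.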

Praveen emphasizes the importance of speed for the end-user code and, when discussing terrain in Unity, says, “Multiple online resources are available that explain how to create a basic terrain in Unity. … Adding lots of trees and grass details to the scene may have a performance impact, causing the frames per second (FPS) to decrease significantly. … In order to improve the VR experience in your game, a minimum of 90 FPS is recommended.” Maybe that’s why a lot of video games take place in a desert – few MIPS-robbing trees.

Creating Terrain in Unity (Credit Praveen K., Intel)

Intel is an equal opportunity supplier, and there is another article from Bob Duffy at Intel titled “Updating Older Unity Games for VR.” It discusses what is needed to get an existing game based on the Unity platform to work on the HTC Vive using the HTC controllers. He points out problems such as C# and JavaScript being fundamentally incompatible, although he gives a couple of workarounds. My favorite line from this article is the heading “Doesn’t Work – Try New Project.” This isn’t as bad as it sounds. It just means you need to create a blank new Unity project and copy everything over. Hopefully, you leave the poison pill behind.

At the end of the article, Duffy said, “In the end I found that updating this game wasn’t terribly difficult, and it allowed me to think through the interaction to be a more immersive and intuitive experience than originally designed. From the exercise alone I now have a lot more knowledge on how to tackle an original VR game.” Maybe it isn’t terribly difficult for him, but…

I consider it a red flag that you need different software to port your game to the Oculus Rift than to the HTC Vive. Your conventional PC game doesn’t need rewriting when you go from an Acer monitor to a Dell. AR/VR standards are needed here!

In an article titled “Getting a Microsoft Surface Book to work with SteamVR,” Bob Duffy discusses what happens when you don’t have a fast enough computer and try to use something like the Microsoft Surface Book. After showing how to get SteamVR running on the Surface Book, he says, “Hopefully this was helpful. Your Surface Book is clearly not on the most rocking system to run VR, however for some applications like TiltBrush it’s a good experience and your Surface Book can get you started with VR… that is until you get access to a higher performance [platform]. Trust me, you’re going to want to do that 10 minutes into this.” He says that if you really need an extreme system for a compelling demo at an event like SIGGRAPH, you can just rent one from the Intel Demo Depot.

3D Image created on a Microsoft Surface Book using SteamVR’s TiltBrush. (Credit: Bob Duffy of Intel)

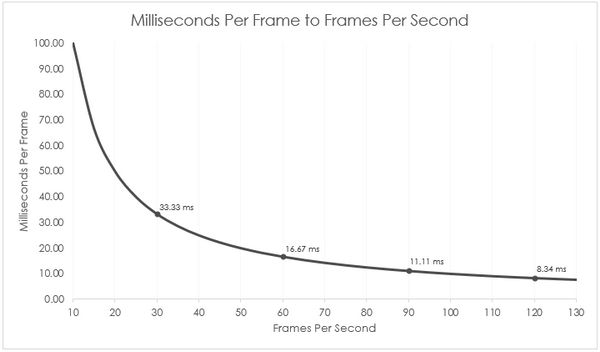

Intel, of course, isn’t dedicated to just the Unity platform. There is a series of three articles by Errin M. and Jeff Rous on how to write a game for the Unreal Engine 4 (UE4) from Epic Games. These articles emphasized the need to optimize your game to minimize the time needed to generate each frame. The authors even gave a graph of ms/frame vs. FPS, as if game developers couldn’t do arithmetic. More importantly, they gave directions on how to use the UE4 tools that let you monitor the ms/frame metric to see the effect of the game optimizations you code.
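The arithmetic behind that graph is just ms/frame = 1000 / FPS. What the profiling tools add is a look at individual frames, because an average frame rate can hide occasional slow frames that a player wearing an HMD will notice. The sketch below is not the UE4 profiler; it is a hypothetical Python helper, `summarize_frame_times`, that summarizes a list of measured frame times (the sample values are made up for illustration) against the 90 FPS VR minimum quoted earlier.

```python
from statistics import mean


def summarize_frame_times(frame_times_ms: list[float], target_fps: float = 90.0) -> dict[str, float]:
    """Summarize measured frame times against the budget for target_fps.

    frame_times_ms: time taken by each rendered frame, in milliseconds.
    """
    budget_ms = 1000.0 / target_fps
    avg_ms = mean(frame_times_ms)
    return {
        "avg_ms": avg_ms,
        "avg_fps": 1000.0 / avg_ms,       # FPS implied by the average frame time
        "worst_ms": max(frame_times_ms),  # the slowest single frame
        "frames_over_budget": sum(1 for t in frame_times_ms if t > budget_ms),
    }


# Illustrative (made-up) timings; a real run would use the engine's per-frame stats.
stats = summarize_frame_times([10.0, 12.0, 11.0, 15.0])
print(stats)
```

For the sample input, the average is 12.0 ms (about 83 FPS), and two of the four frames exceed the 11.1 ms budget for 90 FPS.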

(Credit: Errin M. and Jeff Rous, Intel)

In the video on the Bootcamp website titled “Bit Rates, Frame Rates, and Processors,” Anne Munition discusses the trade-offs between speed and image quality, especially if you want to stream the images from your video game. She favors the Twitch streaming service, which has a limit of 3500 kb/s. With this limit you can’t have it all – there will be compromises in image quality. She prefers streaming at 720p60 (1280 x 720, 60 FPS) rather than 1080p30 (1920 x 1080, 30 FPS) because the very poor motion rendition at 30 FPS will bother people watching streamed video games far more than the reduced resolution of 720p60.
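The trade-off can be checked with a little arithmetic. Assuming the 3500 limit is in kilobits per second, the sketch below (a hypothetical Python helper, `stream_bit_budget`) computes how many bits the encoder gets per frame and per pixel in each format.

```python
def stream_bit_budget(bitrate_kbps: float, width: int, height: int, fps: int) -> tuple[float, float]:
    """Return (kilobits per frame, bits per pixel) for a stream at a fixed bitrate."""
    kbits_per_frame = bitrate_kbps / fps
    bits_per_pixel = (bitrate_kbps * 1000) / (width * height * fps)
    return kbits_per_frame, bits_per_pixel


for label, w, h, fps in (("720p60", 1280, 720, 60), ("1080p30", 1920, 1080, 30)):
    per_frame, bpp = stream_bit_budget(3500, w, h, fps)
    # 720p60: 58.3 kb/frame, 0.063 bits/pixel; 1080p30: 116.7 kb/frame, 0.056 bits/pixel
    print(f"{label}: {per_frame:.1f} kb/frame, {bpp:.3f} bits/pixel")
```

The result is somewhat counterintuitive: 720p60 pushes fewer pixels per second than 1080p30 (about 55.3 million versus 62.2 million), so at the same bitrate it actually gets slightly more bits per pixel, on top of the smoother motion Munition is after.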

All the articles I’ve discussed so far are considered basic tutorials; I haven’t gotten into the advanced topics. In one of the advanced articles, “Combating VR Sickness with User Experience Design,” Matt Rebong from Well Told Entertainment says “Nausea, dubbed in the community as ‘VR sickness,’ (VRS) is widely considered the biggest hurdle for VR game developers to date and for good reason: it is one of the largest barriers to entry for new adopters of the technology.” He goes on to discuss strategies his company has used to reduce VRS.

Do display people really need to know about the software behind the games to be shown on their VR HMDs? I think it’s important to realize just how hard the software people are striving for high image quality, including both resolution and frame rate, in their video games. They aren’t just striving – they are achieving it. Frankly, the HMDs I’ve used simply haven’t been up to the quality of the gaming content available in terms of either resolution or frame rate. Also, VRS is not only a software problem – I have heard that better image quality and, especially, faster response times in the HMD can reduce VRS as well. Think of this article as a call to arms for improved image quality on HMDs. –Matthew Brennesholtz