A couple of weeks ago, I was invited to (yet another) webinar, this time for an HP virtual reality product launch. I really wasn’t looking forward to it. It seemed like just another Windows VR headset, but it turned out to be significantly more than that.

So, let’s get the basics out of the way.

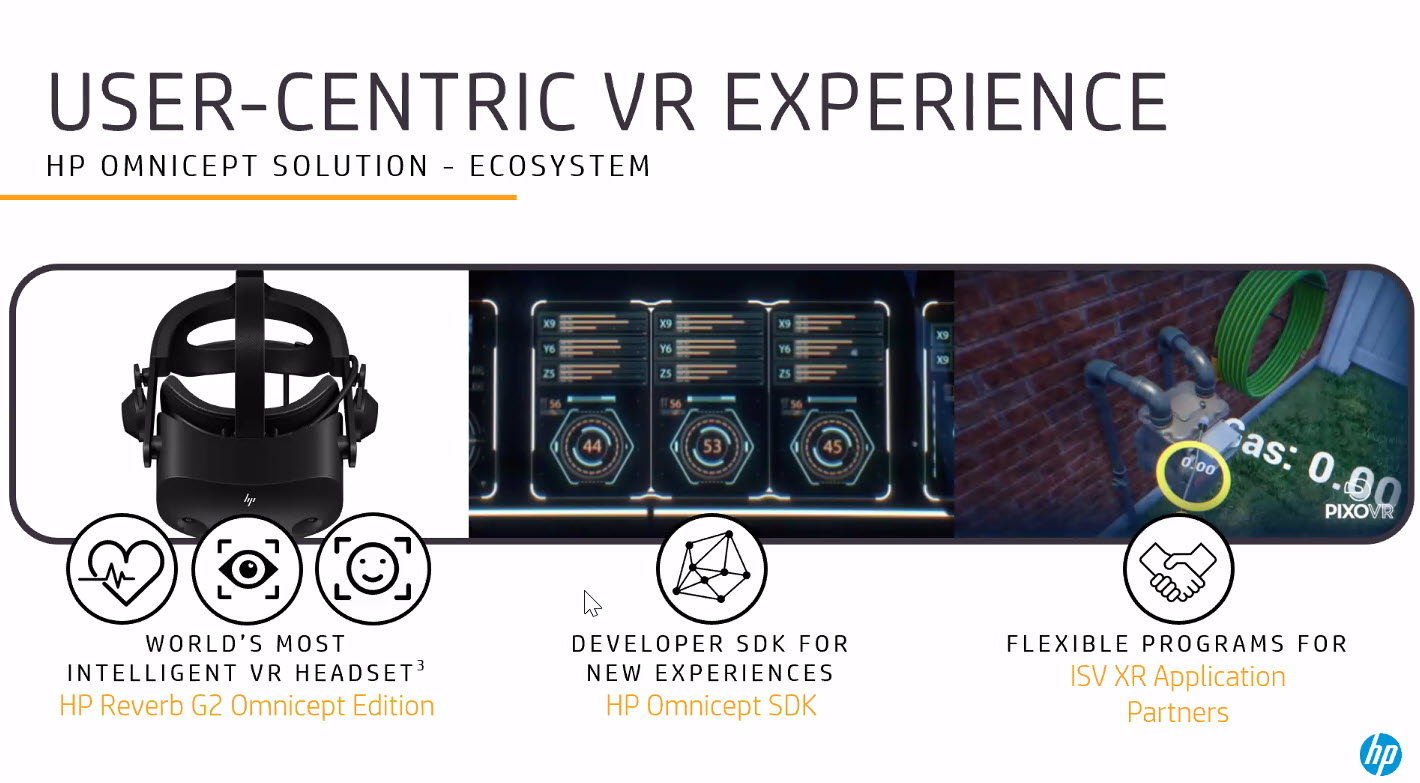

The Reverb G2 is a new headset for VR or XR. Its new displays have a resolution of 2160 x 2160 per eye, so I’d love to try them to see whether the ‘screen door’ effect is really gone. At that resolution, it might be close. The headset will be sold for gaming and other consumer applications and will start shipping in November. However, the webinar was about a new B2B offering, which includes a new platform (Omnicept) for analysis and development.

Sensors Everywhere

Without a chance to try the new headset, it’s hard to know whether the display is compelling. What grabbed my attention at the event was the proliferation of sensors in the headset. I have long contended that one of the (several) critical factors in Apple’s creation of the smartphone market with the iPhone was the integration of a wide range of sensors. In my opinion, working out how to include and integrate really useful sensors remains one of the key factors in developing and growing the market for IT displays. Anyway, back to HP.

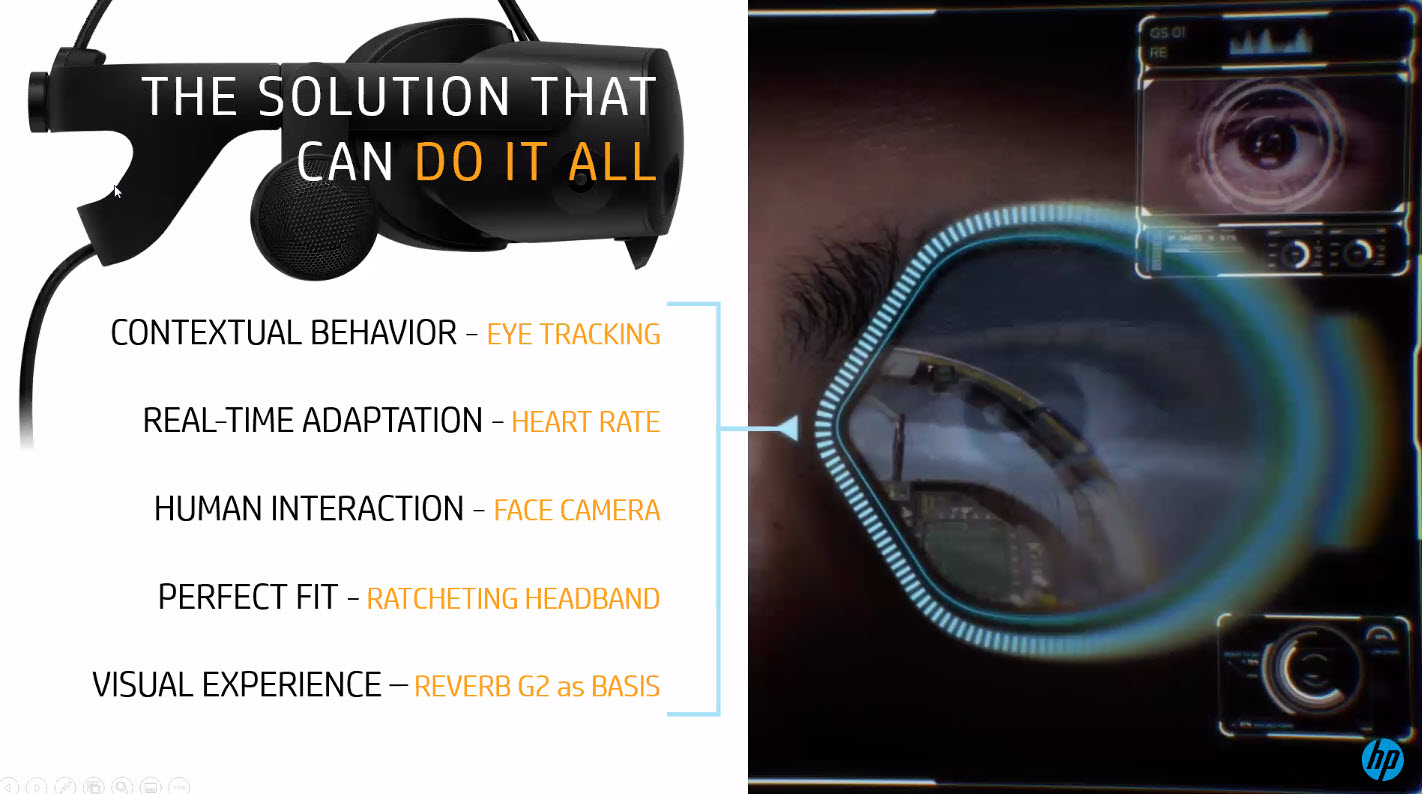

Another of my favourite topics is the importance of gaze recognition in IT. HP has added eye tracking to the Reverb G2 to enable features such as foveated rendering. Foveated rendering uses eye tracking to concentrate image quality where the user is looking, making better use of computing and graphics resources. The technology is supplied by leading vendor Tobii, and Johan Bouvin from the firm spoke during the event.
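In general terms, foveated rendering means deciding how much rendering effort each part of the image gets, based on its angular distance from the gaze point. The Python sketch below illustrates only that idea. It is not HP’s or Tobii’s implementation. The panel size, tile size, pixels-per-degree figure and the 5° and 20° thresholds are all hypothetical values chosen for illustration.

```python
import math

def tile_scale(ecc_deg, inner_deg=5.0, outer_deg=20.0):
    """Render-resolution scale (per axis) for a tile at a given eccentricity.

    Hypothetical thresholds: full resolution near the gaze point,
    half resolution in a middle band, quarter resolution in the periphery.
    """
    if ecc_deg <= inner_deg:
        return 1.0
    if ecc_deg <= outer_deg:
        return 0.5
    return 0.25

def foveation_map(gaze_x, gaze_y, width, height, tile, px_per_deg):
    """Return a grid (list of rows) of render scales, one entry per tile.

    Eccentricity is approximated as the pixel distance from the gaze point
    divided by a constant pixels-per-degree figure. This is a simplification,
    since real headset optics are not linear.
    """
    grid = []
    for ty in range(0, height, tile):
        row = []
        for tx in range(0, width, tile):
            cx, cy = tx + tile / 2, ty + tile / 2  # tile centre in pixels
            ecc = math.hypot(cx - gaze_x, cy - gaze_y) / px_per_deg
            row.append(tile_scale(ecc))
        grid.append(row)
    return grid

def shaded_fraction(grid):
    """Fraction of full-resolution pixels actually shaded.

    The scale applies to both axes, so a tile's pixel cost is scale squared.
    """
    tiles = [s for row in grid for s in row]
    return sum(s * s for s in tiles) / len(tiles)

if __name__ == "__main__":
    # Hypothetical 2048 x 2048 panel, 256-pixel tiles and 20 pixels per degree,
    # with the user looking at the centre of the tile in row 4, column 4.
    grid = foveation_map(1152, 1152, 2048, 2048, 256, 20.0)
    for row in grid:
        print(" ".join(f"{s:.2f}" for s in row))
    print(f"shaded fraction: {shaded_fraction(grid):.3f}")
```

With these made-up numbers, only the tile under the gaze point is rendered at full resolution, and roughly a tenth of the pixels are shaded. Real savings depend on the headset optics, the eye tracker’s accuracy and latency, and how aggressively the periphery is reduced.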

One of HP’s key B2B application markets is training. Eye-tracking data can really help trainers see how subjects are responding to virtual situations. As well as helping to optimise the training process, the information can be used to better define systems and operations, so that critical information is clear and available.

More Than Just Gaze

Tobii has added a set of pupillometry sensors to the basic gaze tracking, so that the system can monitor the dilation of the user’s pupils. As Wikipedia puts it:

“Pupil diameter also increases in reaction to cognitive tasks requiring memory and attention, and this phenomenon is used as an indicator of mental activation (‘arousal’) in psychophysiological experiments.”

There is also an integrated heart rate monitor, which can help show how the user reacts to different stimuli.

The combination of eye tracking, heart rate measurement (HRM) and pupil size changes allows the creation of a ‘cognitive load’ number. This can be used to see how users perform under stress and to detect when they might be overloaded. In simulations and safety training, knowing when a user might suffer cognitive overload could transform procedures and operations.

That’s the first stage. As well as tracking gaze and pupils, HP has added a facial camera. In future, it will be combined with the other sensors to develop a system for reporting emotion as well as cognitive load.

HP wants developers to build apps for the platform. To encourage them, it is making the platform free for education and charging a 2% licence fee on applications developed for it. Enterprise customers wanting to develop in-house applications can purchase the platform.

A number of training applications were shown, but we were especially impressed with a demo of Ovation VR, an app for presentation training. Someone rehearsing a speech can set up a surprisingly realistic audience and auditorium, complete with virtual AV including apparent projection of PPT files. The system then monitors the speech, checking, for example, whether the speaker is engaging properly with the audience or spending too much time looking at the screen. It also uses speech recognition to give real-time feedback on speaking style. The session is recorded and can be replayed, so that presenters can see how they look to an audience.

Jeff Marshall of Ovation described features such as live warnings about ‘filler words’ (the ‘ums’ and ‘likes’ that we often hear). His own talk was exceptional for not including any of those fillers. Impressive!

The application certainly looks compelling. If I had to deliver an important talk (although those opportunities are rare at the moment), I would be very tempted to train using the software.

All in all, I was very impressed with what HP is doing and has done to move XR beyond just seeing a 3D world. I just wish I had a chance to try the headset and see if the hardware matches the promise! (BR)