There were also four speakers in the Display & Mastering Tools section of the Advanced Display Summit. Mostly, the speakers discussed the tools their companies can provide to the production and post-production industry in support of advanced displays such as 8K, WCG, HDR, etc.

The first speaker in this group was Florian Friedrich from FF Pictures, who talked about HDR under control: Tools for signal analysis, quality control and dynamic metadata. Most of his talk and his demonstration during the ADS focused on his HDRmaster Toolset software, which allows post-production houses to produce high-quality HDR content and check both the visual and digital quality of the HDR distribution package.

This package is both a stand-alone quality checker for the finished product and a plug-in for both Resolve and Premiere. Currently, his software runs on Windows-based systems only, but he said development of a Mac version is the next step. His HDRmaster QC (quality check) software currently evaluates content encoded as HEVC, TIFF, YUV RAW and MP4 files, with more coverage coming soon. One thing he emphasized was the importance of re-importing the finished output file into the QC software. He said that files that looked fine in the uncompressed format often have problems after the very high compression of modern encoders such as HEVC. These problems can be subtle, however, and merely looking at the content on a consumer TV will not necessarily reveal them.

In PQ-based HDR systems, everyone talks about metadata. For HDR10, this is static for a complete piece of content; for HDR10+, it can vary on a scene-by-scene basis; and for Dolby Vision, it can vary on a frame-by-frame basis. The HDRmaster Toolset has the software tools needed to evaluate the images and produce the metadata these HDR encoding schemes require. The Toolset also provides HDR10+ disc authoring tools that work with regular disc authoring software such as Indigo Ultra.
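The difference between static and per-scene metadata can be illustrated with a minimal Python sketch. It assumes frames are already decoded to linear RGB in nits and computes the two content light level values commonly carried with HDR10 (MaxCLL and MaxFALL), followed by a simple per-scene peak. The function names, the frame representation and the per-scene peak are illustrative assumptions; the real HDR10+ (ST 2094-40) payload carries more than a single peak value, and this is not how the HDRmaster Toolset is implemented.

```python
from typing import Dict, List, Sequence, Tuple

Pixel = Tuple[float, float, float]   # linear R, G, B in nits (assumed input format)
Frame = Sequence[Pixel]


def frame_stats(frame: Frame) -> Tuple[float, float]:
    """Return (peak, average) light level of one frame.

    The light level of a pixel is taken as max(R, G, B).
    """
    levels = [max(pixel) for pixel in frame]
    return max(levels), sum(levels) / len(levels)


def static_metadata(frames: Sequence[Frame]) -> Dict[str, float]:
    """One MaxCLL / MaxFALL pair for the whole piece of content (static)."""
    stats = [frame_stats(f) for f in frames]
    return {
        "max_cll": max(peak for peak, _ in stats),    # brightest pixel anywhere
        "max_fall": max(avg for _, avg in stats),     # brightest frame average
    }


def per_scene_peaks(frames: Sequence[Frame], scene_starts: Sequence[int]) -> List[float]:
    """One peak value per scene, a simplified stand-in for dynamic metadata.

    scene_starts holds the index of the first frame of each scene (hypothetical
    input; a real tool would detect or import the scene cuts).
    """
    bounds = list(scene_starts) + [len(frames)]
    return [
        max(frame_stats(f)[0] for f in frames[start:end])
        for start, end in zip(bounds, bounds[1:])
    ]


if __name__ == "__main__":
    clip = [
        [(100.0, 50.0, 20.0), (10.0, 10.0, 10.0)],   # scene 1, frame 0
        [(1000.0, 200.0, 0.0), (0.0, 0.0, 0.0)],     # scene 1, frame 1
        [(40.0, 40.0, 40.0), (60.0, 20.0, 20.0)],    # scene 2, frame 2
    ]
    print(static_metadata(clip))
    print(per_scene_peaks(clip, scene_starts=[0, 2]))
```

With static metadata, the dim second scene is described by the same 1,000-nit peak as the bright first scene; with per-scene values, a display can tone map each scene on its own terms.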

The second speaker on this topic was Bill Mandel of Samsung Research America, whose talk was titled HDR10+ Technology. In his talk, he discussed not only the technology of HDR10+ but also the recently announced HDR10+ Technologies, LLC and its profile, logo and licensing program. This LLC was founded recently by Twentieth Century Fox, Panasonic and Samsung, although the three companies have been working in a partnership to advance HDR10+ since at least August 2017.

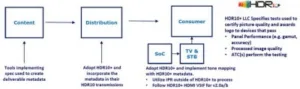

Overview of HDR10+ Technologies LLC management of HDR10+ (Source: Samsung at ADS)

SMPTE ST 2094-40 is the overall technical standard guiding HDR10+, and Samsung uses the generic term “2094-40” within the large number of standards groups involved in HDR10+ standards. These groups include the ATSC, CCTV, MPEG, SMPTE, IEEE, CTA, etc. (Note: While HDR10+ from Samsung and others is ST 2094-40, there are several other flavors of ST 2094 defining different versions of PQ-EOTF HDR. 2094-10 is from Dolby, 2094-20 is from Philips and 2094-30 is from Technicolor. A generic reference to ST 2094 is not enough; you must reference the version you are talking about. MSB) And don’t forget SMPTE 2086: according to Mandel, ST 2094-40 “provides better qualified metadata than simply SMPTE 2086.”

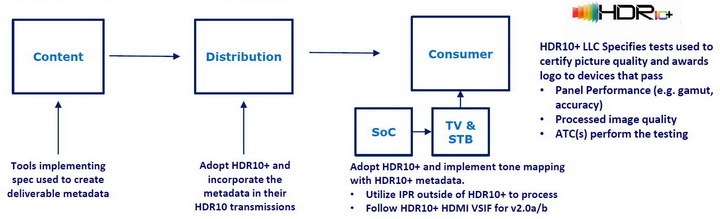

Steps in the generation of HDR10+ Metadata and embedding it in H.265 (HEVC) output. (Source: Samsung at ADS)

Mandel discussed the workflows for embedding metadata in an HEVC output stream. The basic workflow is shown in the figure, but there are others. He then reviewed hardware and software shown at NAB 2018 to demonstrate that there is a complete HDR10+ ecosystem for anyone interested in creating HDR content. These tools included ones from Colorfront (who also spoke at the ADS), Scenarist and Blackmagic Design.

Going slightly out of order, Bill Feightner from Colorfront was the last speaker on the topic of HDR and he spoke about Nits to Nits – Supporting multiple display and surround brightness. He addressed the display side of high dynamic range, not the “equally important capture side” addressed by Florian Friedrich from FF Pictures and Bill Mandel of Samsung.

He said that, historically, motion entertainment such as live theater had always been “high dynamic range” until Edison and others introduced motion picture film in the late 19th century. Television, including HDTV, was also standard dynamic range. Extended dynamic range, as introduced by Dolby Cinema and others, is now beginning to penetrate the display market. One of the issues, he said, is that there are no standard definitions of standard dynamic range (SDR), extended dynamic range (EDR) and high dynamic range (HDR).

Part of the problem in defining these properties is that it is not just the display that creates SDR, EDR or HDR images for the viewer. The content is important, as is the environment surrounding the display. An HDR display with native HDR content looks very different in a dark room compared to its appearance in a room with more normal ambient light. The Colorfront Engine is designed to optimize the appearance of the content to match, as closely as possible, the creative intentions of the content creator.

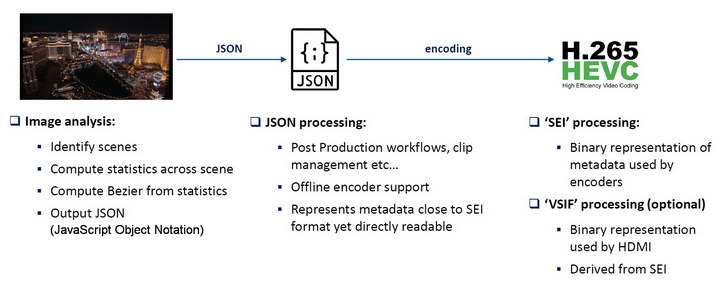

The Colorfront Engine can adjust content to match viewing conditions. (Source: Colorfront at ADS)

The Colorfront Engine is used by the content creator and not by the display user. Content creators generate not one version of a piece of content but many versions, sometimes hundreds, intended for viewing on various display types under varying circumstances. A post-production house will take in either scene-referred or display-referred content from the camera or other acquisition source. Cinema content is generally display-referred, meaning that each pixel in the content should be shown at a specific display brightness in nits. Traditional cinema content and all modern HDR systems that use the PQ EOTF are display-referred. Scene-referred systems are generally TV systems, where pixels in the content are intended to be shown at a percentage of the peak brightness of the display. Traditional gamma-encoded TV content and HLG HDR content are scene-referred.

One problem with display-referred content is that the target display rarely has the same brightness as the display used to create the content. This is where the metadata in Dolby Vision, HDR10+ and HDR10 systems comes in useful: it allows the processor at the display to re-optimize the content to match the display. Metadata isn’t needed in scene-referred HDR systems like HLG.
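The distinction between a signal that encodes absolute brightness in nits (PQ) and one that encodes a percentage of the display's peak can be sketched in a few lines of Python. The PQ functions use the constants published in SMPTE ST 2084; the relative decode is a deliberately simplified power-law stand-in (assumed gamma of 2.4), not the actual BT.1886 or HLG math, and the function names are illustrative.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PQ_PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Decode a normalized PQ signal (0..1) to absolute luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return PQ_PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Encode absolute luminance in nits as a normalized PQ signal (0..1)."""
    y = (min(max(nits, 0.0), PQ_PEAK_NITS) / PQ_PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def relative_gamma_decode(signal: float, display_peak_nits: float,
                          gamma: float = 2.4) -> float:
    """Decode a relative signal: the result scales with the display's peak.

    Simplified power-law model used only to illustrate the idea.
    """
    return display_peak_nits * min(max(signal, 0.0), 1.0) ** gamma


if __name__ == "__main__":
    code = pq_inverse_eotf(100.0)
    # The PQ value asks for 100 nits regardless of the display it lands on.
    print(f"PQ code for 100 nits: {code:.3f}, decoded: {pq_eotf(code):.1f} nits")
    # The relative value lands at a different brightness on each display.
    for peak in (100.0, 1000.0):
        print(f"relative 0.5 on a {peak:.0f}-nit display: "
              f"{relative_gamma_decode(0.5, peak):.1f} nits")
```

Because a PQ code value requests a fixed number of nits, a display that cannot reach that level has to tone map, which is the job the metadata supports. A relative signal simply scales with whatever peak the display offers.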

In any event, this content makes its way through the post-production editing process and a master is created. In the past, it has been necessary to manually adjust the content for each target system such as cinema, HDR TV, SDR TV, or mobile devices. The Colorfront Engine, however, uses a perceptual transform engine to produce the various versions of the content to be shown on the display types listed on the right of the figure above.

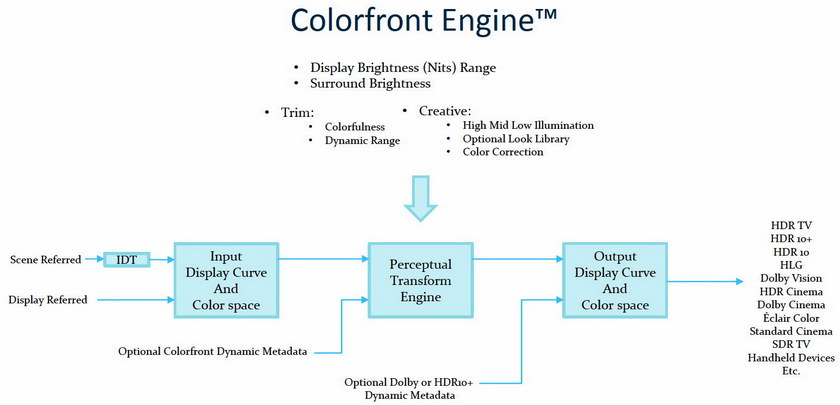

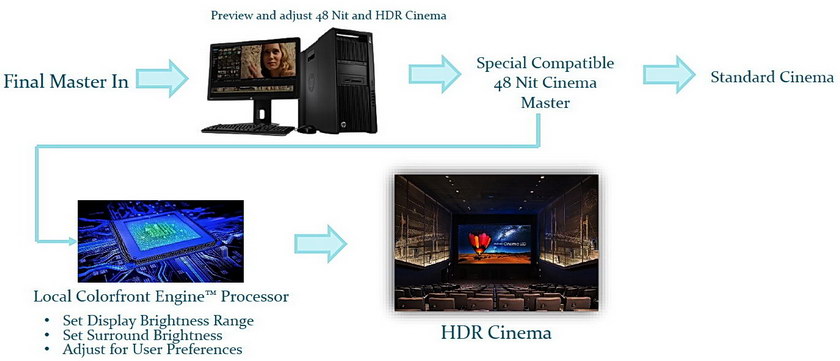

The Colorfront Engine can be used locally to deliver EDR and HDR within standard cinema compatible masters. (Credit: Colorfront at ADS)

In a few cases, the Colorfront Engine can also be used locally. Feightner said that HDR data can be embedded in an SDR cinema master. The content can then be shown on a normal SDR digital cinema projector. For an HDR or EDR cinema system, the local Colorfront Engine can use the metadata to adjust the content for the HDR or EDR display. This is essentially identical to what the processor does with the metadata in a Dolby Vision, HDR10+ or HDR10 TV system.

Tyler Pruitt from Portrait Displays and CalMAN presented a talk titled Monitoring Challenges Today. He talked mostly about two issues: color problems with the color mastering monitors used in the post-production process and the problems with “HDR cinema,” such as Dolby Cinema, which he says are really EDR solutions.

According to Pruitt, there are currently three “Hero Monitors” on the market that can be used for color grading of HDR content. These are the:

- Dolby Pulsar – LCD RGB LED (4,000 cd/m²)

- Sony BVM-X300 – RGB OLED (1,000 cd/m²)

- Canon DP-V2421 – LCD RGB LED (1,000 cd/m²)

Three other monitors he calls the “new kids on the block” in the color mastering space:

- Eizo CG3145 – LCD (1,000 cd/m²) (no SDI inputs)

- Flanders Scientific XM310K – LCD LED (3,000 cd/m²)

- TVLogic LUM-310R – LCD LED (2,000 cd/m²)

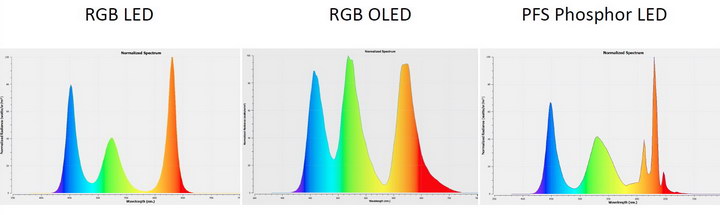

Spectral Energy Density curves for three display technologies. (Credit: Portrait Displays and CalMAN at ADS)

Unfortunately, these monitors plus the larger monitors used for client viewing in post-production (often high-end TV sets) all have different spectral energy density (SED) curves. This can lead to metameric failure of two sorts.

First, when two displays with different SEDs are set up to the same CIE 1931 white color, they can look distinctly different from each other. This issue can be corrected with improved display calibration, as discussed recently at SID. The second problem has no short-term solution. Even when two displays with different SEDs are set up so that their white points match for most people, they can still look different from each other to other people.
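The second kind of failure follows from simple linear algebra: an observer reduces a whole spectrum to three numbers, so two different spectra can produce the same three numbers for one observer and different numbers for another. The toy Python sketch below uses four made-up wavelength bands and invented sensitivity values purely for illustration; real work uses measured display spectra and the CIE color matching functions, and none of these numbers come from Pruitt's talk.

```python
from typing import List, Sequence


def respond(sensitivities: Sequence[Sequence[float]],
            spectrum: Sequence[float]) -> List[float]:
    """Reduce a spectrum to three responses: one weighted sum per channel."""
    return [sum(w * p for w, p in zip(row, spectrum)) for row in sensitivities]


def matches(a: Sequence[float], b: Sequence[float], tol: float = 1e-9) -> bool:
    """True if two response triplets are equal within a small tolerance."""
    return all(abs(x - y) <= tol for x, y in zip(a, b))


# Hypothetical observers: three channels over four wavelength bands.
OBSERVER_A = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]
# Observer B differs slightly from A in how the fourth band is weighted.
OBSERVER_B = [
    [1.0, 0.0, 0.0, 0.8],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 1.2],
]

# Hypothetical "white" spectra of two displays with different emitters.
DISPLAY_1_WHITE = [2.0, 2.0, 2.0, 1.0]
DISPLAY_2_WHITE = [1.0, 1.0, 1.0, 2.0]

if __name__ == "__main__":
    for name, observer in (("A", OBSERVER_A), ("B", OBSERVER_B)):
        r1 = respond(observer, DISPLAY_1_WHITE)
        r2 = respond(observer, DISPLAY_2_WHITE)
        verdict = "match" if matches(r1, r2) else "differ"
        print(f"Observer {name}: {r1} vs {r2} -> {verdict}")
```

In this toy setup the two whites are a match for observer A but not for observer B, and no calibration of the two displays can make both observers agree at once.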

This can cause problems, for example, when the colorist and the director disagree on whether two monitors are set up to look the same. It is perhaps even worse when the colorist and the client disagree on monitor setup. Pruitt says it would be a display utopia if color mastering monitors could be made large enough and cheap enough to be used as client monitors as well but, unfortunately, that is not the case now.

Current EDR Cinema Solutions. (Credit: Portrait Displays and CalMAN at ADS)

Pruitt says that the current high-end cinema solutions are really EDR, not HDR, because they simply don’t have the peak brightness and the contrast required for a true HDR solution. As I mentioned before, since there are no formal definitions of EDR and HDR, I won’t argue with either Pruitt or with Dolby, Éclair or IMAX. – Matthew Brennesholtz