The Consumer Technology Association (CTA) recently introduced a new standard, CTA-2069, Definitions and Characteristics of Augmented and Virtual Reality Technologies. This standard can be downloaded for free from the CTA Website, although registration with the CTA is required. The standard was developed by the CTA’s Portable Handheld and In-Vehicle Electronics Committee.

This very short standard (10 pages total, with 2 pages of actual content) defines 11 terms commonly used by the AR/VR community and gives the commonly used acronyms. This vocabulary is:

- 360 spatial audio (360 SA)

- Augmented reality (AR)

- Mixed reality (MR)

- Outside-in-tracking

- Room-scale VR

- Six degrees-of-freedom (6DoF)

- Spherical video (AKA 360° Video/360 Video)

- Three degrees-of-freedom (3DoF)

- Virtual reality (VR)

- VR video and VR images

- X Reality (XR)

I have a number of problems with this standard, not the least of which is that it has no external references. These definitions were produced purely by the CTA and have not been cross-referenced with other VR/AR/MR lexicons. If the CTA did cross-check its definitions, it didn’t include the documentation in the standard. I’ve looked at a couple of other CTA standards and they were all full of references to other standards and guidelines. Most SMPTE, ISO, etc. standards are so complex that a little guidance is very useful when you are trying to apply them to a specific application such as consumer electronics.

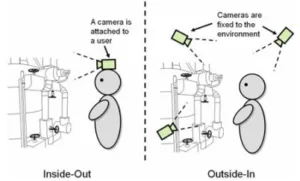

Inside-out vs. outside-in tracking in a VR system (Source: xinreality.com/wiki from Ishii, 2010)

Another one of my problems with this standard is that it is extremely limited. I wonder if the CTA really believes that if you understand 11 words and phrases, you can understand VR, AR and MR? If you are going to be working in the VR/AR/MR/XR community, or even managing people working on these projects, these 11 terms simply aren’t enough. For example, while the CTA defines outside-in-tracking for VR systems, they don’t define the alternative, not surprisingly called inside-out-tracking. (FYI – for outside-in-tracking, the sensors, normally cameras, are mounted in fixed locations and are looking at fiducial marks on the HMD and other objects to be tracked in the VR space, while inside-out-tracking uses cameras or other sensors mounted on the HMD and other objects such as the VR controller, with the cameras looking out at fiducial marks in the VR space.)

Another problem I have with this standard is that it defines words using other words a reader is not likely to know. For example, if someone needs a definition of “360 Spatial Audio,” what is the likelihood that they will understand the word “ambisonics”? Similarly, in the definition of “Room-scale VR” the CTA uses the phrase “Spherical Video tracking.” Is this the same as “outside-in-tracking”?

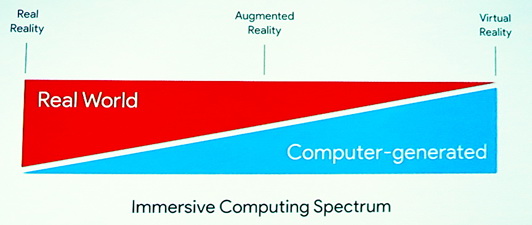

The real reality to virtual reality spectrum covers all of X Reality. (Source: Clay Bavor, Google)

One thing I like about this CTA vocabulary is the way the definitions of “Augmented Reality” and “Mixed Reality” have been expressed. Sometimes these two concepts merge in confusion and have no clear distinction between them. This is not unreasonable because the concept of XR is actually a continuous spectrum.

At SID 2017, Clay Bavor, VP Virtual and Augmented Reality at Google, discussed this continuum in his keynote speech “Enabling Rich and Immersive Experiences in Virtual and Augmented Reality.” (KN2 Google Makes AR/VR Display Breakthroughs – subscription required) Perhaps Bavor oversimplified the continuum from real reality to virtual reality. Both AR and MR are near the center of the range, with viewers seeing both real and virtual objects. In AR, the virtual objects are intended to be seen as clearly virtual.

Perhaps the most common AR objects seen today are wayfinding instructions overlaid on the real image as shown on an AR display system. “Follow the green arrow” sort of things, with a green arrow overlaying the real world, as shown in the image. In this case the system is not a see-through HMD but an iPad with the real surroundings taken live from the camera.

Mixed reality is a system where virtual objects are overlaid on the real world and are expected to look as though they may actually be real objects in the real world. In the second image, a Pokémon Go creature is superimposed on the image of the real world and game-players are expected to pretend to believe it actually is a real creature. Of course, it is possible to mix MR and AR content and this image has wayfinding information on how to jump over the creature. Clearly, hard and fast definitions of VR, AR and MR are not practical but the ones from the CTA give a good indication of the general range of the three terms.

Augmented Reality wayfinding image shown on an iPad (Credit: Pointr Labs)

Mixed Reality Pokémon Go image, with AR wayfinding added to MR image. (Credit: Anadolu Agency)

There is only one place in the CTA-2069 standard where I don’t agree with the CTA. They say “VR Video and VR Images are still or moving imagery specially formatted as separate left and right eye images usually intended for display in a VR headset.” This implies that all VR is 3D with separate left and right images. It is not: VR imagery often has only a single image, designed to be shown to both the left and right eye.

The Bottom Line

My bottom-line objection to CTA-2069 is that it is just not needed. The Virtual Reality Industry Forum (VRIF) has issued its own lexicon of VR/AR terminology that is also free to download, without the CTA hassle of registering. Instead of defining 11 words and phrases, the VRIF Lexicon defines 154. The Lexicon assigns a category to every word or phrase it defines. The 11 categories it uses are: concept, audio, camera, display, interaction, metric, physiology, sensor, software, technology and video. It also says where in the workflow a term is normally used: capture, produce, encode, distribute, decode, render, display, interact and experience. Note this “workflow” goes far beyond what SMPTE would normally consider to be workflow, which would include mostly capture and produce and maybe encode. Certainly end-user items such as display, interact and experience are not included in the SMPTE concept of workflow.

A few of the 154 words on the VRIF list have no definition associated with them. For example, Cinematic VR is categorized as a concept in the produce part of the workflow, but no definition is given. Well, I wouldn’t want to try to define Cinematic VR, either.
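For readers who think in data structures, the Lexicon's two-axis tagging (one category per term, plus the workflow stages where the term is used) can be sketched roughly as follows. This is only an illustration under my own assumptions: the class and function names are hypothetical and are not taken from the VRIF document, and the second entry is a made-up placeholder rather than a real Lexicon entry. Only the category names, the workflow stage names and the Cinematic VR tagging come from the Lexicon as described above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Category(Enum):
    """The 11 categories the VRIF Lexicon assigns to terms."""
    CONCEPT = "concept"
    AUDIO = "audio"
    CAMERA = "camera"
    DISPLAY = "display"
    INTERACTION = "interaction"
    METRIC = "metric"
    PHYSIOLOGY = "physiology"
    SENSOR = "sensor"
    SOFTWARE = "software"
    TECHNOLOGY = "technology"
    VIDEO = "video"


class Stage(Enum):
    """The 9 workflow stages the Lexicon uses."""
    CAPTURE = "capture"
    PRODUCE = "produce"
    ENCODE = "encode"
    DISTRIBUTE = "distribute"
    DECODE = "decode"
    RENDER = "render"
    DISPLAY = "display"
    INTERACT = "interact"
    EXPERIENCE = "experience"


@dataclass(frozen=True)
class LexiconEntry:
    """Hypothetical model of one lexicon entry (names are my own)."""
    term: str
    category: Category
    stages: Tuple[Stage, ...]
    definition: Optional[str] = None  # a few entries have no definition


def undefined_terms(entries: List[LexiconEntry]) -> List[str]:
    """Return the terms that are categorized but carry no definition."""
    return [e.term for e in entries if e.definition is None]


entries = [
    # As described above: a concept in the produce stage, no definition.
    LexiconEntry("Cinematic VR", Category.CONCEPT, (Stage.PRODUCE,)),
    # Made-up placeholder entry, NOT from the Lexicon.
    LexiconEntry(
        "Example term",
        Category.SENSOR,
        (Stage.CAPTURE, Stage.INTERACT),
        "Placeholder definition for illustration only.",
    ),
]

print(undefined_terms(entries))
```

A structure along these lines would make it easy to list the categorized-but-undefined terms, or to filter the vocabulary by workflow stage.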

Another source of AR, VR and MR word definitions is the XinReality Wiki. On their Terms page they define 114 different words and phrases. These don’t have a one-to-one overlap with the VRIF Lexicon, and they include some terms that are not in either the VRIF Lexicon or CTA-2069. For example, if you need to know the definition of outside-in-tracking, defined by both the VRIF and CTA-2069, you probably also need to know the meaning of markerless outside-in-tracking. One of the problems of a Wiki like this, however, is that it can’t be treated as authoritative. It just doesn’t have the review process that either the VRIF or the CTA has, so it’s necessary to double-check any definition from the Wiki before using it.

Since VR, AR and MR are new technologies with new vocabularies, I’d like to see a merged, central, free dictionary of all words and phrases associated with AR, VR and MR. This could put everyone in the industry on the same page, at least in terms of vocabulary. Perhaps the VRIF is working on this, since their available lexicon is version 1.0 and was issued 1 July 2017. A year old already! And a year is practically forever in the fast-moving AR/VR/MR community. –Matthew Brennesholtz