Everyone has seen one of those Apple iPhone ads, “Shot on iPhone 7.” (Or iPhone 6, 7+, 8 or 8+.) Gorgeous pictures shot by professional photographers blown up to billboard size or perhaps printed in a glossy magazine. Beautiful pictures shot on the same camera millions of people carry in their pockets.

Maybe you can do as well, if only you buy an iPhone? I’ve also seen equally gorgeous photos shot by equally professional photographers on Samsung phones.

On the night of November 5, 2016, professional photographers around the world shot photos with the iPhone 7 to emphasize the camera’s low light capability. This photo was by Arif Jawad. (Credit: Apple)

But what do these professional photographers use when they are getting paid by someone other than Apple or Samsung? Not smartphone cameras but (relatively) large professional cameras from Canon, Nikon or one of the other big camera companies. Plus, they carry at least one camera bag with extra lenses, filters, batteries, etc. Plus, maybe they have one or more people with them to set up and adjust the lighting for a specific shot.

The truth about imaging is that the bigger the camera, the higher the resolution of the image and the higher the light sensitivity of the camera. Of course it is possible to put a lousy lens and a lousy sensor in a big but cheap camera and wind up with lousy images. It is also possible to put an excellent lens and excellent sensor in a small camera (e.g. a good smartphone camera) and wind up with excellent images. Still, the upper limit of the resolution of any conventional camera is set by the laws of physics, specifically the diffraction of light, and is based on the size of the camera. Light sensitivity is currently set as much by engineering considerations as by limitations set by physics, but still, for the foreseeable future, bigger is better.
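To put rough numbers on the diffraction argument, here is a minimal Python sketch using the Rayleigh criterion, θ ≈ 1.22 λ/D, for the smallest angle a circular aperture of diameter D can resolve. The 2 mm and 25 mm aperture diameters and the 550 nm (green) wavelength are illustrative assumptions, not measurements of any particular phone or lens.

```python
import math

def rayleigh_limit_arcsec(aperture_mm, wavelength_nm=550.0):
    """Smallest resolvable angle (arcseconds) for a circular aperture."""
    theta_rad = 1.22 * (wavelength_nm * 1e-9) / (aperture_mm * 1e-3)
    return math.degrees(theta_rad) * 3600.0

# Assumed, illustrative aperture diameters (not taken from any real product)
apertures_mm = {
    "small phone-style aperture": 2.0,
    "large camera lens aperture": 25.0,
}

for label, diameter in apertures_mm.items():
    limit = rayleigh_limit_arcsec(diameter)
    print(f"{label} ({diameter} mm): {limit:.1f} arcsec")
```

The limit scales inversely with aperture diameter, so in this sketch the aperture that is 12.5 times wider can, at best, resolve detail that is 12.5 times finer.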

In today’s smartphone market, the size of the embedded camera limits the thickness of the smartphone. If you make a thinner camera, you can make a thinner smartphone. But with a conventional, diffraction-limited lensed camera design, a thinner camera also means a smaller sensor and lower resolution images, no matter how good a lens you use or how many pixels there are in the sensor.

Is it possible to make a thinner camera without losing resolution? The diffraction limit on imaging actually relates to the camera’s area, not its thickness. The thickness limit on conventional cameras comes from the relationship between conventional lenses, f/# and pupil size. With realistic, diffraction-limited lens designs this leads to a camera that is essentially a cube, with the front to rear dimension of the camera (image plane to front of lens) comparable to the dimensions of the sensor array. A bigger sensor array leads to a thicker camera, but the system can also have higher resolution and light sensitivity. How about an unconventional camera? Can we bypass the limitations of conventional diffraction-limited lenses?
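The “cube” relationship can be roughed out with two textbook formulas: the focal length needed to cover a given field of view, f = w / (2 tan(FOV/2)), and the Airy disk diameter of a diffraction-limited lens, 2.44 λ × f/#. The sketch below is only an approximation under assumed values (an f/2 lens, a 65° field of view, 550 nm light, and two hypothetical sensor widths), and it treats focal length as a stand-in for camera thickness.

```python
import math

def camera_geometry(sensor_width_mm, f_number=2.0, fov_deg=65.0, wavelength_nm=550.0):
    """Return (focal length mm, pupil diameter mm, resolvable spots across the sensor)."""
    # Focal length needed so the sensor width spans the requested field of view
    focal_mm = sensor_width_mm / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    # Pupil diameter follows from the definition of f-number
    pupil_mm = focal_mm / f_number
    # Airy disk diameter (micrometers) depends only on wavelength and f-number
    airy_um = 2.44 * (wavelength_nm * 1e-3) * f_number
    spots_across = sensor_width_mm * 1000.0 / airy_um
    return focal_mm, pupil_mm, spots_across

# Hypothetical sensor widths: roughly phone-sized versus full-frame-sized
for width in (5.0, 36.0):
    focal, pupil, spots = camera_geometry(width)
    print(f"sensor {width:4.1f} mm: focal length {focal:5.1f} mm, "
          f"pupil {pupil:4.1f} mm, about {spots:,.0f} resolvable spots across")
```

At a fixed f/# and field of view, both the focal length and the number of diffraction-limited spots across the sensor grow in direct proportion to sensor width, which is why a conventional camera cannot get thinner without giving up resolution.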

The answer is theoretically “yes” and several research groups have proposed technologies that could lead to relatively large area but very thin cameras that, potentially at least, have very high resolution and light sensitivity.

Very Thin, but High Resolution Cameras

Reza Fatemi, Behrooz Abiri and EE Professor Ali Hajimiri from the California Institute of Technology (Caltech) have published a paper titled “An 8×8 Heterodyne Lens-less OPA Camera.” For those with a less technically-oriented bent, Caltech has also put a free and simpler explanation of the technology on its website.

This technology uses an 8×8 optical phased array (OPA) detector to generate a receiving beam from a 0.75° region of space. This receiving beam can then be scanned electronically over an 8° field of view (FOV). This is done by controlling the phase of the light at each detector element, which sets the direction from which the overall system receives light. By varying the phase sensitivity of the different receiving diodes, the beam can be scanned very rapidly to generate the image. I won’t bother you with the details of the physics; I’m not sure I could explain them if I tried.
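For readers who want at least a feel for phase steering, here is a toy Python sketch of a generic one-dimensional, 8-element phased array receiver. It is the standard textbook array-factor calculation, not the Caltech heterodyne design; the element spacing of 7 wavelengths and the steering angles are assumptions chosen only for illustration.

```python
import numpy as np

def array_response(look_deg, steer_deg, n_elements=8, spacing_wavelengths=7.0):
    """Normalized power received from look_deg when the array is phased toward steer_deg."""
    k_d = 2.0 * np.pi * spacing_wavelengths
    idx = np.arange(n_elements)
    # Phase of an incoming plane wave at each element
    arrival = k_d * idx * np.sin(np.radians(look_deg))
    # Phase shift applied electronically to each element to point the beam
    applied = -k_d * idx * np.sin(np.radians(steer_deg))
    total = np.sum(np.exp(1j * (arrival + applied)))
    return float(np.abs(total) ** 2) / n_elements ** 2

# Scan a narrow window of arrival angles for three different phase settings
angles = np.linspace(-4.0, 4.0, 801)
for steer in (-2.0, 0.0, 2.0):
    response = np.array([array_response(a, steer) for a in angles])
    peak = angles[np.argmax(response)]
    print(f"steered to {steer:+.1f} deg -> strongest response at {peak:+.2f} deg")
```

Nothing moves mechanically here: changing only the per-element phase shifts moves the direction of strongest response, which is the sense in which a phased array can be scanned electronically.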

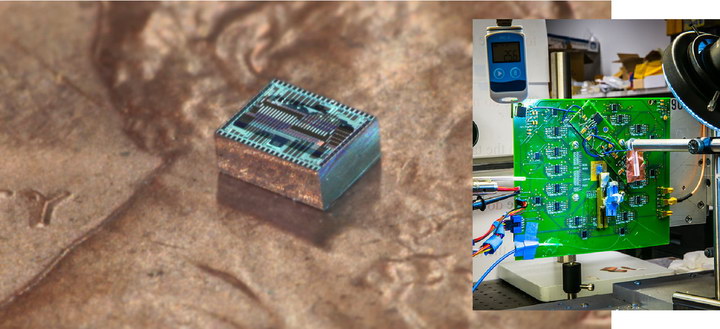

Left: Caltech’s OPA chip is dwarfed by a US penny. Right: The OPA chip in its laboratory setting. (Credit: Caltech)

Professor Hajimiri said, “We’ve created a single thin layer of integrated silicon photonics that emulates the lens and sensor of a digital camera, reducing the thickness and cost of digital cameras. It can mimic a regular lens, but can switch from a fish-eye to a telephoto lens instantaneously—with just a simple adjustment in the way the array receives light.”

Phased Arrays Already in Use

Phased array systems are already used in consumer electronics, but currently only in the RF range, not for visible light. For example, the antennas in a 60GHz Wi-Fi system are aimed using phased array techniques, and some wireless charging systems use phased arrays to aim the radiated power at the target to be charged.

This system is a research project at Caltech and clearly is a long way from a practical OPA camera for smartphones. For one thing, the lab system used 1550nm laser light, not the visible wavelengths iPhone photographers want to use. Plus, nothing is free – the 8×8 array in the Caltech camera generates an image that has roughly 8×8 resolution, far from the multiple megapixels needed for smartphone cameras. While this is a good proof-of-principle, Caltech is a long, long way from a 1920 x 1080 array of phase-sensitive diodes for visible light.

Other thin camera systems are less futuristic and may be closer to practical implementation because they build on the existing technology for high pixel count sensors. In these systems, a flat mask and lots of computing power essentially replace the lens.

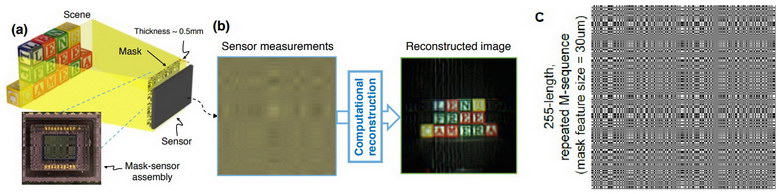

Rice University FlatCam System (Credit: Rice University)

For example, Rice University has a thin camera assembly called the FlatCam, shown in the image. A binary-coded mask (C) is placed 0.5mm away from a conventional, off-the-shelf imaging assembly (A). Every point in the original scene contributes, to some degree, to every pixel on the sensor, so the raw sensor output (B) looks nothing like the scene to the unaided eye. However, the image can be recovered computationally. In the experiment, a 512 x 512 mask was used with a 512 x 512 sub-section of a higher resolution imager to provide a 512 x 512 reconstructed image. The system does not capture depth information; like a pinhole camera, it produces an infinite depth of field. The mask shown in C was used by the Rice researchers for work in visible light. They also did experiments in the infrared using a mask with a larger pitch.
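The idea of scrambling a scene through a mask and then unscrambling it by computation can be illustrated with a toy Python sketch. It assumes a separable linear model (one random 0/1 pattern mixing rows, another mixing columns) and a simple regularized least-squares inversion. The sizes, the random masks, the noise level and the helper name `ridge_inverse` are all made up for illustration; the sketch also uses more sensor pixels than scene pixels to keep the inversion well behaved, which differs from the 512 x 512 experiment described above.

```python
import numpy as np

rng = np.random.default_rng(0)
n_scene, n_sensor = 32, 64  # toy sizes, assumed for this sketch

# Hypothetical scene: a bright rectangle on a dark background
scene = np.zeros((n_scene, n_scene))
scene[8:20, 12:24] = 1.0

# Assumed separable mask model: random 0/1 patterns mix rows and columns
mask_rows = rng.integers(0, 2, size=(n_sensor, n_scene)).astype(float)
mask_cols = rng.integers(0, 2, size=(n_sensor, n_scene)).astype(float)

# Every scene point contributes to many sensor pixels; add a little sensor noise
sensor = mask_rows @ scene @ mask_cols.T
sensor += rng.normal(0.0, 0.01, size=sensor.shape)

def ridge_inverse(a, lam=1e-3):
    """Regularized left inverse: (A^T A + lam I)^-1 A^T."""
    return np.linalg.solve(a.T @ a + lam * np.eye(a.shape[1]), a.T)

# Undo the row mixing and the column mixing to recover the scene
recovered = ridge_inverse(mask_rows) @ sensor @ ridge_inverse(mask_cols).T

print("sensor image shape:", sensor.shape)
print("worst-case reconstruction error:", np.max(np.abs(recovered - scene)))
```

The raw `sensor` array bears no visual resemblance to the rectangle, yet because the mixing is known and linear, the scene comes back once the mask patterns are inverted. That trade of glass for arithmetic is the essence of the coded mask approach.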

“Moving from a cube design to just a surface without sacrificing performance opens up so many possibilities,” Ashok Veeraraghavan, assistant professor of electrical and computer engineering at Rice said. “We can make curved cameras, or wallpaper that’s actually a camera. You can have a camera on your credit card or a camera in an ultrathin tablet computer.”

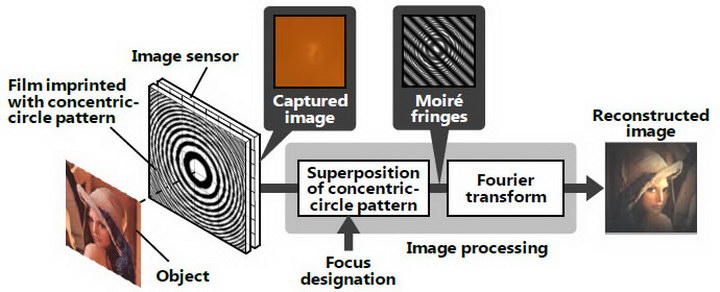

The lensless camera from Hitachi is based on a mask with concentric rings. (Image: Hitachi)

As a second example, Hitachi has also developed a lensless camera, as shown in the image. Since this system has been produced by a commercial electronics company, not a research university, it is probably closer to production. A circularly symmetric mask is suspended slightly above a conventional image sensor. The original image is then decoded from the captured image using moiré fringes and Fourier transforms. Like a light field camera, this system captures depth information and the image can be decoded for any focus plane.
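Here is a one-dimensional toy sketch, in Python, of how moiré fringes plus a Fourier transform can turn a ring-pattern shadow into position information. It is not Hitachi’s actual pipeline: it assumes an idealized zero-mean pattern cos(βx²) as a slice through the concentric rings, a single point source that shifts the shadow sideways, and made-up values for β and the shift. Multiplying the shifted shadow by an unshifted copy of the pattern gives fringes whose spatial frequency is β × shift / π, so the location of the spectral peak reveals the shift.

```python
import numpy as np

n = 1024
x = np.linspace(-1.0, 1.0, n, endpoint=False)  # sensor coordinate, arbitrary units
dx = x[1] - x[0]
beta = 200.0       # assumed chirp rate of the ring pattern
true_shift = 0.1   # assumed shadow displacement from an off-axis point source

# Idealized zero-mean 1-D slice through a concentric-ring pattern
shadow = np.cos(beta * (x - true_shift) ** 2)  # mask shadow cast on the sensor
reference = np.cos(beta * x ** 2)              # same pattern applied in software

# The product contains moire fringes at spatial frequency beta * shift / pi
moire = shadow * reference

# Locate the fringe frequency with a Fourier transform, skipping the DC bin
spectrum = np.abs(np.fft.rfft(moire))
freqs = np.fft.rfftfreq(n, d=dx)
peak_bin = 1 + np.argmax(spectrum[1:])
estimated_shift = np.pi * freqs[peak_bin] / beta

print(f"true shift {true_shift:.3f}, estimated shift {estimated_shift:.3f}")
```

In this simplified picture, each point in the scene produces its own fringe frequency, so a single Fourier transform sorts the captured pattern back into scene positions. The estimate is only as fine as the frequency bin spacing of the transform.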

According to Hitachi, coded aperture cameras like theirs or the one from Rice are not a new technology (the Rice paper has 40 references), but the current Hitachi approach is said to use 1/300 the computing power of previous designs. This would give Hitachi a major advantage over others developing coded aperture camera designs. According to Hitachi, this camera technology makes it possible to make a camera lighter and thinner since a lens is unnecessary, and allows the camera to be more freely mounted in systems such as mobile devices and robots without imposing design constraints.

Can You Square the Circle?

There you have it – very thin cameras suited for use in smartphones are a subject of intense research in both industry and universities. Perhaps, in the short term, one of the many large area coded aperture designs could be incorporated into a smartphone case. Currently these cases are just decorative and protective, with some containing an add-on battery. They have plenty of unused area for an ultra-thin camera. How about a smartphone case with a built-in camera using a sensor as big and as good as the one used in the $3,499 Canon EOS 5D Mark IV, a popular choice among professional photographers?

Think of the ad campaign that could lead to! -Matthew Brennesholtz