For someone with a technical background like me, the technical sessions are one of the highlights of Display Week. I attended most of the Augmented Reality (AR) and Virtual Reality (VR) sessions and will be reporting on them in this article. Sessions 4, 11 and 18 were on Tuesday 5/23 of Display Week. Sessions 46, 51, 58 and 65 plus the poster session were on Thursday. The last two sessions, 72 and 78, were on Friday, after many Display Week attendees had gone home. There were no AR/VR sessions on Wednesday, to avoid a conflict with the AR/VR Business Conference. (See Display Week’s AR/VR Business Conference.)

Several AR/VR papers presented at Display Week 2017 were designated “Distinguished papers.” Extended versions of all distinguished papers are available at no cost in the Journal of the SID (J-SID) through December 31, 2017.

Sessions 4 & 11: AR/VR Invited Papers

All the papers in these two back-to-back sessions were invited papers related to AR and VR. Speakers were from many of the major players in the industry, including Google (4.1), Intel (4.2), Stanford University & Dartmouth College (4.3), Intel (4.4), Microsoft (11.1), Meta Co. (11.2), Lumus Ltd. (11.3) and Avegant Corp. (11.4). Several of the papers were undiluted pro-AR/VR propaganda, including 4.1, 4.2 and 11.2, so I won’t report on them.

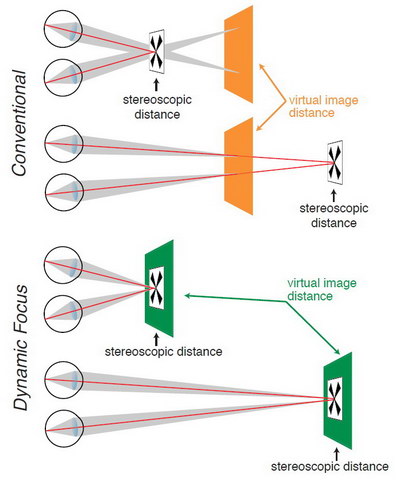

Paper 4.3 was an introduction to a recurrent theme in many AR/VR papers later in the program: the Vergence/Accommodation issue. This is the same issue raised by, but never really solved for, 3D TV. Experience with 3D cinema has shown a simple solution – put the image far enough away from the viewer so that no accommodation is needed and the problem goes away. Throughout the conference, no one explained why this solution could not be adapted to the virtual image in a VR headset, where the optical designer has considerable freedom to place the virtual image at any distance desired. Accommodation correction may be required in AR systems, as I’ll describe later.

Schematic of the Vergence/Accommodation problem with a fixed-focus 3D VR HMD (top) and its solution with a dynamic-focus HMD (bottom) (Image: Stanford and Dartmouth)
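
To put rough numbers on the Vergence/Accommodation mismatch, here is a minimal sketch, not taken from paper 4.3. It assumes the usual textbook relations: focus demand in diopters is the reciprocal of the distance in meters, and the vergence angle follows from simple triangle geometry. The 63 mm interpupillary distance, the function names and the example distances are all illustrative assumptions.

```python
import math

# Assumed typical adult interpupillary distance (illustrative, not from the paper).
ASSUMED_IPD_M = 0.063


def accommodation_demand_diopters(distance_m: float) -> float:
    """Focus demand in diopters (1/m) for an image at distance_m."""
    return 1.0 / distance_m  # 1/inf evaluates to 0.0 in Python


def vergence_angle_deg(distance_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Angle between the two lines of sight when fixating at distance_m."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def va_mismatch_diopters(focal_plane_m: float, stereo_distance_m: float) -> float:
    """Difference between where the eyes focus (the fixed virtual image plane)
    and where the stereo content tells them to converge, in diopters."""
    return abs(accommodation_demand_diopters(focal_plane_m)
               - accommodation_demand_diopters(stereo_distance_m))


if __name__ == "__main__":
    # Hypothetical cases: (virtual image plane, apparent stereo distance), in meters.
    cases = [(math.inf, 10.0), (2.0, 10.0), (2.0, 0.5)]
    for focal_plane, stereo in cases:
        print(f"image plane {focal_plane} m, content at {stereo} m: "
              f"vergence {vergence_angle_deg(stereo):.2f} deg, "
              f"mismatch {va_mismatch_diopters(focal_plane, stereo):.2f} D")
```

Under these assumptions, the mismatch stays small as long as both the virtual image plane and the stereo content are far away, and grows quickly once content is rendered within arm’s reach while the image plane stays fixed.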

Paper 4.4 from Intel puzzled me more than any other paper in the entire conference. It was titled “An End-To-End Virtual Reality Live Streaming Solution.” It was primarily about the live streaming of sporting events, which typically last two hours or more. Hasn’t anyone told Intel that VR sickness keeps people from watching VR for more than about 20 minutes at a time? When I asked the speaker about that during the Q&A session, he dismissed it, saying VR HMD technology would solve that problem. He also did not present any viable business model that would allow the recovery of the very high VR production costs for live sports. I wonder whether Intel has learned anything from the rise and fall of 3D TV, where, once the novelty wore off, very few viewers had any interest in live 3D sports.

Paper 11.1 was largely a duplicate of what Microsoft was going to say in the next day’s AR/VR conference, perhaps at a slightly higher technical level. Paper 11.4 was a review of the design issues associated with a near-to-eye (NTE) display using a DMD, LCoS, LCD or OLED imager. Paper 11.3 was similar to 11.4 except it focused on the Lumus optical design.

As is typical of Invited Papers, these two sessions would be useful background for someone new to AR/VR and its capabilities, but didn’t really reveal any new technology.