A humongous floating video wall and a virtual badminton game.

Image provided by the Tokyo Organizing Committee of the Olympic and Paralympic Games. Image created with the cooperation of NTT.

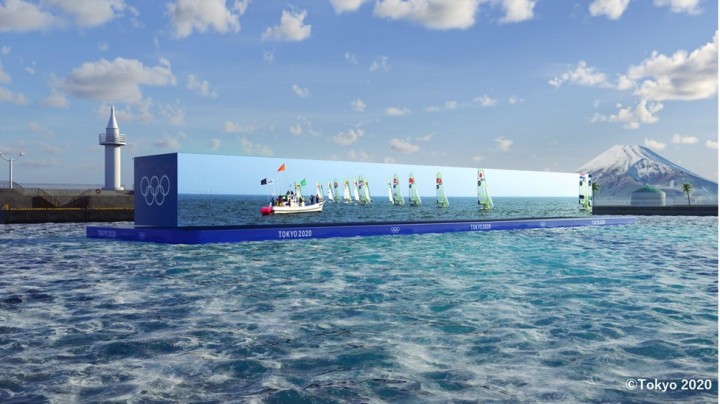

For the Olympics, NTT Docomo built and launched (literally and figuratively) a barge carrying a very large display, which the company has named Kirari! The barge displayed live transmissions of 12K ultra-wide composited images of an entire sailing event to remote locations. NTT’s goal was to provide the realism of being close to the venue, as well as a sense of unity among players, spectators, and audiences, even when they are far apart.

Images taken by boats and drones equipped with multiple 4K cameras were combined in real time into an ultra-wide image of 12K resolution, consisting of three aligned 4K images, and transmitted live to a 60-meter-long offshore wide screen floating in front of the sailing competition site.

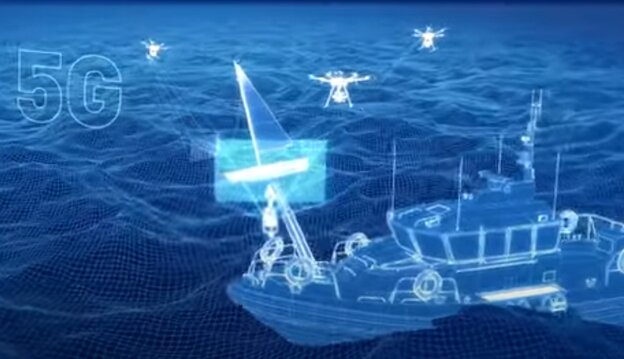

4K cameras on ships and drones captured the real-time Olympic sailing and water events (Source: NTT DOCOMO)

NTT set up the floating display in the bay of Tokyo for visitors and the press to watch various Olympic sailing and water events. The data from the 4K cameras on drones and ships was sent by an Intel-based 5G system. The video was displayed at the Enoshima Olympic village and also on a super-wide 13-meter display set up in the main press center at Tokyo Big Sight.

Drones and ships capture the live action of the racing games and transmit it over 5G to the viewing stations (Source: NTT DOCOMO)

NTT contributed the Kirari! display and accompanying technology to the “Tokyo 2020 5G Project.” The data from the cameras was synthesized to create a 12K image that was projected on the big display.

NTT took four separate images with about 10% overlap and then merged them into three super-wide images that they blended into one giant image the size of three 4K images: 11520 × 2160 pixels, or about 25 Mpixels.
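The resolution figures are easy to check, and the idea of blending overlapping frames can be illustrated with a simple linear cross-fade. The sketch below is only an illustration under stated assumptions: `canvas_size` and `feather_blend` are hypothetical names, a 4K frame is taken to be 3840 × 2160, and the linear feather is a generic stitching technique, not NTT's actual compositing method.

```python
import numpy as np

UHD_W, UHD_H = 3840, 2160  # assumed size of one 4K (UHD) frame


def canvas_size(tiles: int = 3) -> tuple[int, int, float]:
    """Width, height, and megapixel count of `tiles` 4K frames side by side."""
    width = tiles * UHD_W
    return width, UHD_H, width * UHD_H / 1e6


def feather_blend(left: np.ndarray, right: np.ndarray, overlap: int) -> np.ndarray:
    """Join two H x W x C frames horizontally with a linear cross-fade.

    The last `overlap` columns of `left` and the first `overlap` columns of
    `right` are assumed to show the same part of the scene.
    """
    # Weight for the left frame falls from 1 to 0 across the seam.
    ramp = np.linspace(1.0, 0.0, overlap)[None, :, None]
    seam = left[:, -overlap:] * ramp + right[:, :overlap] * (1.0 - ramp)
    return np.concatenate([left[:, :-overlap], seam, right[:, overlap:]], axis=1)


if __name__ == "__main__":
    print(canvas_size())  # (11520, 2160, 24.8832)
    a = np.ones((4, 100, 3))
    b = np.zeros((4, 100, 3))
    print(feather_blend(a, b, overlap=10).shape)  # (4, 190, 3)
```

Three 4K frames side by side give 11520 × 2160 = 24,883,200 pixels, which rounds to the 25 Mpixels quoted above.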

In addition to cheering on players from their home countries, players and staff could gauge important conditions such as waves and wind in distant competition areas, because they could see them in the video captured on site.

Visitors watch the Olympics from a safe distance (Source: NTT DOCOMO)

NTT said the goal was to provide a more realistic visual experience for people who couldn’t attend the Olympics due to the pandemic. The company plans to leverage this project as a new way for people to watch sporting events in the age of the new normal.

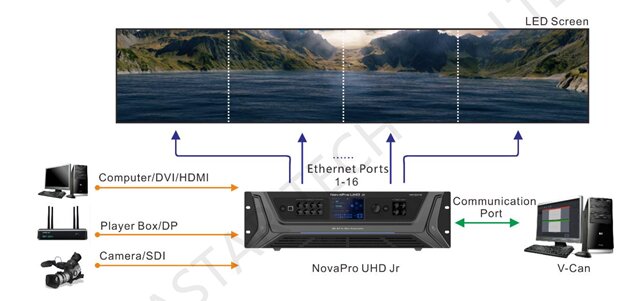

NTT used three NovaStar UHD Jr LED panel controllers to drive the 25 Mpixel screen. NovaStar is based in Xi’an, China.

NovaStar’s UHD Jr LED screen controller

The NovaPro UHD Jr accepted DVI inputs and drove the screens via its Gigabit Ethernet output. A single input source can be made up of at most four DVI inputs.

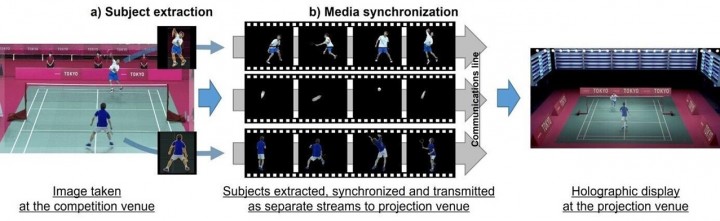

Remote Badminton Without a Green Screen

NTT also used Kirari! in a project to transmit the badminton competition held at the Musashino Forest Sport Plaza over the network to a remote projection venue, the Miraikan (National Museum of Emerging Science and Innovation).

The system for the badminton event used real-time extraction of objects with an arbitrary background.

When extracting only the subject from a video, a green or blue backdrop is generally prepared, and the background color is removed by chroma keying. In contrast, the NTT system extracted only the subject in real time from the video as it was played in the field, without the need to prepare a special background environment. NTT had been conducting research and development on this technology for some time.

NTT’s engineers achieved robust and accurate subject extraction by using a deep learning model optimized for the space of a badminton court and adding a pseudo-depth image to the input image. That made it possible to extract players individually, even in a fast-moving sport like badminton where players are separated between the front and back sides of the court.
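For comparison, the conventional chroma-key approach can be sketched in a few lines. This is a minimal illustration, assuming an RGB frame shot against a green backdrop; the function names and the `dominance` and `min_green` thresholds are hypothetical choices for the example, and it does not represent NTT's learning-based extractor, which needs no backdrop at all.

```python
import numpy as np


def chroma_key_mask(frame: np.ndarray, dominance: float = 1.3,
                    min_green: int = 80) -> np.ndarray:
    """Return a boolean H x W mask that is True for foreground pixels.

    A pixel counts as backdrop when its green channel is bright enough and
    clearly stronger than both red and blue.
    """
    rgb = frame.astype(np.float32)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    is_backdrop = (g > min_green) & (g > dominance * r) & (g > dominance * b)
    return ~is_backdrop


def composite(frame: np.ndarray, mask: np.ndarray,
              new_background: np.ndarray) -> np.ndarray:
    """Keep foreground pixels from `frame`, take the rest from `new_background`."""
    return np.where(mask[..., None], frame, new_background)


if __name__ == "__main__":
    frame = np.array([[[0, 255, 0], [200, 30, 30]]], dtype=np.uint8)  # green, red
    background = np.zeros_like(frame)
    mask = chroma_key_mask(frame)
    print(mask)                                # [[False  True]]
    print(composite(frame, mask, background))  # green pixel replaced by black
```

The sketch shows why the technique depends on a prepared backdrop: the mask is driven purely by color, so anything in the scene that is not the key color is treated as the subject.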

To transmit video, NTT used the arbitrary-background extraction technology described above to separate the player images, the shuttlecock image, and the sound of cheering from the competition footage and transmit them as separate streams to the projection venue. The system synchronized those separate streams during transmission. That made it possible to display the elements of a life-size court, such as players, shuttles, and spectators’ cheers, with the right timing, which improved the sense of reality.
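The article does not say how the streams were synchronized. One common approach, shown here purely as an assumption, is to stamp every item with a shared capture timestamp and release a set of items only when all streams have one for the same instant. The class name, stream names, and exact-match timestamps below are hypothetical.

```python
from collections import deque


class StreamSynchronizer:
    """Align items from several streams by a shared capture timestamp.

    Assumes each stream delivers its items in increasing timestamp order and
    that matching items carry identical timestamps (e.g. a frame counter).
    """

    def __init__(self, stream_names):
        self.queues = {name: deque() for name in stream_names}

    def push(self, name, timestamp, payload):
        self.queues[name].append((timestamp, payload))

    def pop_aligned(self):
        """Return (timestamp, {stream: payload}) or None if nothing is ready."""
        while all(self.queues.values()):  # every stream has at least one item
            newest_head = max(q[0][0] for q in self.queues.values())
            # Items older than the newest head can never be matched: drop them.
            for q in self.queues.values():
                while q and q[0][0] < newest_head:
                    q.popleft()
            if all(q and q[0][0] == newest_head for q in self.queues.values()):
                return newest_head, {n: q.popleft()[1] for n, q in self.queues.items()}
        return None


if __name__ == "__main__":
    sync = StreamSynchronizer(["players", "shuttle", "cheering"])
    sync.push("players", 0, "P0")
    sync.push("players", 1, "P1")
    sync.push("cheering", 0, "C0")
    sync.push("cheering", 1, "C1")
    sync.push("shuttle", 1, "S1")  # shuttle item for t=0 never arrived
    print(sync.pop_aligned())  # (1, {'players': 'P1', 'shuttle': 'S1', 'cheering': 'C1'})
    print(sync.pop_aligned())  # None
```

A real system would also need to tolerate jitter and slightly different clocks, for instance by matching timestamps within a tolerance rather than exactly.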

Transmission of filmed images from the competition venue and holographic display at the projection venue (Source: NTT)

For the shuttlecock, which moves both subtly and at very high speed in video, NTT established a method to accurately extract the contours of the shuttlecock as it deforms into various shapes by identifying its position using a deep learning model developed specifically for badminton. Opposing players and the shuttlecock are extracted independently, allowing for multi-layered projection at the remote venue.

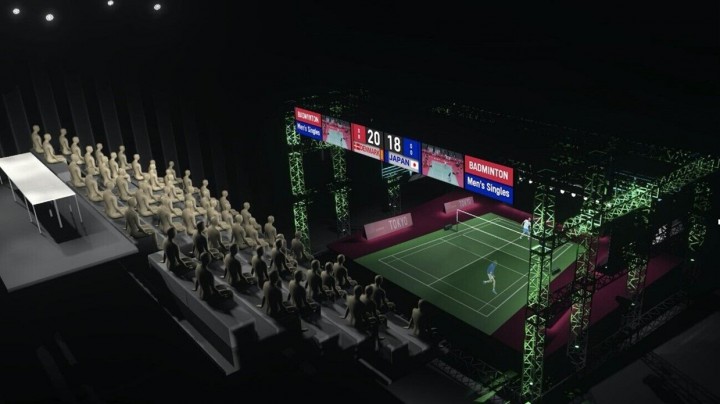

In conventional ‘holographic-like’ displays, ‘Pepper’s ghost’ is often used, where aerial images appear by reflecting displayed images (from below the viewer’s vision) onto a half-mirror set at an angle of 45 degrees. With this method, the depth of the aerial image is fixed, and there have been various issues in reproducing a space with different depths, such as badminton in which the depths of the players in front, the net, and the players at the back are different.
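The fixed depth follows from plane-mirror geometry: each point on the display appears as far behind the mirror as it actually is in front of it. The sketch below works this out for an idealized setup. The coordinate frame (viewer looking along the z axis, mirror on the plane y = z, display lying flat below the mirror) and all names are assumptions made for illustration.

```python
import numpy as np

# Unit normal of a half-mirror tilted 45 degrees: the plane y = z through the origin.
MIRROR_NORMAL = np.array([0.0, 1.0, -1.0]) / np.sqrt(2.0)


def virtual_image(points: np.ndarray) -> np.ndarray:
    """Reflect N x 3 points across the mirror plane (p' = p - 2 (p . n) n)."""
    p = np.asarray(points, dtype=float)
    distance = p @ MIRROR_NORMAL  # signed distance of each point from the mirror
    return p - 2.0 * distance[..., None] * MIRROR_NORMAL


if __name__ == "__main__":
    display_depth = 1.5  # display lies flat, 1.5 units below the mirror center
    corners = np.array([[x, -display_depth, z]
                        for x in (-1.0, 1.0) for z in (-0.5, 0.5)])
    ghost = virtual_image(corners)
    print(np.round(ghost, 6))
    # Every reflected corner has the same z: the aerial image is a flat,
    # upright plane at one fixed depth behind the mirror.
    print(np.allclose(ghost[:, 2], -display_depth))
```

Because every display point lands on the same plane, a single mirror can place the whole image at only one depth, which is the limitation described above.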

NTT solved the problem by placing the spectator seats at a height relative to the court close to that of the actual competition venue. Two half-mirrors and an LED display/projector were set up along the line of sight looking down from the seats.

A special device reproduces the height difference between the match court and the spectator seats (Source: NTT)

That arrangement allowed for a multi-layered projection of two aerial images at different depths, with the near-side players on the front of the court and the far-side players across the net on the opposite side, each holographically reproduced. In addition, continuous, connected, and natural shuttle movement was reproduced by dynamically changing the display position of the shuttle image between the two layers of aerial images. It was also possible to display live match images in the form of giant signage floating above the court, and to enhance the live viewing experience with projection mapping on the court. (JP)
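How the shuttle image was moved between the layers is not described in detail. As a rough illustration only, one could assign the shuttle to a layer according to which side of the net it is on. The normalized depth scale, the net position, and every name in this sketch are hypothetical.

```python
from dataclasses import dataclass

NET_DEPTH = 0.5  # assumed: depth runs from 0.0 (near baseline) to 1.0 (far baseline)


@dataclass
class ShuttleSample:
    t: float      # capture time in seconds
    depth: float  # normalized position along the court


def layer_for(depth: float) -> str:
    """Pick the aerial-image layer on the same side of the net as the shuttle."""
    return "front" if depth < NET_DEPTH else "back"


def layer_schedule(track):
    """Return (time, layer) pairs for each moment the shuttle changes layer."""
    schedule = []
    current = None
    for sample in track:
        layer = layer_for(sample.depth)
        if layer != current:
            schedule.append((sample.t, layer))
            current = layer
    return schedule


if __name__ == "__main__":
    rally = [ShuttleSample(0, 0.2), ShuttleSample(1, 0.4), ShuttleSample(2, 0.6),
             ShuttleSample(3, 0.8), ShuttleSample(4, 0.3)]
    print(layer_schedule(rally))  # [(0, 'front'), (2, 'back'), (4, 'front')]
```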

This article was originally published in Jon Peddie’s excellent TechWatch report and is reproduced with kind permission.

Dr. Jon Peddie is a recognized pioneer in the graphics industry, President of Jon Peddie Research, and named one of the most influential analysts in the world. He lectures at numerous conferences and universities on topics pertaining to graphics technology and the emerging trends in digital media technology. Former President of Siggraph Pioneers, he serves on the advisory boards of several conferences, organizations, and companies, and contributes articles to numerous publications. In 2015, he was given the Lifetime Achievement award from the CAAD society. Peddie has published hundreds of papers to date, and has authored and contributed to eleven books.

His most recent tech title was “Augmented Reality, where we all will live.” His latest fiction work is Database, and his next one will be on some key technology history. Jon has been elected to the ACM Distinguished Speakers program.