The first session at the Display Summit was on “Trends in High Dynamic Range Ecosystem” (sic).

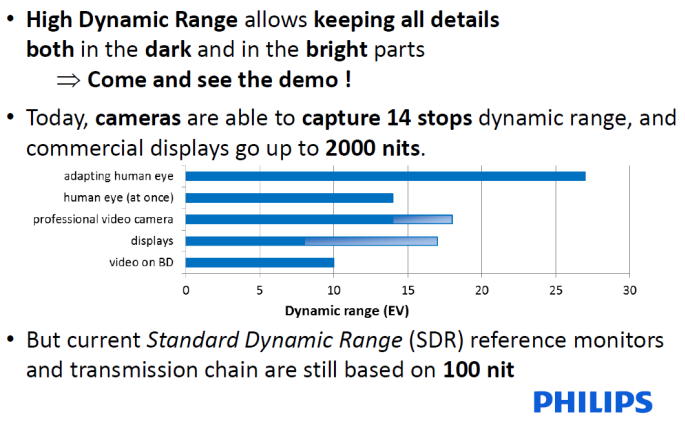

The first speaker was Joop Talstra of Philips, where he is involved with standardisation. He started by explaining high dynamic range (HDR), saying that without it, many scenes force a compromise: you clip the highlights, lose detail in the blacks, or raise the black level to recover that detail. Modern cameras can capture 14 f-stops, and displays are now bright enough to show a range equivalent to that of the eye (without adaptation).

What are the problems? How do you handle the extra data, Talstra asked. There is a cost to increasing the bandwidth to support the extra bits. There is also the question of how to deal with a range of different mastering displays. While Hollywood and TV producers use a standard of 100 cd/m² for mastering, there is a wide range of consumer displays available in the market – not just in the 100-400 cd/m² range. There are already TVs with 1,000 cd/m² of peak brightness.

On the question of transmission, Talstra said that the good news is that you don’t need 20 bits of data (which you might need if you captured the brightness in a linear way – Man. Ed.). You can do it in 10 to 12 bits, and the so-called PQ (perceptual quantizer) curve, now standardised as SMPTE ST2084, is well understood.
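As a rough illustration of why a perceptual curve fits into 10 to 12 bits, the sketch below implements the PQ transfer function using the constants published in SMPTE ST2084. The function names, and the choice of full-range integer code values, are assumptions made for this example and were not part of the talk.

```python
# Constants as published in SMPTE ST 2084 (PQ).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # PQ is defined for absolute luminance up to 10,000 cd/m²


def pq_encode(nits: float) -> float:
    """Map absolute luminance in cd/m² to a PQ signal in [0, 1]."""
    y = min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS
    yp = y ** M1
    return ((C1 + C2 * yp) / (1 + C3 * yp)) ** M2


def pq_decode(signal: float) -> float:
    """Map a PQ signal in [0, 1] back to absolute luminance in cd/m²."""
    sp = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PEAK_NITS * (max(sp - C1, 0.0) / (C2 - C3 * sp)) ** (1 / M1)


def to_code_value(signal: float, bits: int = 10) -> int:
    """Quantise a PQ signal to an integer code (full range, an assumption here)."""
    return round(signal * (2 ** bits - 1))


if __name__ == "__main__":
    for nits in (0.1, 100, 400, 1000, 10000):
        signal = pq_encode(nits)
        print(f"{nits:>7} cd/m² -> PQ {signal:.4f} -> 10-bit code {to_code_value(signal)}")
```

With this curve, 100 cd/m² lands at roughly half of the signal range, so about half of the available code values are spent on the range that SDR mastering already covers, and the rest on the highlights above it.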

There is a variety of different mastering monitors, and the problem of content looking good on the kind of display it was mastered for is basically solved by ST2086, which standardises the metadata that records how the content was mastered. “How do you render for different TVs?” is a different question. Ideally, you would send optimised content for each class of display, but this is unrealistic, Talstra said. There is convergence in the industry on creating two versions – one with an SDR range and one with an HDR range. The set can then interpolate between the two according to its capabilities.
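The interpolation idea can be sketched as follows. Talstra did not describe a specific algorithm, so everything here is an assumption for illustration: the blend weight is taken from the display's peak luminance on a logarithmic scale, the two grades are blended geometrically, and the function names and the 100 and 1,000 cd/m² defaults are hypothetical.

```python
import math


def blend_weight(display_peak: float,
                 sdr_peak: float = 100.0,
                 hdr_peak: float = 1000.0) -> float:
    """Return 0.0 for an SDR-class display, 1.0 for an HDR-master-class display.

    Assumption: the weight is linear in log luminance between the two peaks.
    """
    if display_peak <= sdr_peak:
        return 0.0
    if display_peak >= hdr_peak:
        return 1.0
    return math.log(display_peak / sdr_peak) / math.log(hdr_peak / sdr_peak)


def interpolate_grade(sdr_nits: float, hdr_nits: float, display_peak: float,
                      sdr_peak: float = 100.0, hdr_peak: float = 1000.0) -> float:
    """Blend one pixel's SDR-grade and HDR-grade luminance for a given display.

    Assumption: a geometric (log-domain) blend; a real set may do something else.
    """
    w = blend_weight(display_peak, sdr_peak, hdr_peak)
    return sdr_nits ** (1.0 - w) * hdr_nits ** w


if __name__ == "__main__":
    # A highlight graded at 80 cd/m² in SDR and 800 cd/m² in HDR.
    for peak in (100, 400, 1000):
        print(peak, round(interpolate_grade(80.0, 800.0, peak), 1))
```

A 400 cd/m² set in this sketch ends up between the two grades rather than simply clipping the HDR version, which is the behaviour the two-version approach is meant to allow.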

To deal with the transmission capacity problem, you don’t want to send two complete streams. It’s better to send one version (SDR, for example) together with metadata that tone maps from one version to the other. This can be managed by scene-by-scene metadata and by ST2094 (still a “work in progress” at SMPTE), which allows frame-by-frame metadata. You can also code any residual difference between SDR and HDR in another layer. (This is the Dolby approach, Talstra said.)

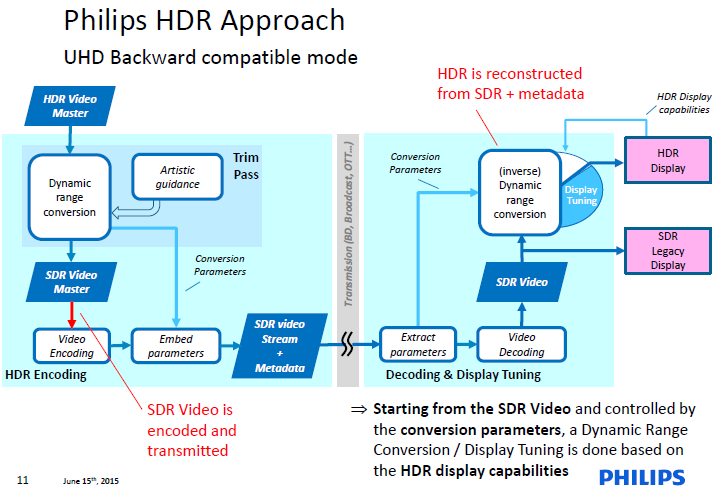

The Philips concept is to create an HDR video master using a high-performance master – then an SDR version is created, along with metadata (a few kbits per second) that helps the display to optimise the SDR version. That takes some human intervention (you just adjust knobs to create the metadata, apparently). In transmission you do the reverse.

For broadcast, the SDR is sent, but the HDR is created at the set using an inverse of the metadata. For optical/OTT transmission, where you have more bandwidth, you send the HDR and downcode to SDR.
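The principle of sending one version plus metadata and reconstructing the other at the set relies on the tone mapping being invertible. The sketch below shows this with a deliberately simple power curve controlled by one per-scene parameter. It is not Philips' actual algorithm, which the talk did not detail; `SceneMetadata`, its `exponent` field and both functions are hypothetical names for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneMetadata:
    """Hypothetical per-scene metadata: one exponent >= 1 chosen during grading."""
    exponent: float


def _clamp01(value: float) -> float:
    return min(max(value, 0.0), 1.0)


def hdr_to_sdr(hdr: float, meta: SceneMetadata) -> float:
    """Compress normalised HDR luminance (0..1) into normalised SDR luminance."""
    return _clamp01(hdr) ** (1.0 / meta.exponent)


def sdr_to_hdr(sdr: float, meta: SceneMetadata) -> float:
    """Invert hdr_to_sdr at the receiver using the same metadata."""
    return _clamp01(sdr) ** meta.exponent


if __name__ == "__main__":
    meta = SceneMetadata(exponent=2.0)
    hdr_pixel = 0.25
    sdr_pixel = hdr_to_sdr(hdr_pixel, meta)      # what a broadcaster would send
    rebuilt = sdr_to_hdr(sdr_pixel, meta)        # what an HDR set would rebuild
    print(sdr_pixel, rebuilt)
```

In the broadcast case described above, the set would apply the second function to the received SDR signal; in the optical/OTT case, it would apply the first to the received HDR signal. Only the small metadata record travels alongside the single video stream.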

For the display manufacturer, the aim is not to remove differentiation, but to allow the maker to control how the metadata is used to differentiate the HDR and SDR streams.

Philips’ concept is to keep things relatively simple and Talstra said that less than 1mm² of silicon is used for decoding.

In questions, Talstra said that metadata is a small overhead; 10-bit data is a bigger one, but it is still less than the overhead of UHD resolution or HFR.

The ITU is looking at standardisation for HDR and ST2084 is “a strong candidate”, in his view.

There was another question. Colourists spend all day working at 100 nits and in very low light, so there is an issue of how HDR content will affect the colourist. Talstra said that HDR will probably be overused initially, for effect, as dramatic 3D was at first. Colourists are worried about sudden “popping HDR” that is very bright and may destroy colour vision for 10 minutes. Given that the best colourists are very expensive, this is both a cost issue and a health and safety issue.