3M has often been the source of real innovations in the display field, so I was surprised to stumble upon a new technology for optics that seemed to have slipped by me when it was first announced. However, I’ve caught up now, after seeing a reference to a joint development that 3M is pursuing with Pegatron to make reference headsets using the technology.

One of the challenges in developing VR and mixed reality headsets is that the headset is often quite big and projects a long way from the face. That puts the weight of the headset away from the face, which makes it harder to design comfortable headsets and means, for example, some pressure on the face from the weight of the headset. The nearer you can bring the optics, the lighter you can make the headset and the smaller the moment of the mass of the optics.

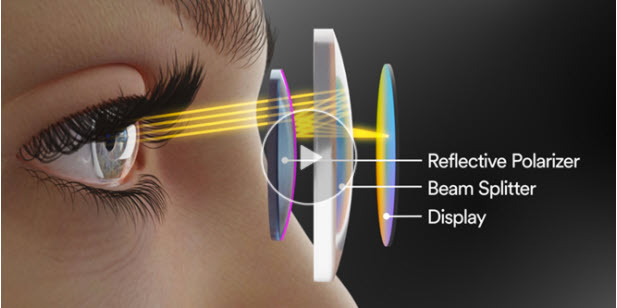

3M has developed an interesting concept of ‘folded optics’ that can substantially reduce the depth needed between the lens and the eye. The technology can also be used to allow some adjustment of the focal length, which means that spectacles may not be needed when using the headset. In the longer term it may mean an ability to offer dynamic focal length corrections to help with, for example, focus/vergence disparity, which can cause discomfort.

The key to the technology is a reflective polariser. 3M has been working with this kind of optical element for years, as the firm’s DBEF reflective polarisers have been widely used in LCDs to recycle light in the backlight, to reduce power consumption or to enhance brightness and contrast.

The headset system works by using two lenses. Light from a display (which is circularly polarised) goes through a 50/50 beam splitter on the first lens and through a quarter-wave (λ/4) coating, which changes the polarisation. The light impinges on a reflective polariser on the front of the second lens. The polariser reflects the light back again to the inside of the first lens (where the beam splitter is), from where it is reflected back into the eye. The front lens can be moved backwards and forwards to adjust the focal length.
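One consequence of the folded path described above is that the light meets the 50/50 beam splitter twice, once in transmission and once in reflection. The sketch below is a back-of-envelope estimate of the best-case light throughput under idealised assumptions that are not from 3M: a lossless splitter, a perfect reflective polariser and no absorption in the lenses or coatings. The function name and parameters are hypothetical, chosen only for illustration.

```python
def folded_path_throughput(
    splitter_transmission: float = 0.5,
    polariser_reflectance: float = 1.0,
    polariser_transmittance: float = 1.0,
) -> float:
    """Fraction of display light reaching the eye in an idealised folded path.

    Assumed sequence (a simplification of the description in the text):
      1. transmit through the beam splitter on the first lens
      2. reflect off the reflective polariser on the second lens
      3. reflect off the beam splitter
      4. transmit through the reflective polariser towards the eye
    The splitter is assumed lossless, so reflectance = 1 - transmission.
    """
    splitter_reflectance = 1.0 - splitter_transmission
    return (
        splitter_transmission
        * polariser_reflectance
        * splitter_reflectance
        * polariser_transmittance
    )


ideal = folded_path_throughput()
print(f"Ideal throughput with a 50/50 splitter: {ideal:.0%}")
```

Under these assumptions the ceiling is a quarter of the display's light, so a real system would be expected to come in below that once coating and polariser losses are included.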

3M has been working on this kind of VR optics since 2013 and claims that the resolution is better than that of the frequently used Fresnel optics because of the way that the lenses can be optimised. It calls the concept the Harp, or High Acuity Reflective Polariser Lens. The lens is 2.4 cm thick in the Pegatron version of the headset.

You can find more content from 3M including a 40 minute (and pretty cheesy!) video presentation about the headset here.

Headset Details

The headset uses the Qualcomm XR2 processor and includes a 6 DoF mono camera-based tracking system and dual colour cameras for pass-through video. The headset weighs 376 g (about 13 oz) and the casing is modular to allow OEMs to have different designs. The battery is at the back so that the weight is quite balanced front to back, which reduces the pressure on the face. The headset has options to allow users to wear spectacles. There is built-in audio with speakers in the arms of the unit. To keep the face cool, there are ventilation slots to allow air to flow from below.

The display device in the Pegatron headset is a 2.1″ diagonal LCD with 1058 ppi and a 90Hz refresh rate (my quick calculation based on a square LCD shape was that the resolution is 1600 x 1600 per eye – that was confirmed in the Q&A). The folded optic path is said to maintain good contrast and resolution in the image. The field of view is 95° per eye, allowing up to 105° in stereo view.
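The quick calculation mentioned above can be reproduced as a rough sketch. It assumes, as the text does, a perfectly square panel, so the side length is the diagonal divided by √2. The function name is hypothetical.

```python
import math


def square_panel_pixels_per_side(diagonal_in: float, ppi: float) -> float:
    """Estimate pixels along one side of a square panel.

    Assumes a square active area, so side = diagonal / sqrt(2).
    """
    side_in = diagonal_in / math.sqrt(2)
    return side_in * ppi


pixels_per_side = square_panel_pixels_per_side(2.1, 1058)
print(f"Estimated pixels per side: {pixels_per_side:.0f}")
```

The estimate comes out a little under 1,600 pixels per side, which is consistent with the confirmed 1600 x 1600 figure given that the quoted diagonal is rounded to one decimal place.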

The Harp can be adapted for different kinds and sizes of displays, but the lenses would need to be re-optimised for each one. 3M is open to this discussion but it’s quite a complex process. It might be able to stretch to bigger displays up to around 2.5″, but 3M pointed out that there are no such panels around at the moment.

In response to a question about IPD (interpupillary distance), 3M said that because of its optical system, the headset can match 90% of potential users using a fixed system, but it would be possible to design an adjustable system if customers wanted one.

For a more formal deep dive into the technology, you can check here for a paper presented at SPIE in 2017. (BR)