3IT, which started as the 3D Centre, is focusing these days on AR and VR. 3IT is a trade association with close links to the Fraunhofer HHI in Berlin, and each year it holds an open day, usually on the Thursday before IFA, as many people are in town for the big event. We dipped out of the IFA press events to see what was going on at 3IT. We had to head back quickly for other duties after the event, so we didn’t get a chance to try the demos, but we found the event useful.

Unfortunately, few of the vendors were happy to share presentations, but thanks to those who did.

Dr Andreas Gördeler switched his speech to English. Image: Meko

The first speaker was Dr Andreas Gördeler of the German government. He said that 3IT has become an internationally recognised centre of excellence. The Federal Ministry for Economic Affairs and Energy sees immersive technologies such as AR and VR as important for automotive, industrial and medical applications, and it is supporting 3D modelling and printing as important industrial and medical technologies. According to research commissioned by the government, annual sales of 3D goods and services are growing significantly and are expected to reach €2.5 billion by 2020, while 3D printing should grow from €7 billion in 2016 to more than €20 billion by 2020.
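The 3D printing figures imply a compound annual growth rate that is easy to check. The sketch below uses only the two numbers quoted (€7 billion in 2016 and €20 billion in 2020) and the standard CAGR formula; treating 2016 to 2020 as four compounding years is our assumption about how the forecast was framed.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value over the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# 3D printing market figures quoted in the talk (EUR billions).
rate = cagr(7.0, 20.0, 2020 - 2016)
print(f"Implied growth: {rate:.1%} per year")
```

This works out at roughly 30% a year; since the forecast says "more than" €20 billion, the implied rate is at least that.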

The government is backing a Smart Data Forum that is working on projects including 3D imaging and processing under a programme called ‘Pace’. There is also a project to develop ‘digital twins’ of buildings, for construction but also for maintenance and long-term servicing.

The ministry is also working on AI and blockchain, but sees AR and 3D as important.

(Gördeler switched his speech to English because the meeting was held in English. I wonder how many UK or US ministers could do the same in German?)

HEVC & Codecs

The second speaker was Stephan Heimbecher of the Deutsche TV Plattform, whom we have often written about (he is also CTO of Sky in Germany). The title of his talk was ‘UHD Roadmap: Five Years After HEVC’, and his first question was ‘is the market going UltraHD?’

There were 7.4 million UHD sets in Germany by the end of June, after 1 million were sold in 2015, and an installed base of 10 million sets is expected in Germany by the end of the year.

These days, set makers are often ahead of content creators. Looking at the development of the HEVC codec, the standard was finalised in 2013 and live encoding was first tried in 2014. Sky launched services based on HEVC in 2016, two years later. There are so many things to consider in creating and delivering content that it can take a long time to bring in new technologies.

Turning to the question of “linear vs streamed”, Heimbecher said that every five years or so analysts predict the end of linear TV. However, linear remains an important part of the mix. Live events are key for linear broadcasting, and streaming often struggles with mass events, so ‘there is room for improvement’, he said. He believes that the one-to-many technology of satellite is a good model.
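Heimbecher’s point about mass events comes down to how delivery load scales with audience size. In unicast streaming each viewer receives a separate copy, so total delivery bandwidth grows linearly with the audience, while a one-to-many channel such as satellite sends a single copy however many people watch. The sketch below illustrates this; the 15 Mbps bitrate and the audience sizes are hypothetical numbers chosen for illustration, not figures from the talk, and the model ignores multicast and edge caching, which change where the load lands in practice.

```python
def unicast_egress_gbps(viewers: int, bitrate_mbps: float) -> float:
    """Total delivery bandwidth when every viewer receives their own stream."""
    return viewers * bitrate_mbps / 1000


def broadcast_egress_gbps(bitrate_mbps: float) -> float:
    """A one-to-many channel sends one copy regardless of audience size."""
    return bitrate_mbps / 1000


BITRATE_MBPS = 15.0  # hypothetical UHD stream bitrate

for audience in (1_000, 100_000, 1_000_000):
    print(
        f"{audience:>9,} viewers: "
        f"unicast {unicast_egress_gbps(audience, BITRATE_MBPS):>9,.1f} Gbps, "
        f"broadcast {broadcast_egress_gbps(BITRATE_MBPS):.3f} Gbps"
    )
```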

5G should enable a lot of bandwidth everywhere at low cost, but for now Heimbecher is not worried that it will compete with satellite broadcasting.

Schwarz Updates on Codecs

Dr Heiko Schwarz of Fraunhofer HHI works on H.264, H.265 and H.266, which is under development. Lots of devices can support HEVC, but many services still use H.264. The goal for the next codec, H.266, is again a 50% reduction in bitrate for the same subjective quality. Last year the main standards groups (ITU-T VCEG and ISO/IEC MPEG, working jointly) put out a call for new technologies to be used in H.266, and in April the proposals were evaluated. The best have already shown a 40% improvement in bitrate. VVC (Versatile Video Coding) is the new name for H.266. With HEVC, a 4K channel still needs nearly a whole satellite transponder, so much better compression is still needed to make room for better video.

Schwarz said that a VVC decoder may need up to 50% more complexity, while a full-featured encoder may need to be considerably bigger than current designs. As always, once an encoder has been developed, people find ways to improve the encoding.

We got a chance to chat briefly with Dr Schwarz after the event, and he told us that H.266 is an evolution of H.265, essentially ‘more of the same’, again trading better efficiency for more processing power. The codec will probably offer the same opportunities for pre-processing to reduce bitrates, and encoder technology is expected to develop steadily as content creators learn how to exploit the new codec.
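The compression targets translate directly into bitrate at constant quality. The sketch below applies per-generation savings in sequence and compares the 50% VVC target with the 40% seen in April. The 32 Mbps HEVC starting point for one 4K channel is a hypothetical figure, and real savings vary with content and encoder, so treat the outputs as back-of-envelope numbers only.

```python
def bitrate_after(start_mbps: float, savings: list[float]) -> float:
    """Bitrate at constant subjective quality after applying each codec
    generation's fractional saving in turn (0.5 means 50% fewer bits)."""
    rate = start_mbps
    for saving in savings:
        if not 0.0 <= saving < 1.0:
            raise ValueError("each saving must be in [0, 1)")
        rate *= 1.0 - saving
    return rate


HEVC_4K_MBPS = 32.0  # hypothetical HEVC bitrate for one 4K channel

print("VVC at the 50% target:", bitrate_after(HEVC_4K_MBPS, [0.5]), "Mbps")
print("VVC at the 40% seen so far:", round(bitrate_after(HEVC_4K_MBPS, [0.4]), 1), "Mbps")
```

Because savings compound multiplicatively, two successive 50% generations leave a quarter of the original bitrate, not zero.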

HHI Using 3D Cameras for Medicine

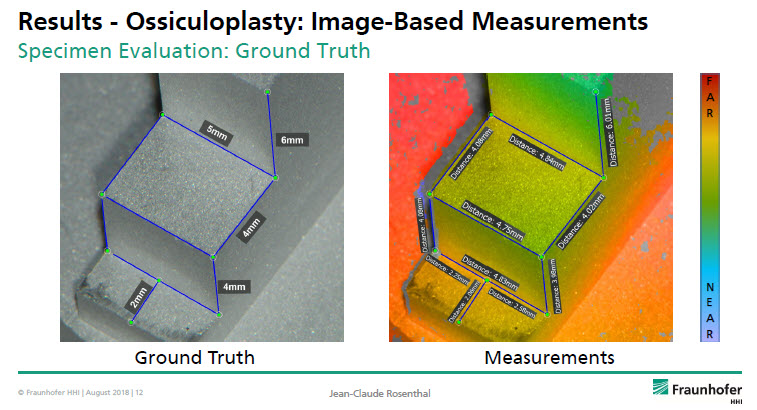

Jean-Claude Rosenthal of Fraunhofer HHI has been working with Arri on a project for 3D digital microscopy. From a stereo video signal, the system performs 3D quality control and depth estimation so that structures can be measured.

One target application is middle ear surgery, where accurate measurement, for example to choose the correct instrument, is essential in operations to restore hearing. Measurements calculated from the images were tested, and the system was found to be quite accurate, certainly accurate enough for that application. The group also developed a way to help surgeons cut very accurate replacement eardrums using technology ‘like a movie green screen’.
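HHI has not said how its depth estimation works, but the textbook relation for a calibrated, rectified stereo pair shows how depth, and then distances, follow from disparity: depth Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras and d the horizontal disparity. The sketch below assumes an ideal pinhole model, and all the rig parameters are hypothetical; a real surgical microscope needs careful calibration and far more robust disparity estimation.

```python
import math
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Idealised rectified stereo pair (pinhole model). All values hypothetical."""
    focal_px: float     # focal length in pixels, same for both cameras
    baseline_mm: float  # distance between the two optical centres
    cx: float           # principal point x, pixels
    cy: float           # principal point y, pixels

    def point_3d(self, u: float, v: float, disparity_px: float) -> tuple[float, float, float]:
        """Back-project left-image pixel (u, v) with the given disparity
        to camera coordinates in millimetres."""
        if disparity_px <= 0:
            raise ValueError("disparity must be positive")
        z = self.focal_px * self.baseline_mm / disparity_px
        x = (u - self.cx) * z / self.focal_px
        y = (v - self.cy) * z / self.focal_px
        return (x, y, z)


rig = StereoRig(focal_px=2000.0, baseline_mm=20.0, cx=960.0, cy=540.0)
a = rig.point_3d(960.0, 540.0, disparity_px=200.0)
b = rig.point_3d(1060.0, 540.0, disparity_px=200.0)
print(f"depth {a[2]:.1f} mm, separation {math.dist(a, b):.1f} mm")
```

With these made-up values the two points lie 200 mm from the cameras and 10 mm apart. Because depth is inversely proportional to disparity, a fixed one-pixel disparity error costs more depth accuracy the further away a point is.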

Fraunhofer HHI checked its image accuracy.

The group is also developing 3D panoramic imaging from multiple images, which means that surgeons can understand an individual’s anatomy without needing keyhole surgery to ‘look around’. A new company entering this market is Verb Surgical (which has Google behind it).

HHI is also working on some industrial applications for this technology.

Siemens Looks to AI for Spare Parts Identification

Dr Stefan Kluckner of Siemens is working on M3D, which focuses on applications including railway engineering. The group is also working on 3D additive manufacturing, and these days all of its computation is done in the cloud.

Spare part identification is one application, and another is potential additive manufacture of parts. With railway rolling stock often in service for up to 50 years, identifying spare parts can be a challenge. Siemens would like to be able to capture an image, process it in the cloud and then find a part number to allow quick repairs. The group is trying to use standard devices such as the iPhone 6 or other cameras for capture. 3D sensors on smartphones are useful, but the iPhone X has its 3D sensor pointing in the wrong direction! For now, the company is using external 3D cameras for development. Kluckner said that AI is an important part of the process. At the moment, the front end for the identification process runs on Android.
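Siemens did not describe its model, but one common way to turn a captured image into a part number is retrieval: a trained network (not shown) maps each image to a feature vector, and the query is matched against vectors stored for every catalogue part. The sketch below shows only that matching step, using cosine similarity. The part numbers, the three-dimensional vectors and the 0.9 threshold are all hypothetical, and a low-scoring match is handed back for a human expert to check.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify_part(query: list[float], catalogue: dict[str, list[float]],
                  min_similarity: float = 0.9) -> tuple[Optional[str], float]:
    """Return the best-matching part number, or None if nothing is close enough."""
    best_part: Optional[str] = None
    best_score = -1.0
    for part_number, embedding in catalogue.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_part, best_score = part_number, score
    if best_score < min_similarity:
        return None, best_score  # not confident: refer to a human expert
    return best_part, best_score


# Hypothetical catalogue of feature vectors for three spare parts.
CATALOGUE = {
    "PN-0001": [1.0, 0.0, 0.0],
    "PN-0002": [0.0, 1.0, 0.0],
    "PN-0003": [0.6, 0.8, 0.0],
}

print(identify_part([0.1, 0.99, 0.0], CATALOGUE))
```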

Turning to additive manufacturing, there are technical, legal and economic aspects to consider. A decision tree has to be followed for each part to see whether printing makes sense. If you get technical, legal and economic ‘green lights’, you can go ahead, but only with all three.
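The ‘all three green lights’ rule is a simple conjunction. The sketch below encodes it and also reports which checks failed. The field names and the example criteria in the comments are our own illustration, not Siemens’ actual decision tree, which presumably has more branches than this.

```python
from dataclasses import dataclass


@dataclass
class PrintAssessment:
    """Outcome of the three checks for one spare part (hypothetical structure)."""
    technical: bool  # e.g. can it be printed to the required strength and tolerance?
    legal: bool      # e.g. are rights and certification in place?
    economic: bool   # e.g. is printing cheaper or faster than sourcing the part?

    def failed_checks(self) -> list[str]:
        checks = {"technical": self.technical, "legal": self.legal, "economic": self.economic}
        return [name for name, passed in checks.items() if not passed]

    def may_print(self) -> bool:
        # Go ahead only when all three lights are green.
        return not self.failed_checks()


part = PrintAssessment(technical=True, legal=True, economic=False)
print(part.may_print(), part.failed_checks())
```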

The key challenge for parts identification is to use just RGB camera sensors, rather than depth cameras. The scale difference between the smallest and largest parts in a railway unit is a big challenge (the largest parts may have to be captured in RGB because they are so big).

So far, automated decision-making is not as good as human experts at deciding whether additive manufacturing is appropriate.

High Quality 360 deg Video – What is New?

Christian Weißig is the developer of the Omnicam, which we have reported on several times. There have been several productions using the Omnicam this year, including in buildings in Germany (including Munich) and Italy (Pompeii), as well as the capture of an operatic production: The Marriage of Figaro will be shown on the Arte 360 online platform. The Omnicam has 10K x 2K resolution plus a top camera.

The Omnicam group has worked with Deutsche Bahn to help with noise planning, and the technology was used to demonstrate what DB is doing to reduce noise from its developments. We reported on this back in 2015 (3IT7 And Onward to the TIME Machine…).

The Omnicam has also been developed to produce even higher, ‘Hollywood quality’ video using 14K x 2K cameras. Auto-stitching is not perfect, but it can be used for some content, with manual intervention for difficult content.
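A quick way to compare the two Omnicam variants is angular resolution, meaning horizontal pixels spread over the full 360° panorama. The sketch below takes ‘10K’ and ‘14K’ as 10,000 and 14,000 pixels, which is an approximation, and the 100° headset field of view is a hypothetical value used to show how many panorama pixels fall inside one view.

```python
def pixels_per_degree(horizontal_px: int, panorama_deg: float = 360.0) -> float:
    """Average horizontal pixels per degree of a 360-degree panorama."""
    return horizontal_px / panorama_deg


HEADSET_FOV_DEG = 100.0  # hypothetical horizontal field of view of a headset

for label, width_px in (("Omnicam 10K", 10_000), ("Omnicam 14K", 14_000)):
    ppd = pixels_per_degree(width_px)
    print(f"{label}: {ppd:.1f} px/deg, about {ppd * HEADSET_FOV_DEG:,.0f} px across the view")
```

On these assumptions the 14K rig delivers about 40% more horizontal detail in whichever part of the sphere the viewer is looking at.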

Christian Weißig, Fraunhofer HHI and Dr. Johannes Steurer, ARRI with moderator, Kathleen Schröter

VR & the Brain

Kathleen Schröter introduced Dr Michael Gaebler of the Mind Brain Body Group of the Max Planck Institute in Berlin, who talked about VR and the brain. A lot of work is going on that takes psychological issues and looks for the physical causes and factors that may lie behind them. There are more details at vreha-project.com. The project is government-funded by the BMBF (Bundesministerium für Bildung und Forschung, the Federal Ministry of Education and Research).

Dr Michael Gaebler is from the Mind Brain Body Group of the Max Planck Institute. Image: Meko

The project looks at ‘classical’ diagnostics and asks whether VR can help to diagnose cognitive impairments. For example, after a stroke you can often diagnose motor impairment relatively simply by watching the patient walk. Cognitive impairments, on the other hand, are harder to diagnose. Gaebler gave the example of the Rey-Osterrieth Complex Figure Test, in which the patient has to reproduce a previously seen pattern from memory with paper and pencil. Comparing the original with the figure redrawn from memory can help to point to issues. The group is trying to see whether VR can help instead, as it may be more repeatable.
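To make the comparison concrete: in the standard paper-and-pencil version, the drawing is usually scored against 18 elements of the figure, each worth up to 2 points (36 in total), with credit reduced for distortion or poor placement. The sketch below encodes that common scoring scheme. It is not the group’s VR method; the judgement of whether each element is correct and well placed, which is the subjective step a VR version might make more repeatable, is simply passed in as input.

```python
from enum import Enum


class Drawn(Enum):
    CORRECT = "correct"
    DISTORTED = "distorted or incomplete"
    MISSING = "absent or unrecognisable"


def element_score(drawn: Drawn, well_placed: bool) -> float:
    """Points for one of the 18 figure elements under the usual 0/0.5/1/2 scheme."""
    if drawn is Drawn.MISSING:
        return 0.0
    if drawn is Drawn.CORRECT:
        return 2.0 if well_placed else 1.0
    return 1.0 if well_placed else 0.5  # distorted or incomplete but recognisable


def figure_score(elements: list[tuple[Drawn, bool]]) -> float:
    """Total score out of 36 for a reproduction judged element by element."""
    if len(elements) != 18:
        raise ValueError("expected judgements for all 18 elements")
    return sum(element_score(drawn, placed) for drawn, placed in elements)


# Hypothetical recall drawing: 15 elements correct, 2 distorted and misplaced, 1 missing.
recall = [(Drawn.CORRECT, True)] * 15 + [(Drawn.DISTORTED, False)] * 2 + [(Drawn.MISSING, False)]
print(figure_score(recall))
```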

The development process is a loop: test, prototype, test, modify.

Alzheimer’s is also initially seen as a psychological problem. The group took a commonly used test and recreated it as a simulation using a commercial headset with Leap Motion and Kinect. In the test, the patient sees an environment of objects, for example on a surface, and is then asked to reconstruct it; the time taken to do this is a good indicator of the progress of the condition. The research is still at an early stage.

The rubber hand illusion shows it is relatively easy to fool the brain about body parts.

There is also a ‘VR ghost’ project. In most VR, if you look down you don’t see yourself (commercial applications often use avatars), but proper immersion needs real bodily interaction. Gaebler explained the ‘uncanny valley’ effect, where things that are very close to reality, but not quite there, make humans very uncomfortable. This shows that immersion is not just about visual realism: you need to be on one side of the valley or the other. He also described the ‘rubber hand illusion’, in which researchers stroke a subject’s hand, hidden from the subject’s view, while at the same time stroking a rubber hand placed in a plausible position for the real hand. If the researchers then threaten the rubber hand, the subject feels that it is their own and may react quite strongly. The effect was only discovered in 1998 and there is still a lot to be learned.

Another project is looking at whether VR can be useful for neuroscience research. Experiments with MRI are very difficult because no movement is possible during a scan, so MRI can’t be used to study the effect of VR. The group is now using ECGs instead. Experimental control can be difficult, and laboratory stimuli are often very simplistic, whereas VR can provide more complex stimuli. The hope is that brain signals could be correlated with reported feelings, in which case VR could really help researchers to better understand responses to stimuli.
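Correlating a physiological measure with what participants report is, at its simplest, a correlation across trials. The sketch below computes Pearson’s r between a per-trial signal feature and a self-reported rating. The values are invented for illustration, and real analyses of this kind need far more trials and care over confounds such as movement artefacts.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: one signal feature value and one arousal rating (1-9) per VR trial.
signal_feature = [0.8, 1.1, 1.9, 2.4, 3.0, 3.2]
reported_arousal = [2, 3, 5, 6, 7, 9]
print(f"r = {pearson_r(signal_feature, reported_arousal):.2f}")
```

A value of r near 1 would mean the signal rises with reported arousal across trials; it says nothing on its own about cause.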

Panel Session

Schröter then ran a panel session in which each of the speakers introduced themselves and their companies.

Volucap is a new company at Studio Babelsberg for volumetric video capture, based on early work on volumetric capture at 3IT. Sven Bliedung of the company said that it is related to HHI, Studio Babelsberg and Arri. The lighting system is from Arri and is said to be able to mimic ‘any light situation’. 3IT has 120 light panels in its prototype system, but Volucap has a ‘much more complex’ set-up. The studio is a very good location for the facility, as it can provide important services such as make-up and costumes.

Bliedung said that capture has to be very good to look natural. He believes it is the only studio in Europe able to do volumetric capture (although there are rumours of another in Grenoble). There are two other studios in the US that use Microsoft software. Microsoft has a whole system for showing Mixed Reality, but there is little or no content, so the company is now trying to help make content available.

Sönke Kirchhof is from reallifefilm international/INVR.Space, which works on content as well as technology. How do you tell stories with the latest technology? That is the biggest question, Kirchhof said, and if you can’t answer it, nobody will care. How do you immerse an audience?

Nico Nonne is from Trotzkind, which focuses on computation and technology, for example combining ‘body parts’ from different takes and building realistic full characters that users can then interact with. The firm is currently developing a three-minute experience with reallifefilm.

All the companies are trying to develop a standard workflow. At the moment it is quite complex and the aim is to make it simpler.

Der Moench am Meer

Georg Tschurtschentaler is from gebrueder beetz filmproduktion, and he said that it is really about content and distribution. His firm has been running a project for a three-part VR series about art masterpieces, so that viewers can really immerse themselves in the emotion behind a painting. One project is based on a famous German artwork, ‘Der Moench am Meer’ (The Monk by the Sea). You can’t see the face of the monk, so viewers can use their imagination. The company built a story around the discovery of a lot of underpainting beneath the picture during a recent two-year restoration. The group shot video of a monk using volumetric capture. It was not clear how viewers should interact, and the project raised many questions about how you move and relate to a volumetric character. The firm has been working on a ‘room scale’ installation for users to interact with, after which they can go and look at the painting.

Another project, in Norway, has been developed around “The Scream” by Edvard Munch, and in France another around Monet’s “Water Lilies”. The production has been financed by Arte and the Norwegian and French authorities. French funding is better, and France is more advanced in this kind of field. One question being asked is what museums have to do to attract digital natives. Asked whether it is hard to find money for “VR and volumetric”, Tschurtschentaler said that the productions that win Emmy awards all have big budgets. There is some money in the arts, and branded content can be interesting for financing development.

Trotzkind has developed a VR-based escape room, which generates revenue as people pay for the experience as a form of location-based entertainment (LBE). Kirchhof said that there is definitely not enough money for creative content at the moment, although some more serious content is being developed. VR hardware makers should support content makers to help the market develop, but they don’t, so content makers have to find new ways to generate revenue from LBE or other alternatives.

Volucap is said to be working with Microsoft, and it is working with a lot of companies in this area. Partners are always needed, and collaboration always helps.

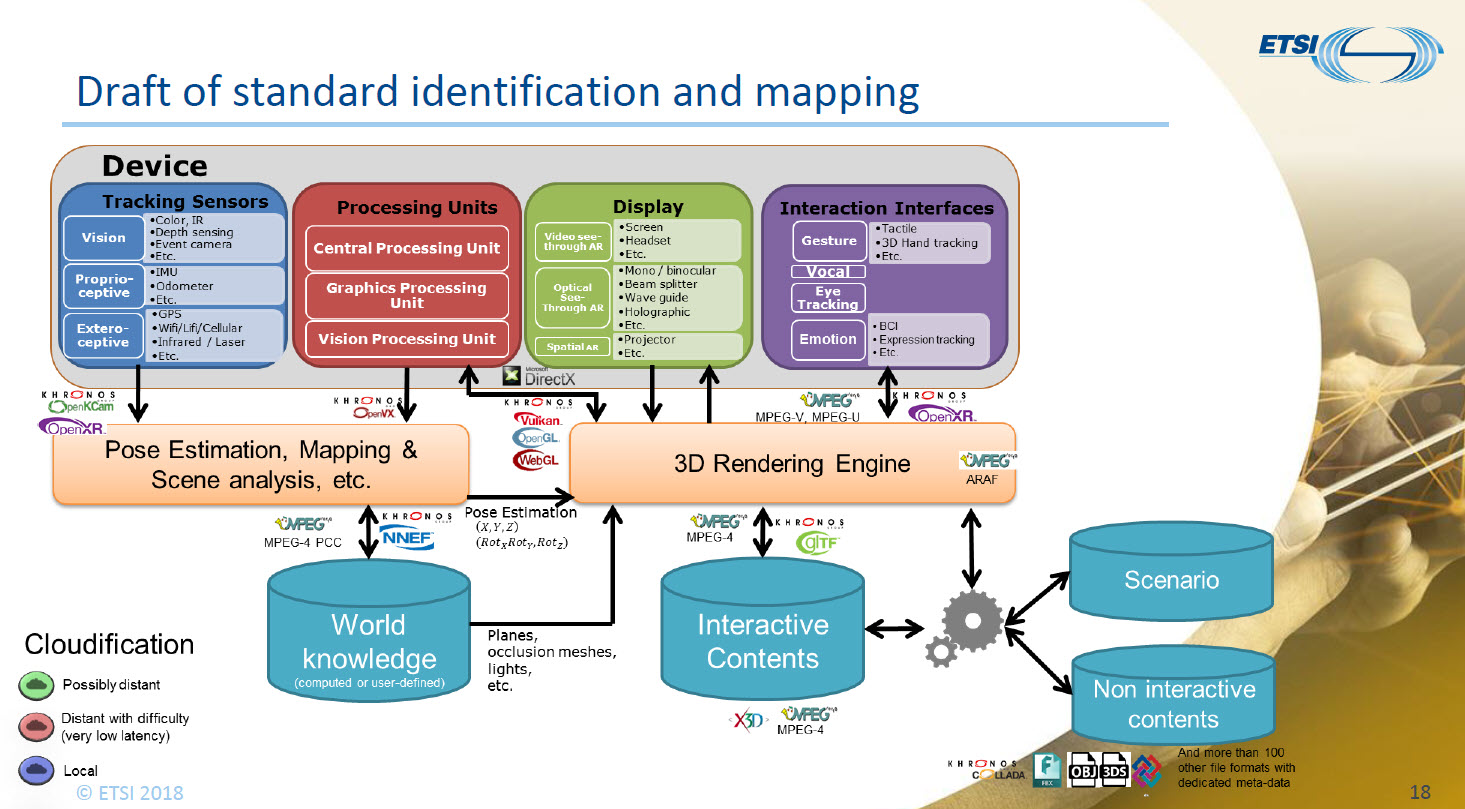

Ralf Schaefer, Director of the Video Division at Fraunhofer Heinrich Hertz Institute (HHI), talked about the ETSI Industry Specification Group on Augmented Reality Framework (ISG ARF).

AR is a promising opportunity, with forecasts of $80 billion by 2021. Vertical and industrial markets are developing, but there is a danger that just a few companies could dominate, so the ETSI group, created in November 2017, is trying to develop standardised interfaces to allow open development. There was a kick-off meeting with partners in December, and there were 16 members by May this year. There are German and French members, but so far none of the huge companies such as Google and Microsoft; the founders are working hard to get them more involved.

The first workshop was in Berlin in February, followed by one in Paris in May. Topics discussed were:

- AR standards landscape
- AR industrial use cases
- AR framework architecture, to identify gaps in the standards needed

The group has already identified a number of bodies working on standards, but nobody seems to be looking at the overall picture. To understand AR use cases, the group surveyed around 60 companies in English, German and French, asking about their projects and applications. The results are in and the project is now at the analysis stage.

AR VR Standards have been identified.

The framework has been shared with b<>com, a French research institute based in Rennes. Schaefer stressed that although a lot of interested parties are working on different parts of the standards landscape, nobody else is looking at the ‘big picture’.

The group is looking for companies to get involved, and those interested should contact Schaefer. He said that it is not expensive and it is easy to get involved.

Demonstrations

There were a number of demonstrations at the event:

- Vive prototype with “the monk by the sea”
- Oculus Go with project examples
- Autonomous driving with a model car, using a 22nm chip from GlobalFoundries
- Ipsos, the market research company, ran a car clinic in Hamburg. It showed new car concepts using VR headsets as well as real cars, and when it compared the results it found that they were almost the same, so the need to provide physical prototypes can be avoided.