A recently published research report, “3D in Education: South-east London Trial”, by Professor Anne Bamford of the University of the Arts London, provides some insightful action research findings on 3D in education, findings that are perhaps relevant to both the recent virtual reality push and the display industry in general.

Background

This study showcased the findings of a science education trial using stereo 3D in thirteen Greenwich schools in the Southwark Diocese (UK). Sensavis, XMA and hardware partners (including LG and HP) collaborated on this project, which “emerged from the earlier LiFE project (Learning in Future Education) that examined the impact of 3D learning in eight European country pilot studies.” (The earlier LiFE† research demonstrated “marked impact of 3D imagery on improving student understanding, retention, attention and recollection, resulting in enhanced academic achievement.”) In this most recent effort, teachers and students worked alongside designers and programmers to develop content that made it easier to teach difficult topics in the science curriculum.

Although small in size (the concluding survey was completed by 192 pupils and 10 teachers), the trial included ten primary schools and three secondary schools. According to the report, three of the schools were rated as being ‘outstanding’, nine of the schools were rated as being ‘good’, and one school was rated as needing improvement. Greenwich is a diverse and densely populated community, with most schools serving a majority of pupils from non-English-speaking backgrounds and low-income families.

Procedures

In this study, 3D visualization was used by teachers for classroom instruction, with the equipment located in differing settings within each school: science rooms, ICT suites, the school hall, classrooms, library, special needs intervention rooms and/or maths classrooms. In addition, eight schools had selected a mobile solution for their use. (Still, teachers preferred using fixed equipment in the classroom, thus keeping setup time to a minimum.)

A post-study survey was administered, with responses coming from 49% girls and 51% boys; respondents were between 9 and 13 years of age, almost evenly split. Just under two-thirds of the pupils were in primary schools, with the remainder enrolled in secondary schools.

Findings

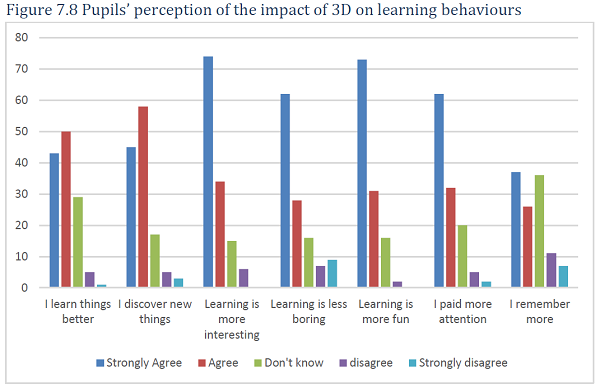

The findings coming out of this study are both dichotomous and incongruous. “The pupils were very positive towards 3D learning [see Figure 7.8 below] especially the younger pupils. They felt it helped their learning and they wanted it in more subjects. Over 72% of pupils wanted 3D learning at least once per day or more often than that.”

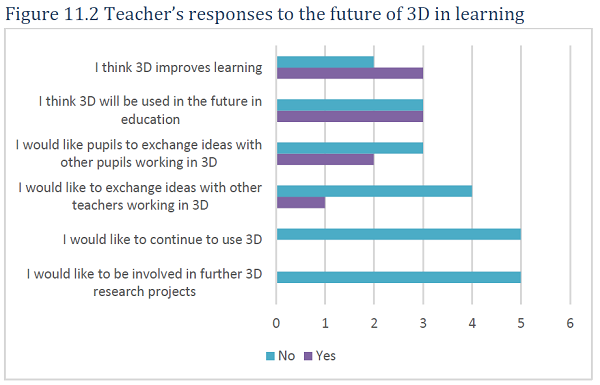

Teachers, not so much. The author states, “it would appear that the enthusiasm of the pupils was not equally shared by their teachers.” Bamford goes on to explain: “By contrast, while the teachers acknowledged the benefit of 3D to the pupils’ learning, they did not show an interest to continue nor did they provide enough time using the 3D to be able to claim or evidence any sort of clear impact on pupil attainment. The teachers acknowledged that 3D was likely to be used in the future of education but none of the teachers showed an interest to continue to use 3D or be a part of future research into 3D and learning (Figure 11.2).”

The lack of teacher interest in 3D also showed up in the schools’ willingness to pay: “The amount of funds the schools were also prepared to invest in 3D reflected the low level of interest from the teachers. There were no schools prepared to invest more than £2000. Just over half (58%) would pay between £1,000-£1999 while the remainder of schools would only pay less than £999.”

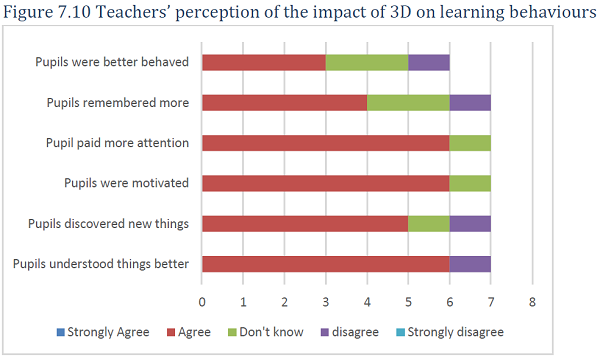

Still, not all the news coming from teachers was negative. Teachers’ perceptions of the effect of 3D on student learning behaviors trended rather positively (see Figure 7.10). And teachers made some notable comments about the impact and value of 3D learning for students:

“We have noticed that the pupils ask more questions”.

“The dynamics of the class changed dramatically – there was a lot of discussion and excitement”.

“When we showed the 3D to the pupils, they were very interested”.

Study Conclusions

The report concludes: “It is difficult to determine from the results of this study the likely direction for 3D in the future, other than to more directly appeal to pupils.” The study goes on to suggest in underwhelming wording: “Clearly, no piece of technology alone will answer every educational challenge, but education has been comparatively slow in looking to the potential of resources and ‘aides’ to forge the monumental leaps many learners, teachers, schools and educational systems need to make.” As is typical of most studies, more research is then suggested.

Analysis and Implications

This study offers a number of implications for properly conducting educational case studies for 3D and VR specifically, and the display industry in general. I have personally led the largest and most successful pilot project of 3D in the U.S. (the Boulder Valley 3D Project), involving thousands of students. In my opinion, this study represents a relatively small footprint in the overall research. My bottom-line analysis: these trials were a case study in poor study design and poor planning for piloting school trials. In my mind, these findings are less of an indictment of the technology, and more an indictment of weak study design, weak project implementation, and weak leadership. (Incidentally, I teach workshops on how to better frame successful case studies. Also, see my recent article, “When Teachers Give Products Failing Marks”, for some insight on why teachers can be this way, and how your organization can prevent this from happening.)

Here are some of my key concerns with the methodology, design, and piloting strategies found in the South-east London Trials:

Failure to normalize frequency of use. One of the problems with this study was that frequency of usage was not truly normalized or standardized from the outset. Expectations were indeed set in terms of frequency of 3D-based instruction the children would receive (at least three times per week), but these expectations were clearly not followed. The study reports: “A major problem identified by the pupils was that the teachers did not use enough 3D in the lessons (not enough in terms of the frequency).” These student comments reveal the inside story:

We only used the 3D TV once

We don’t use the 3D TV enough

We never use 3D TV

The teachers could let us use it more

Lack of commitment. Basically, most of these data are invalidated by an uncommitted staff, resulting in both diminished n and reduced treatment. Indeed, the report author complains teachers did not “provide enough time using the 3D to be able to claim or evidence any sort of clear impact on pupil attainment.” No surprise there. Moreover, the results of this study are inconsistent with nearly all previous studies, in which teachers typically demonstrate high levels of sustainable motivation and commitment. This may have been the result of overextending already overworked teachers; or perhaps administrators, parents, or community members simply foisted an unwanted or ill-timed initiative on these schools.

Tuning out school culture. Many technologies are undoubtedly exciting to students. Yet as technology is introduced in schools, we can never tune out school culture, for whatever reason.

Using feeble methodology. Although they help tell part of the story, student and teacher post-implementation surveys are the weakest form of research; they are particularly susceptible to inept planning and design. If you are planning on producing data through your case study, use stronger data points, such as unit test scores, homework turn-in rates, performance improvement over time, or reduced failure rates, to name a few.

Misreading solutions. The study gingerly offers the following recommendation: “Perhaps the 3D developers could explore ways to more directly appeal to the pupils who may adopt the technology outside of school, if teachers do not support it in school.” I think they missed the point. Bypassing teachers and administrators is a recipe for disaster. Was this truly fertile ground for a pilot study, or just the virtual playground of some interested parents?

It is easy to waste a lot of time and resources on ill-conceived, hastily designed efforts like this. Instead, see my recommendations for dealing with educational pilots in my previous article. –Len Scrogan

† Bamford, Anne (2011) “LiFE: Learning in Future Education. Evaluation of Innovations in Emerging Learning Technologies”