There are three fundamental problems with making headsets for AR/VR/MR. First, which of the three is it? Second, it’s never about what you see, but how you see it. Third, you have to have a hook, it has to be a game, and it has to be good. Meta’s got none of these.

| Product | Original Price | New Price | Effective Date |
| --- | --- | --- | --- |
| Meta Quest 2 (128GB) | $399.99 | No change | |
| Meta Quest 2 (256GB) | $499.99 | $429.99 | March 5th, 2023 |
| Meta Quest Pro | $1,499.99 | $999.99 | March 5th, 2023 (US, CA); March 15th, 2023 (other supported countries) |
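
For a sense of scale, here is a quick sketch of what those cuts work out to as percentages. The prices come straight from the table; `percent_drop` is just an illustrative helper name, not anything Meta publishes.

```python
# Prices in USD, copied from the table above: (original, new).
prices = {
    "Meta Quest 2 (256GB)": (499.99, 429.99),
    "Meta Quest Pro": (1499.99, 999.99),
}


def percent_drop(old: float, new: float) -> float:
    """Return the price cut as a percentage of the original price."""
    return (old - new) / old * 100


for product, (old, new) in prices.items():
    print(f"{product}: {percent_drop(old, new):.1f}% off")
```

The Quest Pro cut is about a third of the original price, while the 256GB Quest 2 cut is closer to a seventh.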

Let me start by saying that the discussion here is about VR headsets. I am also assuming that some of these headsets have a mechanism for making the real world visible within the virtual world, which turns them into AR headsets. That fits Meta’s issues pretty well, because it seems as if about 80% of headset technology is about figuring out how to accommodate human vision, and almost every device in production or in development falls short of its goals in doing so. Sure, any true headset enthusiast believes nothing is good enough. That’s what makes it fun to chase the elusive perfect product. Headset aficionados are excited by the prospect of Meta, Sony, and Apple pushing products to lots of people because it gives them a larger sample of the population to test their theories on.

Yup, I think most headset lovers and the people who make them hope there will be way more guinea pigs testing headsets and providing valuable data on their vision as it is tracked and manipulated to experience the virtual world. That would be a great way to get to the great product that depends so heavily on adapting to every eye. In other words, headsets are going to mess with your eyes, but if everybody would just use them and tell the developers how they mess with their eyes, the developers can do better next time. That will be $399 to $3,000, please.

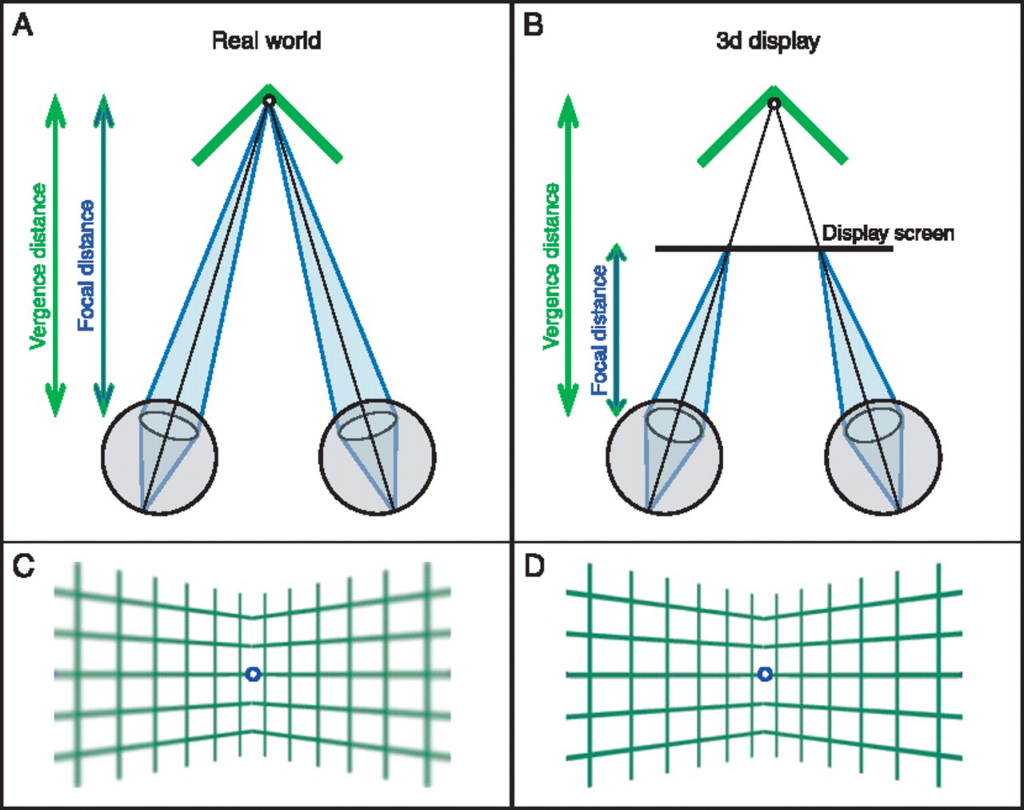

Nothing about the display? I mean, we love to talk about displays and figure out the specifications (what is the resolution per eye, the refresh rate, etc.), but there’s only so much you can spend on the bill of materials (BoM), and the display technology comes after the eye technology. It’s the lenses and eye tracking that need the bulk of the budget. It boils down to one simple problem: the real world gives our eyes lots of cues to help us appreciate depth and perspective. We’ve been doing it for centuries and are pretty good at it in the real world. The digital display is flat and has to fake it, which means the machinery of any 3D display has to poke your eyes to force them to think that they are seeing something in 3D. It’s crazy, but it’s true. Never has so much effort and money gone into figuring out how to keep up with your eye movements.

Crazy Eyes, Displays, and Biological Rabbit Holes

We all have crazy eyes. It happens when your favorite soccer team is being crushed 7-0 while you frantically look for missing defenders on your 75-inch TV. Our eyes do a lot of fidgeting. We even have different classifications for eye movement. You have fixation, which is a nice easy one to build around because it just means that you have the ability to focus on a stationary object and give it all your attention. It’s creepy in a crowded public space, but it helps us to perceive objects in detail and to focus our attention.

There’s pursuit, where the eye moves smoothly as it tracks an object, like following a ball as it hits the back of the net for the 7th time while you are emotionally spent. There are saccades, the rapid eye movements that let us shift our focus from one object to another, explore a scene quickly, and, most notably, follow the words on a page, or screen, as we read. Then, there’s vergence. You hear about the vergence-accommodation conflict around headsets all the time.

Vergence is the movement of your eyes in opposite directions, either towards each other (convergence) or away from each other (divergence). It is important for maintaining binocular vision and is why we see the world as it is, in 3D, with depth. When we look at an object that is close to us, our eyes converge so that the object lands on the center of both retinas. When we look at an object far away, our eyes diverge to maintain binocular vision. Accommodation is the other half of the pair: the lens in each eye changes shape to bring whatever you are looking at into focus. One of the reasons why headsets can create eye fatigue is that the display is a flat 2D surface at a fixed distance from your eyes. Your eyes have to stay focused at that fixed distance while they converge on virtual objects at different virtual depths within the scene, and it’s hard because it is not natural. In the real world, you just focus on a point in space and the rest of the space around it falls into place nicely, blurred by just the right amount to give us the sense of depth that it has.
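
To put rough numbers on that mismatch, here is a minimal sketch of the textbook geometry. It assumes an interpupillary distance of 63 mm and headset optics that hold focus at a fixed 1.5 m; both figures are illustrative assumptions, not the specs of any particular headset, and the function names are made up for this example.

```python
import math

IPD_M = 0.063    # assumed interpupillary distance: 63 mm
FOCAL_M = 1.5    # assumed fixed focal distance of the headset optics


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m, for pupils ipd_m apart."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def focus_mismatch_diopters(virtual_m: float, focal_m: float) -> float:
    """Gap between where the eyes converge (the virtual depth) and where
    they must focus (the fixed optical distance), in diopters (1/m)."""
    return abs(1 / virtual_m - 1 / focal_m)


for depth_m in (0.3, 0.5, 1.5, 10.0):
    angle = vergence_angle_deg(IPD_M, depth_m)
    gap = focus_mismatch_diopters(depth_m, FOCAL_M)
    print(f"virtual depth {depth_m:>4} m: vergence {angle:5.2f} deg, "
          f"focus mismatch {gap:4.2f} D")
```

Under these assumptions the mismatch is zero only when the virtual object sits at the optics' focal distance, and it grows quickly as virtual objects come closer to the face.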

Then, there’s motion sickness from being stationary while your eyes are experiencing a roller coaster movement. You can literally make yourself dizzy or sick just by standing on a chair and moving your head around. Eye fatigue, that’s a common problem with all digital displays, and in headsets, it is compounded by watching a display with poor image quality, blurred motion, and flicker just millimeters from your eyes. Last but not least, wearing and using a headset for any length of time sucks. There are more scientific terms, I am sure, but the correct term is, it sucks. It sucks to be stuck in a virtual tomb around your head for any length of time. For some people, it is about a minute, for others, not that much more.

Measuring Users for Headsets

If you’re a headset engineer, your blood should be at boiling point right about now. I have just laid out a very simple overview of the challenges of relying on the human eye to make your headsets successful, but you relish the opportunity to solve these problems. These are not blockers for you. They are obstacles to be surmounted. Yes, but you don’t make products to solve technical problems, you make products to serve a user’s need.

And what is that need, precisely? I mean, what is the need that Meta is meeting with its headsets? I know what Sony is doing with the PSVR2: they have a sequel to a gaming product that did well enough to warrant a sequel. I have no idea what need Apple is addressing, but I have a sneaking suspicion that it will be targeted at a specific type of creative and not the mass market. So, stick that in your rumor mill and smoke it, Apple stans. Meta makes no sense because everything it produces falls short of being a gaming device, or a professional device, or any demographic’s device. No one would even be talking about Meta if they hadn’t dropped $40 billion to get to a price drop of a third on their flagship product. I am going to assume that Meta wants to reach all of its billions of monthly active users. Great idea. They want all them eyeballs. Is Meta doing enough to get them? Probably not, because they have to make compromises that don’t have anything to do with the user. For the user, every headset has to start with a friendly eye-to-eye meet and greet.

A headset needs to be able to measure the interpupillary distance (IPD) of every user so it can adjust its lenses to be at exactly the right place for a user’s pupils. There are digital IPD rulers and there are very sophisticated optometry devices that do this, and they can cost anywhere from a few dollars for an app to several thousand dollars in a doctor’s office.

Any headset worth its investment needs eye tracking to adjust its output to the user’s eye movement and to address the vergence-accommodation conflict. Now you’re getting into several hundred to several thousand dollars in terms of equipment, and I am going to take a wild guess and say that not a single commercial headset product is doing this at the level of accuracy that would make it a perfect match.

For the display guys, there’s an absolute need for high refresh rate, low latency, motion-tracked displays with no tearing, artifacts, or blur. People can get sick from those in a pretty short space of time. Literally get sick, as we said before. The sky’s the limit when it comes to display costs because headsets are displays, yes? No. They are not display devices.

Granted, if you make displays, and you pretty much know that you can never take any business from Samsung, you are going to want to get into the headset business because your microLEDs, your nanoLEDs, your really cool tech, can make a difference here. You have a shot at this market that you don’t necessarily have playing in the big pond of display manufacturing. But you have to take your seat and wait for the engineers to figure out who gets what from the BoM pie because, I think we said this before, a headset is not a display.

For our dear headset engineering friends, this is a great opportunity to show their chops by making just the right trade-offs to create the right headset. Except, they don’t actually know who it is right for. Is it right for gen pop, and their panoply of eye issues, something that has made the prescription glasses market worth $200 billion today? Is it right for the professional market, which would gladly pay several thousand dollars for a headset if you could prove two things: return on investment (ROI) in terms of productivity or profitability, and zero liability, because no one wants to be on the hook for their workers’ wellbeing? Neither of these points is documented to any degree that is convincing or meaningful enough to define the right headset. That’s why we always get a return to games or virtual worlds or something trite.

Every Headset an Eye Doctor

Herein lies the point of this screed. Headsets would do better to become walking ophthalmology centers than try to do the two other things they actually think they’re there to do: create immersive interactive environments and/or superimpose a virtual world onto the real one. Meta is dropping prices because its headsets made compromises that seemed perfectly legitimate to a multi-billion dollar engineering company but that have absolutely no relationship to product-market fit (I knew I would get around to using my opening gambit eventually).

More importantly, it’s not just Meta’s issue. The company is just too drunk on its own Kool-Aid to notice that it is a universal problem for all headsets, faced, as they are, with the biology of human vision. It is something that we have known about in the context of 3D display technology for decades. Let me reiterate that: something that we have known about for tens of years.

If you have had an eye test in the last 10 years, or if you wear corrective lenses today, you know exactly how much effort and cost go into something that is merely one fraction of the BoM of a headset. And yes, your typical prescription lenses would never cut it in a headset, but even so, you can have the best eye care professionals around you and still end up with a pair of glasses that don’t quite work right. Hundreds of dollars’ worth of corrective lenses that just help your eyes out, compared to what? Tens of dollars that try to accommodate your eyes in a headset? Headset builders are scaling ophthalmology down to price their products competitively. Makes no sense.

A headset is not a display. The display is actually the easiest part of the solution to spec out. The main focus of a headset is forcing a user into an unnatural environment and trying to make them think it is natural, using the power of a LensCrafters staffed by two squirrels and an Arduino laptop. That doesn’t sound like any damn product-market fit analysis to me.

References

If you want to pursue further reading on visual perception, computer graphics, and 3D displays, you may want to look at the work of Andrew J Woods. There are also a number of papers from a computer graphics god, Kurt Akeley, written while he was doing a Ph.D. after he had co-founded Silicon Graphics, and from Martin S Banks, professor of optometry, vision science, neuroscience, and psychology at UC Berkeley.

You will go back to 1993 through the late aughts, but it just goes to show that the problems of headsets have not changed because human biology has not changed. We just have no way of solving those problems for everyone. No amount of money has been able to change that fact.