This year’s Display Summit event took place with support from Rockwell Collins. Most of the content was about big displays, but this session covered mobile displays.

This session focused on the use of AR/VR/MR/HUD for professional applications. Burt Muller of Radiant Vision Systems used his time to provide an overview of light, color and metrology. Radiant designs its instrumentation to replicate human visual perception in its measurements.

One instrument described was the tristimulus filter wheel system that is designed to match the response of the human eye.
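A tristimulus system of this kind typically captures one exposure through each of three filters approximating the CIE X, Y and Z color-matching functions. The sketch below illustrates the idea and is not Radiant's software. It shows how such readings are usually reduced to luminance (the Y value) and CIE 1931 chromaticity. The readings are hypothetical values chosen near the D65 white point.

```python
def chromaticity(X: float, Y: float, Z: float) -> tuple[float, float]:
    """Convert CIE XYZ tristimulus values to CIE 1931 (x, y) chromaticity."""
    total = X + Y + Z
    if total <= 0:
        raise ValueError("tristimulus values must sum to a positive number")
    return X / total, Y / total


# Hypothetical readings from three exposures, one per X/Y/Z-matched filter.
# With an absolute calibration, Y is the luminance in cd/m^2.
X, Y, Z = 95.05, 100.0, 108.88  # roughly the D65 white point, for illustration
x, y = chromaticity(X, Y, Z)
print(f"luminance = {Y:.1f} cd/m^2, x = {x:.4f}, y = {y:.4f}")
```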

Muller also talked about contrast measurement, resolution measurement and a number of other common display tests like uniformity, mura, JND, particle defects and more. He also described how HUD and near-to-eye metrology can be done with their instruments.
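Contrast and uniformity are the simplest of these tests to express numerically. The sketch below uses one common pair of definitions: full-on/full-off contrast, and min/max luminance uniformity over a grid of points. Measurement standards differ in the exact test patterns and sample locations, and the readings here are hypothetical.

```python
def contrast_ratio(white_cd_m2: float, black_cd_m2: float) -> float:
    """Full-on/full-off contrast: white-field luminance divided by black-field luminance."""
    if black_cd_m2 <= 0:
        raise ValueError("black luminance must be positive")
    return white_cd_m2 / black_cd_m2


def uniformity(samples_cd_m2: list[float]) -> float:
    """One common uniformity metric: minimum over maximum luminance, as a percentage."""
    return 100.0 * min(samples_cd_m2) / max(samples_cd_m2)


# Hypothetical luminance readings (cd/m^2) at nine points on a white field.
grid = [248.0, 252.0, 246.0, 255.0, 260.0, 251.0, 243.0, 249.0, 245.0]
print(f"contrast = {contrast_ratio(260.0, 0.26):.0f}:1")
print(f"uniformity = {uniformity(grid):.1f}%")
```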

Mats Johansson from EON Reality noted that his company focuses on using AR/VR for enterprise training applications. He said that numerous studies have shown that AR improves learning, but this success is driven by good content and a good application. For example, he claimed that service technicians trained with AR can learn 12x faster with a cost savings of 92%. Similarly, operator training with AR can be performed 2.7x faster with a cost savings of 63%.

Johansson introduced an interesting concept he called “bandwidth”, which he defined as “the speed of the connection between your brain and the digital version of yourself.” Today, that connection takes about 20 seconds, but he expects it to drop to 8-10 seconds by 2020 and 2-3 seconds by 2027, and to become instantaneous sometime after 2037.

He then broke the industry knowledge transfer problem into several categories: cost, risk, complexity, quality and difficulty of access. In terms of hardware platforms, he sees three categories: smartphone VR with gyro orientation tracking (GearVR); standalone AR with inside-out tracking (HoloLens); and tethered VR with outside-in tracking (Vive). For positional tracking, one can use fiducials or images, or the more sophisticated spatial mapping, VPS or SLAM approaches.

Johansson said EON Reality is hardware agnostic and has established training centers to help its customers. He then described a number of applications where EON Reality has provided solutions, such as a virtual trainer for fast knowledge transfer, AR-assisted training fueled by AI and IoT data, and the Creator AVR fast knowledge builder. The graphic below summarizes how they see their company fitting into this complex ecosystem.

Yuval Boger from Sensics talked about how to future-proof AR/MR system solutions. With hardware and software in this area changing so quickly, how do end users find a way to implement upgrades without constantly changing out hardware and software every year or two?

He said the historical way to develop AR/VR applications in the training and simulation space is to build everything internally, relying on peripherals supported by the provider of the image generator database being used. But the Open Source Virtual Reality (OSVR) software platform offers an alternative approach that uses a common interface and a set of libraries to assist in high-performance VR rendering. There are already hundreds of companies participating in this ecosystem, claimed Boger.

The graphic below summarizes the concept. The OSVR application layer sits between the hardware platforms and the application platform to simplify interoperability. This is a big deal because, without this layer, applications need to be written for specific hardware implementations.

OSVR is middleware that sits between the device and the application. It does require additional development to support this middleware, but it allows the application to be written once and played on a number of OSVR-compliant platforms.
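The middleware idea is the classic hardware-abstraction pattern: the application talks to one device-neutral interface, and per-device plugins translate each vendor's native data into it. The Python sketch below illustrates only that pattern. `TrackerDevice`, `Pose` and the two headset adapters are hypothetical names invented for illustration, not part of the actual OSVR API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Pose:
    """Head orientation as a unit quaternion (w, x, y, z)."""
    w: float
    x: float
    y: float
    z: float


class TrackerDevice(ABC):
    """Hypothetical device-neutral interface that the application codes against."""

    @abstractmethod
    def read_pose(self) -> Pose: ...


class VendorAHeadset(TrackerDevice):
    """Hypothetical adapter; a real one would wrap vendor A's SDK. Returns a fixed pose."""

    def read_pose(self) -> Pose:
        return Pose(1.0, 0.0, 0.0, 0.0)


class VendorBHeadset(TrackerDevice):
    """Hypothetical adapter for a vendor whose native quaternion order is (x, y, z, w)."""

    def __init__(self) -> None:
        self._native = (0.0, 0.7071, 0.0, 0.7071)

    def read_pose(self) -> Pose:
        x, y, z, w = self._native
        return Pose(w, x, y, z)  # the adapter converts to the common layout


def render_frame(device: TrackerDevice) -> str:
    """Application code: written once, unaware of which headset is attached."""
    p = device.read_pose()
    return f"render with orientation w={p.w:.3f}, y={p.y:.3f}"


for dev in (VendorAHeadset(), VendorBHeadset()):
    print(render_frame(dev))
```

The cost Boger mentions shows up here as the adapter classes: someone has to write and maintain one per device, but the application code never changes.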

In development is a move to migrate the OSVR middleware to an OpenXR platform. Boger says they are currently working with Khronos to standardize this approach by mid-2018.

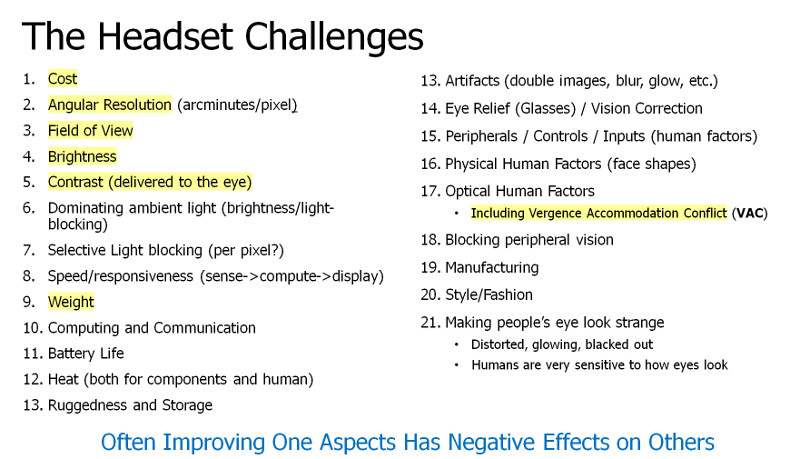

Karl Guttag from KGOnTech spoke next about some of the design trade-offs with AR and VR optical systems. He summarized 21 different challenges in headset design, but only focused on a handful in his presentation.

Ultimately, he believes we will need 7.2K x 6K resolution per eye to offer 1 arcminute of angular resolution over a 120º field of view, so there is obviously a big pixel gap today. To get high angular resolution with a big display, it needs to be far away from the eyes, which is not the direction you want to go from an ergonomic point of view. If you use a microdisplay, the display needs to be close to the eye with large and expensive optics, which is not very attractive either.
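The 7.2K figure follows directly from the definition of angular resolution: 120º is 7,200 arcminutes, so 1 arcminute per pixel needs 7,200 pixels across. The sketch below reproduces the arithmetic. The 100º vertical FOV is our assumption, chosen because that is what a 6K vertical count implies at the same resolution. The calculation also ignores lens distortion, which makes the real pixel-to-angle mapping non-uniform.

```python
def pixels_needed(fov_deg: float, arcmin_per_pixel: float) -> int:
    """Pixels across a field of view at a uniform angular resolution (60 arcmin per degree)."""
    return round(fov_deg * 60 / arcmin_per_pixel)


h = pixels_needed(120, 1.0)  # horizontal FOV quoted in the talk
v = pixels_needed(100, 1.0)  # assumed ~100º vertical FOV
print(h, v, f"{h * v / 1e6:.1f} Mpixel per eye")
```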

Today you can get wide FOV with low angular resolution, or the opposite, but not both together. One possible way to address this challenge is foveated rendering, i.e., rendering at high resolution only in the small part of the FOV where the eye is looking. But there are challenges here as well.
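To see why foveation is attractive, compare the pixels shaded by a uniform 1-arcminute image with a simple two-layer scheme: a coarse layer covering the full field plus a sharp inset where the eye is looking. This two-layer model is one common way to think about foveation, not a method described in the talk. The 20º x 20º inset and 4-arcminute periphery are hypothetical parameters. The sketch says nothing about eye-tracking latency or the other practical challenges.

```python
def layer_pixels(fov_h_deg: float, fov_v_deg: float, arcmin_per_pixel: float) -> int:
    """Pixels in a rectangular layer covering fov_h x fov_v at a uniform angular resolution."""
    return round(fov_h_deg * 60 / arcmin_per_pixel) * round(fov_v_deg * 60 / arcmin_per_pixel)


full = layer_pixels(120, 100, 1.0)    # uniform 1 arcmin over the whole field
coarse = layer_pixels(120, 100, 4.0)  # hypothetical 4-arcmin peripheral layer
inset = layer_pixels(20, 20, 1.0)     # hypothetical 20º x 20º foveal inset
# The inset overlaps the coarse layer; the small double count is kept for simplicity.
foveated = coarse + inset
print(f"full: {full:,}  foveated: {foveated:,}  saving: {full / foveated:.1f}x")
```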

See-through AR optics are particularly challenging as 80-90% of the display light is ultimately lost, so you need very bright displays to overpower ambient light, along with transparency of more than 80%.
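The light-loss figure translates directly into a brightness budget. If only 10-20% of the display's light reaches the eye, the display must emit 5 to 10 times the luminance the viewer actually sees. The sketch below makes that explicit. The 1,000 cd/m² at-eye target is a hypothetical value, and the contrast effects of ambient light through the see-through combiner are ignored.

```python
def required_display_luminance(target_at_eye_cd_m2: float, optical_efficiency: float) -> float:
    """Luminance the display must emit so that, after optical losses, the eye sees the target."""
    if not 0 < optical_efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return target_at_eye_cd_m2 / optical_efficiency


target = 1_000.0  # hypothetical at-eye luminance, cd/m^2
for loss in (0.80, 0.90):  # the 80-90% loss range quoted in the talk
    need = required_display_luminance(target, 1 - loss)
    print(f"{loss:.0%} lost -> display must emit {need:,.0f} cd/m^2")
```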

He then went through some pros and cons of the various imager solutions (LCOS, DLP, OLEDoS, microLEDs), as well as laser mirror or fiber scanning approaches. One conclusion is that, for microdisplays, pixel pitches below around 6 microns are unlikely, because diffraction becomes an issue and the optics get expensive. He does not think microdisplays can achieve both high angular resolution and wide FOV simultaneously.

The larger flat-panel approach may be better, but can these processes scale down to the 6-12 micron pixel pitches needed to serve AR/VR?
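Putting two numbers from the talk together shows why the trade-off bites. A row of 7,200 pixels (the 1-arcminute, 120º target above) at a 6-micron pitch is already about 43 mm wide, and at the 6-12 micron pitches discussed for larger flat panels it would be 43-86 mm wide. The sketch below is our back-of-envelope arithmetic, not Guttag's analysis, and considers only the horizontal row.

```python
def active_width_mm(pixels: int, pitch_um: float) -> float:
    """Physical width of one pixel row: pixel count times pitch (micrometres -> millimetres)."""
    return pixels * pitch_um / 1000


for pitch in (6.0, 12.0):
    print(f"7200 px at {pitch:.0f} um pitch -> {active_width_mm(7200, pitch):.1f} mm wide")
```

A panel that wide needs correspondingly large optics, which is the tension between angular resolution, FOV and headset size that Guttag described.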

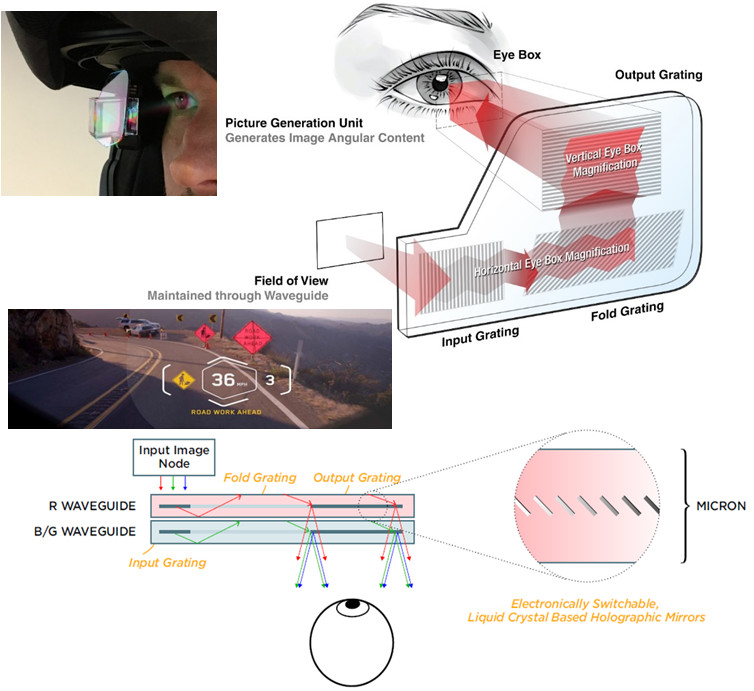

Jonathan Waldern from DigiLens provided a nice overview of the waveguide optical technologies his firm designs and manufactures for HUDs and near-to-eye displays. Overall, they offer licensed transfer of technology, services and support of their photopolymer phase grating materials.

One of the key technologies the company has developed is a suite of high-modulation-index photopolymer materials that allows for the manufacture of thinner gratings with larger angular bandwidth. These gratings can be placed on glass, plastic or films, and waveguide design is supported in Zemax.

Rockwell Collins is an investor in the company, and DigiLens has already transferred manufacturing of gratings for HUDs used in aircraft. It is working with Continental on auto HUDs and with BMW on a motorcycle HUD as well. He then showed a schematic of the motorcycle HUD to illustrate how it works. Note that there are two waveguides sandwiched together, one optimized for red and the other for green/blue.

The same technology can be applied for use with AR glasses and Waldern noted they have designs that now support 1.5 arc minutes of angular resolution with 92% transparency. A FOV of 50º is possible with a single waveguide, with two offering a 90º FOV.

During the exhibition part of the event, Waldern showed a new waveguide-based module that uses a 640×345 resolution DLP pico projector with a 30º FOV and a 2mm thick waveguide, which is able to support brightness of more than 10K cd/m² and full color. This should be available very soon.

The company’s switchable Bragg grating technology also allows the integration of a variety of optical functions as diffractive elements coupled to the waveguide, which can be turned on and off with a voltage.

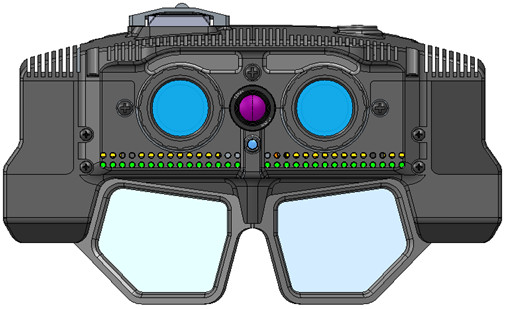

Lee Hendrick from Rockwell Collins talked about the Integrated Digital Vision System (IDVS) that the company has developed as an R&D project. It is a multi-spectral, binocular head-worn vision system for the ground soldier that is used as an “eyes out” tactical information display.

It is based upon a monocular DLP engine with a wide FOV using a DigiLens waveguide. Also integrated is a sensor assembly that includes binocular night vision sensors and a LWIR thermal sensor. A prototype is planned by the end of 2017 and may be on display at I/ITSEC in December. In the future, Rockwell Collins intends to upgrade this to full color displays while reducing cost and increasing ruggedness.

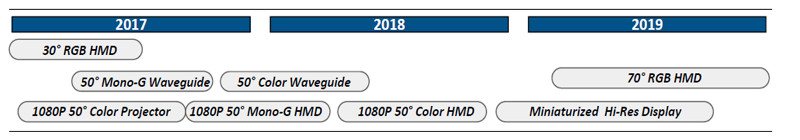

Hendrick also described another project, the Soldier Waveguide HMD, which offers a full-color 854 x 480 resolution image from a DLP pico projector and a DigiLens waveguide, with a 30º diagonal FOV and 1500 fL of brightness in a 3mm thick waveguide. A number of additional HMDs are also planned, as shown in the roadmap below.
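Specs in this area mix units: the Soldier Waveguide HMD is quoted in foot-lamberts, while the DigiLens module described earlier is quoted in cd/m². The sketch below converts between the two units (1 fL = 1/π cd/ft², about 3.426 cd/m²). It also estimates the angular pixel size from the 854 x 480 resolution and 30º diagonal FOV. The diagonal-average approach is a simplification that assumes pixels are spread uniformly across the field.

```python
import math

FL_TO_CD_M2 = 3.426  # 1 foot-lambert = (1/pi) cd/ft^2, about 3.426 cd/m^2


def arcmin_per_pixel_diag(h_px: int, v_px: int, diag_fov_deg: float) -> float:
    """Average angular pixel size along the diagonal (ignores optical distortion)."""
    return diag_fov_deg * 60 / math.hypot(h_px, v_px)


print(f"{1500 * FL_TO_CD_M2:,.0f} cd/m^2")
print(f"{arcmin_per_pixel_diag(854, 480, 30):.2f} arcmin per pixel")
```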

Carlo Tiana, also from Rockwell Collins, closed out the day with an interesting look at the requirements for transparent displays (i.e. HUDs) in aircraft along with the associated vision systems (used for pilotage in critical phases of flight).

In the HUD area, he described and showed a number of military and commercial aircraft HUDs manufactured by Rockwell Collins, including some of the new ones with waveguides from DigiLens.

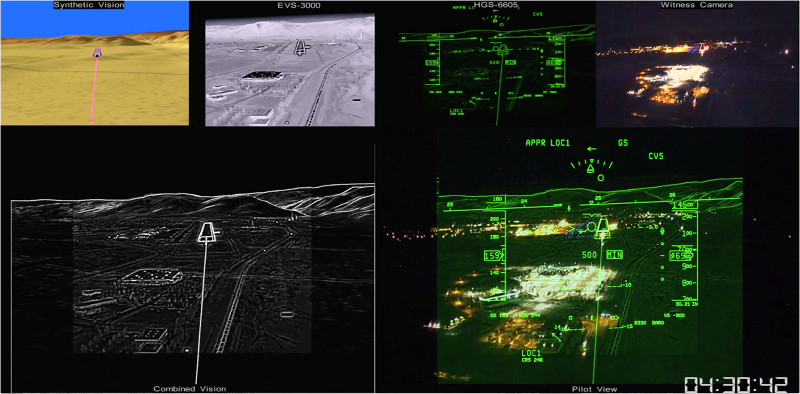

Most interesting to me, though, was the vision systems area, which offered insight into information and use cases we don’t often hear about. These systems need to incorporate LWIR, SWIR and visible sensor data along with synthetic data that includes terrain, obstacles and airfield-specific information to create images that add value for the pilot when landing the plane in poor visibility. These images need to be registered very accurately to the flight path and rendered on the HUD.
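Rockwell Collins did not describe its fusion algorithm, so the sketch below is only a generic illustration of the idea. Once LWIR, SWIR and visible frames are registered to the same pixel grid, they are merged into one sensor image, and synthetic terrain/obstacle data is blended in wherever the database has content. The max-of-sensors rule and the fixed 50% synthetic weight are hypothetical choices. The hard part in practice, accurate registration to the flight path, is assumed to have already happened.

```python
from typing import Optional


def fuse_pixel(lwir: float, swir: float, visible: float,
               synthetic: Optional[float], synth_weight: float = 0.5) -> float:
    """Fuse co-registered sensor intensities (0..1), then blend in synthetic data if present."""
    sensor = max(lwir, swir, visible)  # hypothetical rule: keep the strongest sensor return
    if synthetic is None:              # no database content at this pixel
        return sensor
    return (1 - synth_weight) * sensor + synth_weight * synthetic


def fuse_image(lwir, swir, visible, synthetic, synth_weight=0.5):
    """Apply fuse_pixel across equally sized, already registered 2-D lists."""
    return [[fuse_pixel(a, b, c, s, synth_weight) for a, b, c, s in zip(ra, rb, rc, rs)]
            for ra, rb, rc, rs in zip(lwir, swir, visible, synthetic)]


# A 1 x 2 example: the first pixel has no synthetic data, the second does.
fused = fuse_image([[0.2, 0.9]], [[0.4, 0.1]], [[0.1, 0.0]], [[None, 0.5]])
print([[round(v, 3) for v in row] for row in fused])
```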

The images above show the separate sensor and synthetic images and how they are combined for the pilot’s view in the HUD.

Eric Virey from Yole provided the firm’s assessment of the microLED market opportunity. He noted that there is considerable interest in the microLED market, with over 140 players on Yole’s list, including majors like Apple, Foxconn, Sony, Samsung, LG, BOE, Intel and more. Patent activity is increasing too.

He talked about the clear benefit of microLEDs in direct-view and microdisplay-based applications and then turned to some of the challenges. For direct-view displays, a traditional one-at-a-time pick-and-place technique would take about 41 days to build a 4K display. Obviously, this is not acceptable, so a mass transfer technique is needed. He pointed to an important milestone just reached by PlayNitride: the ability to mass transfer 200K microLED die per second, which exceeds what he considers the minimum rate needed for consumer devices, 30K transfers per second.
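The arithmetic behind these figures is easy to check. The sketch below assumes that "4K" means 3840 x 2160 pixels with three microLED die (red, green, blue) per pixel. Under that assumption, 41 days implies a pick-and-place rate of roughly 7 die per second. The implied rate is our derivation, not a number from the talk.

```python
SUBPIXELS_4K = 3840 * 2160 * 3  # assumed: one red, green and blue die per pixel


def transfer_time_s(die_count: int, die_per_second: float) -> float:
    """Time to place die_count die at a constant transfer rate."""
    return die_count / die_per_second


implied_rate = SUBPIXELS_4K / (41 * 24 * 3600)  # rate implied by the 41-day figure
print(f"{SUBPIXELS_4K:,} die; implied pick-and-place rate ~{implied_rate:.0f}/s")
for rate in (30_000, 200_000):  # Virey's minimum rate and the PlayNitride milestone
    print(f"{rate:,}/s -> {transfer_time_s(SUBPIXELS_4K, rate) / 60:.1f} min")
```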

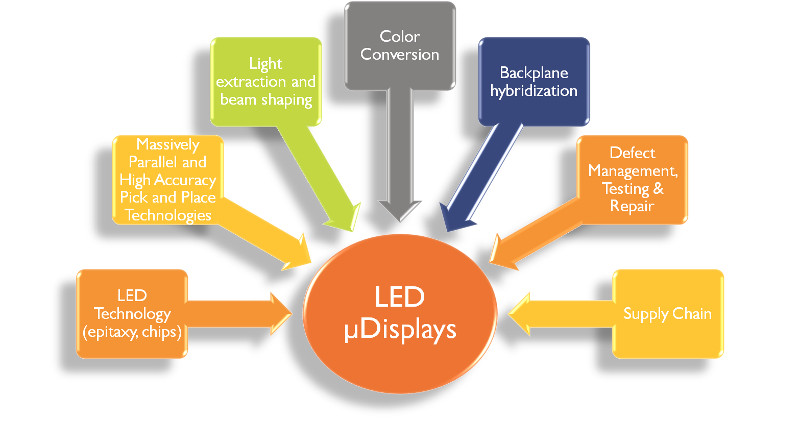

There are currently about 6 different methods being explored for mass transfer. But that is just one of the key challenges to commercializing microLED displays, as shown in the graphic below.

Virey then turned his attention to some of the applications for microLEDs, such as smartwatches, AR/MR headsets, HUDs and RPTVs, and large displays for ProAV or cinema applications. These are at various stages of development, and all face challenges before they can come to market.

For TVs, Virey sees some interesting possibilities because the cost of a microLED TV scales with the number of pixels, not with the area as it does for OLED and LCD TVs. As a result, a 55” TV could cost the same as a 110” TV. He also discussed a kind of hybrid TV solution where a conventional backplane is fabricated in later-generation fabs, with microLEDs placed on top as the frontplane layer.
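The argument can be reduced to a toy model. In it, microLED cost is proportional to the number of die, so two 4K sets of different sizes cost the same. LCD and OLED cost is proportional to panel area, so doubling the diagonal quadruples cost. The sketch below uses arbitrary relative cost units and deliberately ignores everything else (backplane, assembly, yield) that would matter in practice.

```python
import math


def microled_cost(pixels: int, cost_per_die: float = 1.0) -> float:
    """Toy model: cost proportional to the number of RGB die; screen size does not enter."""
    return pixels * 3 * cost_per_die


def area_cost(diagonal_in: float, cost_per_sq_in: float = 1.0, aspect=(16, 9)) -> float:
    """Toy model: LCD/OLED cost proportional to panel area for a given aspect ratio."""
    w, h = aspect
    scale = diagonal_in / math.hypot(w, h)
    return (w * scale) * (h * scale) * cost_per_sq_in


UHD = 3840 * 2160  # both sets assumed to be 4K
print(f'microLED 110" vs 55" cost ratio: {microled_cost(UHD) / microled_cost(UHD):.1f}')
print(f'LCD/OLED 110" vs 55" cost ratio: {area_cost(110) / area_cost(55):.1f}')
```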

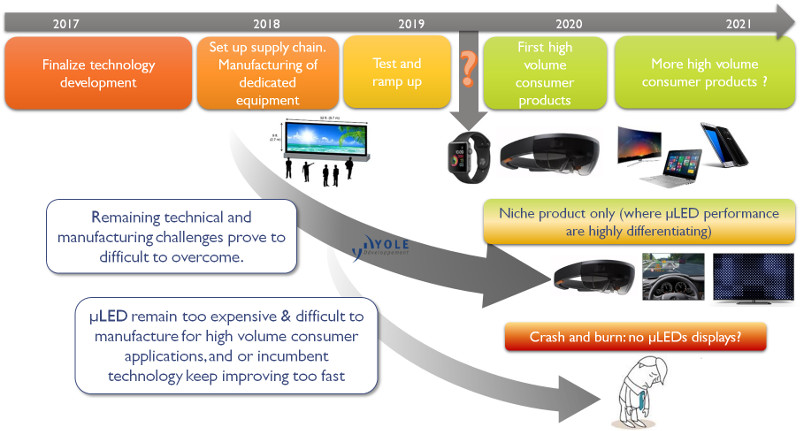

He concluded by noting that there are so many “moving parts” in the microLED market, and so many challenges, that it is hard to predict the future. Therefore, he hedged by offering three scenarios, as outlined in his graphic below.

– CC