Although the announcements from Nvidia and StarVR were highlights of the show, and there were plenty of vendors on the floor, there was also an ‘Experience Hall’ for start-ups and research projects, as well as an Immersive Pavilion that focused on AR and VR.

In the Immersive Pavilion, we first met with Innosapiens Pvt, which is developing the WE AR Sight project – an open source AR device for the visually impaired. The device is very low power and designed to be low cost (less than $200). It uses a Raspberry Pi Zero processor and communicates with a smartphone that provides the processing power for tasks such as OCR reading and facial and object recognition. The system includes a basic depth perception module that also helps wearers to avoid obstructions. The mechanical design is intended to allow fabrication by 3D printers.

Innosapiens is making this open source headset. Image:Meko

The second demo we looked at was of gaze-aware video streaming, which is being developed by the Royal Institute of Technology (KTH) in Stockholm. KTH is working with Ericsson, and we had previously seen the technology on the Ericsson booth at Mobile World in February. It is also working with Tobii. This time, we got a bit more information than previously. One of the difficulties with most approaches to optimising the resolution of streamed HEVC is that there is often a big ‘group of pictures’ – perhaps up to 25 frames – between full i-frames. That makes it hard to switch streams quickly.

KTH has tested HEVC with two streams, one of which consists entirely of i-frames. If the gaze system decides that a change is needed, it can ‘grab’ an i-frame from the second stream. Although the P-frames between that point and the next i-frame in the original stream are not correctly coded for the substituted i-frame, the group has shown in testing that they are normally close enough that there is only a small, and visually imperceptible, quality loss. The result is a low latency response to the change in the user’s gaze and high quality using a lot less bandwidth. The researchers claim that up to 83% less bandwidth is used compared to other techniques.

https://ieeexplore.ieee.org/document/8269373/
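
The stream switching described above can be illustrated with a small sketch. This is not KTH's implementation. It is a simplified model that assumes a single ‘main’ stream with a 25-frame group of pictures and a parallel all-i-frame stream, and every name in it (`Frame`, `build_schedule`, `frames_until_next_iframe`, the `"main"` and `"all_i"` labels) is hypothetical. It shows only which stream each frame would be taken from when the gaze tracker requests a change part-way through a group of pictures.

```python
from dataclasses import dataclass

GOP_SIZE = 25  # assumed spacing of full i-frames, the "up to 25 frames" quoted above


@dataclass(frozen=True)
class Frame:
    index: int
    kind: str    # "I" or "P"
    source: str  # "main" or "all_i" (hypothetical stream labels)


def frames_until_next_iframe(index, gop_size=GOP_SIZE):
    """Frames a client would have to wait for the next regular i-frame."""
    return (-index) % gop_size


def build_schedule(num_frames, switch_at, gop_size=GOP_SIZE):
    """Pick a source for every frame.

    switch_at is a set of frame indices at which the gaze tracker asks
    for a change. At those indices an i-frame is borrowed from the
    all-i-frame stream; the P-frames that follow still come from the
    main stream, even though they were coded against a different
    reference (the mismatch the article says is barely visible).
    """
    schedule = []
    for i in range(num_frames):
        on_gop_boundary = i % gop_size == 0
        if i in switch_at and not on_gop_boundary:
            schedule.append(Frame(i, "I", "all_i"))
        else:
            schedule.append(Frame(i, "I" if on_gop_boundary else "P", "main"))
    return schedule


if __name__ == "__main__":
    for frame in build_schedule(12, switch_at={7})[5:10]:
        print(frame)
```

In this model, a gaze change at frame 7 would otherwise have to wait 18 frames for the next regular i-frame; borrowing a frame from the second stream removes that wait at the cost of a small reference mismatch in the P-frames that follow.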

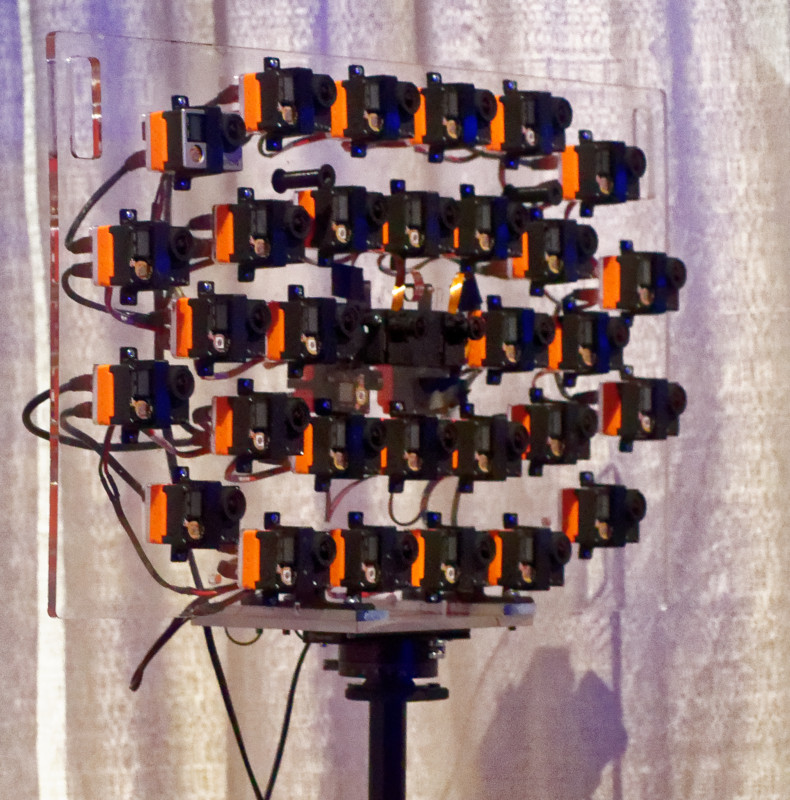

Google was in the Immersive area and was showing its work with light fields. It had two very impressive camera arrays, one using a static array of 32 cameras (one stereo pair and 30 others) and the other using a scanning array that allows circular images and uses ‘just’ 16 cameras. Staff told us that there is no specific plan as to what to do with the technology at the moment – Google is just in an exploratory phase. The company also said that it is getting a good response to the VR180 format, which limits the stereo image to 180° but considerably reduces the technology challenges compared to 360° video.

Google had two light field cameras. Image:Meko

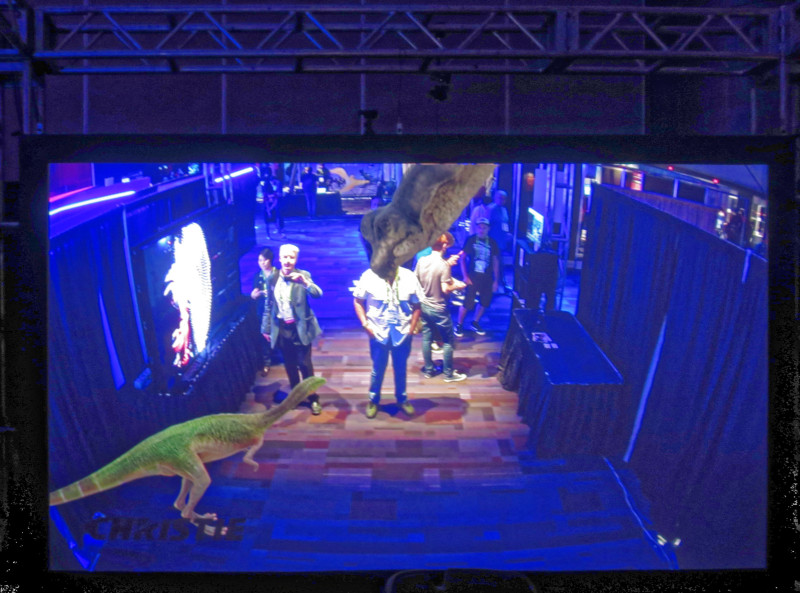

Inde was showing its ‘BroadcastAR’ system, which adds AR content to projected and CAVE environments. The system includes gesture recognition, which allows experiences to be developed in which the viewer can interact with virtual objects on the display. The system is aimed at location-based entertainment and the company has developed the ‘Definitive Dinosaur Experience’ for the Jurassic Park attraction at Universal Studios, Orlando with Amex. It also has an ‘Ocean Adventure’ in a shopping mall in Budapest.

Your reporter with a couple of dinosaurs. Image:Meko
