The success of mobile devices, we believe, is partly driven by the sensors that are available on them. The desktop has not had the same development in input – the keyboard and mouse remain the key input devices. We have been following Intel’s RealSense quite a lot, but we hadn’t caught up with what is happening in mobile, so we were pleased to attend a talk by David Beard – Developer Evangelist for Qualcomm’s Vuforia technology. (Beard clearly is close to this topic and “didn’t take prisoners” as his audience was mainly developers, but we think we got the key points!)

Vuforia is a software platform that tracks objects and images using the cameras on a tablet or phone, much as Intel’s RealSense device does. Unlike the Intel approach, however, the API can also be used to track the gaze of the user in VR and AR applications.

Beard started by saying that the platform is designed to enable new applications. The SDK lets apps see things in the real world and adds vision functions to them.

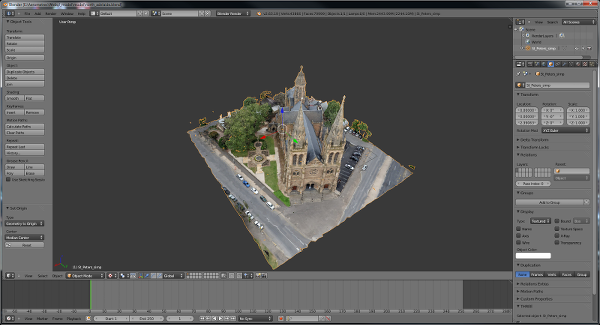

There is a simple API that allows an app to understand what the mobile device can see and where the device is, so that virtual content can be rendered onto surfaces from the real world. The system can track images (such as QR codes or book covers), objects and shapes, including small objects, and it can read text. It builds the data into a series of “meshes” that Beard described as “smart terrain”. This means, for example, that the app can understand the difference between a table and the objects on it.

The platform supports iOS and Android and can support a range of different programming languages and platforms. In response to questions, Beard clarified that although apps for mobile devices can be developed on a PC, they are not designed to be run on a PC.

As well as phones and tablets, the Samsung Gear VR, Epson BT200 and ODG R-6 are currently supported. Google Cardboard will be added later, including support for the calibration features. The API is designed to run on most mobile processors, but has some extra features if a Qualcomm Snapdragon processor is used.

Qualcomm also has cloud services as part of Vuforia – there is an object recognition database that has around 1,000,000 objects registered.

The firm’s focus is on binocular displays. It supports “optical see through” (transparent glasses) and “video see through”, where the user sees a solid display and a camera supplies the view of the real world behind it.

Challenges for the system include calibration to fit people’s different faces – there is a lot of variation in inter-pupillary distance, in the distance between the ears and so on. Later, the calibration system will be based on gaze cameras.

Beard mentioned (more than once) that power is a real problem in continued use of AR headsets at the moment; both the ODG and Moverio headsets get hot when rendering. This is a real challenge that Qualcomm is working on.

He then went on to look at some use cases. First there is the “Hybrid Reality” case, where AR is used as a portal/transition into VR – blending real and virtual worlds. This kind of app can bring physical objects into the virtual world. As an example, the Mattel View-Master uses a system based on image targets. The viewing device uses gaze detection to find out where the attention of the user is. When the viewer looks for a while at a “hotspot”, the system launches interactions.
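To make the “look for a while at a hotspot” idea concrete, here is a minimal sketch of a dwell timer in Python. It is our own illustration and not Vuforia or Mattel code: the class name `DwellTrigger`, the 1.5 second threshold and the string hotspot names are all assumptions, and we assume some other part of the app already reports which hotspot (if any) is under the user’s gaze on each frame.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellTrigger:
    """Fires once when the gaze has rested on one hotspot long enough.

    Hypothetical helper for illustration; the threshold is an assumption.
    """
    dwell_seconds: float = 1.5
    _current: Optional[str] = None   # hotspot currently under the gaze
    _elapsed: float = 0.0            # time spent on that hotspot so far
    _fired: bool = False             # prevents re-firing while the gaze stays put

    def update(self, hotspot: Optional[str], dt: float) -> Optional[str]:
        """Call once per frame with the gazed-at hotspot and the frame time.

        Returns the hotspot name on the frame the dwell completes, else None.
        """
        if hotspot != self._current:
            # Gaze moved to a different hotspot (or to nothing): restart the timer.
            self._current = hotspot
            self._elapsed = 0.0
            self._fired = False
            return None
        if hotspot is None or self._fired:
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_seconds:
            self._fired = True
            return hotspot
        return None
```

An app would call `update()` every frame and launch its interaction whenever the return value is not `None`. Resetting the timer whenever the gaze leaves the hotspot is one design choice among several; a more forgiving version might tolerate brief glances away.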

A second use case was in architecture – he showed an airport building in Orly, Paris. In this case, the application starts by showing building plans that have been input into the system so that a design can be examined. The application starts in AR mode, then goes to VR. The transition has to be carefully managed.

Gaze detection is a very popular feature. Gaze can be applied across different applications and displays, so it is popular as one of a number of modalities used for control and interaction. Beard demonstrated another use case, the “Peronio” Pop-up book (http://tinyurl.com/peronio).

Beard then went on to show how a developer would add the gaze function using Vuforia and how it can integrate the gaze data with the inertial and motion tracking from the Oculus system.

Beard then went through the mechanics of using Unity, which is beyond the scope of this article. Basically, you create a Vuforia AR camera in the development environment, then configure it to support binocular cameras, which are designed to be converged like real eyes. An Oculus camera can also be imported, and the Vuforia and Oculus cameras are then joined together to handle transitions between Vuforia and Oculus.
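As a rough illustration of what “converged like real eyes” means geometrically, the sketch below computes how far each of two cameras would have to turn inwards to aim at a point straight ahead. This is plain trigonometry in Python, not the Vuforia or Unity configuration that Beard showed; the function name `toe_in_degrees` and its parameters are our own assumptions.

```python
import math


def toe_in_degrees(ipd_m: float, convergence_distance_m: float) -> float:
    """Inward rotation (in degrees) of each camera so both aim at one point.

    ipd_m: distance between the two cameras (inter-pupillary distance), metres.
    convergence_distance_m: distance straight ahead to the point both
    cameras should look at, metres.
    Hypothetical helper for illustration only.
    """
    if ipd_m <= 0 or convergence_distance_m <= 0:
        raise ValueError("distances must be positive")
    # Each camera sits half the IPD off-centre, so the angle it must turn is
    # that of a right triangle with legs ipd/2 and the convergence distance.
    return math.degrees(math.atan2(ipd_m / 2.0, convergence_distance_m))
```

With a 64 mm separation and a convergence point 1 m away, each camera turns inwards by roughly 1.8 degrees. Because the angle depends on the separation, this also hints at why the variation in inter-pupillary distance between users matters for calibration.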

Gaze support can be easily implemented to allow interactions with the 3D environment.
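For readers who want a feel for what such an interaction test involves, here is a minimal sketch in Python that checks whether a gaze ray hits a spherical target. It is our own simplification and not the Vuforia or Unity API: we assume the gaze is simply the direction the head is facing, that the target can be approximated by a sphere, and the function name `gaze_hits_sphere` is hypothetical.

```python
import math
from typing import Sequence


def gaze_hits_sphere(
    origin: Sequence[float],
    direction: Sequence[float],
    center: Sequence[float],
    radius: float,
) -> bool:
    """Return True if a gaze ray from `origin` along `direction` hits the sphere.

    Hypothetical helper for illustration; all vectors are (x, y, z) triples.
    """
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0:
        raise ValueError("gaze direction must be non-zero")
    unit = [d / length for d in direction]

    to_center = [c - o for c, o in zip(center, origin)]
    dist_sq = sum(v * v for v in to_center)
    if dist_sq <= radius * radius:
        return True  # the viewer is already inside the target sphere

    # Distance along the ray to the point closest to the sphere's centre.
    along = sum(a * b for a, b in zip(to_center, unit))
    if along < 0:
        return False  # the target is behind the viewer

    # Squared distance from the centre to that closest point (Pythagoras).
    miss_sq = dist_sq - along * along
    return miss_sq <= radius * radius
```

In a real engine this test would normally be done by the engine’s own ray casting against the scene, so the sketch is only meant to show the underlying geometry.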