Nvidia held its CES press event away from the main press venue at Mandalay Bay and, as in previous years, highlighted the importance of automotive to the company’s future by exhibiting in the North Hall on the show floor.

The press event started with a video that showed applications in automotive and AI that are powered by the company’s GPU technology. The video was accompanied by music generated by an intriguing project which used AI and deep learning based on many classical musical works by famous composers to create the soundtrack. Check here for more on this and samples of compositions, which are created electronically, but then played by real musicians. We also liked the warning system that stopped a driver opening a car door because of an approaching cyclist.

Jen-Hsun Huang of Nvidia, who has been doing this kind of event for 25 years, made the main presentation and talked about three key industries. The first is gaming: PC gaming continues to grow and is a $100 billion industry. The second, AI and deep learning, is potentially a $3 trillion industry that is affecting every industry and technology and is one of the fastest developing areas at the moment. Developments and algorithms are improving very fast and a lot of the work has been applied to autonomous vehicles, the control of which is a difficult technical challenge. The third is the world of transport, including cars, trucks and mobility as a service, which is a $10 trillion opportunity.

Gaming

Huang is very optimistic about how much VR headsets can improve over the next couple of years and he said that eSports is now the biggest sport in the world. He said that 600 million people now watch eSports (although his presentation said that this number would be reached by 2020). That’s more people than watch the NFL, Huang said (and that reflects a US view – in 2015, the Premier League is reckoned to have been seen in 730 million homes, representing more than three billion people! – Man. Ed.) Many users share their gaming via YouTube. The fastest growing console in the US is the Nintendo Switch, which is built on Nvidia technology and is both high powered and mobile.

At the show there were 10 new desktop gaming PCs, three notebooks and three displays based on Nvidia technology. The Big Format Gaming Display (BFGD) is designed to change the gaming experience by bringing smooth motion, based on G-Sync and other technology, to very large displays. It was being shown by HP, Asus and Acer, which accounted for the three displays.

Nvidia’s Max-Q gaming notebook is half the weight of previous platforms.

Huang talked about the Max-Q design, which is about taking a very powerful notebook platform and optimising it for low power. Traditionally, gaming notebooks are very fat and heavy. Huang showed a 10lb (4.5kg), 60mm thick device as his reference, but the new one is 20mm thick and weighs just 5lb (2.25kg). The new one that he presented was a Gigabyte model, and it had 4X the performance of a MacBook Pro and 2X the performance of a high end console, in a notebook format. To achieve this, the whole system from PSU to CPU has been optimised. Huang said that although he was a ‘first generation gamer’, one day everybody in the world would be a gamer.

Nvidia Really Does AI

Turning to the second opportunity, Huang said that Nvidia was lucky enough to start on deep learning a long time ago. He showed the Nvidia Volta module, which is a very high power processor (claimed to be the single most powerful processor ever made, but small enough that he took it from his back jeans pocket!) and can produce 125 Teraflops via its Tensor Core. Eight of these can be combined into a single system and that means 1 Petaflops, enough to join the ranks of the world’s top 500 supercomputers.
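The arithmetic behind the one Petaflops figure can be checked with a short sketch. It uses only the two numbers quoted in the talk and assumes ideal linear scaling of peak throughput across modules, which real workloads will not reach; the function and constant names are illustrative and not taken from any Nvidia software.

```python
# Figures quoted in the talk; names are hypothetical, for illustration only.
TFLOPS_PER_VOLTA = 125    # claimed Tensor Core throughput of one Volta module
MODULES_PER_SYSTEM = 8    # modules combined into a single system


def system_petaflops(tflops_per_module: float, modules: int) -> float:
    """Aggregate peak throughput in petaflops, assuming perfect linear scaling.

    1 petaflops = 1000 teraflops.
    """
    return tflops_per_module * modules / 1000


if __name__ == "__main__":
    total = system_petaflops(TFLOPS_PER_VOLTA, MODULES_PER_SYSTEM)
    print(f"{MODULES_PER_SYSTEM} modules: {total} petaflops peak")
```

On these numbers a single module is one eighth of the combined system, which is the basis of the "1/8th of a supercomputer" remark.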

Huang had 1/8th of a supercomputer in his back trouser pocket!

Huang said that all the cloud service providers globally have adopted Volta and that every server maker in the world also has systems that use it. They can all use Nvidia’s software, which can be downloaded, and the whole system can be downloaded as a virtual machine. The DGX is a supercomputer with eight processors that can be used directly by those that can’t build their own systems.

Nvidia has also developed the Titan V, which it describes as the most powerful GPU ever made and which can also be used for deep learning and other non-graphics applications.

Huang claimed that the Nvidia TensorRT has a 10X better cost of ownership than other approaches for applications such as image analysis and processing. The equivalent of four racks can be housed in a single unit using Nvidia technology. He showed how such a system can be used to recognise the type of flower from images. The system had taught itself how to identify different flower types using an inference engine. He claimed that a system based on traditional server architectures would have taken up the whole stage to match the DGX system, rather than fitting in a single box. “The more you spend,” he said, “the more you save!”

The deep learning technology has been developed to be used in ray-tracing which can calculate the most likely colour of any pixel, speeding up that graphics process. It has also been used to teach VR systems how to animate speech so that in a virtual world, a character has been created that speaks any text and looks realistic.

Nvidia uses dual ‘competing’ learning systems, one creating human images and the other rejecting those that it thinks are false, and this process allows both the production and the detection to get better and better at high speed. The result is very authentic looking, but artificial, human faces.

Huang then showed a video of another musical composition, but this one was taught to mimic the compositional style of John Williams.

Transportation is a Huge Opportunity

Transportation is one of the key applications for deep learning and AI. There are around 82 million traffic accidents globally per year, with around 1.3 million fatalities and more than $500 billion in costs. The hour a day that is spent in commuting represents $10k of lost wages (at the $80k earnings of an ‘average person’ according to Huang!).

Autonomous Vehicles (AV) will have a huge impact, partly because another billion people on the planet will increase traffic by a factor of three. That becomes difficult or impossible to sustain and AV could drive down the cost per mile to the same level as personally-owned cars. What if the car is not the means to go to a destination but is the destination?

Commercial freight is a huge business and in the US, 3.5 million truckers are employed and can drive 11 hours per day. When driver logging was enabled in the US, productivity dropped by 11% or 12%. The use of Amazon and online shopping is increasing the demand for delivery services, but there are currently not enough drivers. AV could help or solve this, perhaps by allowing drivers more rest, Huang said.

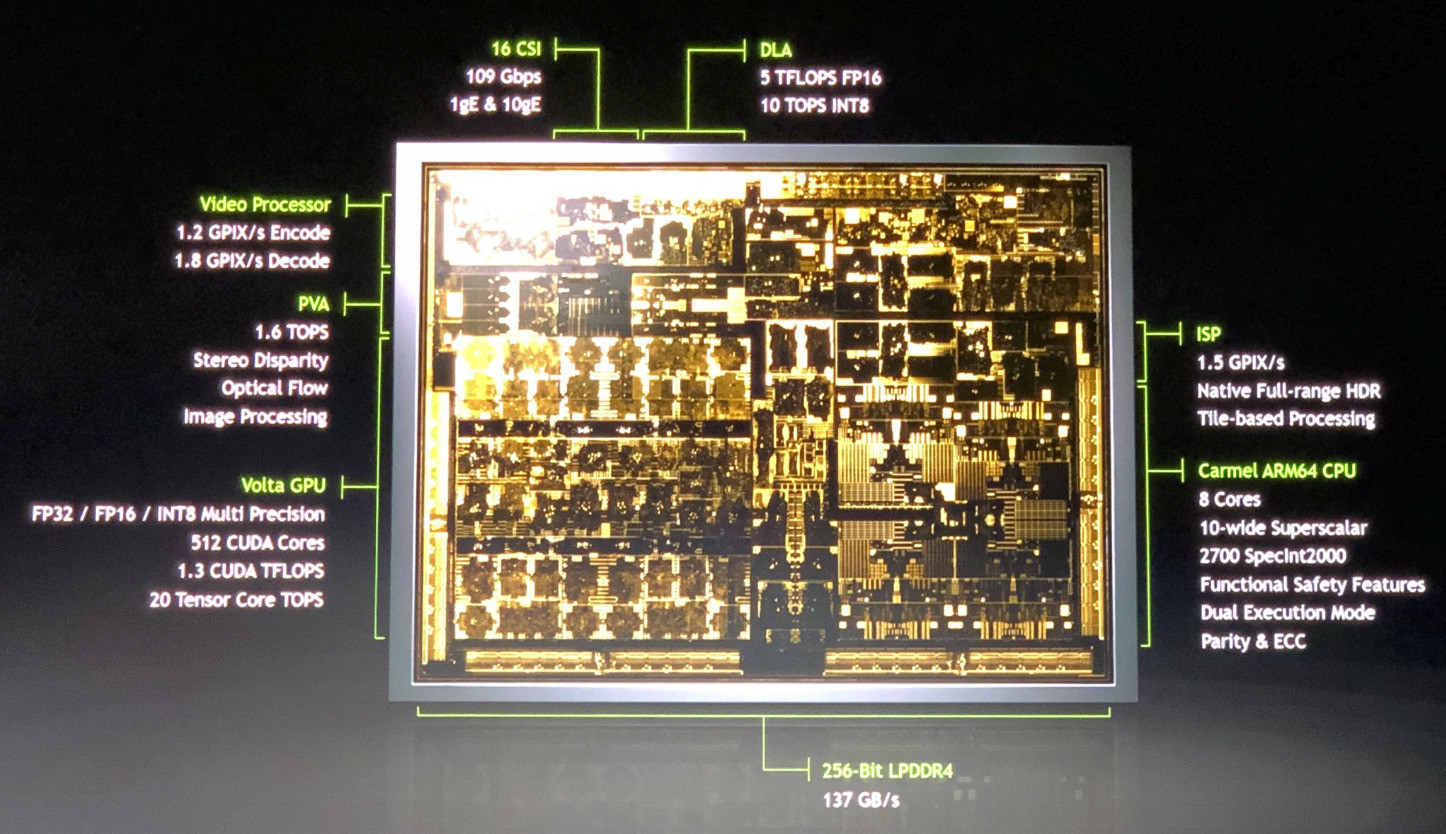

Huang said that the complexity of AV is of a different order of magnitude from that of previous markets that Nvidia has entered. He announced a new processor called Drive Xavier, which is said to be the first autonomous driving processor. Reaching into his other pocket, he pulled out the latest chip, said to be the largest SoC ever made. It took 8,000 engineer years to develop, has an area of 350mm², is based on 12nm FF technology and has 9 billion transistors.

Nvidia’s Xavier Drive Chip is built around safety and redundancy.

It has integrated error correction and duplication of circuits, uses 8 bit, 16 bit and 32 bit calculations to work with any network, and has special circuitry for camera comparisons to enable stereo analysis. All cameras can be analysed at 1.5 Gbps. Development of systems has been done on a four chip, 300W, 24 Teraflop system, but this is now down to a single chip with 30 Teraflops and just 30W power consumption. It will power level 3 and level 4 autonomy (level 4 means autonomy in defined conditions, rather than in any environment, which is level 5). Sampling of Xavier will be in Q1, with production towards the end of the year.
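The efficiency gain implied by those two sets of figures can be worked out in a few lines. This is a rough sketch that takes the quoted throughput and power numbers at face value and assumes both are measured in the same way, which the talk did not confirm; the function name is illustrative.

```python
def tflops_per_watt(tflops: float, watts: float) -> float:
    """Throughput per watt for a quoted peak throughput and power draw."""
    return tflops / watts


# Figures as quoted in the talk.
dev_system = tflops_per_watt(24, 300)   # four chip development system
xavier = tflops_per_watt(30, 30)        # single Xavier chip

improvement = xavier / dev_system

if __name__ == "__main__":
    print(f"Development system: {dev_system:.2f} Teraflops per watt")
    print(f"Xavier:             {xavier:.2f} Teraflops per watt")
    print(f"Improvement:        {improvement:.1f}x")
```

On the quoted numbers, the move to a single chip is a gain of roughly 12.5 times in throughput per watt, with slightly more throughput at one tenth of the power.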

The company has been developing a complete stack for navigation and control. In December, an Nvidia engineer drove 8 miles and through 23 intersections in Holmdel, New Jersey, with eight turns and two stop signs, without touching the car’s steering wheel. Huang announced that Baidu and ZF would use Xavier to develop a new car platform for China.

Mobility as a service (a ‘Robotaxi’ without a driver at all) makes things more complicated, and for this Nvidia has the Drive Pegasus, a dual Xavier and dual GPU system which should be able to enable a level 5 autonomous car. Nvidia plans to sample the board this year. Aurora, a start-up which is working with BMW and Hyundai to build AVs, is using the Nvidia platform, and Uber will also work with the firm to build self-driving vehicles, Huang announced.

The biggest challenge is to build a system that stays safe if there is a system or device failure. Nobody has made a system that meets ASIL-D safety levels before. Airlines are seeing what Nvidia is doing, and are ‘knocking on the door’. Every part of every CPU/GPU/Video in the system is designed for safety and systems have complete traceability.

Nvidia’s VR simulator allows high speed and repetitive testing.

Testing has also become incredibly difficult and complex and there are many variables. A simulated environment is much better, as tests can be repeated to solve problems. Fortunately, Nvidia has a lot of experience of building virtual environments and, by running the tests on a supercomputer, the tests can be run much faster than real time. The use of virtual environments also means that individual parameters can be changed, for example the position of the sun, which helps as Lidar is sensitive to the sun. Sensors can also be tested, and dangerous situations can be simulated that you would not want to try in the real world. New versions of software can be run in the simulator to ensure that no adverse effects are created.

The AI needs to be in the car because that is where the sensors, the data about the driver and environment, and the contextual awareness are, so cars will be AI systems. There will be millions of cars, taxis and trucks. The driving experience will be created by the AI choices of the car maker, so Nvidia has built a platform for this.

In the future, people will interact with computers via gaze, voice and gesture, and computers will communicate back by AR, so Nvidia has a new software platform, Drive AR, to allow access to the data in a car. In five years, users will not accept cars that don’t respond to voice and don’t have AR in a HUD (or other display). Points of interest in the environment should be shown on the display, perfectly registered with the real world. Huang showed how VR could be used to step into the simulator and check what is happening.

Huang announced that Nvidia is working with Volkswagen to put AI into the firm’s cars. Dr Herbert Diess of VW came on stage. He said that, today, most of us love our cars, the brands, the design and the performance, but there are negatives in terms of accidents and pollution. That will change as the car becomes less negative, being environmentally friendly and safer. Some say there will be more shared mobility, but Diess said he is not so sure of this. Traffic jams won’t go away because there will be an increased demand for mobility, so more time will be spent in cars. That means you will want your own environment for this longer time.

Connectivity is essential to allow the upgrading of cars during their lifetime. Diess said that designers need new skills and Nvidia is supplying some of these. Product life cycles are getting significantly faster. Tier 2 (technology) suppliers will have to work more closely with both Tier 1 suppliers and vehicle makers. Up to now, there have been 60 or 70 small computers in a car, each sub-system with its own isolated processor, but in the future there will be just two or three big processors. That means a lot of changes. Diess said that there will be five new VW electric vehicles in 2020 with new interiors and built on completely new platforms. Huang showed a concept VW Microbus of the future, the Buzz.

On its booth, Nvidia was highlighting the automotive applications including the Xavier drive platform. Although we got a briefing from staff, they didn’t move much from the comments that Huang had made in his talk.

Nvidia showed the Xavier Drive system on its booth. Image: Meko

Analyst Comment

We were disappointed that Huang didn’t cover the BFGD very much in his talk and we only heard at the end of the show that there was a group promoting the display in a hotel suite. However, we did get to talk about it with HP and Asus, so we have some more information in those reports. (BR)