Nvidia’s Jensen Huang gave a long talk at the Siggraph show. After showing a great set of images from computer games and PC renderings over the years, he pointed out that this year was the thirtieth anniversary of the launch of Pixar’s RenderMan, which transformed the graphics and cinema industries. Pixar’s ‘relentless pursuit of image perfection’ has been outstanding. In 1995, Toy Story was created using 800,000 CPU hours of rendering on a 100MHz, 27MFlop Sparc CPU.

With ‘Cars’, Pixar introduced ray tracing and, in 2006, it used global illumination for the first time, which made ‘light work as it should’ and saved a huge amount of time for Pixar’s artists. In 2016, with ‘Finding Dory’, the reflections and refraction of water massively increased the amount of calculation needed and, in response, developers introduced AI-based de-noising, which once again reduced the render time needed. However, the level of computing was still huge. With ‘Big Hero 6’, rendering needed 200 million CPU core hours, with those CPUs now running at 2GHz. That was a 4,000-fold increase in the computation needed compared to Toy Story. Artists will always use as much power as possible for as many hours as they can stand, Huang said.
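
The scale-up can be sanity-checked with back-of-envelope arithmetic. The Python sketch below simply multiplies the quoted hours by the quoted clock rates. Treating one clock cycle on a 1995 Sparc and on a 2GHz core as equal work is our own, deliberately crude assumption (it ignores instructions per clock, SIMD width and memory), so the result is only an order-of-magnitude check against the roughly 4,000x figure quoted.

```python
# Figures as quoted in the talk.
TOY_STORY_CPU_HOURS = 800_000
TOY_STORY_CLOCK_HZ = 100e6       # 100MHz Sparc
LATER_CORE_HOURS = 200_000_000
LATER_CLOCK_HZ = 2e9             # 2GHz cores


def cycle_ratio_of(hours_a, clock_a, hours_b, clock_b):
    """Crude ratio of total clock cycles spent by b versus a.

    Assumes (our assumption) that a cycle does the same work on both machines.
    """
    return (hours_b * clock_b) / (hours_a * clock_a)


hour_ratio = LATER_CORE_HOURS / TOY_STORY_CPU_HOURS
cycle_ratio = cycle_ratio_of(TOY_STORY_CPU_HOURS, TOY_STORY_CLOCK_HZ,
                             LATER_CORE_HOURS, LATER_CLOCK_HZ)
print(f"hours: {hour_ratio:.0f}x, clock cycles: {cycle_ratio:.0f}x")
```

By this measure the hours alone grew 250-fold and the cycle count about 5,000-fold, the same order of magnitude as the figure Huang gave.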

For the last few years, Moore’s Law has, more or less, come to an end in terms of clock speed and other factors, although GPUs have stayed ahead of the curve thanks to architectural and software changes. Even so, Huang believes there seems to be a law that makes the demands of animators grow whatever the technology does!

Huang went through the progress of Nvidia’s GPU technology, which has exploited hardware to keep removing roadblocks in software and tools, allowing faster progress than pure hardware development would. All of this has been in pursuit of ‘photoreal’ imagery. Huge levels of geometry, sub-surface modelling, physics processing, animation and transparency are all barriers that have had to be overcome. Facial animation has needed a huge amount of power.

One huge roadblock remains: simulating the effects of light. Up to now, there have been a lot of ‘hacks’ to create something that looks real. In 1979, Turner Whitted (now working at Nvidia) first described a multi-bounce recursive ray tracing algorithm. Huang went through what that algorithm does, including how it deals with reflections. It uses Snell’s Law to handle refraction, and it is incredibly computationally intensive. At the time the paper was written, a $1.5 million VAX computer took 1.5 hours to create a single frame at low resolution (512 x 512), so only around 60 pixels (not frames!) were created per second. Whitted said at the time that it would take a Cray supercomputer behind every pixel to generate real-time ray tracing. That turns out to have been ‘pretty close’ to reality.
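
To make the recursion concrete, here is a minimal, grey-scale sketch in the spirit of Whitted’s algorithm (not his original code, nor Nvidia’s): each hit on a glass sphere spawns a reflected ray and, via Snell’s Law, a refracted ray, down to a fixed depth. The one-sphere scene, the weights `KR`/`KT`, the sky gradient and the depth limit are illustrative assumptions, and lights and shadow rays are omitted to keep it short.

```python
import math

# Illustrative scene (our assumption): one glass sphere in front of a sky gradient.
CENTER, RADIUS = (0.0, 0.0, -3.0), 1.0
GLASS_IOR = 1.5        # refractive index of the sphere
KR, KT = 0.1, 0.9      # reflected / transmitted weights (assumed)
MAX_DEPTH = 5          # recursion limit on bounces


def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))


def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2(d.n)n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))


def refract(d, n, eta):
    """Bend unit direction d at unit normal n (n faces against d) by Snell's Law.

    eta = n1 / n2. Returns None on total internal reflection.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)   # sin_t = eta * sin_i
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return add(scale(d, eta), scale(n, eta * cos_i - cos_t))


def hit_sphere(origin, d):
    """Nearest positive distance along unit ray d to the sphere, or None."""
    oc = sub(origin, CENTER)
    b = dot(oc, d)
    disc = b * b - (dot(oc, oc) - RADIUS * RADIUS)
    if disc < 0.0:
        return None
    s = math.sqrt(disc)
    for t in (-b - s, -b + s):
        if t > 1e-4:                # epsilon avoids re-hitting the ray's start point
            return t
    return None


def sky(d):
    return 0.5 * (d[1] + 1.0)       # simple vertical gradient as the background


def trace(origin, d, depth=0):
    """Shade one ray, recursively spawning reflected and refracted child rays."""
    if depth > MAX_DEPTH:
        return 0.0
    t = hit_sphere(origin, d)
    if t is None:
        return sky(d)
    p = add(origin, scale(d, t))
    n = normalize(sub(p, CENTER))
    if dot(d, n) < 0.0:             # entering the glass
        eta = 1.0 / GLASS_IOR
    else:                           # leaving: flip the normal, invert the ratio
        n, eta = scale(n, -1.0), GLASS_IOR
    t_dir = refract(d, n, eta)
    kr = KR if t_dir is not None else KR + KT   # total internal reflection
    colour = kr * trace(p, reflect(d, n), depth + 1)
    if t_dir is not None:
        colour += KT * trace(p, t_dir, depth + 1)
    return colour


print(trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0)))
```

Because every hit can spawn two more rays, the work per pixel grows roughly as 2 to the power of the bounce depth, which goes some way to explaining why a VAX managed only a few dozen pixels per second.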

Now, Huang said, we can put that level of power behind each pixel; in fact, he continued, there is the equivalent of several Crays behind each pixel. This new way of rendering, called Nvidia RTX, was introduced at the Game Developers Conference (GDC) in March. As shown at GDC, it needed four GPUs connected together to generate five rays per pixel in real time.

Huang had a surprise for the Siggraph audience: the render was running not on the big four-GPU system seen at GDC, but on a single card with a single GPU from the Quadro RTX family, the ‘world’s first’ ray-tracing GPU. There are several versions, delivering up to 10 gigarays (a new term!) per second. That compares to the fastest CPU (not GPU), which can perform just ‘a few hundred thousand’ per second, Huang said.
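
A gigaray figure is easier to judge as a per-pixel budget. The sketch below divides ray throughput by the pixels drawn per second. The 1920 x 1080 resolution at 60 frames per second is our assumption (the talk did not tie the figure to a display mode), as is reading ‘a few hundred thousand’ as 300,000 rays per second.

```python
def rays_per_pixel(rays_per_second, width, height, fps):
    """Average rays available per pixel per frame at a given ray throughput."""
    return rays_per_second / (width * height * fps)


# Assumed target (not from the talk): 1920 x 1080 at 60 frames per second.
WIDTH, HEIGHT, FPS = 1920, 1080, 60
for label, rate in [("GPU at 10 gigarays/s", 10e9),
                    ("CPU at an assumed 300k rays/s", 3e5)]:
    print(f"{label}: {rays_per_pixel(rate, WIDTH, HEIGHT, FPS):.4f} rays/pixel/frame")
```

On those assumptions the top card has a budget of about 80 rays per pixel per frame, comfortably above the five rays per pixel of the GDC demo, while the CPU figure works out at well under one ray per hundred pixels.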

Huang played with the lighting on the shiny RTX – ‘It uses real physics’, he quipped.

The chip can perform up to 16 teraflops of floating point operations plus 16 trillion integer operations per second (TIPS). Huang had fun showing off the shiny surface of the new board (“it supports real physics”, he said). The board comes with a new NVLink multi-GPU connector, which links GPUs and their frame buffers at 100GB/s. The card can perform up to 500 trillion Tensor operations per second.

The Turing has a ray tracer, AI and a GPU.

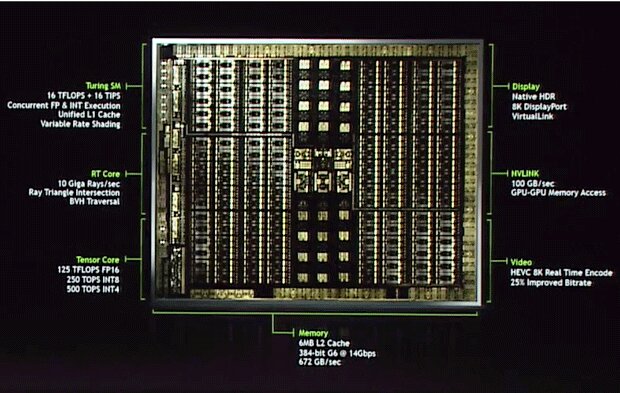

There are three types of processor in the GPU (although it is one chip): the SM for compute and shading, a brand new processor called the RT Core for ray tracing, and the Tensor Core for deep learning and AI. The new Turing GPU, Huang said, is the biggest leap for graphics since the introduction of the CUDA GPU compute architecture in 2006. Making all three interoperable will allow developers to create amazing new imagery. Applications to support the GPU will arrive shortly.

The chip supports motion-adaptive shading (which reduces the shading needed) and foveated rendering, so that processing can be optimised for different areas of the image and computing power focused where it is needed. He then explained the importance of bounding volume calculations and how they interact with shaders, and said that this is why the technology has taken ten years to develop. Processing can be performed at different floating point precisions for optimisation.
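
Bounding volume calculations matter because they let a ray skip whole groups of triangles at once. Nvidia did not describe how the RT Core does this in hardware, so the Python sketch below shows only the textbook approach: a ‘slab’ test of a ray against an axis-aligned box, used to walk a tiny bounding volume hierarchy (BVH). The `Node` class, `candidates` function and box layout are hypothetical illustrations.

```python
import math
from dataclasses import dataclass, field


def inverse_direction(d):
    """Per-axis reciprocal; axis-parallel rays get +/-inf so the slab test still works."""
    return tuple(1.0 / c if c != 0.0 else math.copysign(math.inf, c) for c in d)


def ray_hits_box(origin, inv_dir, box_min, box_max, t_max=math.inf):
    """Slab test: does origin + t * dir, for t in [0, t_max], cross the box?

    (Edge case ignored in this sketch: a zero direction component with the
    origin exactly on a box face gives 0 * inf = NaN.)
    """
    t_near, t_far = 0.0, t_max
    for axis in range(3):
        t1 = (box_min[axis] - origin[axis]) * inv_dir[axis]
        t2 = (box_max[axis] - origin[axis]) * inv_dir[axis]
        t_near = max(t_near, min(t1, t2))   # latest entry across the three slabs
        t_far = min(t_far, max(t1, t2))     # earliest exit across the three slabs
    return t_near <= t_far


@dataclass
class Node:
    """Hypothetical BVH node: a box plus either child nodes or leaf triangles."""
    box_min: tuple
    box_max: tuple
    children: list = field(default_factory=list)
    triangles: list = field(default_factory=list)   # leaf payload (ids here)


def candidates(node, origin, inv_dir):
    """Collect triangles whose boxes the ray crosses; missed subtrees are skipped."""
    if not ray_hits_box(origin, inv_dir, node.box_min, node.box_max):
        return []
    found = list(node.triangles)
    for child in node.children:
        found += candidates(child, origin, inv_dir)
    return found


left = Node((0, 0, 0), (5, 10, 10), triangles=["A"])
right = Node((5, 0, 0), (10, 10, 10), triangles=["B"])
root = Node((0, 0, 0), (10, 10, 10), children=[left, right])
print(candidates(root, (2, -1, 2), inverse_direction((0, 1, 0))))
```

Only triangles returned by the traversal need an exact (and much more expensive) ray-triangle intersection test; the rest of the scene is culled a box at a time.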

The system has been designed for HDR ‘through and through’ and the card will support 8K displays. The video processing can encode 8K video with a high level of quality and low bitrates.

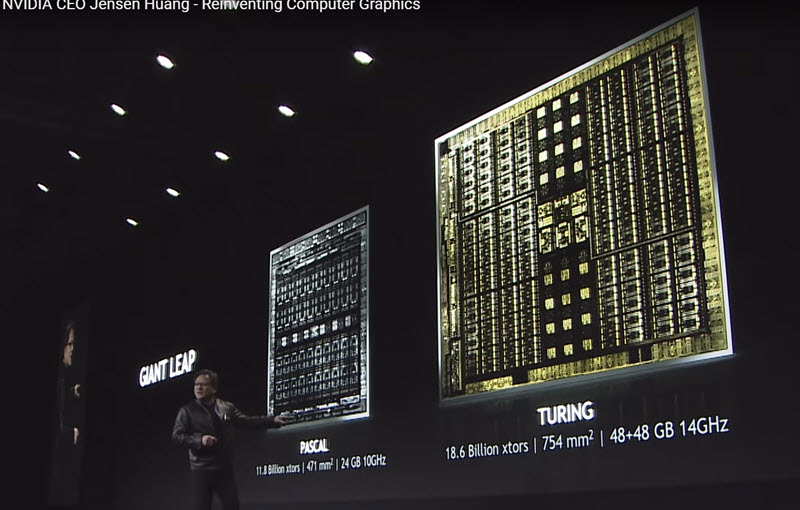

Huang then compared the Turing GPU to Pascal. The transistor count goes up from 11.4 billion to 18.6 billion and the die area from 471 mm² to 754 mm², making it the largest processor ever made apart from Nvidia’s own Volta. It can drive 48GB of frame buffer per card, or 96GB with two cards linked over NVLink, at 14Gbps. Floating point and integer operations can be performed at the same time.

The Turing is bigger than Pascal and is the second largest chip ever made.

There is a new software stack, with APIs and tools, to support applications. The Tensor Core will make all sorts of new applications possible at very high speed. The Nvidia Material Definition Language (MDL) will be made open source, Huang announced, to allow the capture of different materials. Nvidia is also working with Pixar to support Universal Scene Description (USD), to allow models to be moved between applications such as Maya and RenderMan.

Huang described the new stack as ‘Computing Graphics Reinvented’. He showed an example of the ‘Cornell Box’, a standardised scene used for analysing ray tracing performance and accuracy; the demo was developed to show how different effects can be added.

Huang then showed a short clip, rendered in real time, to show off the features of the Turing. (Huang made the point that the display system used for the presentation really wasn’t good enough to show the full power).

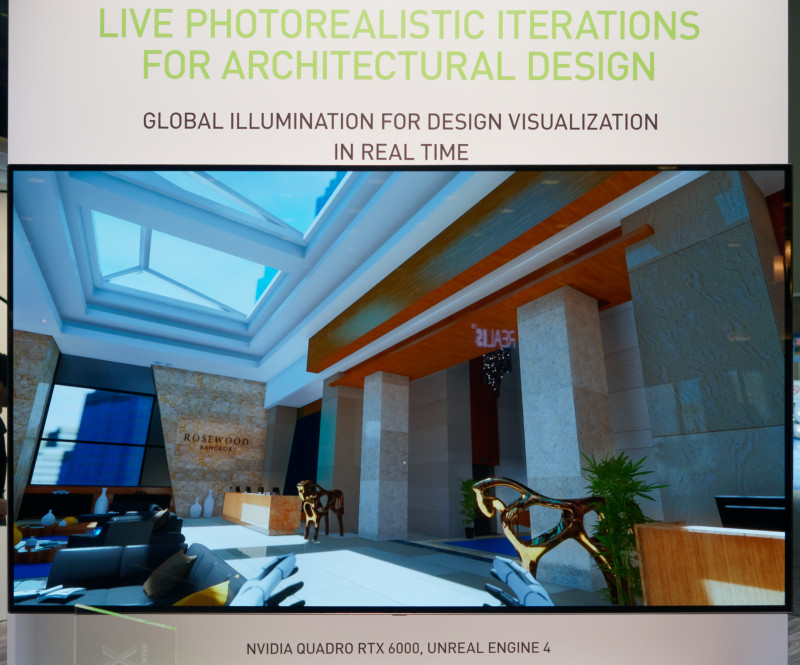

Without ray tracing and global illumination on huge scenes, live rendering is not possible, and creating such simulations has been hard, verging on impossible, so most are currently done on CPUs. Although artists can and do use their knowledge to make traditional games and applications look incredible, at the moment the lights can’t move or react. The same is true in architecture, design and other professional applications. Even the IKEA catalogue is created using pre-renders on huge render farms. Turing will allow a big speed-up in workflow.

As well as the Pixar anniversary, it is 70 years since Porsche was founded, and the company created a special video to celebrate. The video had to look right, Huang said, before revealing that it was being rendered in real time! It was developed with the Unreal Engine engineers at Epic Games and was very, very impressive. The technology will allow truly photo-realistic renderings that are indistinguishable from reality, as well as movie-quality gaming.

This Porsche image was being rendered in real time, Huang said.

Huang said that the key to developing the capability was adding both ray tracing and AI, which has pulled in the timeline for real-time rendering by five to ten years. He said that the new technology gives a 6X acceleration in computer graphics. AI allows the calculations to be done at lower resolution and, if the AI is well trained, the scaling is not visible. Anti-aliasing is also improved: the AI learns from multiple jittered images that together form a ‘Ground Truth’, so that the lower-resolution render looks much better when scaled.
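
The ‘Ground Truth’ idea can be illustrated without any AI. The sketch below renders a hypothetical scene (a hard, slanted edge) twice: once with one sample at each pixel centre, which gives jagged steps, and once by averaging many randomly jittered samples per pixel, which gives the smooth reference a network could be trained to imitate. The scene, image size and sample counts are our assumptions; Nvidia did not detail its training set-up, and the training itself is beyond a sketch.

```python
import random


def scene(x, y):
    """Hypothetical test scene: 1.0 below a slanted edge, 0.0 above it."""
    return 1.0 if y < 0.37 * x + 2.1 else 0.0


def render(width, height, samples, rng):
    """Average `samples` jittered points per pixel; samples=1 uses pixel centres."""
    img = []
    for j in range(height):
        row = []
        for i in range(width):
            if samples == 1:
                row.append(scene(i + 0.5, j + 0.5))
                continue
            total = sum(scene(i + rng.random(), j + rng.random())
                        for _ in range(samples))
            row.append(total / samples)
        img.append(row)
    return img


def mean_abs_error(a, b):
    diffs = [abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


rng = random.Random(0)
ground_truth = render(16, 16, 256, rng)   # many jittered samples per pixel
aliased = render(16, 16, 1, rng)          # one sample per pixel: jagged edge
print(f"1-sample error vs reference: {mean_abs_error(aliased, ground_truth):.3f}")
```

Edge pixels in the reference take fractional values that reflect how much of each pixel the shape covers, which is exactly the information a single centre sample throws away.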

Huang then showed some scenes of architectural design showing changes in a Solidworks model that showed up instantly in the real-time render – very impressive!

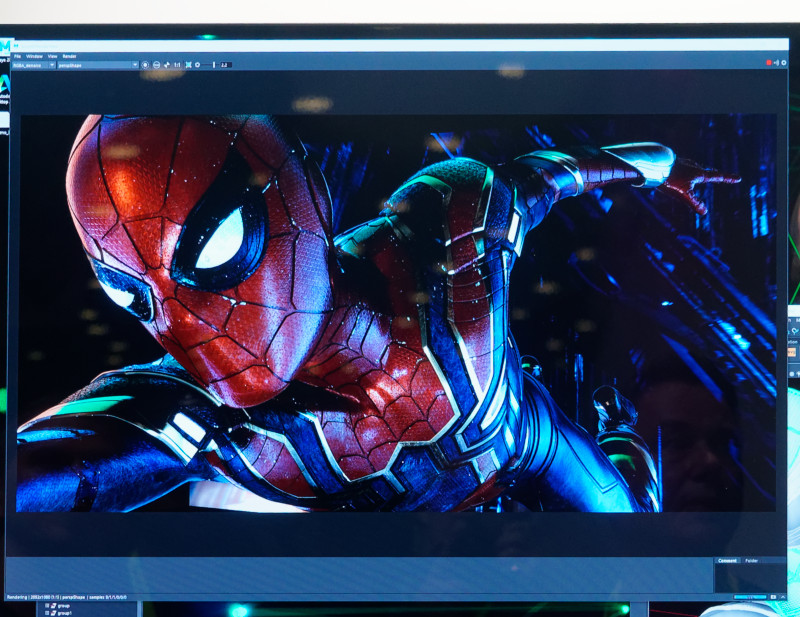

We took this photo on the booth, but the image was the same as Huang showed in his presentation (image:Meko)

Nvidia worked with the Autodesk Arnold team who, Huang said, “were blown away by the power”. Cinema quality is available in real time. Nvidia has created an RTX server with 8 GPUs for accelerating film production rendering; it can be used as a workstation or as a rendering server. As much as 20% of a $100 million movie might be spent on the render farm, and he showed a $2 million render farm based on 240 dual 12-core Skylake processors and using 144kW. Using four 8-GPU RTX servers instead, power consumption can be reduced to 13kW and cost by 75%, in 10% of the space.

A three-second shot (typical for a movie) can be rendered in one hour on a $500,000 system, compared to five or six hours on traditional architectures. That allows five or six scenes per day, which will change workflows. Huang had a list of twenty companies working on RTX support in professional graphics, and he said that he has never seen demand like it from software companies.
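
The render-farm figures can be cross-checked with simple arithmetic. The inputs below are the figures quoted in the talk, except the 24 frames per second film rate, which is our assumption. A 75% cut on the $2 million farm comes to $500,000, which appears to match the system price quoted for the one-hour shot.

```python
# Figures as quoted in the talk.
CPU_FARM_COST_USD = 2_000_000
CPU_FARM_KW = 144
RTX_SERVERS_KW = 13          # four 8-GPU RTX servers
COST_REDUCTION = 0.75
SHOT_SECONDS = 3
RTX_HOURS_PER_SHOT = 1

FPS = 24                     # assumption: standard film frame rate, not stated in the talk

power_ratio = CPU_FARM_KW / RTX_SERVERS_KW
rtx_cost_usd = CPU_FARM_COST_USD * (1 - COST_REDUCTION)
frames_per_shot = SHOT_SECONDS * FPS
seconds_per_frame = RTX_HOURS_PER_SHOT * 3600 / frames_per_shot

print(f"Power: {power_ratio:.1f}x lower")
print(f"RTX system cost: ${rtx_cost_usd:,.0f}")
print(f"{frames_per_shot} frames per shot, about {seconds_per_frame:.0f} s per frame")
```

On these assumptions the power draw falls about elevenfold, and a one-hour shot means roughly 50 seconds of rendering per final frame.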

The Quadro RTX range starts at $2,300 for the RTX5000, which has 16GB of frame buffer (32GB when two cards are linked with NVLink) and supports 6 gigarays/second. The RTX6000 has 24GB/48GB and supports 10 gigarays/second at $6,300. The ‘monster’ RTX8000 is at the top with 48GB/96GB and 10 gigarays/second, and costs $10,000. “The more you buy”, he said, “the more you save”.

Every system maker has jumped in for servers and workstations and there will be a wide variation in system configurations from all the main vendors. We checked out the connectivity to the card and as well as USB Type-C, the card supports VirtualLink and we have written that up separately. The interface supports graphics, fast USB for sensor data transmission back to the system and 27W power.

In summary, it’s the biggest announcement since CUDA, with 6X graphics performance allowing much more to be done for the same budget.

The final demo showed all the features.

Audio Ray Tracing

In another part of the show, Nvidia was showing ‘audio ray tracing’. Only a couple of weeks ago, after visiting Antycip, I wrote that few simulation users use audio because of the difficulty of keeping the audio spatially in sync with the visuals. At Siggraph, Nvidia showed me how the company can not only locate objects in space and synchronise them with the motion of the user, but can also modify the audio to reflect the reverberation of the spaces that the user is virtually in. I could hear a clear difference when the surfaces in a room were shiny or soft, hear the change in the audio as I went through a door, and hear the gradual change as a sliding door came across. As the partition was made of glass, I could still hear something through it.

This kind of simulation would be great for architects to use to check the effect of different design decisions, such as the use of different materials and other design details. This kind of technology should be incredibly useful in simulators to add to the realism.
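
Nvidia’s system traces individual sound paths, which is far more detailed than anything shown here, but the classic Sabine formula (RT60 = 0.161 V / A, with V the room volume in cubic metres and A the total absorption in metric sabins) already shows why surface materials change what you hear so clearly. The room size and absorption coefficients below are illustrative assumptions, and Sabine’s estimate is known to be rough for very absorbent rooms.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine estimate of reverberation time (seconds to decay by 60 dB).

    surfaces: list of (area_m2, absorption_coefficient) pairs.
    """
    absorption = sum(area * alpha for area, alpha in surfaces)   # metric sabins
    return 0.161 * volume_m3 / absorption


def box_room(length, width, height, alpha):
    """Hypothetical rectangular room with one absorption coefficient everywhere."""
    areas = [length * width] * 2 + [length * height] * 2 + [width * height] * 2
    return length * width * height, [(a, alpha) for a in areas]


# Assumed coefficients: roughly 0.03 for hard, shiny surfaces, 0.4 for soft ones.
for label, alpha in [("hard, shiny surfaces", 0.03), ("soft furnishings", 0.4)]:
    volume, surfaces = box_room(5.0, 4.0, 3.0, alpha)
    print(f"{label}: RT60 about {sabine_rt60(volume, surfaces):.2f} s")
```

In this 5m x 4m x 3m example the shiny room rings for over three seconds while the soft one dies away in about a quarter of a second, the kind of difference an architect could evaluate before a building exists.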

The technology can work with existing GPUs but the big new RTX GPU should be able to make it better still and it is already very impressive. I suspect that Nvidia can use the AI features of the new RTX to train the system to be even better.

It would be great if building designers could actually take the audio qualities of their buildings into account. After all, we must have all been in places where, for example, public announcements simply couldn’t be heard, or environments with so much harsh noise that they are simply not comfortable to be in for any length of time.

RTX for VR

RTX is going to be very important for VR and can be used for pre-warping images to correct for optical distortion. Since the ‘Maxwell’ product line, Nvidia has been developing special technology for VR, although originally this basically meant improving performance by reducing the resolution at the edges of images, where the lenses are not so good. Early on, the image was basically divided into nine regions (like Tic Tac Toe) and the pixels near the edges were simply ‘smooshed together’ (a technical term in this context!).

From Pascal onwards, Nvidia had a technique called ‘lens matched shading’ (LMS), which graded the resolution continuously outward from the middle of the image, giving a much smoother result.
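
The saving from the nine-region approach is easy to estimate. Assuming (our assumption; Nvidia’s actual split positions and scale factors were not given) that the centre band covers a fraction of the width and height at full resolution and the outer bands are rendered at a reduced scale along each axis, the shaded-pixel fraction is separable in x and y.

```python
def multires_shaded_fraction(centre_frac, edge_scale):
    """Fraction of full-resolution pixels shaded with a 3x3 multi-resolution split.

    centre_frac: share of width (and height) taken by the full-resolution centre band.
    edge_scale: resolution scale applied to the outer bands along each axis.
    Corner cells are scaled on both axes, edge cells on one, so the result
    is the square of the per-axis share.
    """
    edge_frac = 1.0 - centre_frac
    per_axis = centre_frac + edge_frac * edge_scale
    return per_axis * per_axis


print(f"{multires_shaded_fraction(0.6, 0.5):.2f} of full-resolution pixels shaded")
```

With a 60% centre band and half-resolution edges, only about 64% of the pixels are shaded; lens matched shading replaces these hard steps with a continuous fall-off.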

The new generation has a new API and supports Variable Rate Shading, which is important for matching the frame rate to VR headsets. The image is rendered into a buffer and then processed using ‘many shaded samples’ per pixel. After that, a look-up process provides the image for the eyepiece, and the part of the image that is of interest can be optimised. The technique supports both foveated rendering and optics matching. The processor also supports motion adaptive shading, which lets designers exploit the trade-off between high resolution and fast motion in human vision.
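
Foveated Variable Rate Shading boils down to choosing, tile by tile, how many pixels share one shading result. The Python sketch below does not use any real graphics API; the three rates, the radii and the tile grid are illustrative assumptions. It assigns full-rate shading near the gaze point and coarser 2x2 and 4x4 blocks further out, then reports the relative shading work.

```python
import math

# Shading rates as (x, y) pixel block sizes; one shading result per block.
RATES = [(1, 1), (2, 2), (4, 4)]


def shading_rate(tile_x, tile_y, gaze_x, gaze_y, inner_radius, outer_radius):
    """Pick a coarser rate for tiles further from the gaze point (radii in tiles)."""
    dist = math.hypot(tile_x - gaze_x, tile_y - gaze_y)
    if dist <= inner_radius:
        return RATES[0]      # full rate where the eye is looking
    if dist <= outer_radius:
        return RATES[1]      # one shade per 2x2 block
    return RATES[2]          # periphery: one shade per 4x4 block


def shading_cost(width_tiles, height_tiles, gaze, inner, outer):
    """Relative shading work versus shading every pixel at full rate."""
    total = 0.0
    for ty in range(height_tiles):
        for tx in range(width_tiles):
            rx, ry = shading_rate(tx + 0.5, ty + 0.5, *gaze, inner, outer)
            total += 1.0 / (rx * ry)
    return total / (width_tiles * height_tiles)


print(f"relative cost: {shading_cost(40, 40, (20, 20), 6, 14):.2f}")
```

Moving the gaze point each frame, from eye tracking or simply fixed at the lens centre, turns the same function into foveated rendering or optics matching respectively.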

Turing also has enough power to make the processing content sensitive – that is to say, the system can detect if the image is out of focus or has a very shallow depth of field, and can decide whether to try some processing.

The first generations of the algorithm used simple offset geometry to adjust for the eyes, allowing just one geometry pipeline with dual shaders. Now, however, four full images can be processed, supporting displays at different angles. That is one of the reasons there is a lot of shared interest between Nvidia and StarVR, which uses dual angled displays. The ability to support four different zones will be particularly important for multi-resolution approaches like that from Varjo, which uses high resolution in the centre of the frame and lower resolution in the periphery.

Analyst Comment

We went around the booth and saw the demos live, which were very impressive. A very close inspection showed very slight artefacts on fine edges for a couple of frames during live ray traced demos. However, the engineers have only had the chips for ‘a few weeks’, so there is time to improve. We did discover that the RTX5000 and RTX6000 GPUs will be available in Q4, while the RTX8000 will be ‘later’. Key to the new system, we heard, is the de-noising technology, which Nvidia presented last year as a software solution and has implemented in hardware this year.

The AI technology looks as though it will also have a significant impact on video. As Samsung has said in its comments on up-scaling, AI can be really useful, and the amount of power that Nvidia can bring to bear on the task made the 4K up-scaling demos that we saw very good indeed. The AI can also be used to improve noise reduction, ‘slo-mo’ creation, inpainting and line removal.

This really was a revolutionary breakthrough that will have big impacts on architecture, engineering, marketing, animation and visual effects, immediately. Of course, eventually, Nvidia will be looking to bring the technology from $thousands to $hundreds, although without Moore’s Law, that will be a challenge as this is a very big chip. (BR)

Movies can be rendered six times faster: one hour per scene, not six. Staff were switching to wireframes on the fly to show it was real time. Image:Meko