Media over IP (MoIP) has made impressive strides forward recently. One sign of this progress is the publication of “NABA Media over IP Sub-Committee: Report to the NABA-TC” by the North American Broadcasters Association (NABA). The NABA calls itself a sister organization to the EBU and covers the US, Mexico and Canada. To distribute the information in this 94-page report, the NABA, in association with the Alliance for IP Media Solutions (AIMS), the Society of Motion Picture and Television Engineers (SMPTE) and the Video Services Forum (VSF), held a full-day workshop on the technology. The workshop was hosted by NBCUniversal in New York on October 16, 2017. This report on MoIP can be thought of as a follow-on to my Display Daily from June 13th titled “Is Video over IP Ready for NAB and InfoComm?” The short answer from the NABA and the participants in this workshop is “yes,” at least for NAB. There was no discussion of MoIP in an InfoComm/Pro AV application. There was also no discussion of cinema content.

Full members of the NABA. There are also 9 associate and 14 affiliate members (Credit: NABA)

While the technology was called “Media over IP” rather than “Video over IP”, I suspect that was mostly to distinguish the technology’s acronym MoIP from VoIP, commonly used for “Voice over IP” and sometimes used for “Video over IP.” When I talked to the representative of National Public Radio (NPR) at the social hour after the meeting, he told me that audio, e.g. radio, has been exclusively IP-based for about a decade. The presentations were essentially 100% related to video, not the audio portion of media. For audio, the MoIP community has adopted the Audio Engineering Society’s AES67 and re-labeled it SMPTE ST-2110-30, PCM Audio.

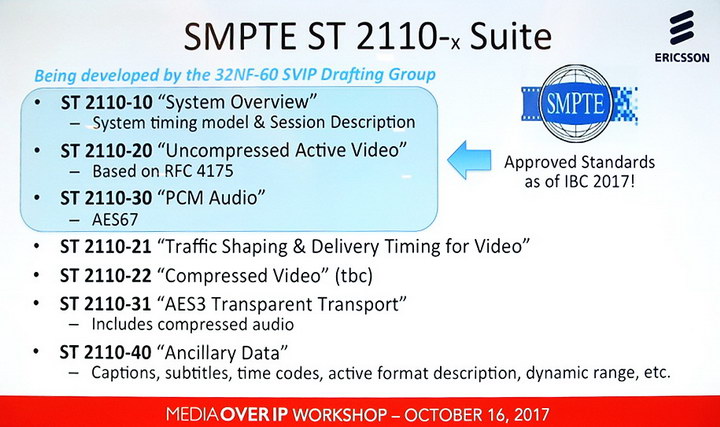

Of the four sponsors of the conference, SMPTE is the only standards-writing organization. As such, the SMPTE ST-2110 suite of standards is expected eventually to govern MoIP for the broadcast and content creation communities. Three of the standards in this suite have already been approved, as shown in the image, and the others are under development. The workshop focused on broadcast, streamed and OTT content, including live content.

The SMPTE ST-2110-X Suite of standards that will govern MoIP presented by Matthew Goldman, SVP Technology at Ericsson and current SMPTE President. (Source: MoIP Workshop)

Besides SMPTE ST 2110, there is another existing standard, SMPTE ST 2022-6, SDI over IP. This is the basis of most current MoIP installations, and the ST 2110 family of standards is backwards-compatible with ST 2022. In fact, some speakers at the workshop were careless and used ST 2110 and ST 2022 almost interchangeably, but the workshop was mainly about ST 2110.

In the past, and still largely in the present, real-time video streams have been carried via Serial Digital Interface (SDI) connections. The original SDI from SMPTE 259M and ITU-R BT.656 has been followed by a series of faster but similar interfaces, including ED-SDI, HD-SDI, 3G-SDI, 6G-SDI and 12G-SDI. SDI development has not ended with the beginnings of MoIP, since SDI is likely to be commercially important for years to come, even if it is mostly used in the many legacy video installations.

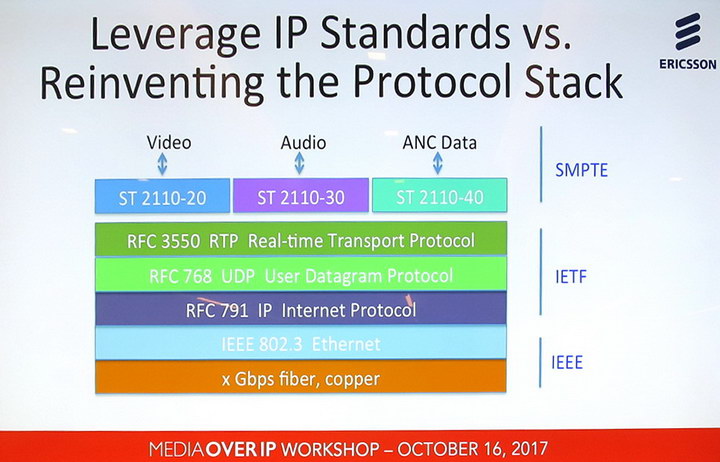

These SDI interfaces have one major thing in common: all levels of the protocol stack are determined by the video industry, e.g. SMPTE and the many manufacturers of SDI-based hardware. Since these are dedicated video interfaces, the sales volumes are relatively small compared to IP hardware, driving costs up. Dedicated hardware includes not just the cables carrying SDI signals but the transmitters, receivers, connectors, switchers and servers for the signals, plus the custom ICs needed to process SDI video. One of the major goals of MoIP is to have the video industry, e.g. SMPTE, define only the top, video-specific level of the protocol stack, not the lower IP levels, as shown in the image. This would allow the use of generic commercial off-the-shelf (COTS) IP hardware, including cables, servers, switchers and processors, for real-time video.

MoIP Protocol stack, with only the top level being video-specific. (Source: MoIP Workshop)

The use of commodity Ethernet switches, servers and cables is expected to do more than reduce cost: it is expected to significantly enhance the flexibility and agility of video plants such as post production facilities, control rooms and production trucks for sports and other remote live productions. For production trucks, there is another major advantage: saving weight. A relatively heavy SDI cable can only carry one video signal point-to-point, while a lighter Ethernet cable or fiber can carry multiple video signals.

In terms of switchers, a common SDI switcher is 576 x 1152. Four of these can be run together as a 1152 x 2304 SDI switch, and that’s just about the biggest that is currently feasible. According to Thomas Edwards, VP of Engineering and Development at Fox, this is barely enough for a large, live video project. On the other hand, Tim Canary, VP, Engineering, NBC Sports, said IP routers (switchers) can currently be 1152 x 1152 and are expected to reach 6912 x 6912 in the near future. In the long term, the needs for switching video signals are expected to reach 13,824 x 13,824 for 1080i signals. He said this is expensive but practical with IP routers, while SDI routers are expected to top out at about 2304 x 2304. Thus MoIP will allow broadcasters to do things that simply would not be possible if they stick with dedicated video interfaces in the SDI family.
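The switcher sizes quoted here are easier to compare as arithmetic. The following is a rough sketch of my own, not something shown at the workshop: it treats each router as a simple matrix and counts the input-to-output pairs it can address. The helper name `io_pairs` is hypothetical, and since an IP fabric is not literally built from crosspoints, the count is only a proxy for scale.

```python
def io_pairs(rows: int, cols: int) -> int:
    """Number of distinct input-to-output pairs for a router of the given matrix size."""
    return rows * cols

single_sdi = io_pairs(576, 1152)          # one common SDI switcher
ganged_sdi = io_pairs(1152, 2304)         # four of them run together
long_term_ip = io_pairs(13_824, 13_824)   # long-term 1080i target quoted in the text

# The ganged switch addresses exactly four times the pairs of a single unit.
print(ganged_sdi == 4 * single_sdi)       # True

# The long-term target is this many times larger than the biggest feasible SDI switch.
print(long_term_ip // ganged_sdi)         # 72
```

On this crude measure, the long-term requirement is 72 times the size of the largest SDI switch described as feasible today.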

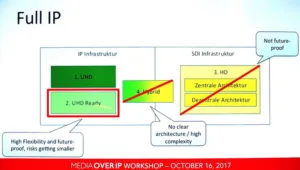

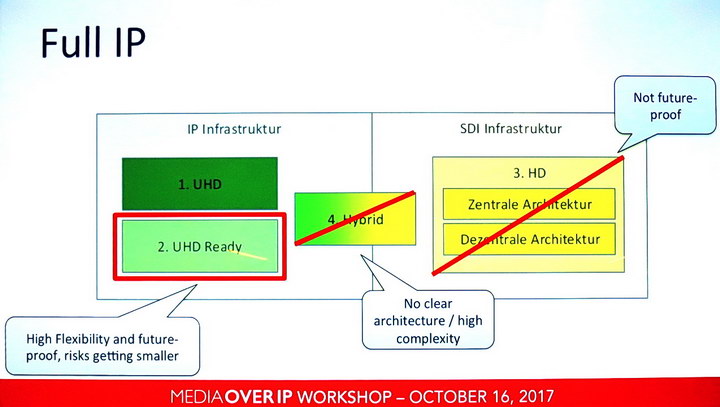

Andreas Lattmann is the CTO of tpc Switzerland ag (TPC), said to be the leading broadcast service provider in Switzerland. He described a project aimed at creating a new building, increasing the firm’s capabilities and adding a complete new control room. Several technology possibilities were evaluated and in the end, a UHD-ready IP infrastructure was chosen. Centralized and decentralized SDI infrastructures were rejected by TPC because they were not future-proof and a hybrid architecture was rejected because it was complex and not standardized.

Control room architecture possibilities considered by tpc Switzerland ag. (Source: A. Lattmann at the MoIP Workshop)

System Timing

The Internet and, by extension, Internet Protocol (IP) was originally designed by the US military as a very robust way to deliver messages even in the face of network node or link failures. The example used by one of the speakers was delivering a message from Washington to NORAD, even in the case where Chicago was a smoking hole. IP will automatically route the signal via an available channel. Because of this, you can never be sure exactly when a signal will be delivered, even if you are sure it will be delivered.

Video has very different requirements – exact synchronization of signals at the microsecond level is required. This is one of the reasons why dedicated video hardware systems such as SDI were originally developed.

Part of this problem is (mostly) solved by SMPTE ST 2059, which is the implementation of the IEEE 1588 Precision Time Protocol (PTP). This allows clocks in assorted network-connected devices to be synchronized to each other at the sub-microsecond level. While PTP was originally developed in 2002 for applications such as process control, it has found multiple applications where its accuracy is needed, such as astronomy, high-speed stock trading and video. One problem with PTP is that it works best when synchronizing a limited number of clocks. This number is sufficient for a normal-sized MoIP installation, but if you have a large system with many clocks and a fairly wide geographical distribution (e.g. with sections in different parts of a large city such as Los Angeles), the quality of the clock synchronization degrades. This can be overcome by dividing the system into two PTP domains, each with its own master and many slave clocks.
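At its core, PTP estimates a slave clock's offset from four timestamps exchanged with the master. The sketch below shows the basic IEEE 1588 delay request-response arithmetic, assuming the network path is symmetric. The function name and the example timestamps are hypothetical, and real implementations add filtering and hardware timestamping that are not shown here.

```python
def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> tuple[float, float]:
    """Estimate slave clock offset and one-way path delay, in the units of the inputs.

    t1: master sends Sync           (read on the master clock)
    t2: slave receives Sync         (read on the slave clock)
    t3: slave sends Delay_Req       (read on the slave clock)
    t4: master receives Delay_Req   (read on the master clock)
    Assumes the path delay is the same in both directions.
    """
    offset_from_master = ((t2 - t1) - (t4 - t3)) / 2
    mean_path_delay = ((t2 - t1) + (t4 - t3)) / 2
    return offset_from_master, mean_path_delay

# Hypothetical exchange, all values in nanoseconds:
# the slave clock runs 1,500 ns ahead and each direction takes 4,000 ns.
t1 = 100_000
t2 = 105_500   # 100,000 + 4,000 path delay + 1,500 offset
t3 = 120_000
t4 = 122_500   # 120,000 - 1,500 offset + 4,000 path delay

offset_ns, delay_ns = ptp_offset_and_delay(t1, t2, t3, t4)
print(offset_ns, delay_ns)   # 1500.0 4000.0
```

The slave then corrects its clock by the estimated offset. If the two directions of the path are not equally long, half of the asymmetry shows up as an error in the offset, which is one reason network design matters for PTP accuracy.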

Another problem with MoIP is latency. One speaker said that you would think that three 1 Mbit/s video streams could easily fit in a 10 Mbit/s Ethernet pipe. Unfortunately, it isn’t that simple. Video tends to have bursts of data, and if the three streams happen to have bursts at the same time, you have problems. The IP network will eventually sort out all the packets in all three streams, but the latency may differ from what you want or need for video.
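A back-of-the-envelope calculation shows why coinciding bursts hurt even on a lightly loaded link. This is a minimal sketch under assumptions of my own, not figures from the workshop: each stream sends one 40,000-bit burst every 40 ms (an average of 1 Mbit/s), all three bursts reach the 10 Mbit/s link at the same instant, and the link serves them first-in, first-out.

```python
LINK_BPS = 10_000_000       # 10 Mbit/s link, as in the speaker's example
BURST_BITS = 40_000         # ASSUMPTION: size of one burst per stream
BURST_PERIOD_US = 40_000    # ASSUMPTION: one burst every 40 ms
N_STREAMS = 3

# Average rate of one stream and average load on the link.
avg_stream_bps = BURST_BITS * 1_000_000 // BURST_PERIOD_US
link_load_percent = 100 * N_STREAMS * avg_stream_bps // LINK_BPS

# Time to push one burst onto the link, in microseconds.
burst_tx_us = BURST_BITS * 1_000_000 // LINK_BPS

# If all bursts arrive together, each one waits for those ahead of it in the queue.
finish_times_us = [(i + 1) * burst_tx_us for i in range(N_STREAMS)]

print(avg_stream_bps)       # 1000000
print(link_load_percent)    # 30
print(finish_times_us)      # [4000, 8000, 12000]
```

The link is only 30% loaded on average, yet the last stream's burst finishes at 12 ms instead of 4 ms. The extra 8 ms is pure queueing delay, and it appears only when the bursts happen to line up.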

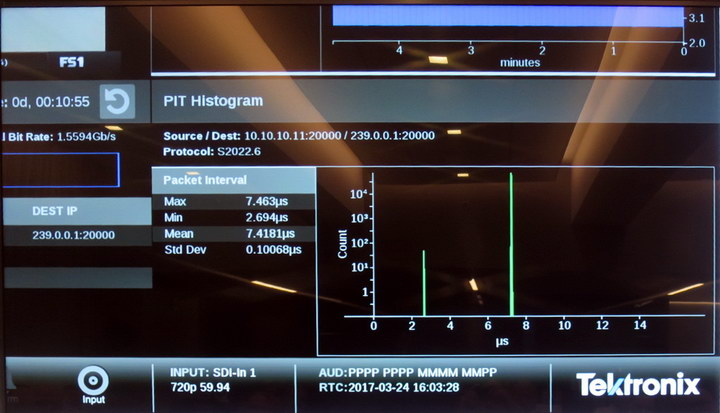

Packet latency with a Nevion VS902 multi-format IP media transport card over a 5-minute time span. While most packets arrive with a 7.463µs latency, some arrive with only a 2.694µs latency. (Source: MoIP Workshop)

The latency in the system will depend on a wide variety of factors, including the number of streams and the capabilities of the IP routers and switchers, which are brand dependent. Results of a rather extensive study of this problem were presented at the workshop. The latency of different packets under any level of system stress varies.

In a relatively unstressed system, the latency was shown to vary from a minimum of 2.7µs to a maximum of 7.5µs, with the bulk of the packets at the 7.5µs latency. With moderate stress and different hardware, the experiment showed latency varied from a minimum of 1.2µs to a maximum of 1.0ms, with an average of 7.4µs. These two results are probably workable and would not cause system problems. But with maximum stress, latency varied from 0ms (presumably actually the 1.2µs minimum transmission time with no delay) to 12.6ms, with an average latency of 7.4µs. This is the sort of stress that could be expected in a virtualized MoIP system in a public or private cloud where the video system is competing with other video or non-video applications for the cloud resources. In the very worst case, this can lead to lost frames or even a meltdown in the system and complete failure of the video signal. Excuse me, I know it’s the Super Bowl, but please stand by while I reboot all my routers.

Clearly this is a problem that needs to be solved before cloud-based MoIP can be implemented.
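One way to see why the maximum matters more than the average is to ask how much data a receiver must buffer to ride out the spread between the fastest and slowest packets. The sketch below uses the minimum and maximum latencies reported above. The 1.5 Gbit/s stream rate is my own assumption (roughly an uncompressed HD signal), not a figure from the workshop, and the function name is hypothetical.

```python
STREAM_BPS = 1_500_000_000  # ASSUMPTION: ~1.5 Gbit/s stream, not a workshop figure

# (minimum, maximum) latency in nanoseconds for the three reported test cases.
cases_ns = {
    "unstressed": (2_700, 7_500),
    "moderate stress": (1_200, 1_000_000),
    "maximum stress": (0, 12_600_000),
}

def dejitter_bytes(min_ns: int, max_ns: int, stream_bps: int) -> int:
    """Bytes that arrive during the latency spread (max - min), rounded up to whole bytes."""
    spread_ns = max_ns - min_ns
    bits = spread_ns * stream_bps // 1_000_000_000
    return -(-bits // 8)  # ceiling division

buffer_bytes = {
    name: dejitter_bytes(lo, hi, STREAM_BPS) for name, (lo, hi) in cases_ns.items()
}
for name, size in buffer_bytes.items():
    print(f"{name}: {size:,} bytes")
# unstressed: 900 bytes
# moderate stress: 187,275 bytes
# maximum stress: 2,362,500 bytes
```

The average latency is almost identical in the last two cases, but under these assumptions the buffer needed grows from under 200 KB to more than 2 MB per stream, and every byte of buffer is added delay.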

Security

As everyone who reads the daily newspaper knows, Internet security is a major problem and all IP-based systems are subject to hostile hacking attacks. Unfortunately, the discussion of security for MoIP was minimal at the workshop and, frankly, I found it unacceptable. The security discussion was focused on just one issue: piracy of the content. Pretty much a single solution was proposed: isolate the MoIP network from the Internet in general. Think of it, for example, as “The Great Firewall of NBCUniversal.”

This approach to security has two problems. First, there are other things hackers can do besides steal content. There is ransomware, where nothing is stolen, just encrypted and left in place. There are denial-of-service attacks, viruses, Trojan horses, etc., and the security of a MoIP system must deny all of these different types of attacks a foothold.

Second, isolation alone is not sufficient security. For one thing, when an IP system gets big enough, there is inevitably going to be a disgruntled employee or contractor who has access to the system. For another, no MoIP system is likely to be completely isolated (Just consider the current problems with Wi-Fi security – Man. Ed.). In the short term, managers are going to want to be able to see progress on projects under their control.

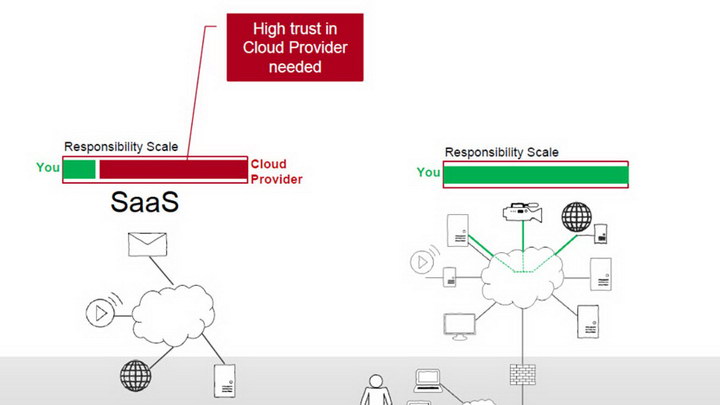

In an SaaS public cloud system, the cloud provider is largely responsible for security. In a private cloud MoIP system, the system owner is responsible for 100% of the security. (Source: A. Schneider, via the EBU)

“Virtualization” was one of the buzzwords used in the MoIP workshop by several speakers and is seen as the end product of the move from dedicated video hardware to IP hardware. According to the NABA, “The move to virtualized network and server infrastructure in on premise, off premise or hybrid cloud environments is one of the most desired infrastructure changes for broadcasters.”

“It is important that security becomes a minimum quality requirement for [cloud MoIP] broadcast systems,” said Andreas Schneider, Chief Information Security Officer (CISO) at Swiss broadcaster SRG, at a recent EBU Media Cybersecurity Seminar in Geneva.

To me, this virtualization meant that there would be an Ethernet-connected camera at one end of the video chain and an Ethernet-connected display at the other end of the chain. In between, there would be no dedicated video hardware at all: no video processors, no storage, no video switchers. Everything would be done on a public or private cloud, and the resources needed would be shared resources that can be repurposed for multiple tasks. Isolation of a system like this, especially if a public cloud is used, is virtually impossible. Even speakers at the MoIP Workshop who pointed out problems with this cloud-based model, such as shared resources never being enough under some circumstances, saw these problems as issues to be overcome, not reasons to not implement virtualization.

JT-NM Roadmap

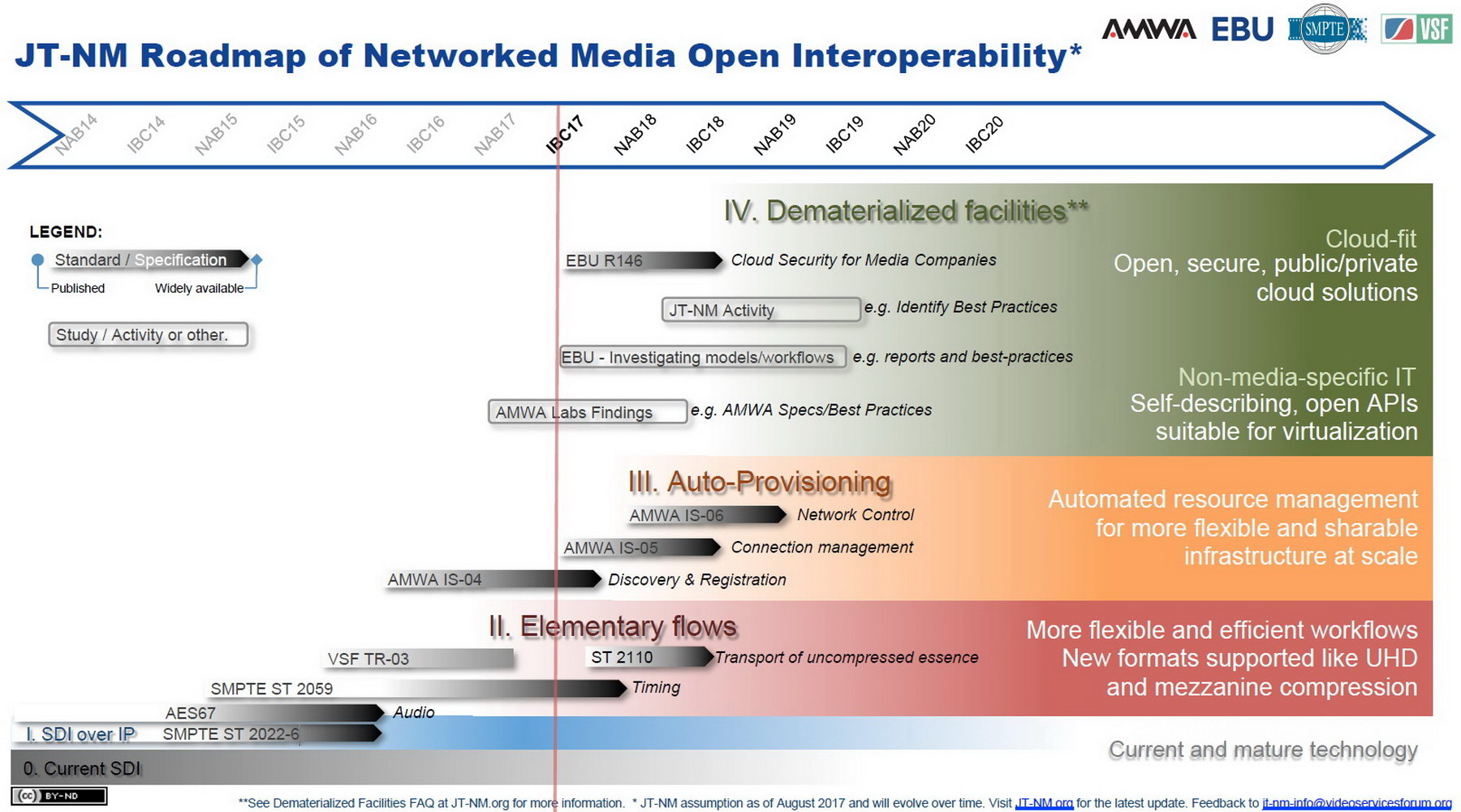

Many of the speakers referred to the JT-NM roadmap, which was treated by everyone as the official guide to the status and future directions of MoIP. The current version of it was announced at IBC 2017 and is shown in the figure.

The Joint Task Force on Networked Media (JT-NM) is sponsored by the Advanced Media Workflow Association (AMWA), the European Broadcasting Union (EBU), SMPTE and the VSF. One thing the JT-NM does is sponsor an Interop event at IBC and NAB. (I have written about the one at NAB 2017.) Each Interop event has hardware from multiple vendors all connected together via Ethernet to show their MoIP compatibility. At IBC 2016, there were 34 participants, of which 13 were ST 2110 capable. At NAB 2017 the event had 41 participants and at IBC 2017 there were 52 participants, with all participants at NAB 2017 and IBC 2017 being ST 2110 capable. Clearly the vendor base for MoIP is growing, as is the adherence to the ST 2110 standards.

JT-NM Roadmap announced at IBC 2017 (Source: JT-NM)

One interesting feature of this roadmap is that it is not divided by dates. Rather, the timeline is marked by NAB and IBC meetings, which take place in April and September respectively.

Level 0 of this roadmap is the current SDI infrastructure, shown to be eventually fading away. Level 1, SDI over IP, and Level 2, Elementary flows, are largely in place now. The standards for these levels draw on standards from other bodies, especially AES67 for audio and SMPTE ST 2059, which is the IEEE 1588 PTP standard re-packaged for video use. While the VSF TR-03 isn’t officially a “standard,” most people making MoIP equipment or software adhere to its recommendations, which will eventually be largely incorporated into ST 2110.

Level 3 of this timetable deals primarily with automated resource management and allows a MoIP system to keep track of available resources and ensure they are properly used. This is largely a software process, not a hardware process. This level is not yet in place except as AMWA IS-04. While this also isn’t a “standard,” it is generally adhered to by the software designers.

Level 4, the highest level, will guide virtualization or “Dematerialized Facilities.” This level is either in the study phase (AMWA Best Practices) or in the planning stage for studies to start in the future. The only document specifically on course for this stage is EBU R 146, Cloud Security for Media Companies. Not only has this not been approved yet, it isn’t a standard either, only a recommendation. There’s a lot of work to be done before virtualized media production using MoIP becomes a reality.

On the other hand, the first key steps of MoIP are here now and in use in real media applications. MoIP has also been designed into green-field control rooms and media production trucks. MoIP is here and, like it or not, media companies will need to adopt it. In general, however, they seem to like it for the cost savings and flexibility it provides. –Matthew Brennesholtz