Over the last year, much of the discussion in the TV business has been about more, better and faster pixels. We’ve covered UltraHD, high dynamic range (HDR) and wide colour gamut (WCG), as well as higher frame rates (HFR). However, there is, to coin a phrase, an elephant in the room.

TVs use a great deal of power, and the EPA in the US gives a sense of the scale: “If all televisions sold in the United States were ENERGY STAR certified, the energy cost savings would grow to more than $620 million each year, preventing 9 billion pounds of annual greenhouse gas emissions, equal to those from more than 885,000 vehicles”. Bear in mind that this is not the total that TVs consume; it is just how much would be saved if all US TVs met the Energy Star requirements. In Europe, too, TVs are subject to regulations on maximum power, and retailers have to tell consumers how each TV rates on the energy label.

The power authorities have to make their plans based on assumptions about the development of the market. I remember reading, some years ago, the EPA white paper that justified permitting mercury in the CCFLs used in the backlights of early LCD TVs. The logic was that some heavy metals, including mercury, are released into the atmosphere by power generation, especially the burning of coal. Because LCDs used less power than CRTs, the calculation showed that the mercury in the CCFLs was less than the mercury that would be released in generating the additional power used by CRTs.

That’s a good ‘holistic’ approach to the problem, but by the time I read the paper, some time after it was written, average TV sizes were growing rapidly following the introduction of LCD. The original document did not take the change in screen sizes into account and assumed that sets would be replaced at the same size. I remember wondering whether the same conclusion would have been reached if the calculation were repeated with the increase in screen size included.

Now, average TV sizes are growing, as we reported from the IHS Business Conference in our SID report, and so power consumption may be going up. Furthermore, HDR, WCG and UltraHD all make the TV less economical in power usage. A well-modulated direct-lit LED set should use less power than an edge-lit set, especially if it has good dynamic dimming, but if you boost the peak brightness to 1,000cd/m² or more, you will offset some of the advantage.

There are more transistors and more black masking in an UltraHD set than in a FullHD set, so the aperture ratio goes down and more power is needed for the same brightness (although some of this effect could be offset by using IGZO or other oxide TFTs, which can be smaller).

Wide colour gamut always means more power consumption for the same luminance because of the eye’s response to colour, although a WCG display may deliver the same apparent brightness at a lower luminance because of the Helmholtz-Kohlrausch (H-K) effect, in which more saturated colours look brighter.

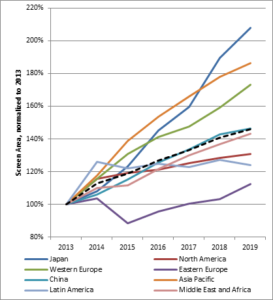

At the recent SID Business Conference, Paul Gray of IHS asked if the industry was really ready to deal with the power issues. Since the conference, he has done an analysis of the increase in area of new TV sales in a number of regions.

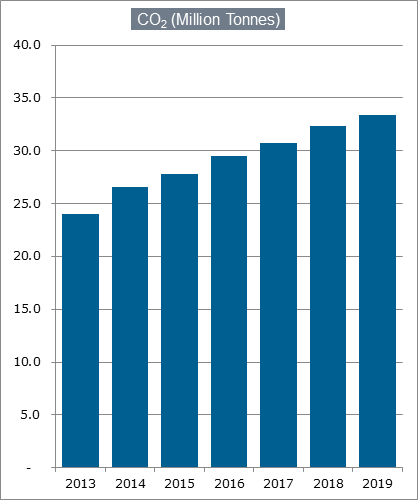

He then took the top limit of the California Energy Commission 2009 specifications for TVs in 2013 and converted the area change to CO2 production, with this result.

That’s not heading in the direction that energy regulators want!

As a matter of interest, I thought I’d check out a typical 55″ set with high-end features and compare the power consumption to the Californian regulation. This allows up to 188W (0.12 × area in square inches + 25). However, when I looked on the Samsung website, there was no power consumption quoted. The owner’s manual doesn’t include the data and the “quick guide” says how power consumption is calculated, but doesn’t quote a number.
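For readers who want to try other screen sizes, the limit formula quoted above can be sketched in a few lines of Python. This is only an illustration: the function names are mine, and the 16:9 aspect ratio and the use of the nominal diagonal to derive the area are assumptions, not something the regulation text is being quoted on here.

```python
import math


def screen_area_sq_in(diagonal_in, aspect_w=16, aspect_h=9):
    """Area in square inches of a panel with the given diagonal (16:9 assumed)."""
    scale = diagonal_in / math.hypot(aspect_w, aspect_h)
    return (aspect_w * scale) * (aspect_h * scale)


def power_limit_w(area_sq_in):
    """Limit from the formula quoted in the article: 0.12 x area (sq in) + 25."""
    return 0.12 * area_sq_in + 25


area_55 = screen_area_sq_in(55)
limit_55 = power_limit_w(area_55)
print(f"55 inch: area = {area_55:.0f} sq in, limit = {limit_55:.0f} W")
```

With a nominal 55″ 16:9 diagonal, this sketch gives an area of about 1,293 square inches and a limit of roughly 180W, a little below the 188W quoted above, so the answer is evidently sensitive to the area figure that is assumed.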

Now, my life has been improved ever since somebody said to me, “Never ascribe to malice what can be explained by inefficiency”, so I’m prepared to assume that Samsung left it out by mistake. On checking a retailer’s site, I found the power quoted at 110W, although there is no clear indication of the picture conditions. The nearest equivalent set I could find from Samsung that doesn’t have HDR, WCG or UltraHD has a power consumption of 83W, which is 24.5% less. Both fit the European A+ class, the retailer says.

However, I did the calculation for the SUHD set, and by my reckoning, it should have an A rating, not an A+ (Energy Efficiency Index of 0.286).
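The calculation can be sketched as follows. This is my reconstruction rather than an official tool: it assumes the EU TV labelling formula, in which the Energy Efficiency Index is the on-mode power divided by a reference power of P_basic + 4.3224W per dm² of screen area, a 16:9 panel with a nominal 55″ diagonal, and a P_basic of 24W (the value for sets with a hard disc or more than one tuner, which is the one that reproduces 0.286). The class boundaries in the table are likewise taken from that scheme, and all names in the code are illustrative.

```python
import math

SQ_IN_TO_DM2 = 0.064516  # 1 in = 0.254 dm, so 1 sq in = 0.254 ** 2 dm^2

# Upper EEI bound for each label class (assumed from the EU TV label scheme).
CLASS_UPPER_BOUNDS = [
    ("A+++", 0.10), ("A++", 0.16), ("A+", 0.23), ("A", 0.30), ("B", 0.42),
]


def screen_area_dm2(diagonal_in, aspect_w=16, aspect_h=9):
    """Screen area in dm^2 for a given diagonal in inches (16:9 assumed)."""
    scale = diagonal_in / math.hypot(aspect_w, aspect_h)
    return (aspect_w * scale) * (aspect_h * scale) * SQ_IN_TO_DM2


def energy_efficiency_index(power_w, area_dm2, p_basic_w=24.0):
    """EEI = on-mode power / reference power (P_basic of 24 W is an assumption)."""
    return power_w / (p_basic_w + 4.3224 * area_dm2)


def label_class(eei):
    """Return the first class whose upper bound the EEI falls below."""
    for name, upper in CLASS_UPPER_BOUNDS:
        if eei < upper:
            return name
    return "C or lower"


area = screen_area_dm2(55)
eei_suhd = energy_efficiency_index(110, area)   # retailer's figure for the SUHD set
eei_plain = energy_efficiency_index(83, area)   # nearest set without HDR/WCG/UltraHD
saving_pct = (110 - 83) / 110 * 100

print(f"SUHD set:  EEI = {eei_suhd:.3f}, class {label_class(eei_suhd)}")
print(f"Plain set: EEI = {eei_plain:.3f}, class {label_class(eei_plain)}")
print(f"The plain set uses {saving_pct:.1f}% less power")
```

Under these assumptions the 110W set comes out at an EEI of 0.286 (class A) and the 83W set at about 0.216 (class A+). Using a P_basic of 20W instead moves the SUHD figure to about 0.289, which is still class A.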

Of course, an A rating is still not bad for a high-end TV, but it shows that the effect of these technologies, combined with increasing set size, may work against the efforts of energy regulators around the world.

(We contacted Samsung’s PR company to check the power ratings, but, at press time, had not heard what the power/rating was.) – Bob Raikes