In case you missed it, your Gmail, Photos and other Google apps are about to change in some very interesting ways, and that’s just the tip of the iceberg of what AI-powered programming can deliver to the masses.

It’s all thanks to Google’s Tensor AI chip initiative and the recently announced next-generation TPU (short for Tensor Processing Unit), TPU 3.0, presented last week by Google CEO Sundar Pichai at the company’s yearly developer event, Google I/O.

The coming changes include a feature called “smart compose” in your Gmail account. This feature uses AI to auto-suggest not just words but complete sentences while you compose an e-mail. It’s an extension of the current “auto reply” feature, which predicts and then offers likely answers to e-mails for users to select. The “smart compose” feature resides at the bottom of the compose e-mail window.

Google CEO Sundar Pichai demonstrating the company’s AI TPU 3.0 chip technology at Google I/O 2018

Google Photos will also get an AI upgrade that lets users automatically colorize a black-and-white photo, or remove the background colors in an image so the foreground subject stands out in full color saturation. Both features were highlighted in the keynote presentation given by Pichai at the start of Google I/O 2018 last week in Mountain View, CA.

The feature I liked best in the keynote presentation was taking a picture of a document and having it converted into a PDF file. The audience, mostly engineers and developers, began to cheer at that prospect.

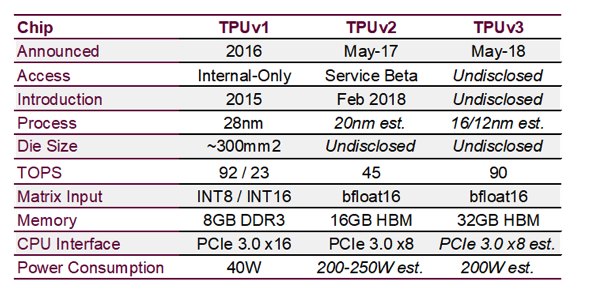

Source: Paul Teich, Nextplatform.com

As for the chip hardware, the company revealed little in the way of details on the actual TPU 3.0 architecture and, truth be told, we are still accessing the company’s TPU v1 for basic internet search routines. But Google is committed to AI development, and the TPU 3.0 is a clear announcement in that direction.

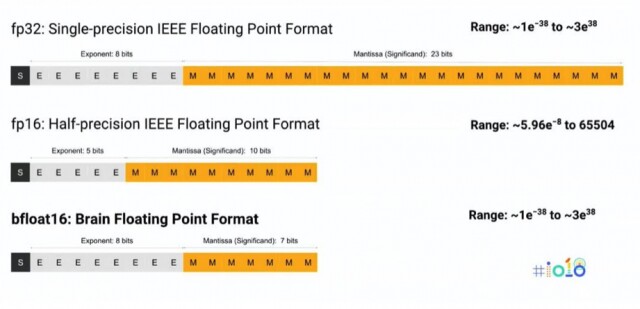

Google’s internal floating point format bfloat

In general, Google’s Tensor AI architecture is designed to be “more tolerant of reduced computational precision” (perhaps like how the human brain filters out much of the irrelevant noise when working on a problem). This approach requires fewer transistors per operation, allowing the system to “…squeeze more operations per second into the silicon, use more sophisticated and powerful machine learning models and apply these models more quickly, so users get more intelligent results more rapidly,” according to Google senior fellow Jeff Dean in a cloud computing blog post.

Put another way, “Google invented its own internal floating point format called ‘bfloat’ for ‘brain floating point’ (after Google Brain). The Bfloat format uses an 8-bit exponent and 7-bit mantissa, instead of the IEEE standard FP16’s 5-bit exponent and 10-bit mantissa. Bfloat expresses values from ~1e-38 to ~3e38, which is orders of magnitude wider dynamic range than IEEE FP16,” according to research on the topic by Paul Teich. He said, “Google invented its bfloat format because it found that it needed data science experts while training with IEEE FP16 to make sure that numbers stayed within FP16’s more limited range.” For a complete rundown on Google’s Tensor AI technology, don’t miss Paul Teich’s excellent article on nextplatform.com covering the full history of Google TPU architecture development.
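To make the bit layout described above a little more concrete, here is a minimal Python sketch. It assumes the 16-bit bfloat pattern is simply the top 16 bits of an IEEE 32-bit float (1 sign bit, 8 exponent bits, 7 mantissa bits). The function names are hypothetical and chosen only for illustration, and plain truncation is used for simplicity; real hardware may round instead, so treat this as a sketch rather than a description of what the TPU actually does.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Illustrative only: keep the top 16 bits of the float32 encoding of x."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    # Dropping the low 16 bits leaves sign (1) + exponent (8) + mantissa (7).
    return bits32 >> 16


def bfloat16_bits_to_float(bits16: int) -> float:
    """Expand a 16-bit pattern back to a float by zero-filling the low bits."""
    (value,) = struct.unpack(">f", struct.pack(">I", (bits16 & 0xFFFF) << 16))
    return value


def split_fields(bits16: int) -> tuple:
    """Return (sign, exponent, mantissa) for a 16-bit bfloat pattern."""
    sign = bits16 >> 15
    exponent = (bits16 >> 7) & 0xFF  # 8-bit exponent, same width as float32
    mantissa = bits16 & 0x7F         # 7-bit mantissa
    return sign, exponent, mantissa


# Largest finite and smallest normal values under this layout.
BFLOAT16_MAX = bfloat16_bits_to_float(0x7F7F)         # about 3.4e38
BFLOAT16_MIN_NORMAL = bfloat16_bits_to_float(0x0080)  # about 1.2e-38

# Largest finite IEEE FP16 value (5-bit exponent, 10-bit mantissa).
FP16_MAX = (2 - 2 ** -10) * 2 ** 15                   # 65504.0

if __name__ == "__main__":
    x = 70000.0  # already beyond FP16's largest finite value
    bits = float32_to_bfloat16_bits(x)
    print(hex(bits), split_fields(bits), bfloat16_bits_to_float(bits))
    print(BFLOAT16_MAX, BFLOAT16_MIN_NORMAL, FP16_MAX)
```

In this sketch a value such as 70000.0 survives the round trip, though only coarsely because of the 7-bit mantissa, while it already exceeds the largest finite FP16 value of 65504. That is the trade the quoted passage describes: less precision in exchange for a much wider range.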

So the move to AI is accelerating and can be seen today in tasks such as shape and face recognition, asking Siri to show you pictures of cats, or even the simple improvements in voice interfaces. It is beginning to touch our lives in new and exciting ways, and yes, it’s just the tip of the iceberg, so stand by for more news. – Stephen Sechrist
