Light field displays, volumetric displays and, eventually, holographic displays are all in development, with the first commercial products coming soon. Such displays require a large number of image points (sometimes called voxels, or volume pixels) and a correspondingly large amount of data. As a result, methods to format, encode and deliver these intense data streams are also in development. Two upcoming events will focus on commercial activities in these areas.

At the Display Summit, for example, a company called SeeReal will describe its approach to a holographic display. Holographic displays today suffer from limited field of view, resolution and image size, plus the large computational power needed to generate the diffraction patterns that drive the spatial light modulators. SeeReal tackles these issues by using an eye tracking system, so that it only has to render what your fovea is looking at. I have seen a demo of this system and it is one of the most impressive 3D displays I have seen (see SeeReal Shows Electronic Holographic Display).

FoVI3D will talk about its integral imaging approach to making a light field display. Powered by a 2D array of OLED microdisplays and microlenses, the system offers vertical and horizontal parallax, with the image appearing in a tabletop format (see Light Field Display Papers at SID – subscription required). This second-generation approach should be a big improvement in image quality over the company's previous demonstrator.

Light Field Lab will provide some insight into its display approach as well. The company has not disclosed much to date, but plans to work the whole food chain of light field data processing, formatting, encoding and display (see Light Field Lab, Inc. Taking Ecosystem Approach – subscription required).

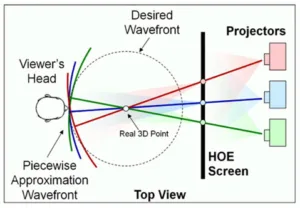

Another way to simplify the problem is to limit the rendering to just horizontal parallax. Holografika and Third Dimension Technologies will present their approaches using a technique called holographic stereography. For this, an array of projectors illuminates a special holographically-defined screen that compresses each projector image into a narrow (~1°) horizontal FOV and a wide vertical FOV. Each projector has a different perspective of the scene, so the combined output provides a glasses-free 3D image with a large sweet spot. Holografika has constructed large commercial versions of this architecture, while Third Dimension Technologies has delivered a flight simulator (see Third Dimension Technologies Shows Light Field Simulator to the Air Force – subscription required).
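To get a feel for why dropping vertical parallax simplifies things, here is a rough back-of-the-envelope sketch. It assumes one view per angular step, and the 60° x 30° viewing zone is an illustrative number of mine, not a specification from either company; only the ~1° horizontal step comes from the description above. The function name `view_count` is hypothetical.

```python
import math


def view_count(h_fov_deg, v_fov_deg, pitch_deg, horizontal_only):
    """Estimate how many distinct views are needed to fill a viewing zone.

    Assumes one view per `pitch_deg` of angle (a simplification).
    """
    h_views = math.ceil(h_fov_deg / pitch_deg)
    if horizontal_only:
        # Horizontal-parallax-only: a single row of views.
        return h_views
    # Full parallax: a 2D grid of views.
    return h_views * math.ceil(v_fov_deg / pitch_deg)


# Assumed viewing zone: 60 degrees wide, 30 degrees tall, 1 degree per view.
hpo = view_count(60, 30, 1.0, horizontal_only=True)
full = view_count(60, 30, 1.0, horizontal_only=False)
print(f"horizontal-only: {hpo} views, full parallax: {full} views")
```

Under these assumptions the horizontal-only design needs a single row of views rather than a full grid, which is where the savings in projectors and rendering come from.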

LightSpace will talk about its volumetric display system (see LightSpace 3D Volumetric Displays Are Back – subscription required). It consists of two main components. The first is a high-speed, single-chip DLP engine that rear-projects video onto the second main component: a stack of about 20 air-spaced screens. The arrangement of the screens can be visualized as similar to the slices in a loaf of bread. Each screen is individually electrically addressable and can be driven to switch quickly from a transparent state to a scattering state. More specifically, each screen in the so-called Multi-planar Optical Element (MOE) is a liquid crystal scattering shutter. In previous versions of the LightSpace display, the MOEs were composed of Polymer Dispersed Liquid Crystal material. The latest version of the MOEs is reported to use a new liquid crystal formulation.
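The time-multiplexed idea behind such a screen stack can be sketched in a few lines. This is only an illustration under my own assumptions, not LightSpace's actual pipeline: the 60 Hz volume refresh rate, the evenly spaced depth slabs, and the function names `slice_rate_hz` and `plane_index` are all hypothetical. Only the figure of about 20 screens comes from the description above.

```python
def slice_rate_hz(num_planes, volume_refresh_hz):
    """Images per second the projector must deliver if every plane
    is shown once per volume refresh."""
    return num_planes * volume_refresh_hz


def plane_index(depth, near, far, num_planes):
    """Map a depth in [near, far] to one of `num_planes` equal depth slabs.

    Index 0 is the slab closest to `near`.
    """
    if not near <= depth <= far:
        raise ValueError("depth is outside the display volume")
    t = (depth - near) / (far - near)
    # Clamp so that depth == far lands in the last slab.
    return min(int(t * num_planes), num_planes - 1)


# About 20 screens (from the article) at an assumed 60 Hz volume refresh.
print(slice_rate_hz(20, 60), "slice images per second")
print("depth 0.5 lands on screen", plane_index(0.5, 0.0, 1.0, 20))
```

The multiplication shows why a high-speed projection engine is needed: the slice rate grows linearly with the number of screens.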

Other commercial companies are working on light field displays as well. Leia is pursuing a diffractive light field backlight system to power its display (see Leia Looks to Develop Light Field LCDs – subscription required), and Avalon Holographics is also in the hunt with a light field display approach. In addition, there are numerous university and research efforts that will take longer to commercialize.

At the Streaming Media for Field of Light Displays (SMFoLD) workshop, which will be held on Oct. 3 just before Display Summit, presenters will focus on the distribution part of the light field ecosystem.

Here, there is much debate about how to deliver, in real time, the vast amounts of data that a light field image can require. Synthetic content can be delivered as meshes and textures, perhaps with additional metadata. But for video content, there could easily be 50 to 100 views to deliver to the display system. Do you focus on extensions to conventional video codecs to reduce the massive redundancy between views, or do you use these images to create a 3D model and encode it as mesh, textures and metadata? Many are trying to understand the trade-offs in terms of processing power, cost, bandwidth and efficiency.
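A quick calculation shows the scale of the problem. The sketch below estimates the uncompressed bitrate for the 50 to 100 views mentioned above; the per-view format (1920 x 1080, 30 frames per second, 24 bits per pixel) is my assumption for illustration, and the function name `raw_bitrate_gbps` is hypothetical.

```python
def raw_bitrate_gbps(views, width, height, fps, bits_per_pixel=24):
    """Uncompressed bitrate in Gbit/s for `views` independent video streams."""
    bits_per_second = views * width * height * fps * bits_per_pixel
    return bits_per_second / 1e9


# Assumed per-view format: 1080p, 30 fps, 24-bit color.
for views in (50, 100):
    rate = raw_bitrate_gbps(views, 1920, 1080, 30)
    print(f"{views} views: {rate:.1f} Gbit/s uncompressed")
```

With these assumptions, each view is roughly 1.5 Gbit/s before compression, so the total lands in the range of about 75 to 150 Gbit/s. That is why exploiting the redundancy between views, or switching to a model-based representation, matters so much.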

At the SMFoLD workshop, several approaches to this formatting and encoding problem will be introduced, along with discussions of current activities in the relevant standards bodies. We anticipate a vigorous panel session to debate the various approaches.

So how real are light field display applications? Certainly there is much interest in the AR/VR community in providing a 3D image that does not have any side effects, so understanding the delivery and display ecosystem for such next-generation products is critical. But there are also many 3D data sets in military, medical, intelligence and commercial applications where existing stereo 3D systems could be upgraded to a more robust light field or other form of advanced 3D display. Some of these will be profiled at the workshop as well. – CC