The final talk of the second day was from Dan Cashen of Nippon Seiki, one of the largest makers of HUDs globally. The company makes most of the HUDs fitted to cars in Europe and America, he claimed. His topic was the challenge that AR poses to the whole system, from the camera to the display, if it is to work well.

After defining AR, Cashen looked at non-immersive displays. A rear view mirror display, for example, is non-immersive because the display blocks the driver’s real-world view. Can such a display replace the view through the windshield? No, so a non-immersive display cannot be used there.

Immersive HUDs add live information, but the processing pipeline is the same for immersive and non-immersive displays (capture, registration, rendering and display), and that pipeline is tricky.

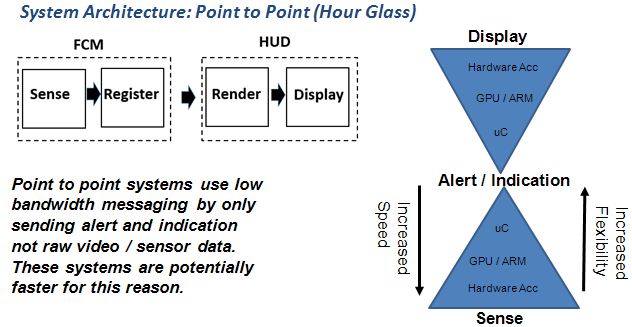

Sensing is the process of getting the raw image data. Registration then finds objects in the image and maps them onto the world, after which the graphics are rendered and sent to the display. Doing all of this in one place is a centralised architecture. Nippon Seiki instead uses a dual-component architecture, in which the image is registered at the same point in the system as the capture, and the render engine goes into the display.

The sensor unit includes hardware acceleration, a GPU/Arm processor and a microcontroller. The microcontroller sends information on, for example, which icons are needed, and these are then rendered at the display. Latency is the single most important thing for an AR HUD.
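The dual-component split described above can be sketched in a few lines of Python. This is only an illustration of where each pipeline stage sits, not the company's implementation: the class names, the `IconCommand` message format and the toy "scene" of labelled tuples are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IconCommand:
    """Compact message from sensor unit to display (hypothetical format)."""
    icon_id: str
    azimuth_deg: float    # horizontal bearing of the registered object
    elevation_deg: float  # vertical bearing of the registered object


class SensorUnit:
    """Capture and registration sit together on the sensor side."""

    def capture(self, scene):
        # Stand-in for reading a camera frame: the "frame" here is just
        # a list of (label, azimuth, elevation) tuples.
        return list(scene)

    def register(self, frame):
        # Stand-in for detecting objects and mapping them onto the world:
        # keep only objects that have an icon, one command per object.
        icons = {"lane": "lane_marker", "car": "vehicle_box"}
        return [IconCommand(icons[label], az, el)
                for label, az, el in frame if label in icons]


class DisplayUnit:
    """The render engine sits in the display and works from commands only."""

    def render(self, commands):
        return [f"draw {c.icon_id} at ({c.azimuth_deg:+.1f}, {c.elevation_deg:+.1f}) deg"
                for c in commands]


def run_pipeline(scene):
    sensor, display = SensorUnit(), DisplayUnit()
    commands = sensor.register(sensor.capture(scene))  # sensor side
    return display.render(commands)                    # display side
```

In this sketch only small icon commands cross the link between the two components, rather than a fully rendered image.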

Beyond 7m, depth is perceived by scale rather than by disparity. That makes it harder to differentiate the HUD content from the real-world image. Any mismatch between the HUD image and the real world is hard to deal with. If there is a mistake or an accident, it is difficult to decide whose fault it is: the driver's or the HUD maker's?

Cashen went through a typical use case, highlighting the lanes for the driver in difficult light. Assuming an eyebox 100 mm wide, only 0.5° of error can be allowed. The HUD is not just a box: it is a compound optical system. Windshield shapes are only approximate because of the manufacturing process. There is a lot of variation in the windshield and its mounting and in the HUD and its mounting, on top of user movement and car movement. He went through the maths and showed that eye movement alone is enough to cause too much error if it is not corrected.
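The report does not reproduce the maths, but the eye-movement effect can be illustrated with simple parallax geometry. The sketch below assumes the HUD graphic and the real object line up when the eye is at the centre of the eyebox, then moves the eye to the edge. The virtual image distance (2.5 m) and the object distance (20 m) are assumptions chosen for illustration; only the 100 mm eyebox and the 0.5° limit come from the talk.

```python
import math


def parallax_error_deg(eye_offset_m, image_distance_m, object_distance_m):
    """Angular misalignment seen after the eye moves sideways by eye_offset_m.

    The virtual image and the real object are assumed to be aligned, straight
    ahead, when the eye is at the centre of the eyebox.
    """
    to_image = math.atan2(eye_offset_m, image_distance_m)
    to_object = math.atan2(eye_offset_m, object_distance_m)
    return math.degrees(abs(to_image - to_object))


EYEBOX_WIDTH_M = 0.100     # from the talk
ALLOWED_ERROR_DEG = 0.5    # from the talk
IMAGE_DISTANCE_M = 2.5     # assumption: virtual image distance not given
OBJECT_DISTANCE_M = 20.0   # assumption: distance to the highlighted lane marking

# Eye at the edge of the eyebox, i.e. half the eyebox width off centre.
error = parallax_error_deg(EYEBOX_WIDTH_M / 2, IMAGE_DISTANCE_M, OBJECT_DISTANCE_M)
print(f"{error:.2f} deg of misalignment, {ALLOWED_ERROR_DEG} deg allowed")
```

Under these assumed distances, a 50 mm sideways eye movement gives roughly 1° of misalignment, about twice the stated limit, which is consistent with the point that uncorrected eye movement alone uses up the error budget.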

Cashen then showed how latency makes these errors even worse. Even 100ms of latency puts an object 20m away outside the error allowed for the system. It’s very challenging, and that’s why you need the different system architecture that Nippon Seiki has developed.
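The report does not say which motion Cashen assumed, so the following is only one way to see the scale of the latency problem. It computes how far the bearing to a fixed object shifts while the car drives straight on for the duration of the latency. The speed (30 m/s) and the lateral offset (3.5 m, roughly one lane width) are assumptions; the 100 ms latency, the 20 m distance and the 0.5° limit are from the talk.

```python
import math


def latency_error_deg(speed_mps, latency_s, ahead_m, lateral_m):
    """Change in bearing to a fixed object while the car drives straight on."""
    travelled = speed_mps * latency_s
    if travelled >= ahead_m:
        raise ValueError("car reaches the object within the latency period")
    before = math.atan2(lateral_m, ahead_m)
    after = math.atan2(lateral_m, ahead_m - travelled)
    return math.degrees(after - before)


ALLOWED_ERROR_DEG = 0.5   # from the talk
LATENCY_S = 0.100         # from the talk
AHEAD_M = 20.0            # from the talk
SPEED_MPS = 30.0          # assumption: about 108 km/h
LATERAL_M = 3.5           # assumption: object one lane width to the side

shift = latency_error_deg(SPEED_MPS, LATENCY_S, AHEAD_M, LATERAL_M)
print(f"{shift:.2f} deg of bearing shift, {ALLOWED_ERROR_DEG} deg allowed")
```

With these assumed values the graphic would be drawn well over a degree away from where the object now appears, several times the allowed error, before any optical or eye-position error is added.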

The pipeline is challenging and new technologies are being used and developed.