CY Vision (San Jose, CA) is a company that has been quietly developing advanced head-up display (HUD) technology and is now ready to start talking about it. In a call with co-founder Hakan Urey, a professor at Koç University (Istanbul), I learned a lot more about the company's approach. In essence, it is a next-generation augmented reality (AR) HUD that provides full-color binocular images with variable focal points. That's a mouthful, so let's dig a little deeper.

Let's start with the basic architectures for HUDs. Almost all HUDs today are image-based: they create an image on a direct-view display or a microdisplay and then "project" the virtual image to a fixed focal plane, which in an automotive HUD typically sits out in front of the car.

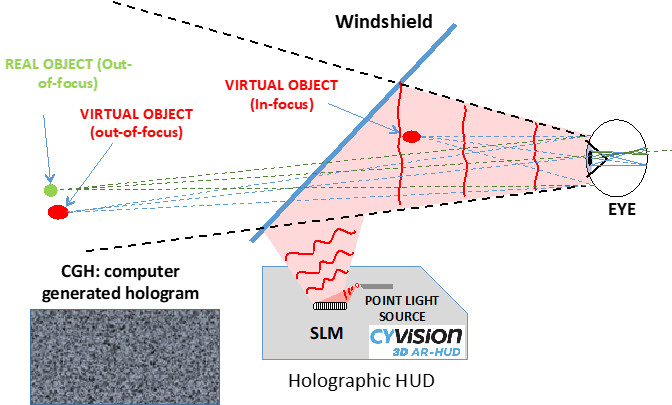
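To see why an image-based HUD has a single, fixed focal plane, it helps to model its optics as one ideal magnifier. This is a simplified sketch: a real automotive HUD folds the light with curved mirrors and the windshield, and the focal length and display distance below are hypothetical values. Placing the display just inside the focal length of the optic produces an enlarged virtual image several meters away.

```python
def virtual_image_distance(focal_length_m: float, display_distance_m: float) -> float:
    """Image distance s_i from the Gaussian lens equation 1/s_o + 1/s_i = 1/f.

    A negative s_i means a virtual image on the same side as the display,
    which is what a HUD relies on.
    """
    if display_distance_m == focal_length_m:
        raise ValueError("display at the focal point: image forms at infinity")
    return 1.0 / (1.0 / focal_length_m - 1.0 / display_distance_m)


def magnification(focal_length_m: float, display_distance_m: float) -> float:
    """Lateral magnification m = -s_i / s_o."""
    return -virtual_image_distance(focal_length_m, display_distance_m) / display_distance_m


# Hypothetical optic: 200 mm focal length, display 190 mm away from it.
s_i = virtual_image_distance(0.200, 0.190)
print(f"virtual image at {abs(s_i):.2f} m, magnified {magnification(0.200, 0.190):.1f}x")
# virtual image at 3.80 m, magnified 20.0x
```

Because s_i depends only on fixed hardware distances, this kind of HUD cannot move its image plane without physically moving the display or the optics.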

The other approach uses a spatial light modulator (SLM), essentially a slightly modified microdisplay. A hologram of the desired image is computed and written to the SLM, which is illuminated by a laser or LED. This approach has the advantage of using all the light to form the content, rather than blocking much of it as the image-based approach does. The downside can be the heavy computational load needed to calculate the phase hologram.
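The article doesn't say how CY Vision computes its holograms (Urey describes the company's algorithms as custom-developed). As a generic illustration of what calculating a phase hologram involves, here is a sketch of the textbook Gerchberg-Saxton iterative phase-retrieval algorithm using NumPy. The target image, grid size, and function names are hypothetical, and the optical model is idealized: a Fourier hologram with uniform illumination.

```python
import numpy as np


def _scaled(target_amplitude: np.ndarray) -> np.ndarray:
    """Scale the target so its energy equals that of a unit-amplitude SLM field."""
    return target_amplitude * np.sqrt(target_amplitude.size / np.sum(target_amplitude**2))


def gerchberg_saxton(target_amplitude: np.ndarray, iterations: int = 50, seed: int = 0) -> np.ndarray:
    """Phase-only Fourier hologram via the classic Gerchberg-Saxton loop.

    Idealized model: uniform unit-amplitude illumination on the SLM, and a replay
    field equal to the 2D Fourier transform of the SLM field (e.g. at a lens focus).
    """
    if iterations < 1:
        raise ValueError("need at least one iteration")
    target = _scaled(target_amplitude)
    rng = np.random.default_rng(seed)
    # Start in the replay plane: target amplitude with a random phase.
    field = target * np.exp(2j * np.pi * rng.random(target.shape))
    for _ in range(iterations):
        slm_phase = np.angle(np.fft.ifft2(field, norm="ortho"))     # SLM plane: keep phase only
        replay = np.fft.fft2(np.exp(1j * slm_phase), norm="ortho")  # propagate to replay plane
        field = target * np.exp(1j * np.angle(replay))             # impose target amplitude
    return slm_phase


def replay_error(slm_phase: np.ndarray, target_amplitude: np.ndarray) -> float:
    """Squared amplitude error between the reconstructed image and the target."""
    replay = np.fft.fft2(np.exp(1j * slm_phase), norm="ortho")
    return float(np.sum((np.abs(replay) - _scaled(target_amplitude)) ** 2))


# Hypothetical target: a bright square kept away from the zero-order (DC) pixel.
target = np.zeros((64, 64))
target[8:24, 8:24] = 1.0
for n in (1, 10, 50):
    print(n, round(replay_error(gerchberg_saxton(target, n), target), 1))
```

Each iteration costs two 2D FFTs, and a practical system may need many iterations per frame, per color, at far higher resolution; that is where the computational load comes from. In this error-reduction form of the algorithm the replay error never increases from one iteration to the next.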

CY Vision is pursuing the SLM approach and has now reached a performance level that I don't think anyone else has demonstrated yet. However, it is not the first company to pursue such an approach; other SLM-based HUDs have already been commercialized.

So how is the CY Vision approach different? I asked Urey.

"At CY Vision, we have created a full-color, SLM-based HUD that uses eye tracking to calculate an optimized computer-generated hologram (CGH) that avoids the accommodation-vergence issues present in typical stereoscopic displays, while providing an image that mimics human vision, i.e. distant objects are out of focus when viewing closer objects. I don't think there has been any other approach with depth cues like ours."
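In a conventional stereoscopic display, the eyes converge at the depth implied by the left/right disparity but must focus on the display's fixed image plane. A common way to quantify that mismatch is the difference between the two distances in diopters (1/m). The sketch below uses hypothetical distances and a typical adult interpupillary distance; it only illustrates the conflict, not how CY Vision computes its holograms.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two lines of sight when both eyes fixate a point straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def va_conflict_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Mismatch between where the eyes converge and where they must focus, in diopters."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


# Hypothetical stereoscopic HUD: focal plane fixed at 10 m, icon drawn with disparity for 2 m.
ipd = 0.063  # roughly a typical adult interpupillary distance, in meters
print(f"eyes converge by {vergence_angle_deg(ipd, 2.0):.2f} deg")
print(f"fixed-focus stereo conflict: {va_conflict_diopters(2.0, 10.0):.2f} D")
# A display that also moves the focal plane to 2 m removes the mismatch.
print(f"variable-focus conflict: {va_conflict_diopters(2.0, 2.0):.2f} D")
```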

CY Vision is trying to develop a display solution that fits the needs of future drivers, especially as autonomous driving becomes more prevalent. HUD developers typically define an AR HUD as one that adapts its images as events evolve. For example, if you need to take an exit in a mile, a small arrow may first appear in the HUD. As you draw closer, the arrow grows and may shift within the field of view (FOV). Current HUDs cannot do this, nor many of the other envisioned AR tasks.

More importantly, the world continues to be digitally mapped (some call this the digital twin), so data on every building, not just streets, will be available to the navigation/GPS system. You will soon be able to ask your car to suggest and guide you to the best Italian restaurant within five miles, the nearest historical site, the cheapest gas, etc. As autonomy takes over, you will be able to take your eyes off the road more often. This means you may use your HUD as an interface for social or professional tasks, such as interacting with a virtual avatar inside the car or making a holographic video call.

All these use cases and more suggest the need for a display that can change the focal distance of the image, provide appropriate depth cues and spatially track icons.

CY Vision's HUD technology incorporates a pupil tracker that senses pupil and gaze position in real time. The system can also create very accurate parallax to deliver separate images for each eye, tailored to the viewer. Plus, it relies on a machine learning-based algorithm, so it should get better over time.
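The article doesn't describe CY Vision's rendering pipeline. The sketch below shows only the basic geometry behind per-eye parallax under an idealized pinhole model: given the tracked position of each pupil, it finds where a 3D point must be drawn on a virtual image plane for each eye. The coordinate frame, distances, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3:
    x: float  # meters, to the driver's right
    y: float  # meters, up
    z: float  # meters, forward from the eyes


def project_to_plane(eye: Point3, target: Point3, plane_z: float) -> tuple[float, float]:
    """Intersect the ray from an eye to a 3D target with the plane z = plane_z."""
    t = (plane_z - eye.z) / (target.z - eye.z)
    return (eye.x + t * (target.x - eye.x), eye.y + t * (target.y - eye.y))


def per_eye_positions(left: Point3, right: Point3, target: Point3, plane_z: float):
    """Where to draw the target in each eye's image so it appears at its true 3D position."""
    return project_to_plane(left, target, plane_z), project_to_plane(right, target, plane_z)


# Hypothetical tracked pupils 64 mm apart; a hazard marker 20 m ahead; image plane at 10 m.
left_eye, right_eye = Point3(-0.032, 0.0, 0.0), Point3(0.032, 0.0, 0.0)
marker = Point3(0.0, 0.0, 20.0)
(lx, _), (rx, _) = per_eye_positions(left_eye, right_eye, marker, plane_z=10.0)
print(f"horizontal disparity on the image plane: {1000 * (rx - lx):.1f} mm")  # 32.0 mm
```

When the tracker reports that the head has moved, the two positions are simply recomputed, keeping the marker locked to its real-world location. If the image plane sat at the marker's own depth (`plane_z=20.0`), the disparity would be zero.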

As with all HUDs, there is a tradeoff between eyebox (the region in which the viewer's eyes can see the whole image) and FOV: larger eyeboxes mean smaller FOVs. Both are also limited by the number of pixels available in the imager or SLM. However, the company claims to offer the largest FOV for any available dashboard volume (a FOV greater than 20 degrees in a package smaller than 10 liters).
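For SLM-based displays, the link between eyebox, FOV and pixel count has a compact back-of-the-envelope form. The sketch below is a simplified one-dimensional, small-angle étendue argument (an assumption for illustration, not a figure from CY Vision), using a hypothetical 3840-pixel-wide SLM and green 532 nm light, and ignoring any étendue-expansion techniques.

```python
import math


def max_eyebox_mm(n_pixels: int, wavelength_nm: float, fov_deg: float) -> float:
    """Rough 1-D bound from étendue conservation: eyebox width * FOV (rad) ~ N * wavelength.

    An SLM of N pixels at pitch p is N*p wide and diffracts over about wavelength/p
    radians, so its width-angle product is about N*wavelength regardless of p.
    Ideal optics can trade width for angle but cannot increase the product.
    """
    etendue_mm_rad = n_pixels * wavelength_nm * 1e-6  # nm -> mm
    return etendue_mm_rad / math.radians(fov_deg)


# Hypothetical 3840-pixel-wide SLM with green (532 nm) light.
for fov in (5, 10, 20):
    print(f"FOV {fov:2d} deg -> eyebox about {max_eyebox_mm(3840, 532, fov):.1f} mm")
```

With these numbers, a 20 degree FOV leaves an eyebox of only a few millimeters, comparable to the eye's pupil, which illustrates why real-time pupil tracking is so valuable for holographic HUDs.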

The real beauty of a CGH is the ability to "write" lenses to the SLM. This is how the light is steered and how the focal plane and depth of focus can be changed. "It's all done with software in real time using our custom-developed algorithms and the parallel processing capabilities of modern GPU and FPGA/ASIC solutions," explained Urey.
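In textbook Fourier optics, "writing a lens" means displaying the quadratic phase profile of an ideal thin lens, and steering the light means adding a linear phase ramp (a prism or blazed grating). The sketch below builds such a pattern with NumPy; the SLM size, pixel pitch, wavelength, focal lengths and tilt are hypothetical values, and this is not CY Vision's code.

```python
import numpy as np


def lens_and_prism_phase(n: int, pitch_m: float, wavelength_m: float,
                         focal_m: float, tilt_rad: float) -> np.ndarray:
    """Wrapped SLM phase for an ideal thin lens plus a beam-steering prism.

    - Quadratic term: thin-lens phase -k r^2 / (2 f); changing f moves the focal plane.
    - Linear term: phase ramp k x sin(tilt); deflects the beam by `tilt` along x.
    """
    coords = (np.arange(n) - n / 2) * pitch_m  # pixel positions, meters
    x, y = np.meshgrid(coords, coords)
    k = 2 * np.pi / wavelength_m               # wavenumber
    lens = -k * (x**2 + y**2) / (2 * focal_m)
    prism = k * x * np.sin(tilt_rad)
    return np.mod(lens + prism, 2 * np.pi)     # an SLM only offers 0..2*pi of phase


# Hypothetical SLM: 512 x 512 pixels, 8 um pitch, 532 nm light.
near = lens_and_prism_phase(512, 8e-6, 532e-9, focal_m=2.0, tilt_rad=np.radians(0.5))
far = lens_and_prism_phase(512, 8e-6, 532e-9, focal_m=20.0, tilt_rad=np.radians(0.5))
print(near.shape, float(np.abs(near - far).max()))
```

Only `focal_m` differs between the two patterns, so refocusing amounts to recomputing an array. One practical limit: the phase can change by at most π between neighboring pixels, which caps the steering angle near λ/(2p) (about 1.9 degrees for these values).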

Being able to write optical functions to the SLM also makes it possible to correct aberrations and calibrate out distortions introduced by the combiner, i.e. the windshield. The same optical design and hardware can therefore be adapted to all car models with simple changes in the software.
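Because phase patterns add, correcting a windshield can be as simple as subtracting its measured aberration from whatever pattern is already being displayed. The sketch below assumes, for example, that the windshield's error is pure astigmatism (a real calibration would likely use a richer model), and the per-model lookup table and its coefficients are hypothetical. Supporting a different car model then only means changing a table entry.

```python
import numpy as np

# Hypothetical per-model calibration: astigmatism coefficient (radians of phase at
# the aperture edge) measured for each windshield. Values are made up for illustration.
WINDSHIELD_ASTIGMATISM_RAD = {"model_a": 3.0, "model_b": -1.5}


def astigmatism(n: int, coeff_rad: float) -> np.ndarray:
    """Unnormalized Zernike astigmatism rho^2 cos(2 theta) = x^2 - y^2 on a [-1, 1] grid."""
    c = np.linspace(-1.0, 1.0, n)
    x, y = np.meshgrid(c, c)
    return coeff_rad * (x**2 - y**2)


def precompensate(base_phase: np.ndarray, car_model: str) -> np.ndarray:
    """Subtract the windshield's aberration so the two cancel on the way to the eye."""
    aberration = astigmatism(base_phase.shape[0], WINDSHIELD_ASTIGMATISM_RAD[car_model])
    return np.mod(base_phase - aberration, 2 * np.pi)


base = np.zeros((256, 256))  # stand-in for a hologram or lens phase pattern
slm = precompensate(base, "model_a")
# Simulate the windshield adding its aberration back: the net phase matches the base.
residual = np.angle(np.exp(1j * (slm + astigmatism(256, 3.0) - base)))
print(float(np.abs(residual).max()))  # zero up to floating-point rounding
```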

So far, CY Vision has focused on showing symbology and icons, using computer vision and AI to detect relevant information and show it to the driver. The company is also developing AR content based on the sensors already installed in the car (radar, cameras, lidar, etc.). It is not planning any public demos yet, but it has already started proof-of-concept studies with major OEMs and is giving private demos to tier-one auto suppliers. Its business model is flexible, too.

While the focus is mainly on AR HUDs, the technology is also applicable to AR eyewear.

I look forward to seeing demos from CY Vision at some point.